Videos shared to YouTube that were made using artificial intelligence but look real will in future have to carry a generated content label. The new policy will also allow record labels to request the removal of a song that uses AI to mimic the voice of a singer or rapper.

In a blog post announcing the change, YouTube says it is launching a new responsible AI policy that includes making it clear when something was made by AI.

YouTube has rolled out several new AI features in recent months, including comment sorting and integration with Bard for video analysis. More AI features will be launched in the coming months, including those designed to improve content moderation.

Why now?

YouTube says the nature of generated content required a level of responsibility above the normal community guidelines.

The initial rules are likely to evolve because of the speed at which AI itself is changing. Next year, for example, Google is expected to reveal Gemini, its next-generation advanced AI model that is thought to be more powerful than GPT-4 from OpenAI, although OpenAI has started training GPT-5.

“We have long-standing policies that prohibit technically manipulated content that misleads viewers and may pose a serious risk of egregious harm,” the company explained.

“However, AI’s powerful new forms of storytelling can also be used to generate content that has the potential to mislead viewers — particularly if they’re unaware that the video has been altered or is synthetically created.”

YouTube AI content: What's changing?

While the policies don’t prohibit the use of AI content, creators' videos will be required to carry a warning that what the viewer is seeing is not real. This comes as the ease of use and quality of generative media improve day by day. Runway now makes it trivial to create video from text and OpenAI has added image generation into ChatGPT.

Creators will have to “disclose when they've created altered or synthetic content that is realistic, including using AI tools”. If it is clearly AI generated then the normal community guidelines would apply. It is about avoiding misleading content that could be harmful.

There is a global move to tackle the problem of misinformation from generative AI, where images, videos and audio content can be produced that looks and sounds authentic but is entirely fictional. This is a particular problem around elections.

How will it work?

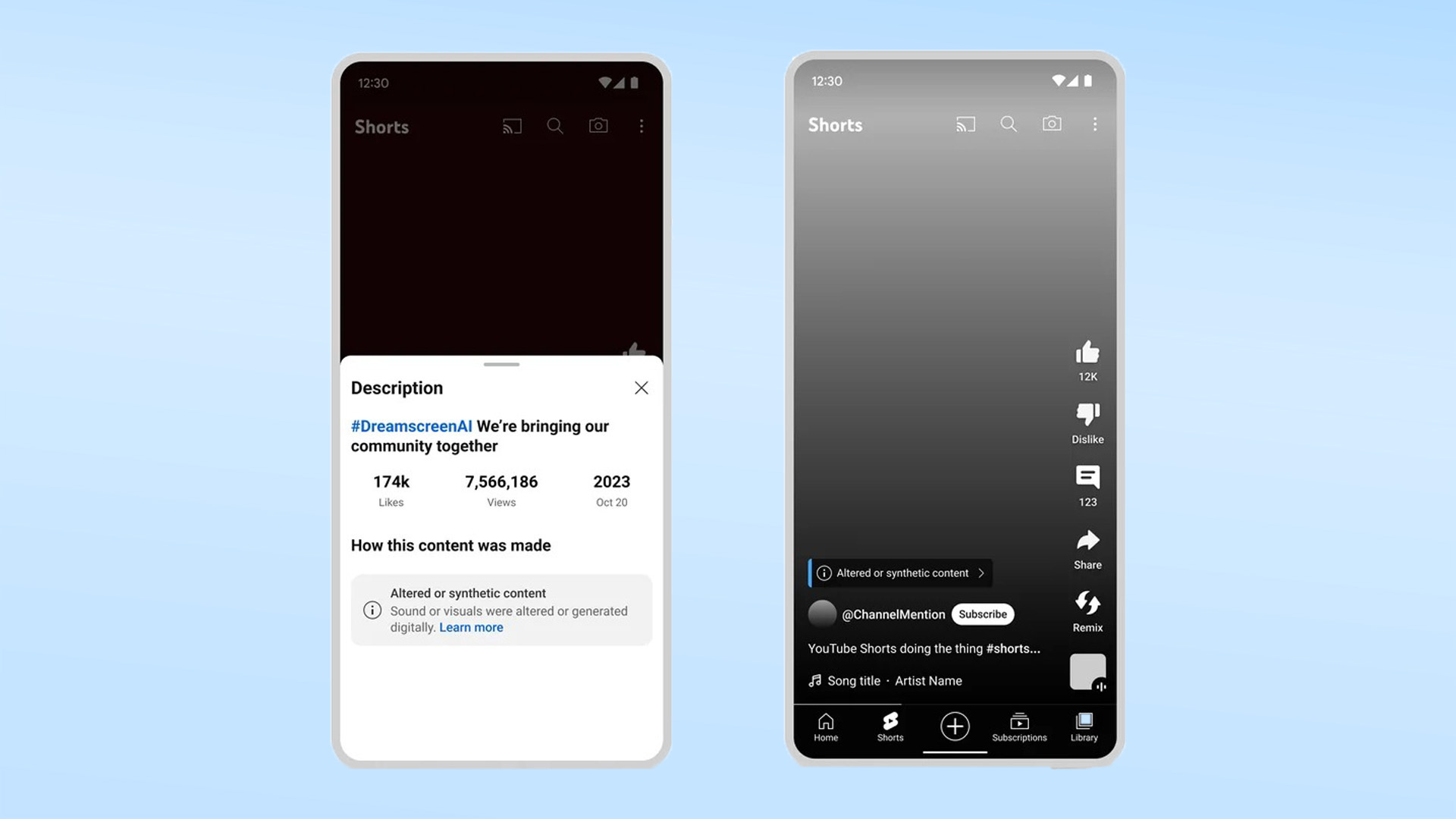

When a creator uploads a video to YouTube that includes plausible but artificially manipulated content, they will be asked to declare it in the settings for the video, post, or Short.

“For example, this could be an AI-generated video that realistically depicts an event that never happened, or content showing someone saying or doing something they didn't actually do,” the blog post explains.

For low-risk content, users will see a label in the description panel making it clear that some or all of the video was AI generated. For high-risk content, including videos featuring real people or political issues, the warning will appear in the video player itself.

Removal of AI content

The next stage will see the rollout of new features that will allow people to request the removal of AI-generated content that includes a clone of their voice or likeness.

This is particularly useful for musicians in the wake of a growing number of songs using a clone of an artist's voice. For example, there are several videos on YouTube of Johnny Cash’s clone singing anything from Taylor Swift to Britney Spears.

“In determining whether to grant a removal request, we’ll consider factors such as whether the content is the subject of news reporting, analysis or critique of the synthetic vocals,” YouTube explained.