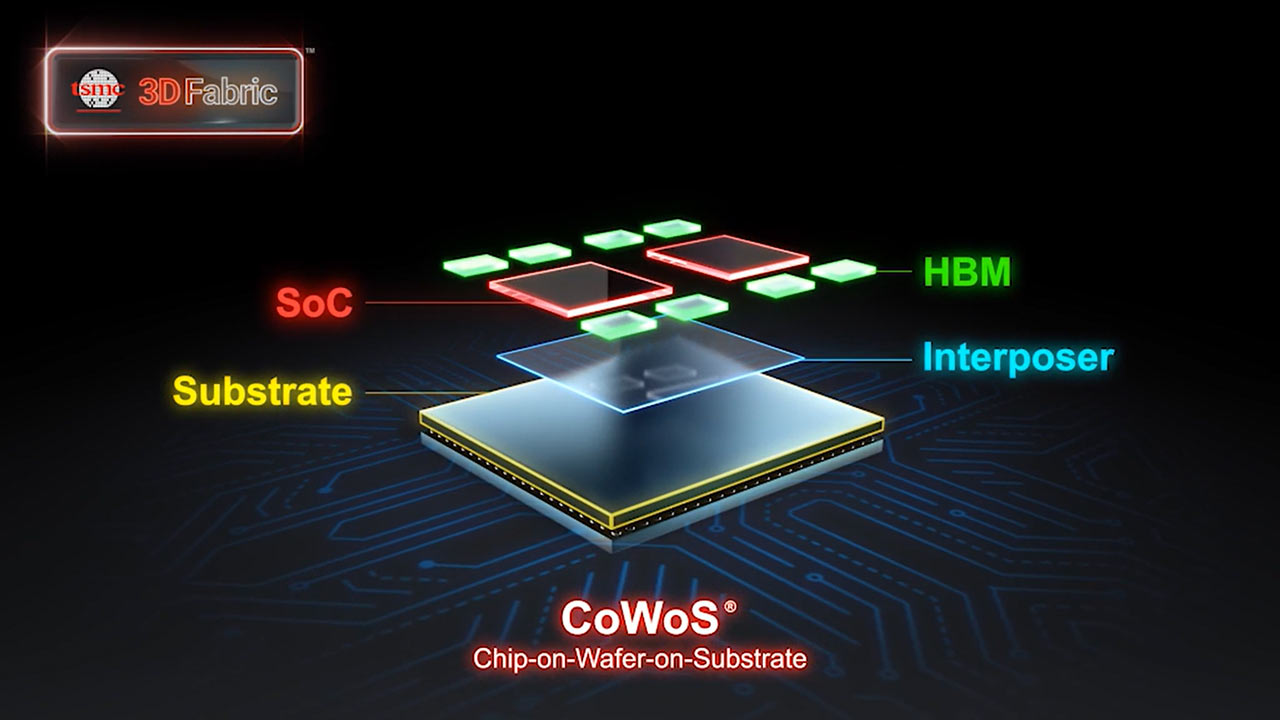

The major cloud service providers (CSPs) AWS, Google, Meta, and Microsoft are set to expand their AI infrastructure, with their combined capital expenditure on it projected to hit $170 billion in 2024. This surge in investment is driving up demand for AI processors and putting pressure on production capacity, particularly at TSMC, which is struggling to keep up with demand for its chip-on-wafer-on-substrate (CoWoS) packaging technology, TrendForce reports, citing Commercial Times.

The latest AI and HPC processors from Nvidia and AMD require larger interposers, so fewer interposers can be obtained from each 300-mm wafer, which strains CoWoS production capacity. The number of HBM stacks integrated around each GPU is also increasing, adding to the production challenges. As interposer area grows, the number of GPUs that can be packaged with a given wafer capacity shrinks. The result is a persistent shortage of CoWoS capacity at TSMC.
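To illustrate why larger interposers cut the yield per wafer, here is a minimal sketch using a common first-order dies-per-wafer approximation. The function name and the interposer areas are hypothetical and chosen only to show the trend; they are not figures from TrendForce, TSMC, Nvidia, or AMD, and the formula ignores scribe lines, edge exclusion, and defects.

```python
import math


def interposers_per_wafer(area_mm2: float, wafer_diameter_mm: float = 300.0) -> int:
    """Rough gross count of parts of a given area on a round wafer.

    First-order approximation:
        N ~= pi * (d / 2)^2 / S  -  pi * d / sqrt(2 * S)
    where d is the wafer diameter and S is the area of one part.
    The second term estimates partial parts lost at the wafer edge.
    """
    wafer_area = math.pi * (wafer_diameter_mm / 2) ** 2
    edge_loss = math.pi * wafer_diameter_mm / math.sqrt(2 * area_mm2)
    return max(0, math.floor(wafer_area / area_mm2 - edge_loss))


# Hypothetical interposer areas in mm^2 (assumptions, not vendor data).
for area in (1600.0, 2500.0, 3600.0):
    count = interposers_per_wafer(area)
    print(f"{area:.0f} mm^2 interposer: roughly {count} per 300-mm wafer")
```

Under these assumptions the count drops faster than the area grows, because larger parts also waste more of the wafer's curved edge.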

Nvidia's latest Blackwell-series processors (GB200, B100, B200) are expected to exacerbate the issue by consuming even more CoWoS capacity. TSMC aims to raise its monthly production to 40,000 units by the end of 2024, a significant increase over the previous year.

Producing HBM stacks is also becoming more complex, as more EUV layers are required to build faster HBM memory. SK Hynix, the leading HBM maker, used a single EUV layer for its 1α process technology but is moving to three to four times as many with its 1β fabrication process. This could cut cycle times, but it will clearly increase the cost of new HBM3E memory.

Each new generation of HBM also increases the number of DRAM devices per stack. While HBM2 stacks four to eight DRAM devices, HBM3/3E raises this to eight or even 12, and HBM4 will push it further to 16, which will again increase the complexity of HBM memory modules.

In response to these challenges, industry players are exploring alternative solutions. Intel, for instance, is developing rectangular glass substrates to replace traditional 300-mm wafer interposers. However, this approach will require significant research, development, and time before it can become a viable alternative, underscoring the ongoing struggle to meet the current rising demands for AI processor production.