Bolstered by growing demand for, and hype about, artificial-intelligence technology, Nvidia was catapulted into the Big Tech sphere this year.

The chipmaker surged from a $750 billion valuation to well beyond a $1 trillion valuation on the heels of a double earnings beat in May, and it has remained strong since.

Nvidia (NVDA), as Bank of America said in May, provides the "picks and shovels in the AI gold rush."

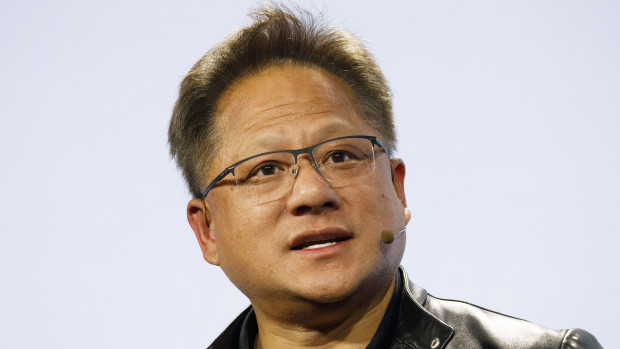

The company's CEO, Jensen Huang, said on Aug. 8 that the AI technology powered by Nvidia's chips will "reinvent the computer itself." Computing platforms as they are currently known, Huang said, will soon be replaced by powerful new AI platforms.

"Large language model is a new computing platform because now the programming language is human. For the very first time, after 15 years or so, a new computing platform has emerged," Huang said. "The computer itself will of course process information in a very different way."

Nvidia's accelerated computing, Huang said, will support the shift of computers and computing platforms toward large language models. But the coming environment, suffused with generative AI, will also require a significant scaling up of the cloud.

To help with this cloud scaling, Huang said, Nvidia is giving its Grace Hopper Superchip processor a "boost." The new chip, called the GH200, combines the Grace Hopper with what Huang described as the world's fastest memory chip, HBM3.

"The chips are in production. This processor is designed for scale out of the world's data centers. It basically turns these two super chips into a super-sized super chip," Huang said.

"You could take just about any large language model you like and put it into this and it will inference like crazy. The inference cost of large language models will drop significantly."
