Doctors in Perth have been ordered not to use AI bot technology, with authorities concerned about patient confidentiality.

In an email obtained by the ABC, Perth's South Metropolitan Health Service (SMHS), which spans five hospitals, said some staff had been using software, such as ChatGPT, to write medical notes which were then being uploaded to patient record systems.

"Crucially, at this stage, there is no assurance of patient confidentiality when using AI bot technology, such as ChatGPT, nor do we fully understand the security risks," the email from SMHS chief executive Paul Forden said.

"For this reason, the use of AI technology, including ChatGPT, for work-related activity that includes any patient or potentially sensitive health service information must cease immediately."

Since the directive, the service has clarified that only one doctor used the AI tool to generate a patient discharge summary and that there had been no breach of confidential patient information.

Call for national regulations

However, the case highlights the level of concern in the health sector about the new models of unregulated AI that continue to be let loose on the market.

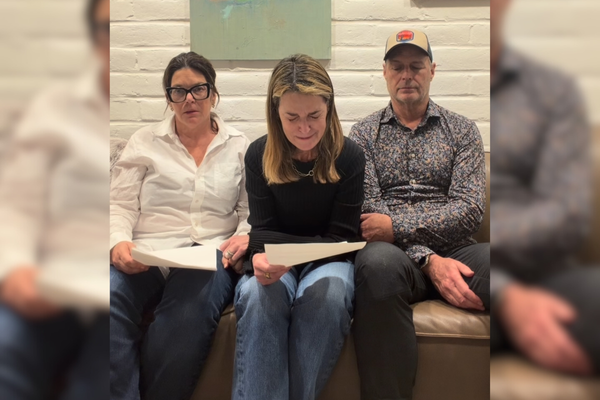

Australia's peak medical association is urging caution and calling for national regulations to control the use of artificial intelligence in the healthcare system.

The Australian Medical Association's WA President, Mark Duncan-Smith, said he did not think the use of tools like ChatGPT was widespread in the profession.

"Probably there are some medical geeks out there who are just giving it a go and seeing what it's like," Dr Duncan-Smith said.

"I'm not sure how it would save time or effort.

"It runs the risk of betraying the patient's confidence and confidentiality and it's certainly not appropriate at this stage."

That view is shared by Alex Jenkins, who heads up the WA Data Science Innovation Hub at Curtin University.

Mr Jenkins said the developer of ChatGPT had recently introduced a way for users to stop their data from being shared, but that it was still not a suitable platform for sensitive information like patients' records.

"OpenAI have made modifications to ensure that your data won't be used to train future versions of the AI," he said.

"But still, putting any kind of data on a public website exposes it to some risk of hackers taking that data or the website being exposed to security vulnerabilities."

Potential for AI in health

Mr Jenkins said there was huge potential for similar software to be developed specifically for hospitals.

"The opportunity to develop medical-specific AI is enormous," he said.

"If they are deployed safely inside hospitals, where people's data is protected, then we stand to benefit as a society from this amazing technology."

When it comes to AI in the health system generally, the possibilities are staggering.

Tech to tackle heart disease

John Konstantopoulos co-founded a Perth-based company that has developed AI to help measure the risk of heart disease within 10 minutes.

It is currently being trialled at radiology and cardiology practices around the country.

"In Australia, one person dies of heart disease every 12 minutes and it costs the Australian economy roughly $5 billion each year, which is more than any other disease that we experience," he said.

"What we found was that traditional diagnostic approaches for assessing people with heart disease have primarily focused on risk factors such as cholesterol, calcification and narrowing of coronary arteries but in roughly 50 per cent of people, the first indication of any heart disease was death."

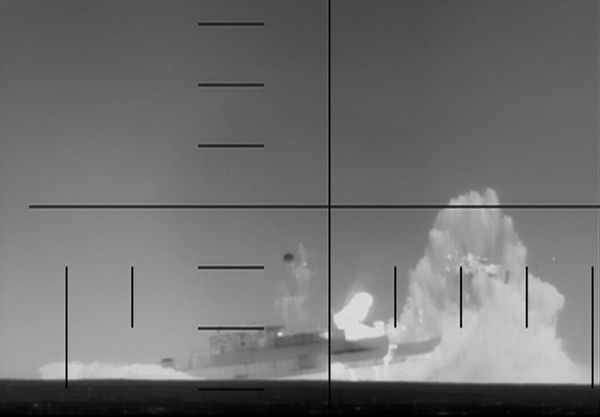

Mr Konstantopoulos said their AI was designed to identify plaque in CT scans that was "vulnerable" to inflammation and rupture.

He said it could help clinicians make a more informed assessment of what the patient's risk was and how best to treat them.

"That plaque is incredibly hard to see and very time consuming to find," he said.

"If you think of our AI almost like a driverless vehicle or autonomous vehicle, the sensors basically drive down the arteries in the CT scan and use those sensors across every coronary artery to find the disease and highlight that back up to the clinician so that they have a very specific view of what that patient's risk is."

Patients' rights need protection: AMA

While the AMA sees the potential of AI, it has written to the Federal Government's Digital Technology Taskforce, calling for regulation to protect patients' rights and ensure improved health outcomes are achieved.

"We need to develop standards where it is ensured that there is actually a human at the end of the decision-making tree that makes sure that it's actually appropriate," Dr Duncan-Smith said.

He said it was important to ensure the use of AI did not result in poor health outcomes for the patient.

"It's creating a set of rules to make sure that we actually achieve what we are trying to achieve," he said.

Regulations lagging behind tech

Alex Jenkins believes the regulatory process needs to start as soon as possible.

"One thing that none of us knows is what the future will look like in even six or 12 months in this space," he said.

"So if we start getting these safeguards in place now, we'll be able to adapt them as the technology evolves."

Mr Konstantopoulos agreed but said any additional regulation should not prevent clinicians from getting access to innovative technology that could benefit their patients.

"There has to be a broad discussion around that," he said.

"But it does appear to be more of a knee-jerk reaction on regulating because of fear as opposed to really being structured on what can we do for the patients and how can we bring this in, in a rigorous manner."