Google I/O, the tech giant's annual developer conference, took place this past week, and unsurprisingly AI once again figured prominently on the agenda. The company demoed some neat AI-powered tricks, and one in particular got a lot of attention: Project Astra.

Project Astra is Google's vision for a universal AI assistant that will accompany us through every moment of the day. You can talk to it as you go about your life, asking it anything you want to know, like an inquisitive kid pestering a parent: "What's this called?" "What does this do?" It can even help you find your lost spectacles. At least, that's the headline. It sounds like a dream come true for anyone who's constantly misplacing their glasses, but as Google's demo shows, the reality is a little underwhelming.

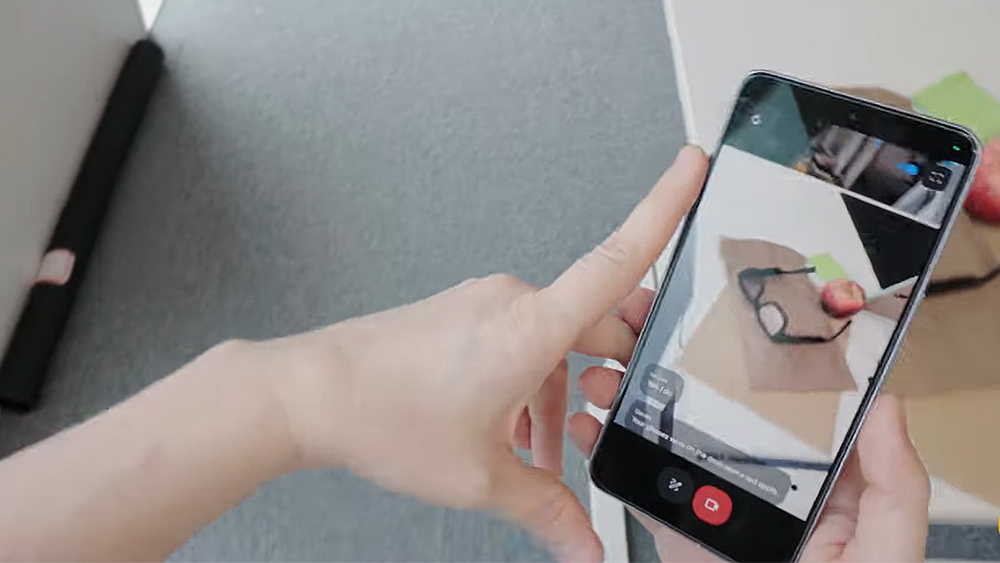

Project Astra explores the potential of AI agents built on Gemini, Google's multimodal foundation model. The demo shows the agent reasoning about its surroundings in real time and talking with the tester about what it sees.

But the only reason it can find a pair of spectacles in the demo is that it captures continuous video. It works because the AI sees everything the user sees: a constant stream of their life. Despite how it might seem at a concert, or even on a train or bus, people probably won't want to go about their lives holding a phone in front of them, filming their every action just so they can later ask an AI where they left their specs.

Even if people do go in for letting Google capture live streams of their personal lives, the most practical way to do so would be by... wearing glasses (though, to be fair, when we lose our glasses they're often on our heads). Of course, if the assistant were also on your phone, you could perhaps use the phone to find your lost smart glasses, provided they had been switched on and filming right up to the moment you misplaced them. But there are other ways to locate misplaced tech.

Then there's the form factor. Smart glasses have always been a hard sell, and it remains to be seen whether Google can overcome even potential early adopters' fear of looking like a dork. The original Google Glass never caught on and is widely considered an expensive flop. Based on this demo, I'm yet to be convinced that new AI functionality will produce a better result.

Turning a neat research project into a useful, scalable tool is the kind of challenge Google has struggled with many times. Cost, privacy and security are all likely to be issues for any Astra-powered AI glasses.

Meanwhile, Google is pushing ahead with AI in its other products, including its search engine. It's rolling out AI summaries in search results in the US, and this could be a challenge for the company too: it risks cannibalising part of its own ad business, and it could undermine the sustainability of the websites whose content has, until now, been the whole point of search's existence.

For more from Google I/O, see the news on the Gemini 1.5 Flash AI model.