What did we glean from the 2024 edition of Google’s annual I/O conference? Last year, the planet’s dominant technology company upped the ante on AI-generated content and tools, introducing the Magic Editor photography software for its Pixel phones, along with the announcement that it was opening up its Bard AI model to global use.

Bard has now been supplanted by Gemini, and the I/O 2024 conference kicked off with a celebration of the past 12 months of rapid AI evolution, from smart assistants and systems that generate images, words, music and speech, all the way to large language models trained on specialist domains such as coding or medical knowledge.

So what does this mean for consumers? Expect AI to evolve in two distinct ways over the next 12 months. On the one hand, our everyday searches are about to merge with a new kind of AI assistant, a pocket polymath that uses our smartphone cameras as a portal. Google demonstrated Project Astra, a prototype system that uses vision and speech to respond to a series of vastly different questions – solving a code problem, coming up with a pun, finding your glasses.

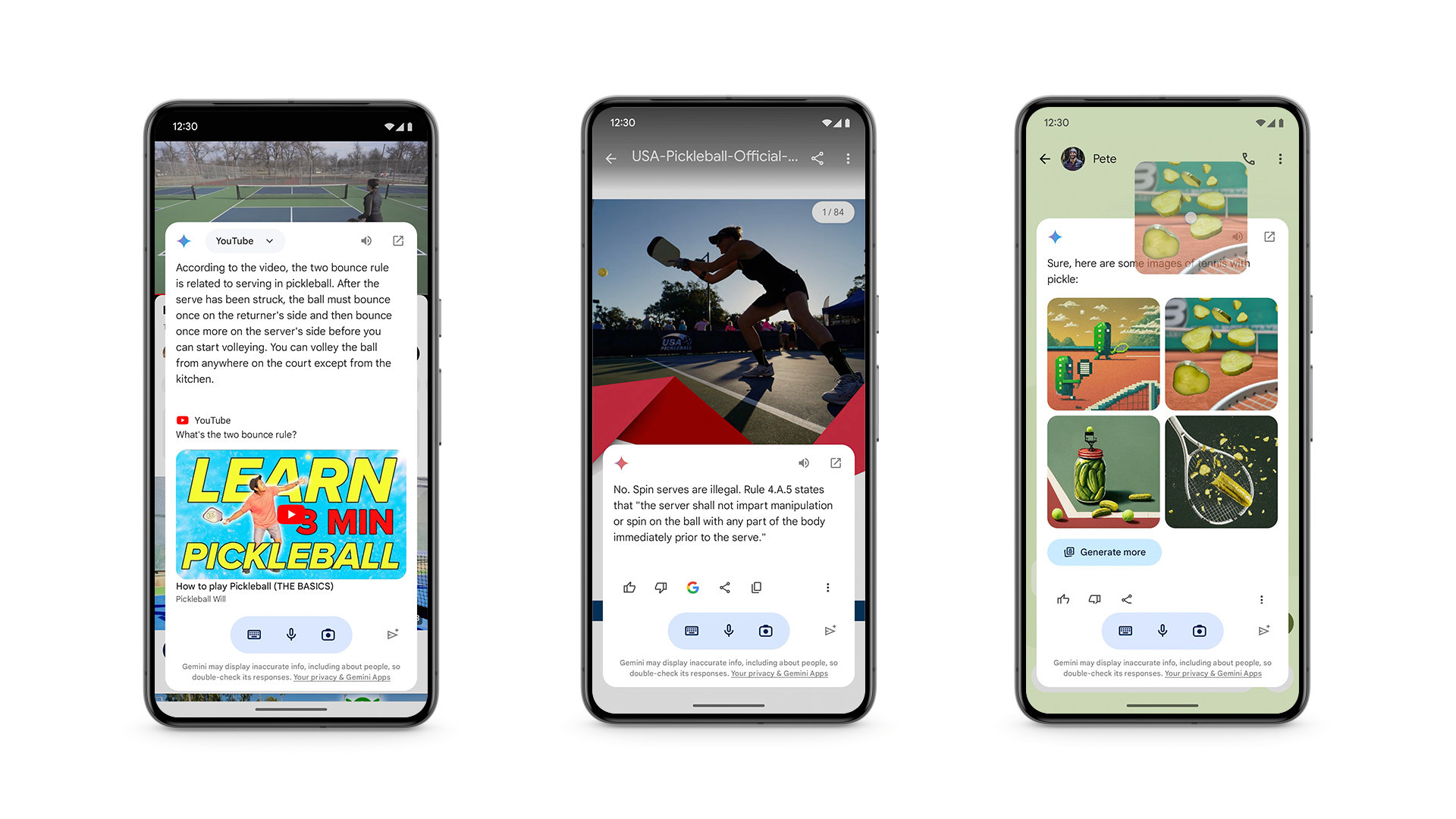

Other innovations include Ask Photos, a new way of interacting with your own imagery using spoken commands – ‘show me my photographs of rainbows,’ or ‘where did I photograph that red Audi?’, for example. The more information in a data set, the more an AI can glean, and Google announced that Gemini 1.5 Pro can now sift through one million tokens (the units of data a model processes) at a time.

At the current rate of development and take-up, this kind of interaction will soon feel seamless. Gemini is creeping into Google Workspace and will soon become a valuable part of Gmail. The example use case was a convincing one: imagine being able to ask for a summary of all lesson plans and events for an upcoming school term, a boon for busy parents.

Studies show that most people already use the search bar as if it were an all-knowing oracle, usually asking direct questions rather than typing strings of related words. Gemini, and the models that will follow, are spookily adept at corralling these queries into digestible packages – meal plans, shopping lists, itineraries, explainers, suggestions. And all this will be personalised, dished out by a well-spoken voice model that knows its way around a database that goes deep into your likes, dislikes, search, location and purchase history, and much, much more besides.

The other path is darker. Generative AI is already a divisive subject in the creative industries, despite already coming pre-baked into essential tools like Photoshop. Google wisely enlisted a number of artists – Shawna X, Eric Hu, Erik Carter and Haruko Hayakawa – to discuss their use of Imagen 2, a generative model developed by Google DeepMind. Former Nike creative director Eric Hu drew parallels between AI’s ‘disruptive capabilities’ and movements like the Renaissance and the origins of photography, a grandiose claim.

Google’s StyleDrop generator was then presented as an artistic shortcut, trained by the artists themselves on their own work and descriptions. Judge for yourselves: the results can be seen at Google’s Infinite Wonderland site (we can’t help but wonder whether instant access to artistic infinity would blow minds or break them).

Donald Glover’s GILGA agency also popped up to help demonstrate how Google’s Veo technology might work as a form of instant cinematic storyboarding, generating photorealistic video from a simple prompt. ‘At the end of the day, all you really want in art is to make mistakes faster,’ notes Glover, but not everyone has his combination of skill, work ethic and well-trained eyes and ears – expect a whole load of lesser talents to trot out their ‘mistakes’ by the millions. ‘But it’s just play,’ you protest. Go, play with MusicFX, TextFX, ImageFX and VideoFX, and then consider a world where human creativity and culture have lost almost all of their societal value.

The fear of an AI-driven web degenerating into a synthetic mush of lesser art and untrustworthy information is a very real one. We’re in the discovery phase right now, as human creativity scrabbles up the steep slopes of the uncanny valley, with no-one to notice when it loses its grip. Much of the past decade has been about tech titans appearing wilfully to miss the point of cautionary tales about technology told in comics, books, films and television.

There’s a mildly famous tech meme about this myopia from the turn of the decade, recounted recently in Scientific American. “In my book I invented the Torment Nexus as a cautionary tale,” says a Sci-Fi Author. A few years later, a Tech Company announces enthusiastically, “At long last, we have created the Torment Nexus from classic sci-fi novel Don’t Create The Torment Nexus.”

It’s Google’s world now, and we all have to live in it, adapting our perceptions and expectations to fit whatever it deigns to serve up. Even this article is a form of votive offering, effectively valueless unless the omnipotent algorithm deems it worth sharing with the world. Google’s stated mission, ‘Organizing the world’s information across every input, making it accessible via any output, and combining the world’s information, with the information in YOUR world, in a way that’s truly useful for you,’ is a task both Sisyphean and irreversible: to pull the plug would be to cast humanity into darkness. AI, in short, means we are all in.

More information at IO.Google/2024