Stalker 2 returns to the zone after a long absence

Stalker 2: Heart of Chornobyl finally marks a return to the zone after a 15-year absence. It leverages Epic's Unreal Engine 5 for some impressive visuals, and it also punishes lesser PCs. We've been running benchmarks, and this is a game that needs both GPU and CPU horsepower. Don't neglect VRAM either if you're planning to run with maxed-out settings. Our testing will look at many of the latest Nvidia, AMD, and Intel GPUs that are among the best graphics cards, and we'll provide a few CPU benchmarks as well. Let us know in the comments what other hardware you'd like to see tested, and we'll try to make it happen.

A lot has changed since Call of Prypiat, Clear Sky, and the original Shadow of Chornobyl came out in the late 2000s (note that the spelling was officially updated after the Russian invasion of Ukraine). A state-of-the-art PC back in 2009, when Prypiat launched, consisted of a Core i7-965 or 975 Extreme Edition, or an overclocked i7-920 that was just as fast. The top graphics cards of the era were Nvidia's GeForce GTX 285 and AMD's newly launched Radeon HD 5870. Windows 7 was also brand-spanking-new, having launched in October 2009, which means DirectX 11 had also just gone live. Call of Prypiat was one of the first (or perhaps the first) games to use DirectX 11, though it was also compatible with DX9 and DX10 GPUs.

Now, Windows 7 has officially been retired, along with its Windows 8 sequel, and Windows 10 is on its last legs. GPUs that were top-of-the-line models 15 years ago often can't even run modern games. Stalker 2, for example (and yes, we're going to skip the caps and periods rather than writing "S.T.A.L.K.E.R. 2" every time, since it's not even an acronym), requires at least a GTX 1060 6GB or RX 580 8GB graphics card; those arrived in 2016 and 2017, respectively. It also requires a Core i7-7700K or Ryzen 5 1600X or better, 16GB of RAM, and 160GB of disk space. That's for a target of 1080p with the low preset and 30 fps.

The recommended hardware — and based on our testing, you don't want to come up short — lists a Core i7-11700 or Ryzen 7 5800X, GeForce RTX 3070 Ti / 4070 or Radeon RX 6800 XT, and 32GB of RAM. That's for the high preset and 60 fps at 1440p. Also, an SSD is "required" — though I suspect the game can still load (slowly) from a hard drive. And those framerate targets? That's with TSR, DLSS, FSR, or XeSS upscaling and related technologies, including framegen.

Steep requirements might be an understatement, and once again we can only shake our heads sadly at the default enablement of upscaling and frame generation. We prefer to have those as ways to boost performance to higher levels and smooth out framerates, not as baseline requirements. To that end, we'll be testing at native resolution, as well as with upscaling only, and finally with upscaling and frame generation. So let's see how Stalker 2 runs on current PC hardware.

Stalker 2 Test Setup

Intel Core i9-13900K

MSI MEG Z790 Ace DDR5

G.Skill Trident Z5 2x16GB DDR5-6600 CL34

Sabrent Rocket 4 Plus-G 4TB

be quiet! 1500W Dark Power Pro 12

Cooler Master PL360 Flux

Windows 11 Pro 64-bit

GRAPHICS CARDS

Nvidia RTX 4090

Nvidia RTX 4080 Super

Nvidia RTX 4070 Ti Super

Nvidia RTX 4070 Super

Nvidia RTX 4070

Nvidia RTX 4060 Ti 16GB

Nvidia RTX 4060 Ti

Nvidia RTX 4060

AMD RX 7900 XTX

AMD RX 7900 XT

AMD RX 7900 GRE

AMD RX 7800 XT

AMD RX 7700 XT

AMD RX 7600 XT

AMD RX 7600

Intel Arc A770 16GB

Intel Arc A750

Stalker 2 comes with the full complement of upscaling technologies from Nvidia, AMD, Intel, and Unreal Engine 5: DLSS (3.7.0), FSR 3.1, XeSS 1.3.1, and TSR (Temporal Super Resolution). You can also turn all of those off and run with TAA (Temporal Anti-Aliasing), which still gives you the option to enable (rather poor-looking) resolution scaling if you want.

Our main test PC uses our soon-to-be-retired Core i9-13900K, along with 32GB of DDR5-5600 CL28 memory, an MSI Z790 Ace motherboard, and a 4TB Crucial T700 SSD. We'll have additional results for the 4090 and 7900 XTX running on some other CPUs, to show just how CPU-limited the game can be. Our initial results suggest there's still some life left in Intel's previous-generation Raptor Lake processors.

GPU drivers for our testing are Nvidia 566.14, Intel 6299, and AMD 24.10.1. This is an Nvidia-promoted game, and AMD hasn't released any new drivers since October 18. That's a bit concerning, given the number of new games coming out right now, like Flight Simulator 2024. But the AMD drivers don't seem to be having any noteworthy issues in our testing.

Our aim here isn't to test every current-generation GPU. We'll skip the Nvidia RTX 4080 and RTX 4070 Ti, for example, as well as the Arc A770 8GB and A380. We've added some previous-generation cards as well, including the minimum-requirement GTX 1060 6GB and RX 580 8GB. These should serve as useful reference points for where previous-generation cards rank compared to newer offerings.

Our typical testing consists of using medium settings at 1080p, and then 1080p, 1440p, and 4K with ultra — or in this case, "epic" — settings. We'll do all of the baseline testing with TAA and 100% scaling, and then run some additional tests at 1440p and 4K with upscaling, and all three resolutions with upscaling and framegen. Upscaling alone won't help the fastest cards, as we'll hit CPU limits (at least on the 13900K), so keep that in mind.

Our benchmark sequence consists of a manual run through the starting village of Zalissya. As in previous Stalker games, the map is effectively open from the start, and you can wander seamlessly throughout the exclusion zone... assuming you can survive. The first town makes for a decent benchmark sequence, as there are no mutants or enemies, but there are plenty of NPCs wandering about, as well as buildings and trees. We run each GPU at each setting at least twice and use the higher score. For the first test on each preset (i.e., medium and epic), we do three runs and discard the first result.
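The run-selection rule above boils down to a few lines of logic. The sketch below is a hypothetical illustration (the `pick_score` name and the fps values are made up, not taken from our tooling or results), assuming each run produces a single average-fps figure:

```python
def pick_score(runs: list[float], first_on_preset: bool) -> float:
    """Return the score to report for one GPU at one setting.

    Hypothetical helper: on the first test of a preset, the first run is
    treated as a warm-up and discarded; the best remaining run is reported.
    """
    counted = runs[1:] if first_on_preset else runs
    if len(counted) < 2:
        raise ValueError("need at least two counted runs per setting")
    return max(counted)


# Made-up numbers, for illustration only.
print(pick_score([71.2, 74.8, 74.1], first_on_preset=True))   # 74.8
print(pick_score([62.0, 61.5], first_on_preset=False))        # 62.0
```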

We'll have screenshots and a discussion of image fidelity at the various settings further down the page, after the benchmarks. Stalker 2 does not utilize ray tracing hardware, opting instead for software-based rendering using Unreal's Lumen and Nanite technologies. It's plenty heavy even without using hardware RT, though, as we'll soon see.

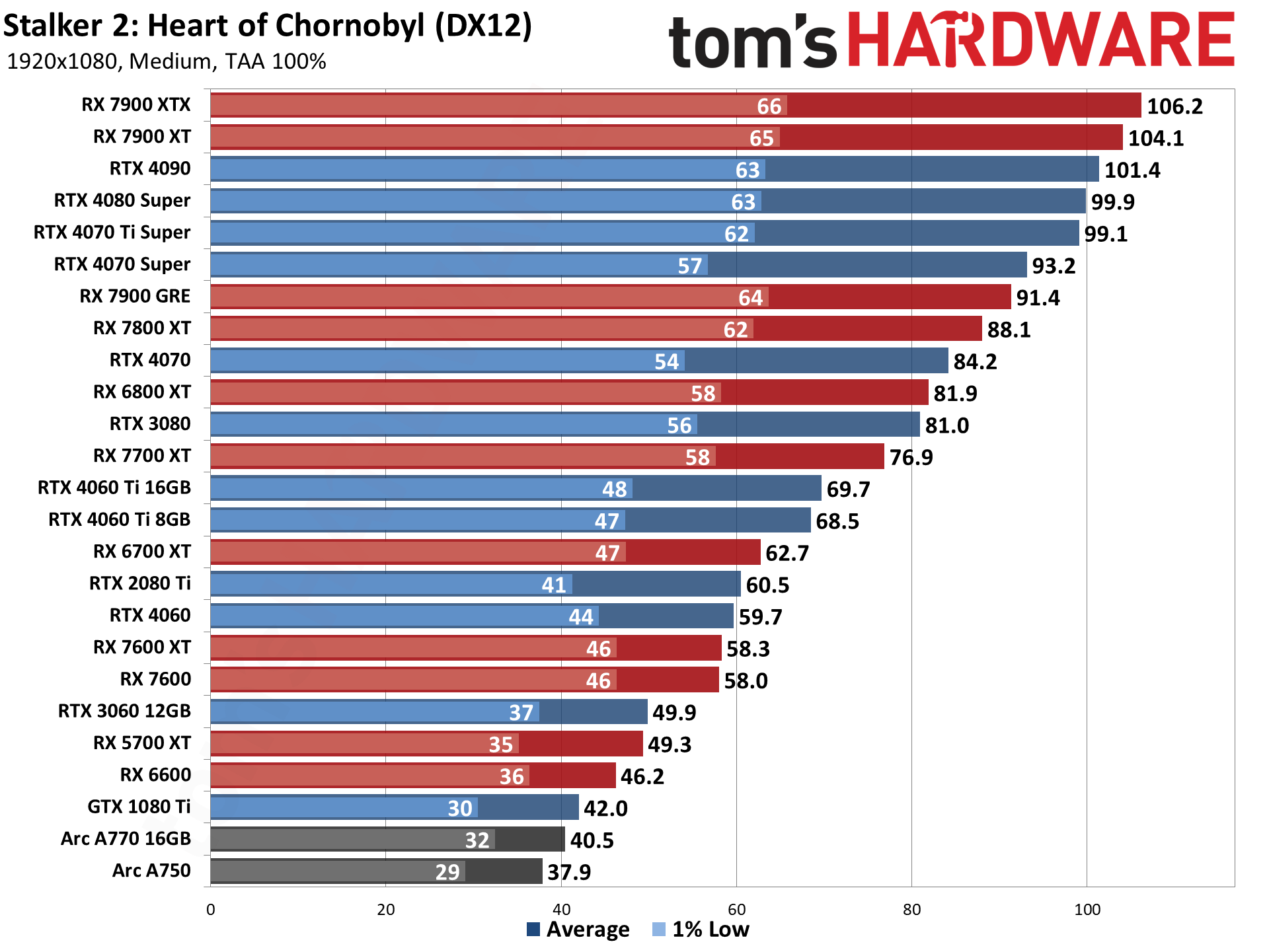

Stalker 2 1080p Medium GPU performance

1080p medium represents our baseline performance metric. Yes, you can go much higher, and you can also go lower — on resolution and settings. You can enable upscaling and framegen as well. But we feel 1080p and medium settings represent a target that most "reasonable" gaming PCs should be able to manage. Here are the results.

One thing that's immediately obvious is that we're hitting CPU bottlenecks here. AMD's top GPUs run slightly faster than Nvidia's top GPUs, but everything at the top of the chart ends up pretty packed together, right around the 100~105 fps mark. Considering the relatively low target, that's a bit surprising, but Stalker games have often pushed the hardware of their time to the limit.

Some of this is also due to the use of Unreal Engine 5. It's possible to hit much higher framerates, with the right settings and game optimizations, but from the games we've tested it seems that UE5 is usually quite heavy on both the CPU and GPU fronts.

At launch, if you're after 60+ fps without upscaling or framegen, you'll want at least an RTX 4060 Ti or RX 6700 XT, with the older 2080 Ti also getting there on average fps, barely. The other old GPUs that we've tested also did okay, for the most part, though the GTX 10-series will have a rough go of things. Intel's Arc A-series parts also struggle quite badly at 1080p native.

What about the minimum RX 580 8GB and GTX 1060 6GB? They didn't manage 1080p medium well at all, but they're supposed to be okay with 1080p and low settings, with FSR3 upscaling and framegen. And they sort of are, if you're willing to go that route.

The 1060 averaged 34 fps at 720p native using TAA, while the 580 got 33 fps with those same settings. Turning on FSR3 framegen bumped the 1060 to 61 fps, but going to 1080p with quality mode upscaling plus framegen dropped performance back to 34 fps — we suspect the 1060 ran out of VRAM. The 580 got 58 fps at 720p native with framegen, and 53 fps at 1080p with FSR3 quality mode and framegen, which is more in line with our expectations for how things should scale.

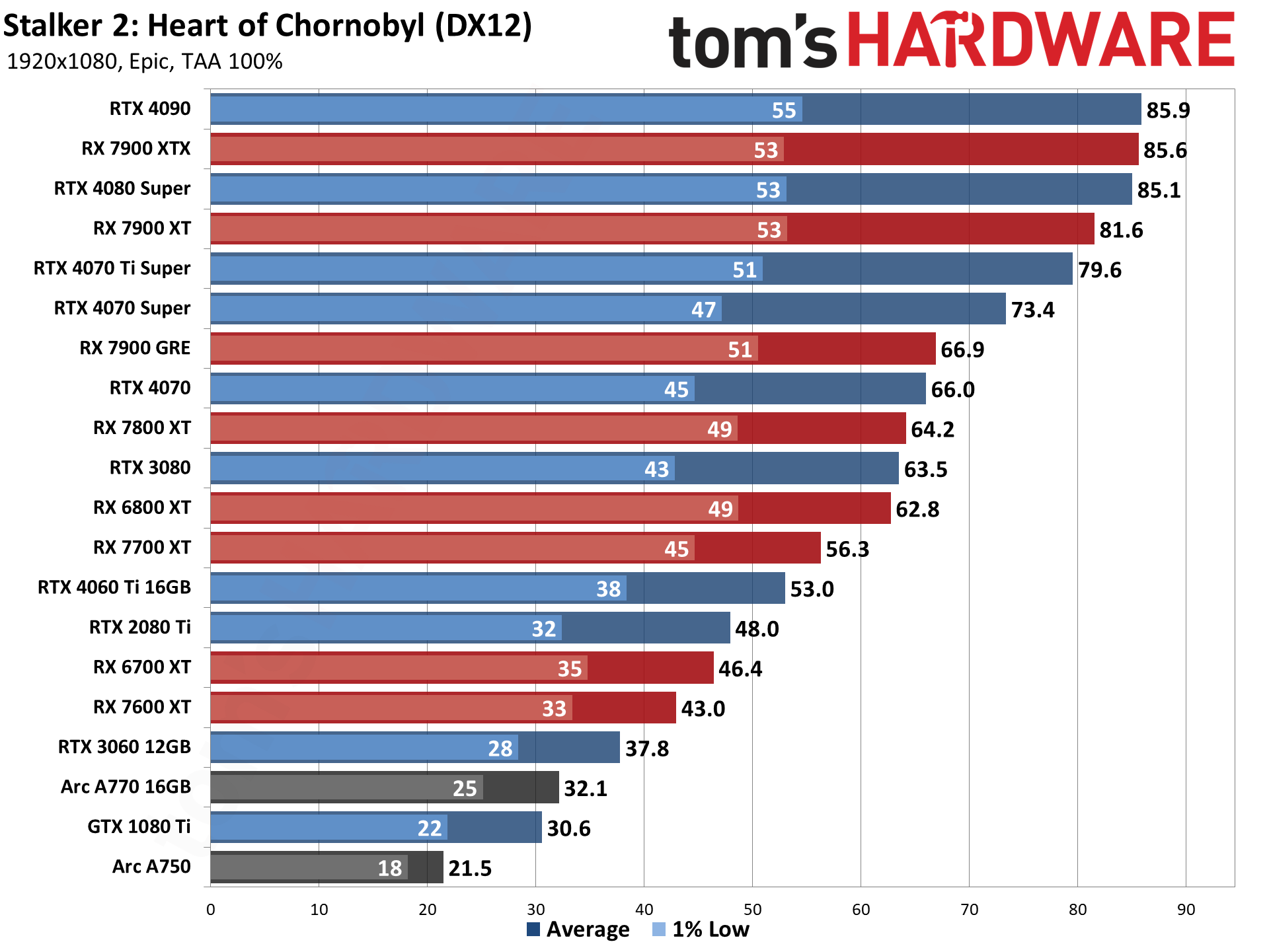

Stalker 2 1080p Epic GPU performance

For 60 fps or more at 1080p native and epic settings, Stalker 2 wants at least an RX 6800 XT or RTX 3080. The 7700 XT comes up short, as does the RTX 4060 Ti 16GB.

We dropped most of the 8GB GPUs from testing at these settings. 1080p high seems to be viable on them, but 1080p epic exceeds their VRAM capacity and performance absolutely tanks. The exception is the Arc A750, which still managed not to completely crumble, at 22 fps with minimums of 18 fps.

The RTX 4060 Ti 8GB card initially ran at around 48 fps, but after a minute or so it had dropped to around 20~25 fps with almost constant stutters and dips into the single digits. The RTX 4060 never showed higher performance and ran in the single digits as well. AMD's RX 7600 likewise started at a higher level (~20 fps) but then dropped to <10 fps after a minute or so running around the game world.

The CPU bottleneck is also very much present at 1080p and epic settings. Now, the three fastest GPUs are all tied at 85~86 fps, and the next two GPUs aren't too far off that pace. The 1% lows are also pretty consistent at 50–55 fps. Without framegen, on the Core i9-13900K, this is as good as it gets for the epic setting in Stalker 2.

This is, sadly, the state of many games these days, particularly those using Unreal Engine 5. Running at native resolution like we're doing is no longer the goal. Instead, developers are using upscaling and frame generation technologies as crutches that allow them to post higher performance numbers.

Sure, Stalker 2 looks good graphically, but not amazing. UE5 also has a lot of pop-in, where you'll see the geometry on objects massively shift, especially on trees in this particular game. It's distracting and not something I like to see on top-end hardware with maxed-out settings. Dragon Age: The Veilguard has similar-quality graphics in my book, and it manages to do away with a lot of the level-of-detail (LOD) pop-in that's so common in UE5 games. (And if it's not clear, no, Veilguard isn't using UE5.)

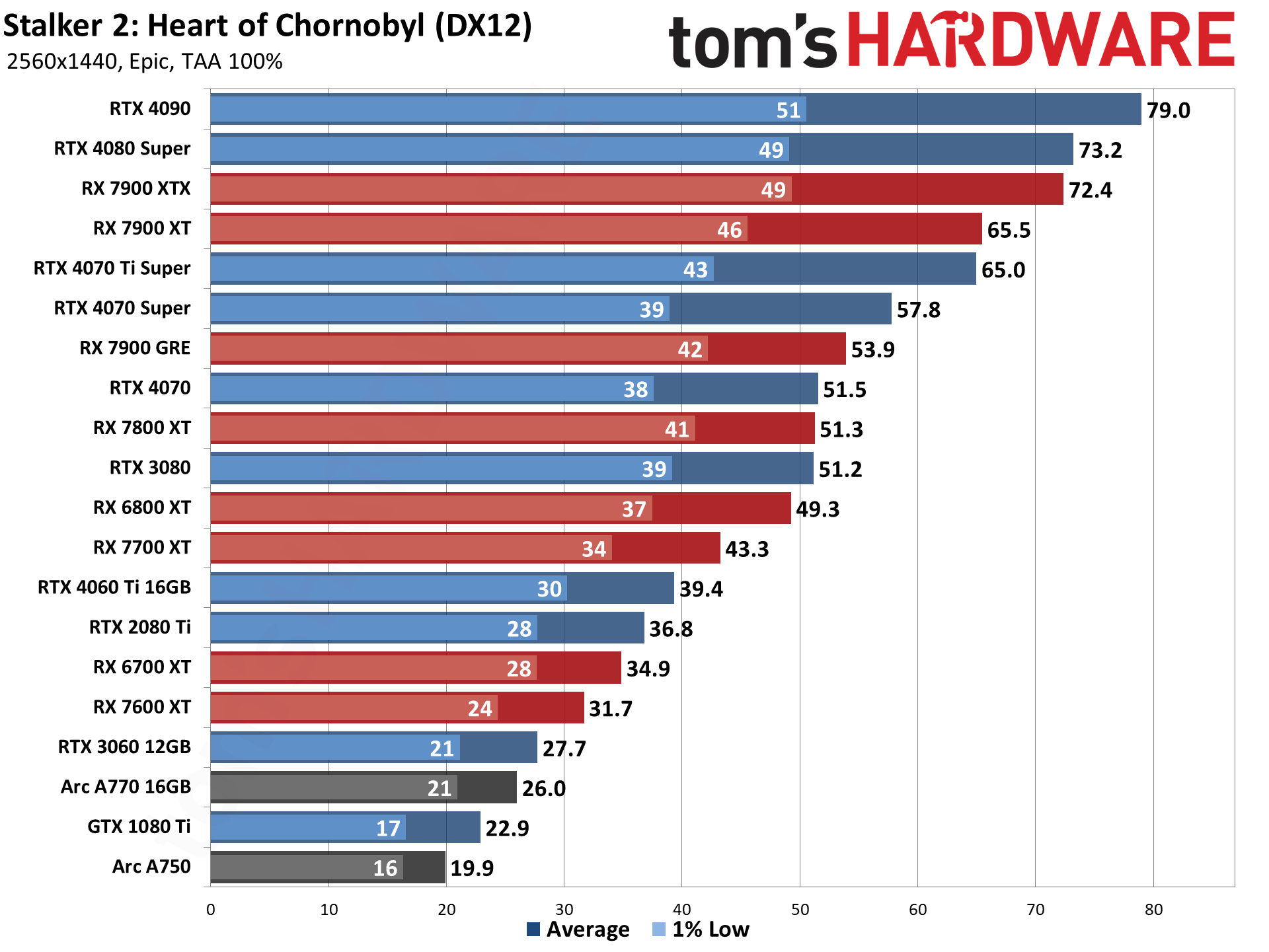

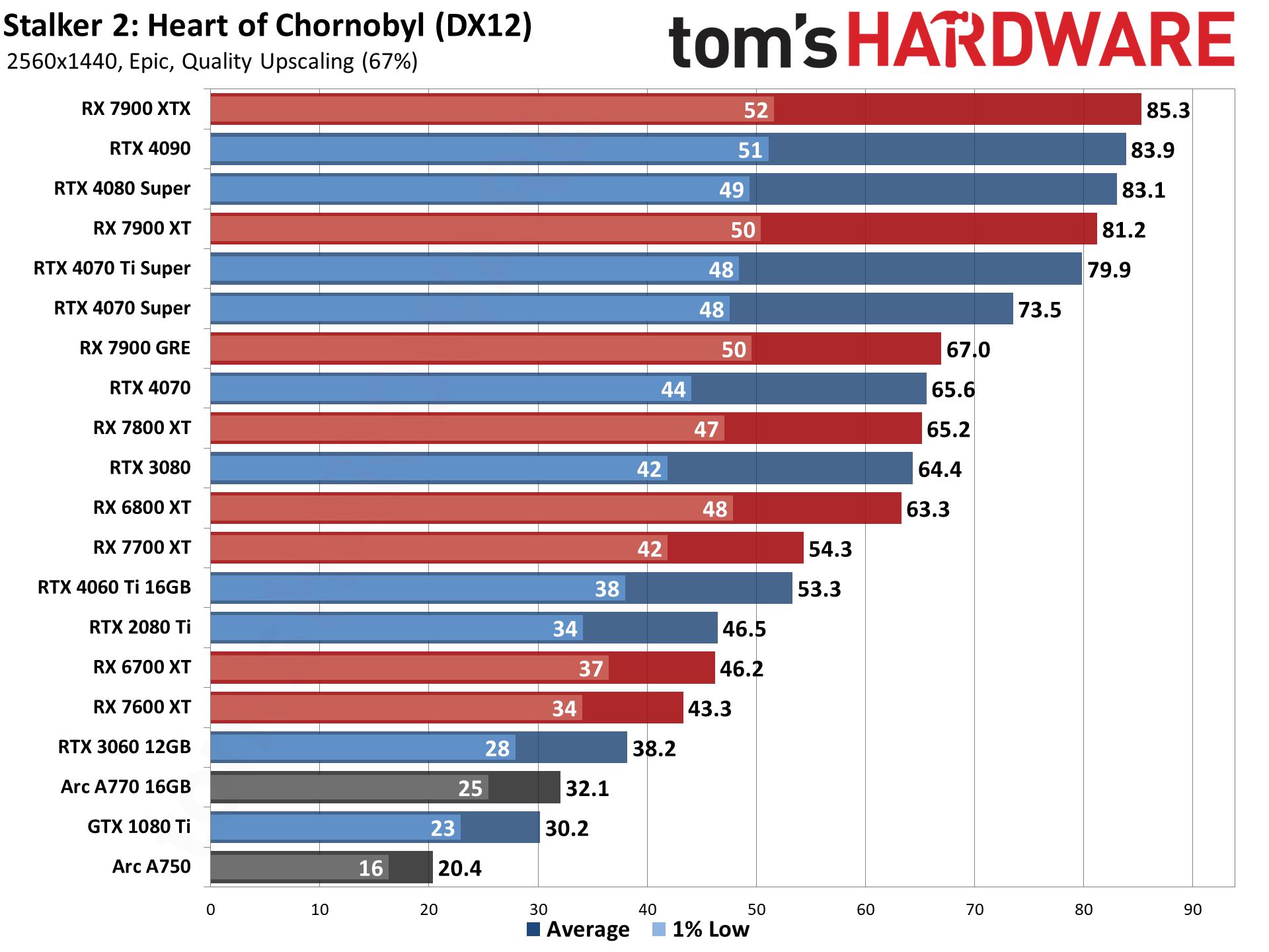

Stalker 2 1440p Epic GPU performance

What does it take to get 60 fps at 1440p native with maxed-out quality settings in Stalker 2? Only five of the tested GPUs suffice: the RTX 4070 Ti Super and above (that would be six GPUs if we included the vanilla 4080). The least expensive of these is the RX 7900 XT, currently starting at around $620, while the least expensive Nvidia GPU costs $740. If you're only looking for 30 fps, perhaps planning to use upscaling and framegen to get you above 60 fps, the RX 7600 XT and above are all at least playable.

At 1440p with epic settings, we finally start to move away from the CPU bottleneck, but the 4090 at least is still clearly being held back. Power use for many of the faster Nvidia GPUs is also quite low, a key indicator of CPU limitations: the 4090 used 294W, the 4080 Super drew 242W, and the 4070 Ti Super needed 241W. In contrast, AMD's top GPUs were still close to their rated power limits, with 360W on the 7900 XTX, 317W for the 7900 XT, and 256W on the 7900 GRE.
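The power-draw argument can be turned into a rough check by comparing measured board power with each card's rated power. In this sketch, the measured values come from the paragraph above, the rated figures are the reference-design specs (Nvidia TGP, AMD TBP), and the 90% cutoff is an arbitrary assumption chosen for illustration. Treat the result as a hint rather than proof, since low power draw can have other causes.

```python
# Measured board power at 1440p epic, from the paragraph above (watts).
MEASURED_W = {
    "RTX 4090": 294,
    "RTX 4080 Super": 242,
    "RTX 4070 Ti Super": 241,
    "RX 7900 XTX": 360,
    "RX 7900 XT": 317,
    "RX 7900 GRE": 256,
}

# Reference-design rated power (Nvidia TGP / AMD TBP), in watts.
# Partner cards may ship with higher limits, so ratios slightly above 1.0 can occur.
RATED_W = {
    "RTX 4090": 450,
    "RTX 4080 Super": 320,
    "RTX 4070 Ti Super": 285,
    "RX 7900 XTX": 355,
    "RX 7900 XT": 315,
    "RX 7900 GRE": 260,
}

# Arbitrary cutoff for illustration, not an established threshold.
CPU_LIMIT_HINT_BELOW = 0.90


def power_ratio(card: str) -> float:
    """Measured power as a fraction of rated power."""
    return MEASURED_W[card] / RATED_W[card]


def likely_cpu_limited(card: str) -> bool:
    """Heuristic: a card running well below its power limit is probably waiting on the CPU."""
    return power_ratio(card) < CPU_LIMIT_HINT_BELOW


for card in MEASURED_W:
    verdict = "likely CPU-limited" if likely_cpu_limited(card) else "near its power limit"
    print(f"{card:<18} {power_ratio(card):>5.0%}  {verdict}")
```

With these inputs, the three Nvidia cards land at roughly 65 to 85 percent of rated power and get flagged, while the three AMD cards sit at about 98 to 101 percent.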

In the overall standings, the 4090 and 4080 Super now take the top two spots, though the 7900 XTX matches the latter with only a 0.8 fps difference (a 1.1% difference, which is within the margin of error). The 7900 XT matches the 4070 Ti Super as well, which means it has the value advantage given its current price — you can check our GPU price index for current values, but right now the 4070 Ti Super costs around $780 while the 7900 XT only costs $640.

Similar discrepancies in price to performance exist throughout much of the current GPU landscape. The 4080 Super costs $950 or more while the 7900 XTX starts at $840. The 4070 and 7900 GRE both have a $550 MSRP, but here Nvidia undercuts AMD with a $500 card versus a $530 card — albeit with slightly lower performance. Nvidia's 4060 Ti 16GB doesn't look too good either, with a $450 price tag and performance that falls below the $390 7700 XT, never mind the $470 RX 7800 XT that's 30% faster at these settings.

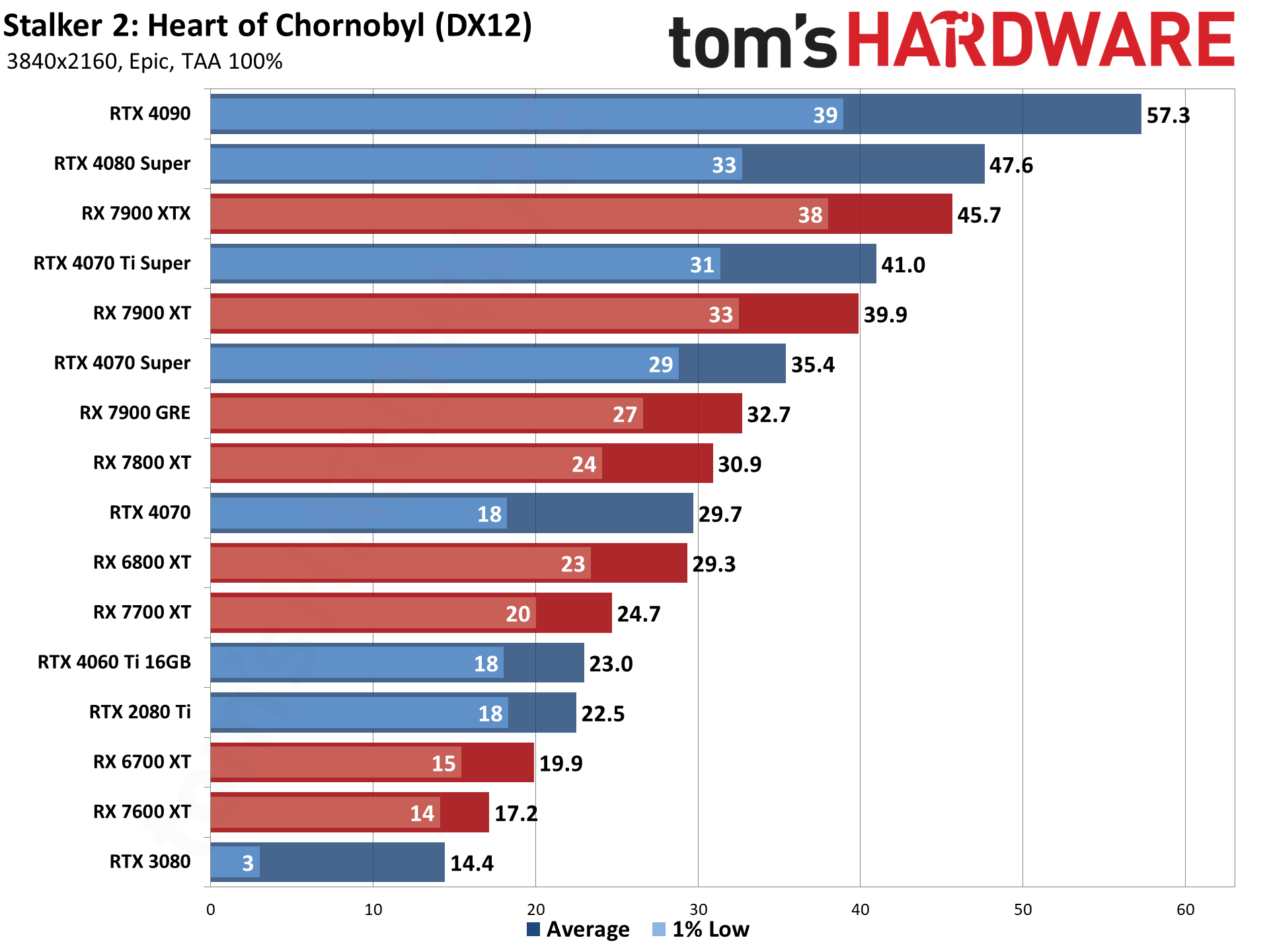

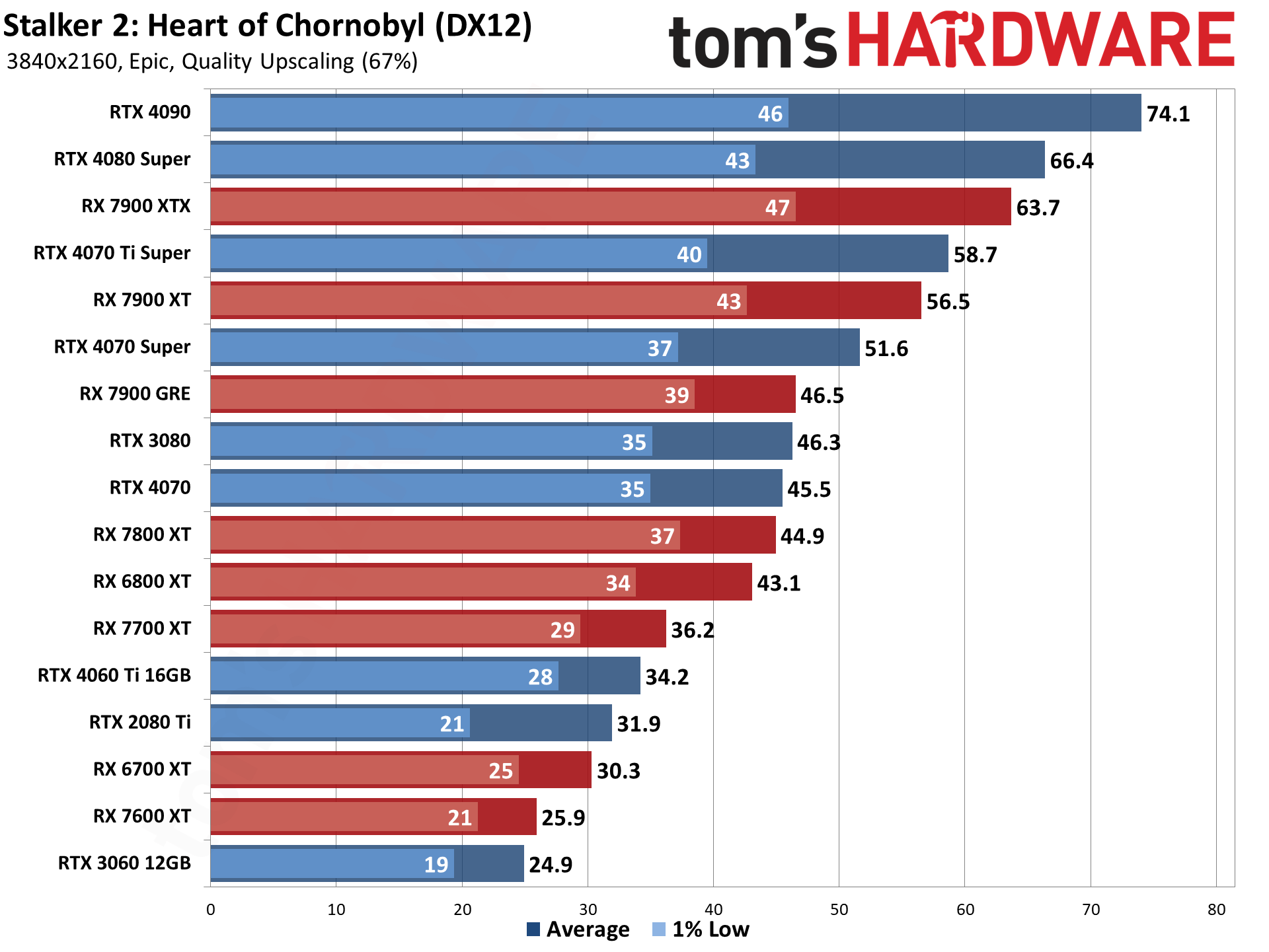

Stalker 2 4K Epic GPU performance

As usual, native 4K at maxed-out settings proves to be too much for most of the GPUs. Even the mighty RTX 4090 only manages 57 fps, which is definitely playable but not quite perfectly smooth. And while there are less demanding areas in Stalker 2, we're sure there are also regions that are even more taxing and would push framerates lower still.

The 4090 leads the second-string 4080 Super by 20%, which still isn't as large a gap as we often see, and the 4090 only pulls 346W, so something is keeping it from reaching higher levels of performance. The RTX 4080 Super in turn beats the RX 7900 XTX by a couple of FPS in average performance, though minimums are more stable on the AMD cards. That's actually something we saw throughout our testing: AMD's GPUs, probably thanks to having more VRAM at most tiers, end up with better minimum FPS.

VRAM capacity also becomes an issue on GPUs with less than 16GB at these settings. The RTX 4070 falls below both the 7800 XT and 7900 GRE, and AMD's RX 7700 XT likewise takes a tumble, down to the low 20s. And check out the RTX 3080, which collapses to sub-15 fps due to its insufficient 10GB of VRAM. Just 1GB more on the RTX 2080 Ti allows it to stay above 20 fps.

If you're shooting for just 30 fps average at 4K native, with a bit of rounding, the RX 6800 XT and RTX 4070 and above will suffice. And we'll see how upscaling and framegen improve the situation in a moment.

Stalker 2 with upscaling

Given the obvious CPU bottlenecks at lower resolutions, it shouldn't be too surprising that those same limitations show up when we turn on upscaling. We skipped 1080p testing for that reason, focusing on the 1440p and 4K results. They're still pretty underwhelming at times.

At 1440p epic settings with quality mode upscaling (67% render resolution), the top six GPUs all land in the 80–85 fps range, with minimums of 48–52 fps. So, the 13900K isn't able to keep the GPUs fully saturated with work. We'll be testing a Ryzen 7 9800X3D shortly to see how that changes things, and maybe a few other CPUs as well, so stay tuned.

Lower-tier GPUs do benefit from upscaling, and the further they are from 80 fps, the bigger the potential boost. The 7600 XT, for example, sees a 37% increase over native 1440p, and the 4060 Ti 16GB improves by a similar 36%. Everything from the RX 7800 XT and up manages to break 60 fps as well, and all of the tested cards are definitely playable, though we had already dropped GPUs like the 8GB cards from our testing at 1080p due to performance issues.

The Intel Arc A750 is the one anomaly. First, the A750 still manages to run the game without apparently running out of VRAM, which none of the other 8GB GPUs we checked could do. But it also only sees a 2% improvement in performance from XeSS Ultra Quality mode upscaling. (Sorry, Intel, but we're keeping the scaling factor at a constant 67%.) The A770 got a significant 23% boost from the same setting, so VRAM or something else is holding the A750 back.

The fastest Nvidia and AMD GPUs see less benefit from upscaling at 1440p. The 4090 only increased framerates by 6% versus native, because it was already near 80 fps. The 4080 Super improved by 13%, while the 4070 Ti Super got a 23% boost. AMD's 7900 XTX improved by 18%, while the 7900 XT and GRE got a 24% increase.

But we do need to mention that DLSS, FSR3, and XeSS are not the same. That's part of why we stick to TAA 100% resolution as the baseline. Software algorithms to improve performance can be beneficial, but fundamentally the 7700 XT using FSR3 upscaling does not look the same as the 4060 Ti 16GB using DLSS upscaling, and the A770 with XeSS upscaling offers a third option. We would say the various upscalers are all viable, particularly if you're not too finicky, but DLSS continues to look better. FSR3 upscaling looked particularly bad at 1080p as well, more like 720p with spatial upscaling than a temporal upscale.

4K epic with upscaling reduces the CPU bottleneck, and while the rankings are mostly what we would consider "normal," there are still bottlenecks. As with 1440p, the performance improvement offered by quality mode upscaling varies by how far away from the CPU limit the GPU starts.

The 4090, for example, only beats the 4080 Super by 12%, which is basically in line with our larger GPU benchmarks hierarchy results for 1440p ultra in pure rasterization performance. But AMD's 7900 XTX shows a 13% lead over the 7900 XT, compared to just 7% in our hierarchy.

In games where we're clearly not CPU limited, though? The RTX 4090 can be up to 30–35 percent faster than the 4080 Super. That often requires the use of hardware ray tracing features, or at least a game that doesn't run into clear CPU limitations.

Only the top three GPUs manage to break 60 fps, and if you look at the native 1440p results, they're pretty similar. Native 1440p runs a bit faster, because 4K with quality upscaling first renders at 1440p and then has to run a relatively complex upscaling algorithm.
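Quality mode's 67% figure applies to each axis, which is why 4K with quality upscaling renders internally at 1440p. Here's a quick sketch, assuming an exact 2/3 scale (the "67%" above is a rounded value, and each upscaler may round the internal resolution slightly differently):

```python
from fractions import Fraction

# Quality-mode scale factor per axis. The article's "67%" is 2/3 rounded;
# an exact Fraction avoids floating-point rounding surprises.
QUALITY_SCALE = Fraction(2, 3)


def render_resolution(width: int, height: int,
                      scale: Fraction = QUALITY_SCALE) -> tuple[int, int]:
    """Internal render resolution for a given output resolution (nearest pixel)."""
    return round(width * scale), round(height * scale)


def rendered_pixel_share(scale: Fraction = QUALITY_SCALE) -> Fraction:
    """Share of output pixels actually rendered; the scale applies to both axes."""
    return scale * scale


print(render_resolution(3840, 2160))  # (2560, 1440)
print(render_resolution(2560, 1440))  # (1707, 960)
print(rendered_pixel_share())         # 4/9
```

Because the scale applies to both axes, quality mode renders only 4/9 (about 44%) of the output pixels, and the upscaling pass has to reconstruct the rest, which is the extra work that keeps 4K quality mode slightly behind native 1440p.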

Stalker 2 with upscaling and frame generation

Frame generation is often a highly controversial feature, and rightly so. Some people refer to the generated frames as "fake frames," and they're not really wrong. Because there's no additional sampling of user input, and with the added overhead of framegen, it often feels more like two steps backward for two steps forward.

Stalker 2 supports FSR 3.1 and DLSS 3 framegen, which means if you really want to, you can use DLSS upscaling with FSR framegen. Sort of. That worked fine in our testing of the RTX 3080, 3060, and even the 2080 Ti. But with the RTX 4090? FSR3 framegen did nothing. It's like that option was locked out by the game code. Which... wouldn't be shocking, as it's an Nvidia-promoted game.

Using FSR3 framegen with a 40-series GPU could potentially yield higher performance than DLSS3 framegen on Nvidia's cards. And that might look bad for Nvidia, even if FSR3 framegen doesn't look as nice. (We can't do a direct comparison, however, since it's not working.) What we can say is this.

At 1440p, which we'll get to in a moment, FSR3 framegen on the 3080 provided a 62% boost to fps versus 1440p with only DLSS upscaling. The similarly performing RTX 4070 came out just ahead of the 3080 using upscaling alone, but with DLSS upscaling and framegen, it only saw a 49% boost to framerates. So, it's maybe only a 10% difference, at least on that level of GPU, but it would have been nice to give FSR3 framegen a shot with the 40-series GPUs.

Because FSR3 framegen isn't working on the RTX 40-series cards, when we tried the RTX 4070 Super using FSR3 vs DLSS3, it seemed like DLSS was 38% faster. But while FSR3 upscaling works on all the Ada GPUs, framegen does not. I'm sure it's just a "driver bug" or "game code bug" though, and by calling it out like this it's more likely to get fixed.

But again, before we get to the charts, this is a critical point that many overlook. These upscaling and frame generation algorithms are not "equivalent work." Yes, FSR 3.1 is technically open source, but that doesn't mean AMD didn't do a lot of work optimizing it to work better on its own hardware. And maybe GSC just didn't feel a need to enable FSR3 framegen for RTX 40-series cards — the only GPUs right now that can use DLSS framegen.

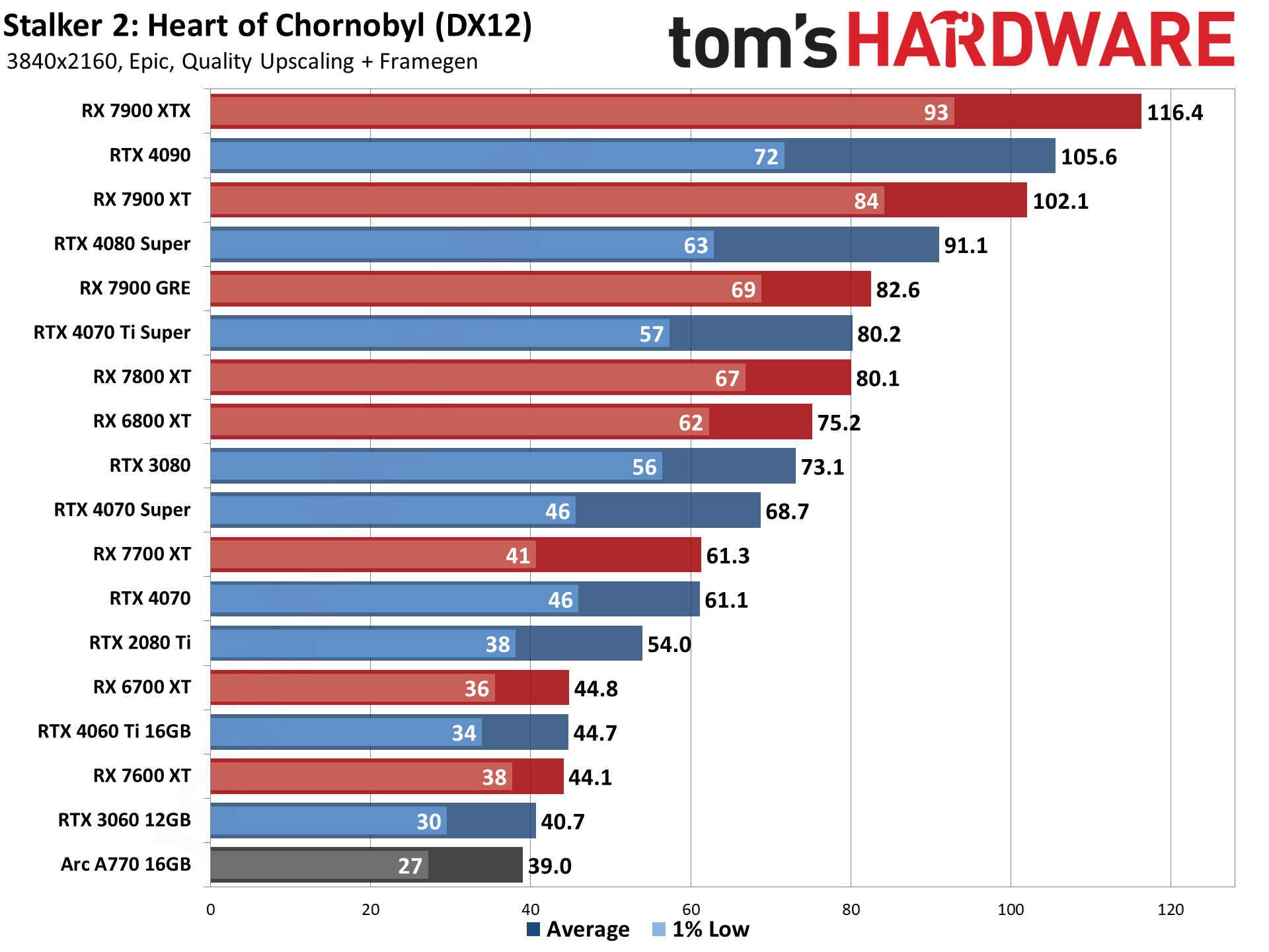

But the combination of various upscaling algorithms with two different framegen options means that, if we were to take a screenshot of 1440p epic with upscaling and framegen on the various cards, we'd end up with qualitatively different outputs. And so, we have apples and oranges that we get to compare and debate. Here are the results with both quality upscaling and framegen enabled.

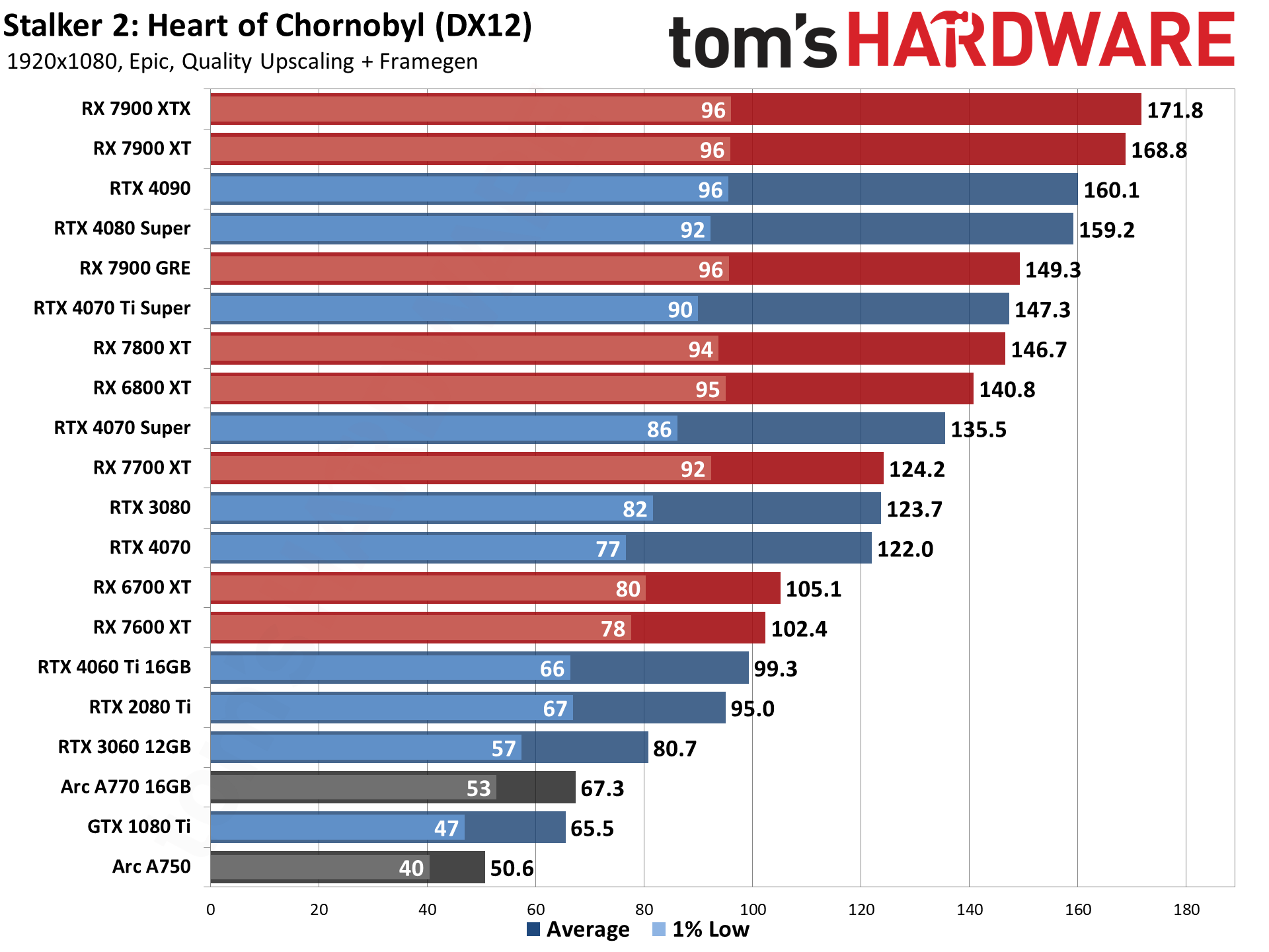

Starting with 1080p, because of the heavy CPU bottleneck, framegen gives some significant improvements to perceived FPS. However, as we've said in the past, this is basically "frame smoothing" rather than "frame generation" — because no new user input gets factored into the generated frames. It's not inherently bad, and in Stalker 2 the overall performance uplift can make it worthwhile, but the user input sampling rate will be half of the shown FPS values.

And again, as we've seen in some other games, FSR3 gives AMD a bigger boost in overall performance than DLSS gives to Nvidia. It's not a "fair" comparison in that the GPUs are not running the same workloads, and using FSR3 on Nvidia doesn't fix things as it's only getting optimizations for AMD GPUs as far as we can tell. 1080p with upscaling and framegen absolutely looks worse on the AMD cards, even if it runs faster.

But let's look at the four groupings quickly. We have Arc with XeSS + FSR3, AMD with pure FSR3, RTX 20/30 with DLSS and FSR3, and RTX 40 with pure DLSS. The two Arc GPUs both see over a 100% improvement in performance from our native 1080p epic results, 110% for the A770 and 136% for the A750. AMD's GPUs see a 120% increase on average as well, but that's brought down a bit by the lower scaling (due to CPU limits) of the 7900 XT and XTX (107% and 101%, respectively).

For Nvidia, the RTX 2080 Ti, 3060, and 3080 all see a net improvement vs native of around 100% — 98% on the 2080 Ti, 114% for the 3060, and 95% for the 3080. That might seem about the same as the other GPUs, but then we get to the RTX 40-series. All of those see an 85–87 percent improvement. Some of that will be from upscaling, though, and we didn't test 1080p with only upscaling due to the amount of time already spent. But in short, it looks like DLSS framegen might be providing less of a boost than FSR3 framegen.

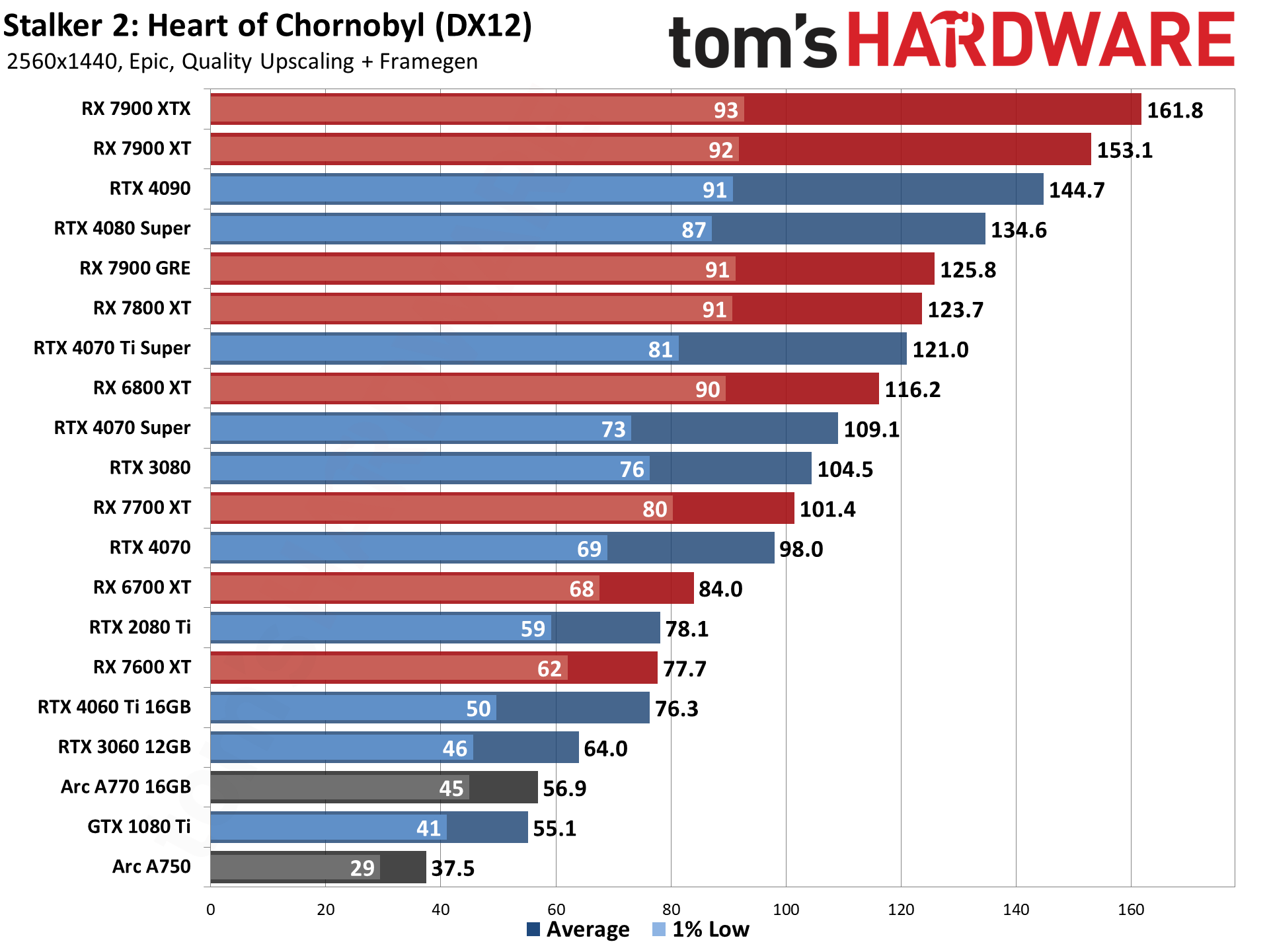

The higher output resolution of 1440p does make some of the rendering issues that were clearly visible at 1080p go away for FSR3. You have to start pixel peeping to really see differences, so in some sense this is a win. But this is also why we don't like games or graphics card companies using upscaling and framegen as the default comparison. That's ripe for abuse by using only the optimal results to make one company's products look better.

The important thing, insofar as Stalker 2 goes, is that nearly every GPU in this chart is still eminently playable. Yes, user input still happens at half the rate shown in the chart, but it does look smoother and that tends to trick your brain into the game feeling smoother as well. When framegen only yields a 20–30 percent improvement in FPS, that's not very good, but here we're getting much better scaling.

This time, we have upscaling alone vs upscaling and framegen, so we can isolate how much of a boost framegen provides. The RTX 4060 Ti 16GB went from 53.3 to 76.3 fps, a 43% improvement, while the higher-tier 4090 gets a 73% boost, so perhaps memory bandwidth or some other factor is holding back the lower-tier cards. The lesser 40-series cards get a 43–52 percent improvement from framegen, while the 4080 Super sees a 62% increase and the 4090, as noted, improves by 73%. The 2080 Ti, 3060, and 3080 all use FSR3 framegen, and those three GPUs saw 68%, 68%, and 62% improvements, respectively.
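The percentages in this section are simple ratios of framerates. A minimal sketch, using the RTX 4060 Ti 16GB numbers from above (`uplift_pct` is an illustrative name, not part of any tool):

```python
def uplift_pct(before_fps: float, after_fps: float) -> float:
    """Percentage increase when going from before_fps to after_fps."""
    return (after_fps / before_fps - 1.0) * 100.0


# RTX 4060 Ti 16GB, 1440p epic: upscaling only -> upscaling + DLSS framegen.
print(f"{uplift_pct(53.3, 76.3):.0f}%")  # 43%

# Two uplifts can be compared as a ratio of their multipliers:
# a 62% boost (RTX 3080) vs a 49% boost (RTX 4070) is 1.62 / 1.49.
print(f"{uplift_pct(1.49, 1.62):.1f}%")  # 8.7%
```

The second line shows where the earlier "maybe only a 10% difference" estimate comes from: the 13-point gap between the two boosts works out to a relative difference of just under 9%.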

AMD overall sees a much larger boost from FSR3 framegen. The RX 7600 XT goes from 43.3 to 77.7 fps, an 80% increase, and that's the "worst" of the results. The 6700 XT and 6800 XT saw 82% and 84% improvements, respectively, while the higher-tier 7000-series cards all improve by 88–90 percent. That's very close to the theoretical 100% boost that framegen can offer (which shouldn't ever be realized unless there's other wonkiness involved).
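One way to see why measured gains stop short of 100% is a simplified cost model. This is an illustration under stated assumptions, not how FSR3 or DLSS actually schedule their work: it assumes the game is fully GPU-bound, that framegen adds a fixed amount of GPU time to every rendered frame, and that exactly one generated frame is shown per rendered frame. It ignores CPU-limited cases, where idle GPU time can hide some of the cost.

```python
def framegen_fps(base_fps: float, overhead_ms: float) -> float:
    """Displayed fps under a simplified 2x frame-generation model.

    Hypothetical model: each rendered frame costs its original time plus a
    fixed overhead_ms of framegen work, and every rendered frame is followed
    by one generated frame.
    """
    rendered_fps = 1000.0 / (1000.0 / base_fps + overhead_ms)
    return 2.0 * rendered_fps


def implied_overhead_ms(base_fps: float, fg_fps: float) -> float:
    """Invert the model: per-frame overhead that would explain an observed result."""
    return 2000.0 / fg_fps - 1000.0 / base_fps


# With zero overhead, the model gives exactly the theoretical 2x (100% uplift).
print(framegen_fps(43.3, 0.0))

# RX 7600 XT at 1440p epic went from 43.3 to 77.7 fps with FSR3 framegen;
# under this model, that corresponds to roughly 2.6 ms of extra work per rendered frame.
print(f"{implied_overhead_ms(43.3, 77.7):.1f} ms")
```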

4K with upscaling and framegen also yields mostly playable results on all the GPUs we tested — we say "mostly" because cards like the 4060 Ti 16GB, 7600 XT, and 6700 XT at just 44–45 fps definitely start to feel sluggish. That's because the input sampling rate is only around 22 fps. But the 4070 and above all break 60 fps of generated performance, which works fine overall.

Note that 12GB cards sometimes struggled at these settings, especially over longer play sessions. So the 4070 and 7700 XT (and 4070 Super and 4070 Ti) become more likely to see short bursts of highly unstable framerates — meaning, you might get individual frames falling into the single digit FPS range.

That also happened on the RTX 3080, though its higher memory bandwidth seems to have helped, and perhaps it also benefited from relying on FSR3 framegen. Whereas it managed only 14.4 fps at native 4K, switching to 1440p upscaled to 4K, plus FSR3 framegen, yields a somewhat playable result of 46.3 fps. We say "somewhat" because, as noted above, that actually represents a 23 fps input sampling rate, and it still feels sluggish.
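The "input sampling rate" figures follow from how 2x frame generation works: one interpolated frame is inserted for every rendered frame, so only half of the displayed frames come from fresh game state. A minimal sketch, assuming exactly one generated frame per rendered frame, as with the DLSS 3 and FSR3 framegen used here:

```python
def input_rate_fps(displayed_fps: float) -> float:
    """Rendered (input-sampled) frames per second under 2x frame generation.

    Assumes one generated frame per rendered frame, so half of the displayed
    frames are rendered from new game state.
    """
    return displayed_fps / 2.0


def frame_interval_ms(fps: float) -> float:
    """Time between consecutive frames at a given rate, in milliseconds."""
    return 1000.0 / fps


# Displayed fps at 4K with upscaling + framegen, from the text above:
# RTX 3080 (46.3 fps) and the slowest tier of cards (~44 fps).
for shown in (46.3, 44.0):
    rate = input_rate_fps(shown)
    print(f"{shown:.1f} fps shown -> {rate:.1f} fps input "
          f"({frame_interval_ms(rate):.1f} ms between input samples)")
```

At roughly 22 to 23 fps of input sampling, frames that reflect new input arrive about 43 to 46 ms apart, which matches the sluggish feel described above.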

AMD's GPUs still see around a 70–83 percent improvement from framegen versus upscaling alone, though the 6700 XT only saw a 48% increase. The RTX 40-series cards only got a 31% (4060 Ti 16GB) to 43% (4090) improvement from framegen at 4K. That explains why all the AMD GPUs jumped ahead of their usual competitors. Apples and oranges, as I was saying earlier.

Stalker 2 settings and image quality

We haven't had time to do a full image quality analysis, so check back and we'll update this section.

Stalker 2 closing thoughts

There's a lot to digest, but as far as performance and system requirements go, our advice is to make sure you exceed the minimum requirements. Also, plan on using upscaling and framegen on most GPUs, especially at higher resolutions.

We still need to test some CPUs, which is something we're working on now (and testing Flight Simulator 2024 at the same time). But if you're wondering if Stalker 2 properly carries forward the Stalker legacy, it does — in both good and bad ways.

The graphics have improved compared to the older games, as well they should after 15+ years. But a lot of the gameplay so far feels quite similar. You're constantly running into enemies that have PDAs that reveal stash locations, anomalies are all over the place, and you have to try to walk the fine line between the various factions. If you like the post-apocalyptic (-esque) setting of the Chornobyl area, returning to it again after all these years will almost certainly hit all the right pleasure centers of your brain.

It's still frustrating to see Unreal Engine 5 apparently requiring so much help to reach higher levels of performance. Framegen in particular should not be the baseline recommendation. It has some clear drawbacks, and tends to be more useful as a marketing feature. We'd rather have slightly less advanced graphics that run well, over interpolated frames to smooth out some of the choppiness. Other games and game engines seem to manage this much better than UE5, but Unreal Engine gets used a lot because it makes life easier on the game developers — often at the cost of performance.