AMD's Ryzen AI 300 series of mobile processors handily beats Intel's mobile competition at local large language model (LLM) performance, according to recent in-house testing by AMD. A new post on the company's community blog outlines the tests AMD ran against Team Blue and explains how interested users can get the most out of LM Studio, a popular LLM app.

Most of AMD's tests were performed in LM Studio, a desktop app for downloading and hosting LLMs locally. The software, built on the llama.cpp library, supports CPU and/or GPU acceleration for running LLMs and offers other controls over how the models behave.

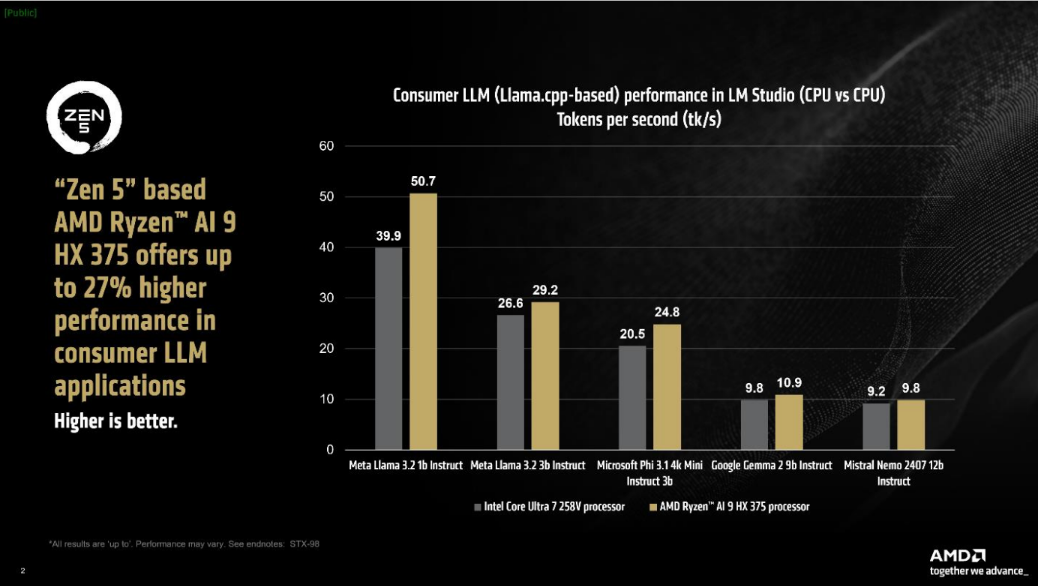

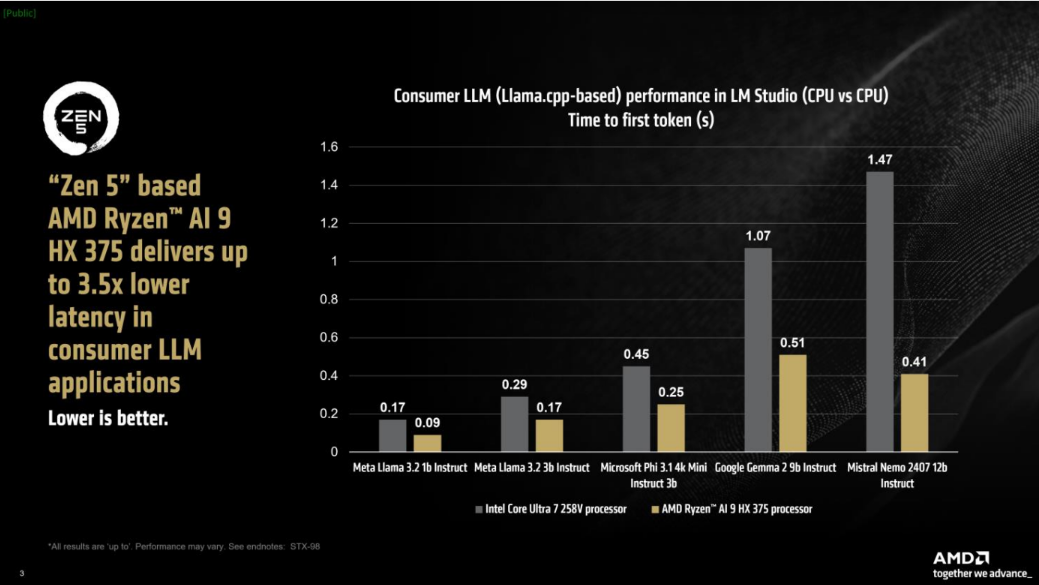

Using the 1b and 3b variants of Meta's Llama 3.2, Microsoft's Phi 3.1 4k Mini Instruct 3b, Google's Gemma 2 9b, and Mistral's Nemo 2407 12b, AMD tested laptops powered by its flagship Ryzen AI 9 HX 375 and Intel's midrange Core Ultra 7 258V. The two machines were compared on speed, measured in tokens per second, and responsiveness, measured as the time it took to generate the first token. These roughly correspond to the number of words printed on-screen per second and the wait between submitting a prompt and the LLM beginning its output.
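The two metrics can be pinned down with a short Python sketch. It assumes you have the time a prompt was submitted and a timestamp for each streamed token, all from the same clock; the function and field names are hypothetical, and this is one common way to define the metrics, not necessarily how LM Studio or AMD computed them. Here throughput is measured over the generation phase only, so the wait for the first token does not drag down the tokens-per-second figure.

```python
from dataclasses import dataclass


@dataclass
class GenerationMetrics:
    time_to_first_token_s: float  # wait between prompt submission and first token
    tokens_per_second: float      # throughput after the first token arrives


def measure_generation(submitted_at: float, token_times: list[float]) -> GenerationMetrics:
    """Compute time to first token and tokens/s from timestamps in seconds.

    Hypothetical helper: assumes token_times is sorted and uses the same
    clock as submitted_at.
    """
    if len(token_times) < 2:
        raise ValueError("need at least two token timestamps")
    ttft = token_times[0] - submitted_at
    decode_time = token_times[-1] - token_times[0]
    # Tokens emitted after the first one, divided by the time they took.
    tps = (len(token_times) - 1) / decode_time if decode_time > 0 else float("inf")
    return GenerationMetrics(ttft, tps)


if __name__ == "__main__":
    # Hypothetical timestamps: prompt sent at t=0, five tokens streamed back.
    m = measure_generation(0.0, [0.5, 0.75, 1.0, 1.25, 1.5])
    print(m.time_to_first_token_s, m.tokens_per_second)  # 0.5 4.0
```

In a real measurement, the timestamps would typically come from something like Python's `time.perf_counter()` as each token streams in. Note that a token is often a word fragment rather than a whole word, which is why tokens per second only roughly tracks words per second.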

As seen in the graphs above, the Ryzen AI 9 HX 375 outperforms the Core Ultra 7 258V across all five tested LLMs, in both speed and time to first token. At its most dominant, AMD's chip is 27% faster than Intel's. It is not known which laptops were used for the tests, but AMD was quick to point out that its laptop ran slower RAM than the Intel machine (7500 MT/s vs. 8533 MT/s), even though faster RAM typically means better LLM performance.
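Percentage claims like "27% faster" depend on which direction counts as better and which ratio is taken. The Python sketch below shows two common conventions; the function names and input values are hypothetical illustrations rather than AMD's data, and the article does not say which convention AMD used for time to first token.

```python
def throughput_gain_pct(ours_tps: float, theirs_tps: float) -> float:
    """Percent by which our tokens/s exceeds theirs (higher is better)."""
    if ours_tps <= 0 or theirs_tps <= 0:
        raise ValueError("throughput values must be positive")
    return (ours_tps / theirs_tps - 1.0) * 100.0


def latency_reduction_pct(ours_ttft_s: float, theirs_ttft_s: float) -> float:
    """Percent less time-to-first-token than theirs (lower is better)."""
    if ours_ttft_s <= 0 or theirs_ttft_s <= 0:
        raise ValueError("latency values must be positive")
    return (1.0 - ours_ttft_s / theirs_ttft_s) * 100.0


if __name__ == "__main__":
    # Hypothetical numbers, chosen only to illustrate the arithmetic.
    print(throughput_gain_pct(12.5, 10.0))   # 25.0
    print(latency_reduction_pct(0.75, 1.0))  # 25.0
    # The same latencies expressed as a speedup ratio instead of a reduction:
    print(round((1.0 / 0.75 - 1.0) * 100.0, 1))  # 33.3
```

A 25% cut in time to first token is the same result as responding about 33% faster, which is why it helps to know the convention behind a vendor's headline number.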

It should be noted that Intel's Core Ultra 7 258V is not on a level playing field with the HX 375: the 258V sits in the middle of Intel's 200-series SKUs, with a max turbo speed of 4.8 GHz versus the HX 375's 5.1 GHz. Pitting AMD's flagship Strix Point chip against Intel's midrange part is a bit unfair, so take the 27% improvement claim with that in mind.

AMD also demonstrated LM Studio's GPU acceleration features by testing the HX 375 against itself. While the dedicated NPU in Ryzen AI 300-series laptops is meant to be the driving force for AI tasks, on-demand, application-level AI tasks are more likely to run on the iGPU. AMD's GPU-accelerated tests, using the Vulkan API in LM Studio, favored the HX 375 so heavily that AMD did not include Intel's performance numbers with GPU acceleration turned on. With GPU acceleration enabled, the Ryzen AI 9 HX 375 achieved up to 20% more tokens per second than without it.

With so much current press around AI performance in PCs, vendors are eager to prove that AI matters to the end user. Apps like LM Studio and Intel's AI Playground aim to offer a user-friendly, foolproof way to run the latest LLMs, with a billion or more parameters, for personal use. Whether running LLMs locally, and tuning a computer to get the most out of them, matters to most users is another story.