For fans of the reclusive R&B artist Frank Ocean, the short audio clips posted to group chat service Discord in early April were tantalizing. They purported to be leaked studio tracks from Ocean, who hasn’t released a full studio album since 2016 but has teased a forthcoming new project.

Ocean-obsessed music collectors offered to buy the tracks for thousands of dollars to get them before everyone else. There was only one problem: The tracks were fakes, created with a new kind of artificial intelligence that is sending shock waves through the music industry and raising thorny questions about ethics, copyright, and how artists can protect their personal brand.

These so-called musical deepfakes have exploded in number because in the past six months, the technology to make realistic imitations of someone’s voice has become widely accessible and inexpensive. This is a potential nightmare for the recording industry. If current trends continue unchecked, artists could lose control over their sound and their earnings. Meanwhile, record labels risk losing profits.

The new reality for the music industry is part of a broader shake-up in the entertainment industry wrought by increasingly sophisticated A.I. The technology is already used by movie studios for special effects. In the future, studios hope to also enlist it to write scripts and provide voices for actors—all of which comes with serious legal considerations.

For now, the music industry’s legal protections against A.I. mimicry are uncertain. The phenomenon is so new that there are no laws that specifically address it or case rulings to serve as a guide.

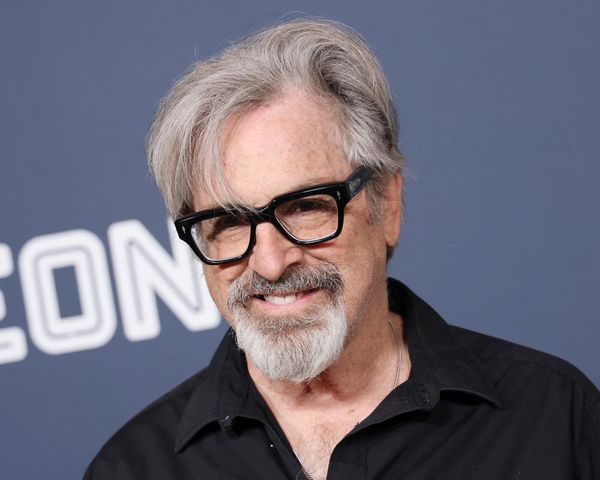

“Anyone who tells you that the legal implications are clear, one way or the other, is making stuff up,” says Neil Turkewitz, a former Recording Industry Association of America executive who has emerged as one of the leading critics of how today’s generative A.I. has been developed.

Ocean was hardly the first artist to have his voice and musical style mimicked by A.I. A deepfake track titled “Heart on My Sleeve,” purporting to be a collab from superstars Drake and The Weeknd (Abel Tesfaye), became a viral hit, ringing up millions of plays across Spotify, TikTok, and YouTube over just a few days in April before Universal Music Group, which represents both Drake and The Weeknd, demanded that the sites take it down. Fake songs from rap artists Ye and Playboi Carti, plus everyone from Ariana Grande to Oasis, have also appeared online.


Using A.I. to create music in the voice and style of a popular artist is relatively easy. Some underground music sites offer pre-built templates that can mimic the voices of dozens of popular musicians. And A.I. software to clone voices and imitate musical styles is readily available. For example, Jukebox, A.I. software from OpenAI (the creator of the buzzy A.I. chatbot ChatGPT), produces singing in the style of well-known musicians along with original lyrics written by the technology.

In the U.S. and most other countries, it isn’t possible to copyright your voice or your particular musical sound or vibe, says Jonathan Coote, a London-based intellectual property attorney with global law firm Clifford Chance. In 2015, the estate of singer Marvin Gaye famously won a $5.3 million judgment against singers Robin Thicke and Pharrell Williams over their smash hit “Blurred Lines,” which the Gaye estate said was based on Gaye’s 1977 song “Got to Give It Up,” even though “Blurred Lines” did not use any of the same notes or lyrics.

At the time, legal scholars thought the case could establish a precedent that a “vibe” could indeed be protected, but subsequent rulings have severely limited the case’s potential ramifications, Coote says. For a court to find infringement, a song must include passages that are “substantially similar” to an earlier song, which would likely mean that specific original elements, such as a melody, chord progression, or lyrics, were copied.

Because of this, singers and record labels will have to fall back on other strategies to combat deepfakes. In the U.S., claims can be brought for violating a musician’s or label’s “right of publicity,” says Mark Lemley, a professor specializing in science and technology law at Stanford Law School.

Another legal strategy may be to argue that simply training an A.I. model on an artist’s work, a process that involves feeding entire songs into software without permission, violates copyright. Tech companies that develop A.I. software have argued that A.I. training should be protected by a “fair use” defense against copyright claims.

A.I.’s day in court

Lemley is among those who think the tech companies have a good argument. He notes that courts, nearly a decade ago, allowed Google to copy vast libraries of books without consent in order to make snippets of them available and searchable online.

The key, Lemley says, is that the copies were not themselves distributed. A.I. training, he says, is no different. Lemley thinks the courts may draw a line, though, at A.I. models explicitly designed to ape a particular artist. Except in the case of parody, he does not think those should be protected. “If I train a model only on Taylor Swift songs,” Lemley says, the law “will find that problematic.”

Others see the fair use argument as fundamentally flawed. David Newhoff, a copyright advocate and writer in Washington, D.C., argues that the entire purpose of fair use is to promote new authorship, and authorship, by definition in the U.S., applies only to works created by humans. It would stretch fair use beyond the breaking point to extend it to A.I. training, he says.

The courts will soon get a chance to decide: Photo agency Getty Images has sued Stability AI, one of the creators of a popular open-source tool that turns text into images, for alleged copyright violations in its use of Getty’s photos for training. There is also a class-action lawsuit by a group of artists against Stability AI. Those cases are likely to hinge on the issue of fair use.

Turkewitz argues that human values and ethics, not legal technicalities, should guide policymakers. The cardinal principle, he says, should be artists’ consent. “What kind of world are we creating, if everything, our new reality, is generated through the non-consenting use of materials? Is that the world we want to live in?” he says.

The singer Grimes recently gave her consent—to everyone. “I’ll split 50% royalties on any successful A.I.-generated song that uses my voice,” she tweeted in late April after the fake Drake and Weeknd song went viral. “Feel free to use my voice without penalty.”

It’s unclear how Grimes could enforce that open offer from a technical standpoint, but her approach hints at how the music world’s biggest pop idols may be thinking about turning deepfakes to their advantage. These stars could even develop their own A.I. imitation models and license them to make extra revenue—all without the inconvenience of having to spend time in a recording studio.

The power dynamic shifts, though, for up-and-coming performers. Here, the record labels might ask, as a condition of any record deal, that musicians consent to having their voice and music used to train A.I. models. Similar concerns about Hollywood studios turning to A.I. are a major sticking point in the Writers Guild of America strike, in which TV and movie screenwriters walked off the job in May and put many productions on pause. The dispute had yet to be resolved by press time.

Copyright’s limits

One drawback for rights holders training their own A.I. models is that A.I.-generated music isn’t itself copyrightable. In general, only the work of humans, or of groups of humans such as corporations, can be copyrighted. So anyone could copy an A.I. song and distribute it without having to pay for the rights.

Coote, the copyright attorney, says musicians and composers may be able to win some legal protection by reworking music that is initially created by A.I. software.

Absent favorable rulings in court, musicians may have to lean even more heavily into live performances for revenue. Because of relatively paltry payments from music streaming services, they’ve already had to depend more in recent years on concerts for their livelihoods and reputations. After all, it’s harder to fake it onstage in front of an arena full of adoring fans. And invariably, those fans want to go home with ticket stubs and concert T-shirts to prove they were really there.

This article appears in the June/July 2023 issue of Fortune with the headline, "Singing the A.I. blues."