Google just came out swinging against OpenAI's GPT-4o with its own Project Astra. This universal AI agent is designed to help with everyday tasks, using your phone's camera and voice recognition to respond to what you see and say.

And Google demoed Project Astra using smart glasses as well.

To be clear, Astra is coming to phones first and will be called Gemini Live, but it has the potential to move to other form factors over time. Either way, the demo shown at Google I/O 2024 was impressive.

Google says Project Astra can understand and respond to the world the way people do, taking in and remembering what it sees and hears so it can understand context and take action. You can also speak to it naturally, without noticeable lag.

The agents that power Project Astra are built on Google's Gemini model and other task-specific models. They respond faster because they continuously process video and speech input as it comes in.

During the Project Astra demo, a person held up an Android phone and kept the camera's live video open while asking a series of questions. Project Astra didn't miss a beat.

For example, when she pointed the phone's camera at a table and asked what makes sound, Astra found a computer speaker. She then circled the top part of the speaker and asked Astra what it was, and Astra correctly identified it as a tweeter.

From there, Astra came up with a creative alliteration about a bunch of crayons, explained what a section of code does when the camera was pointed at a computer monitor, and correctly identified the King's Cross area of London when the camera was pointed out the window.

Then things got really interesting. The Google employee put on a pair of smart glasses, looked at an illustration on a board and asked, "What does this remind you of?" "Schrödinger's cat," replied Astra.

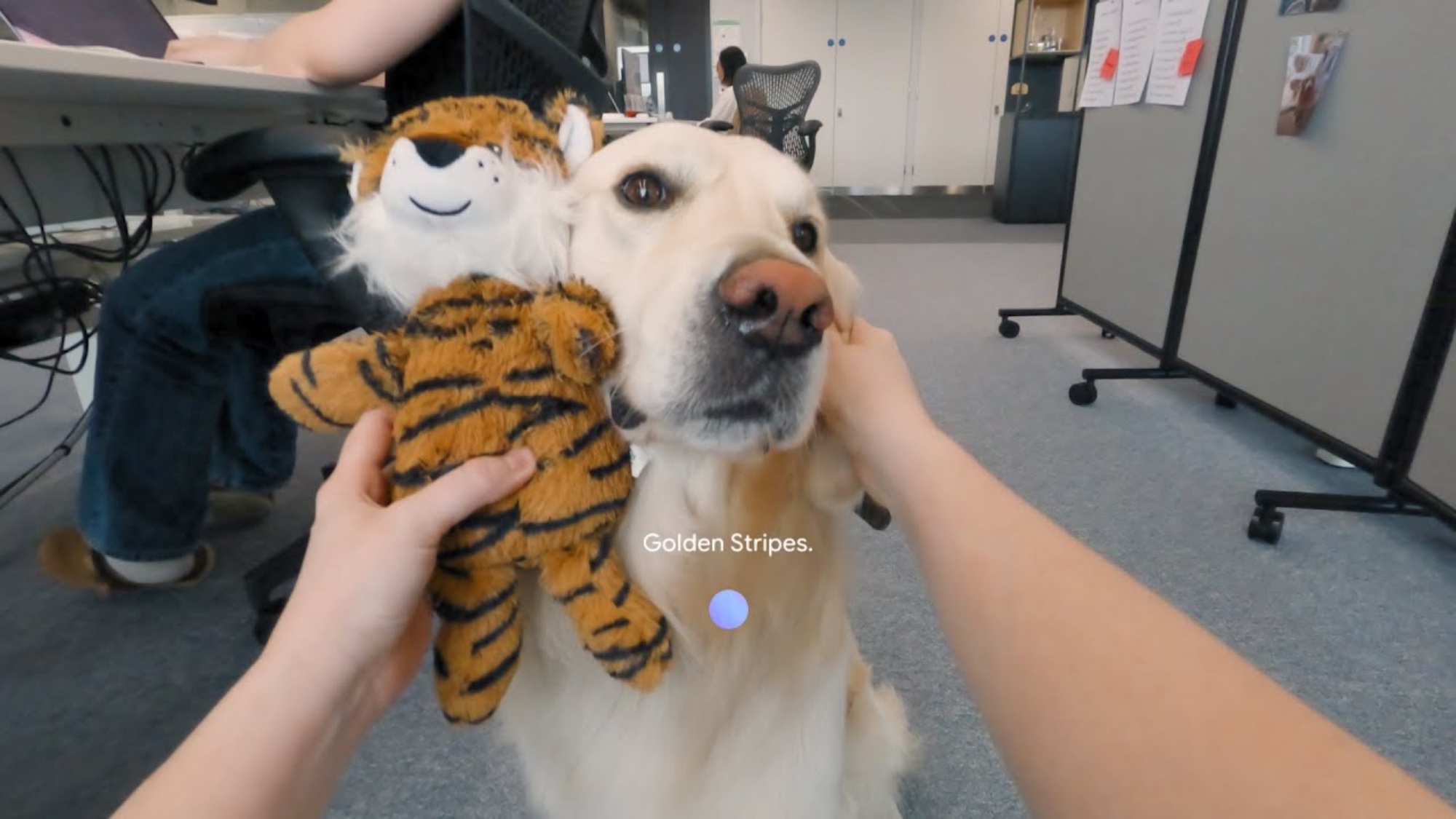

When the user held a stuffed tiger next to a golden retriever and asked Project Astra to come up with a band name on the fly, its answer was "Golden Stripes."

Overall, Project Astra's spatial understanding and video processing seem impressive, and I'm excited to see where Google takes this AI agent. It's coming to the Gemini app later this year, and we can't wait to test it out.