You have to hand it to Mark Zuckerberg. In the face of criticism about the radical strategic shift he has chosen for Facebook, he is stubbornly focused on turning it into a metaverse company. Other tech billionaires may lash out at dissent, but Zuckerberg remains stoic, tuning out the noise to give earnest interviews and presentations about his virtual-reality vision.

But while he can get away with ignoring the criticism, the CEO of Facebook parent Meta Platforms Inc. should reassess his priorities over the coming months as the U.S. approaches potentially tumultuous midterm elections. He needs to turn his attention back to Facebook, or risk letting misleading videos about election fraud proliferate, potentially disrupting the democratic process yet again.

Zuckerberg could start by doing what thousands of managers before him have done, and reprioritize his tasks.

The metaverse project is still in its infancy: While Facebook has about 3 billion active users, Horizon Worlds, the VR platform that serves as the foundation of the metaverse experience, has just 200,000, according to internal documents revealed by the Wall Street Journal.

Zuckerberg has been upfront in saying that Meta’s metaverse won’t be fully realized for five years or more. All the more reason, then, that his passion project can afford to lose his attention for a few months, or at least during critical moments for democracy.

So far he has shown no sign of shifting his focus. Facebook’s core election team no longer reports directly to Zuckerberg as it did in 2020, when he made that year’s U.S. election his top priority, according to the New York Times.

He has also loosened the reins on key executives tasked with handling election misinformation. Global affairs head Nick Clegg now divides his time between the UK and Silicon Valley, and Guy Rosen, the company’s head of information security, has relocated to Israel, a company spokesperson confirmed via email.

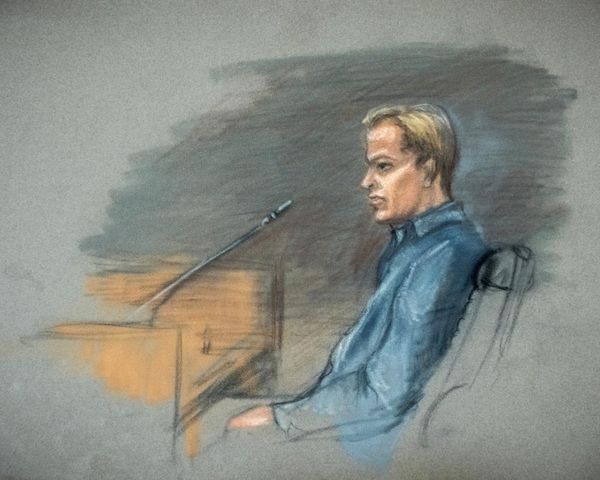

Researchers who track misinformation on social media say there is little evidence Facebook is better at stopping conspiracy theories now than it was in 2020. Melanie Smith, who heads disinformation research at the Institute for Strategic Dialogue, a London-based nonprofit, says the company hasn’t improved data access for outside researchers trying to quantify the spread of misleading posts. Anecdotally they still proliferate, she said. Smith said that she found Facebook groups recruiting election poll watchers seemingly for the purpose of intimidating voters on Election Day.

She also pointed to a video posted by Florida Rep. Matt Gaetz on his Facebook page claiming that the 2020 election had been stolen. The video had been viewed more than 40,000 times at the time of writing. Although it was posted a month ago, it doesn’t have a fact-check warning label.

Smith additionally cited recent Facebook posts, shared hundreds of times, inviting people to events to discuss how “Chinese communists” are running local elections in the U.S., or posters stating that certain politicians should “go to prison for their role in the stolen election.” Posts made by candidates tend to spread particularly far, Smith said.

Meta has said that its main approach to handling content through the 2022 midterms will be warning labels. But warning labels aren’t very effective. For more than 70% of misinformation posts on Facebook, such labels are applied two days or more after a post has gone up, well after it has had the chance to spread, according to a study conducted by Integrity Institute, a nonprofit research organization run by former employees of big tech firms. Studies have shown that misinformation gets 90% of its total engagement on social media in less than one day.

The problem, ultimately, is the way Facebook shows people content most likely to keep them on the site, what whistleblower Frances Haugen has called engagement-based ranking. A better approach would be “quality-based ranking,” similar to Google’s PageRank system that favors consistently reliable sources of information, according to Jeff Allen, a former data scientist at Meta and co-founder of the Integrity Institute.

Facebook’s growing emphasis on videos stands to make the problem worse. In September 2022, misinformation was being shared much more often via video than through regular posts on Facebook, Allen said, citing a recent study by the Integrity Institute. False content generally gets more engagement than truthful content, he added, and so tends to be favored by an engagement-based system.

In 2020, Facebook deployed “break glass” measures to counteract a surge of posts saying the election was being stolen by then-President-elect Joe Biden, which eventually fed into the storming of the U.S. Capitol on Jan. 6.

Meta shouldn’t have to resort to such drastic measures again. If Zuckerberg is serious about connecting people, and doing so responsibly, he should come out of his virtual reality bubble and re-examine the ranking system that keeps eyeballs glued to Facebook’s content. At a minimum he could communicate to his employees, and the public, that he is once again making election integrity a priority. The metaverse can wait.