Apple Intelligence, perhaps the highlight of this year's WWDC, is tightly integrated into iOS 18, iPadOS 18, and macOS Sequoia, and includes advanced generative models specialized for everyday tasks like writing, text refinement, summarizing notifications, creating images, and automating app interactions.

The system includes a 3-billion-parameter on-device language model and a larger server-based model running on Apple silicon servers via Private Cloud Compute (PCC). Apple says these foundation models, along with a coding model for Xcode and a diffusion model for visual expression, support a wide range of user and developer needs.

Apple also says it adheres to a set of Responsible AI principles: its tools should empower users, represent diverse communities, and protect privacy through on-device processing and PCC. According to the company, its models are trained on licensed and publicly available data, with filters to remove personal information and low-quality content. Apple describes a hybrid data strategy that combines human-annotated and synthetic data, and says it uses novel algorithms for post-training improvements.

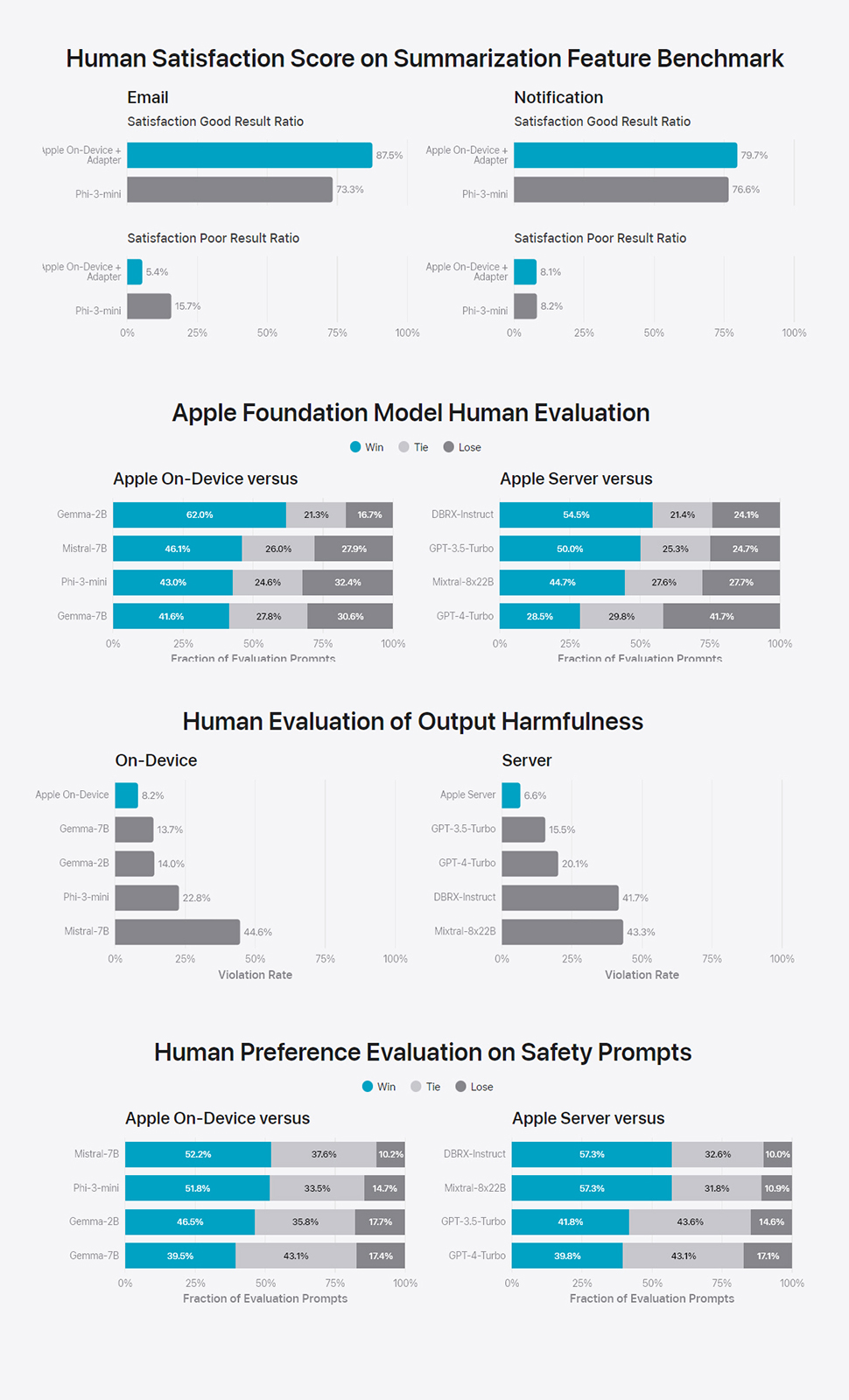

Human graders

For inference performance, Apple states it optimized its models with techniques like grouped-query attention, low-bit palletization, and dynamic adapters. The on-device model uses a vocabulary of 49K tokens, while the server model uses 100K, which adds coverage for additional languages and technical tokens. According to Apple, the on-device model achieves a generation rate of 30 tokens per second, with further gains from token speculation.
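To give a rough sense of what low-bit palletization involves, here is a minimal Python sketch. It is not Apple's implementation: the function names `palettize` and `depalettize`, the 4-bit default, and the use of a plain one-dimensional k-means are all assumptions made for illustration. The idea shown is that each weight is replaced by a small index into a shared table of values, so only the table and the indices need to be stored.

```python
def palettize(weights, bits=4, iterations=10):
    """Cluster weights into 2**bits shared values using a simple 1-D k-means.

    Hypothetical helper for illustration; requires bits >= 1.
    Returns (palette, indices), where indices[i] selects the palette
    entry that stands in for weights[i].
    """
    k = 2 ** bits
    lo, hi = min(weights), max(weights)
    # Start with evenly spaced palette entries across the weight range.
    palette = [lo + (hi - lo) * i / (k - 1) for i in range(k)]

    def nearest(w):
        # Index of the palette entry closest to weight w.
        return min(range(k), key=lambda j: abs(w - palette[j]))

    for _ in range(iterations):
        indices = [nearest(w) for w in weights]
        # Move each palette entry to the mean of the weights assigned to it.
        for j in range(k):
            members = [w for w, i in zip(weights, indices) if i == j]
            if members:
                palette[j] = sum(members) / len(members)

    return palette, [nearest(w) for w in weights]


def depalettize(palette, indices):
    """Rebuild approximate weights from the palette and the stored indices."""
    return [palette[i] for i in indices]
```

With `bits=4`, every weight is stored as a 4-bit index into a 16-entry table instead of a 16- or 32-bit float, which is where the memory saving comes from. The reconstruction is approximate whenever there are more distinct weights than palette entries.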

Adapters are small neural network modules that fine-tune a model for a specific task: the base model's parameters stay unchanged, while the adapter specializes it for a targeted feature. These adapters are loaded dynamically, which Apple says keeps memory use efficient and the system responsive.
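The adapter idea can be sketched in a few lines of Python. This is an illustrative toy, not Apple's code: the class `AdaptedLayer`, its methods, and the low-rank "down then up" form of the adapter are assumptions chosen to show the general pattern of a frozen base layer plus swappable task-specific modules.

```python
def matvec(matrix, vector):
    """Multiply a matrix (list of rows) by a vector."""
    return [sum(m * v for m, v in zip(row, vector)) for row in matrix]


class AdaptedLayer:
    """A frozen linear layer with optional, swappable adapters (hypothetical)."""

    def __init__(self, base_weights):
        self.base_weights = base_weights  # never modified after construction
        self.adapters = {}                # adapter name -> (down, up) matrices
        self.active = None                # name of the adapter in use, if any

    def register(self, name, down, up):
        # down projects the input to a small dimension; up projects it back.
        self.adapters[name] = (down, up)

    def activate(self, name):
        # Pass None to run the base layer on its own.
        if name is not None and name not in self.adapters:
            raise KeyError(f"unknown adapter: {name}")
        self.active = name

    def forward(self, x):
        y = matvec(self.base_weights, x)
        if self.active is not None:
            down, up = self.adapters[self.active]
            delta = matvec(up, matvec(down, x))
            # The adapter adds a correction on top of the base output.
            y = [a + b for a, b in zip(y, delta)]
        return y
```

Switching tasks in this sketch means calling `activate` with a different name; the base weights are shared by every task and only the small adapter matrices differ.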

Safety and helpfulness are paramount in Apple Intelligence, the Cupertino-based tech giant insists, and the company evaluates its models through human assessment, focusing on real-world prompts across various categories. The company claims its on-device model outperforms larger competitors like Phi-3-mini and Mistral-7B, while the server model rivals DBRX-Instruct and GPT-3.5-Turbo. Apple backs this up with its assertion that human graders prefer its models over established rivals in several benchmarks, some of which can be viewed below.