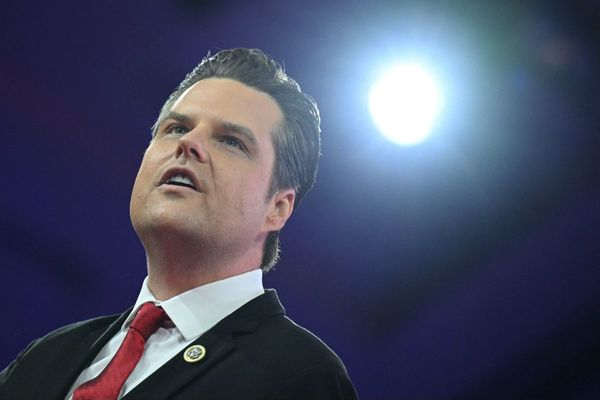

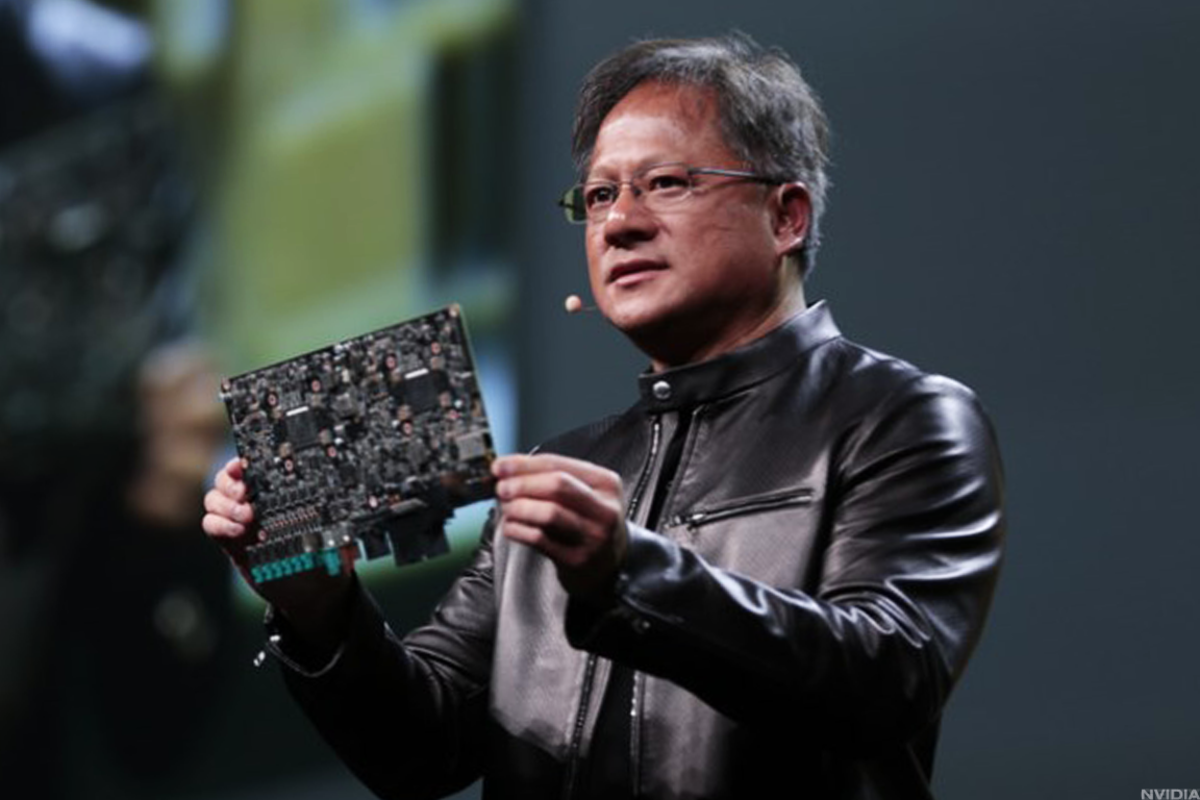

“The first thing is to remember that AI is not about a chip. AI is about an infrastructure,” Nvidia (NVDA) Chief Executive Jensen Huang recently explained.

On Sept. 11, Huang presented at Goldman Sachs’s Communacopia + Technology Conference. He discussed Nvidia’s competitiveness, the Blackwell platform, Taiwan Semiconductor, and more.

Nvidia’s stock jumped more than 8% following Huang’s speech, a significant recovery from the sharp drop following its Q2 earnings report in August.

On Aug. 28, Nvidia released its fiscal second-quarter earnings report.

For the quarter that ended July 28, the company reported adjusted earnings of 68 cents a share, more than double the year-earlier figure and surpassing the analyst consensus estimate of 64 cents. Revenue reached $30 billion, up 122% from a year earlier, exceeding the anticipated $28.7 billion.

Investors had expected even stronger growth, however, and Nvidia shares fell more than 15% within a week of the report.

Nvidia accelerates two major technology trends

Moore's Law is the observation that the number of transistors on a microchip roughly doubles every two years while costs stay relatively low. Intel co-founder Gordon Moore introduced the concept in 1965, and it has since become a key benchmark in the semiconductor industry.
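
As a rough illustration, and assuming a clean doubling every two years (an idealization, not a figure from Huang's remarks), the transistor count after a given number of years can be written as:

$$N(t) = N_0 \cdot 2^{t/2}$$

Here $N_0$ is the starting transistor count and $t$ is the elapsed time in years; both symbols are introduced only for this sketch. Under that assumption, a decade of scaling would yield $2^{5} = 32$ times as many transistors.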

Huang said Moore's Law is now over.

“We're seeing computation inflation. We have to accelerate everything we can,” Huang said. “What we want to do is take that few, call it 50, 100, 200-megawatt, data center, which is sprawling, and you densify it into a really, really small data center.”

Nvidia is tackling two major trends shaping the future of computing.

First, Nvidia is pushing a shift from CPU-based data centers to faster, GPU-accelerated computing, which is especially useful for handling large datasets.

Second, Nvidia is focusing on the rise of generative AI, which relies on computers learning from data rather than being explicitly programmed. Generative AI produces original content, including text, graphics, audio and video, from existing data.

“The days of every line of code being written by software engineers are completely over,” Huang said. “And the idea that every one of our software engineers would essentially have companion digital engineers working with them 24/7, that's the future.”

Nvidia's Taiwan Semiconductor risk is manageable

Because most of Nvidia’s chips are manufactured in Asia, investors worry that geopolitical risks could disrupt the company's deliveries and revenue.

Huang said Nvidia owns enough of the intellectual property to shift production to alternative suppliers.

He acknowledged, however, that such a switch could mean lower performance or higher costs.

“Maybe the process technology is not as great, maybe we won't be able to get the same level of performance or cost, but we will be able to provide the supply. And so I think the — in the event anything were to happen, we should be able to pick up and fab it somewhere else,” Huang said.

Huang said Nvidia's new GPUs have 35,000 parts and weigh 3,000 pounds when racked. “These GPUs are so complex. It's built like an electric car,” he said, before highlighting Taiwan Semiconductor’s (TSM) leading position in the industry.

“TSMC is the world's best by an incredible margin … the great chemistry, their agility, the fact that they could scale.”

Taiwan Semiconductor is a key chip supplier for Nvidia. Its August revenue surged by 33% to $7.8 billion, another indicator of high demand for Nvidia's AI chips.

Nvidia’s competitive moat strengthens with Blackwell chip launch

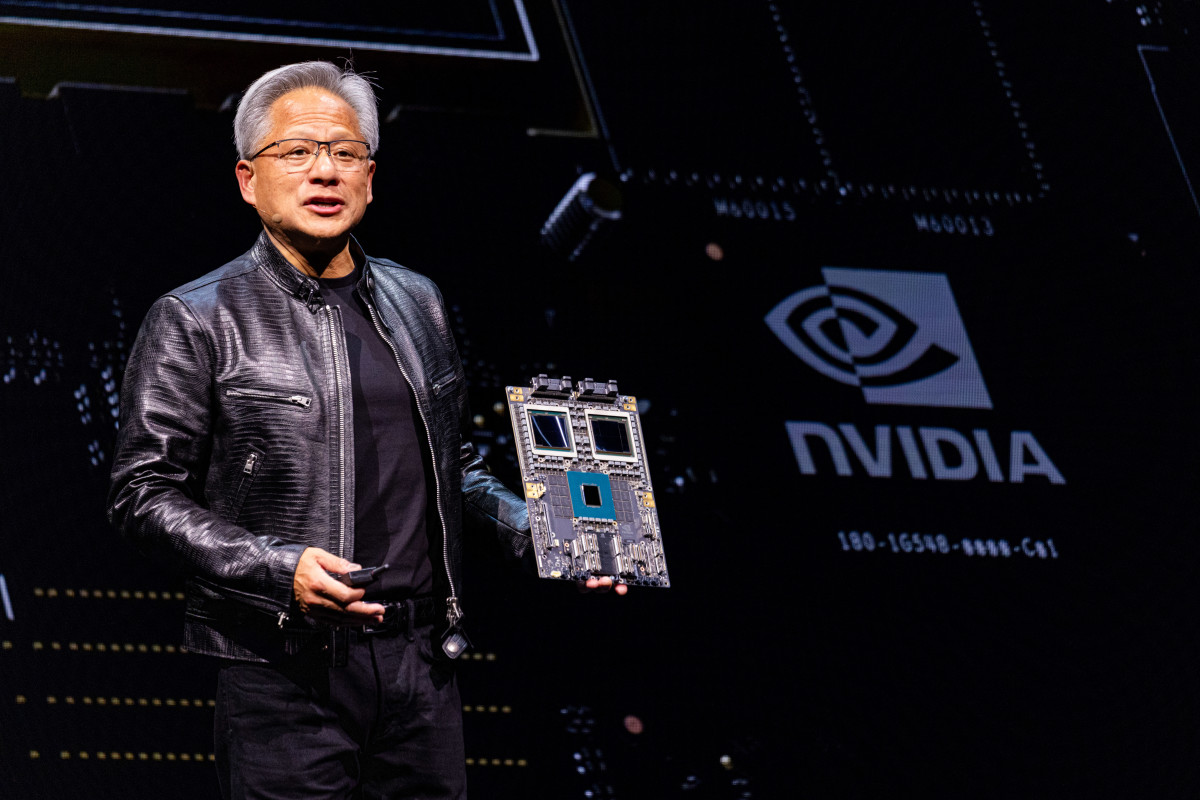

When asked about Nvidia's competitive edge, Huang pointed to the company's AI infrastructure and mentioned Blackwell.

“Today's computing is not build a chip and people come buy your chips, put it into a computer. That's really kind of 1990s. The way that computers are built today, if you look at our new Blackwell system, we designed seven different types of chips to create the system,” Huang said. He added that Nvidia now builds an entire data center as a “supercomputer.”

Blackwell is a platform Nvidia unveiled in March that enables organizations to run real-time generative AI on trillion-parameter large language models, which are trained on vast amounts of text data to understand and generate human language. Nvidia's customers include Amazon Web Services, Dell, Google, Meta, Microsoft, OpenAI, Oracle and Tesla.

In its August earnings report, Nvidia acknowledged production challenges with Blackwell and said it had to overhaul part of the manufacturing process.

Huang said Blackwell was now in full production, with shipments expected in Q4 and scaling planned through next year. He emphasized the extraordinary demand, noting that clients are eager to be first and secure the most capacity.

“Everybody wants to be first, and everybody wants to be most … so the intensity is really, really quite extraordinary,” he said. “So less sleep is fine, and three solid hours, that's all we need.”
