Nvidia released the anticipated version 0.3 update to its ChatRTX app today on its website. The update to the ChatGPT-like app adds a host of new features first teased at Nvidia's GTC conference in March, including photo search capabilities, AI-powered speech recognition, and compatibility with even more LLMs.

ChatRTX is another of Nvidia's foremost tech demonstrations of the power of AI when fueled by consumer RTX graphics cards. The program offers a ChatGPT-adjacent experience installed locally on your computer, letting you apply AI to your own data without the risk of having that data harvested for training purposes or more nefarious uses. One example of the program in practice is an enthusiast who put an RTX card into a NAS to use ChatRTX's data synthesis abilities on their data network-wide.

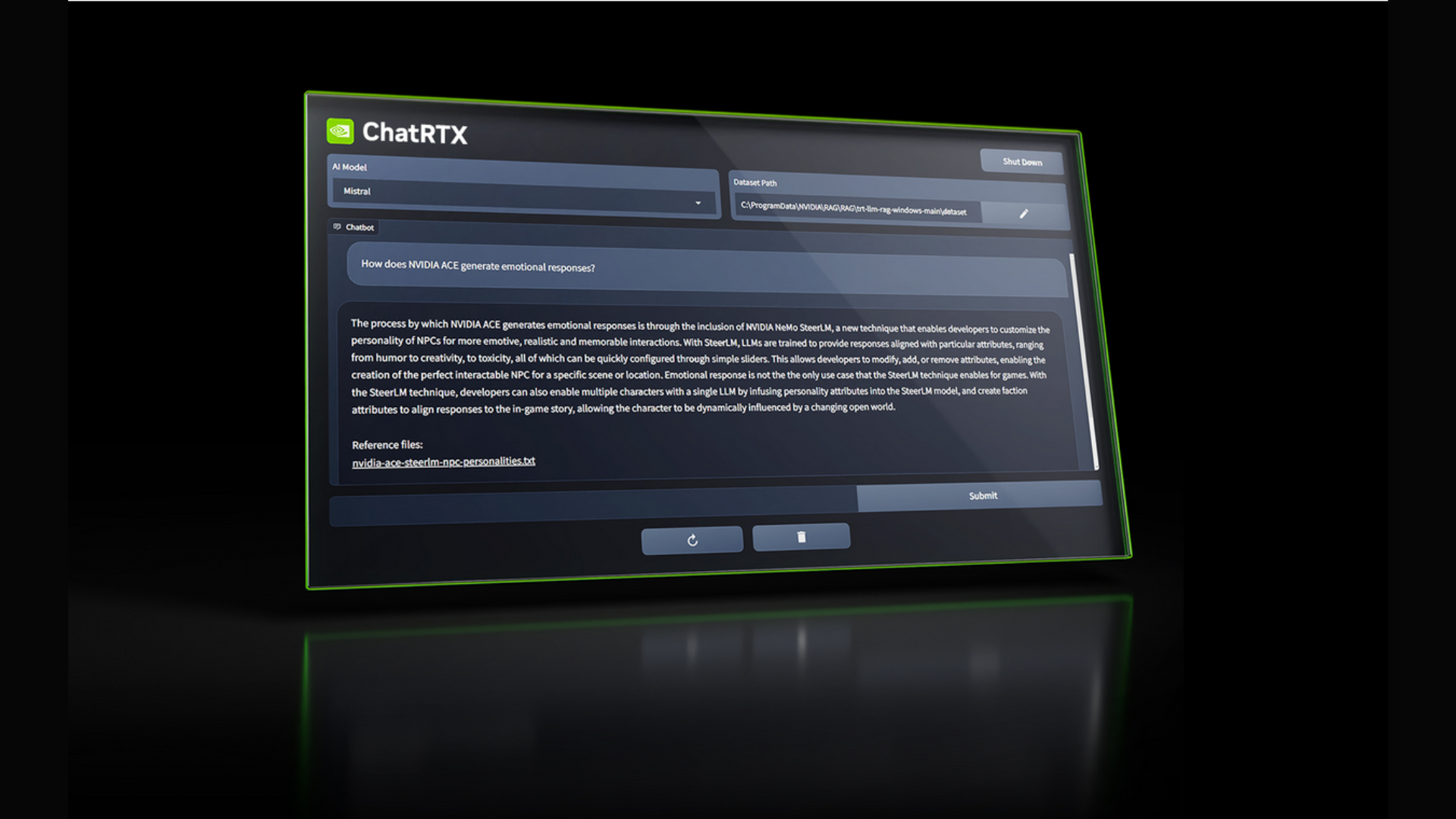

We were able to download the new version before its official release and check out some of the new features. ChatRTX has expanded its stable of supported LLMs (large language models, such as GPT-4) to include new options like Gemma, the latest LLM from Google, and ChatGLM3-6B, a new open-source option. The ability to choose your own LLM is invaluable, as different LLMs may perform the same tasks entirely differently.

ChatRTX's photo search and interaction tools have also seen a massive upgrade, with tech borrowed from OpenAI's CLIP tool allowing the program to search images without the extensive, complex metadata labeling the app previously required. Finally, ChatRTX users can now speak with their data, thanks to added support for Whisper, an AI-powered speech recognition system that enables ChatRTX to understand spoken input.
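
For readers curious how a CLIP-style search can skip metadata labels, here is a minimal sketch of the general idea: text and images are mapped into the same vector space, and the images whose vectors sit closest to the query's vector are returned. This is not ChatRTX's code. The three-number "embeddings" below are made-up stand-ins for what a real model would produce, and the function names are hypothetical.

```python
import math

def cosine_similarity(a, b):
    """Cosine of the angle between two equal-length vectors."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    return dot / (norm_a * norm_b)

def search_images(query_embedding, image_embeddings, top_k=2):
    """Return the names of the top_k images most similar to the query."""
    scored = [
        (cosine_similarity(query_embedding, embedding), name)
        for name, embedding in image_embeddings.items()
    ]
    scored.sort(reverse=True)  # highest similarity first
    return [name for _, name in scored[:top_k]]

# Toy vectors (assumption): a real CLIP model would produce these
# from the image pixels, with hundreds of dimensions.
IMAGE_EMBEDDINGS = {
    "beach.jpg": [0.9, 0.1, 0.0],
    "dog.jpg": [0.1, 0.9, 0.1],
    "city.jpg": [0.0, 0.2, 0.9],
}

# Toy stand-in for the embedding of a text query such as "sunny beach".
QUERY = [0.8, 0.2, 0.1]

best_matches = search_images(QUERY, IMAGE_EMBEDDINGS, top_k=1)
```

Because the comparison happens between vectors rather than tags, no image needs a hand-written label to be found.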

The tech demo leverages the power of Nvidia's tensor cores and the TensorRT library, so it requires an RTX GPU, and for the time being, it doesn't support the first-generation RTX 20-series either. So if you're looking to run the app yourself, you'll need an Nvidia RTX 30- or 40-series GPU with at least 8GB of VRAM. Likewise, any of the professional Ampere or Ada GPUs will also do the trick.
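
The requirements above boil down to a simple check, sketched here for illustration. The function and constant names are hypothetical, and the sketch assumes the 8GB VRAM floor also applies to professional cards, which the requirements as stated here do not spell out. It does not detect your hardware; you supply the architecture and memory size yourself.

```python
# RTX 30-series cards use the Ampere architecture and RTX 40-series cards
# use Ada Lovelace; the RTX 20-series (Turing) is not supported for now.
SUPPORTED_ARCHITECTURES = {"ampere", "ada"}
MIN_VRAM_GB = 8  # assumed to apply to professional cards as well

def meets_chatrtx_requirements(architecture, vram_gb):
    """Return True if a GPU fits the requirements described in the article."""
    return (
        architecture.lower() in SUPPORTED_ARCHITECTURES
        and vram_gb >= MIN_VRAM_GB
    )

# A 12GB RTX 30-series card passes; an 8GB RTX 20-series card does not.
ampere_ok = meets_chatrtx_requirements("Ampere", 12)
turing_ok = meets_chatrtx_requirements("Turing", 8)
```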

The program is likely to keep growing in functionality before an eventual 1.0 release, including the expected return of its YouTube link scrubbing capabilities, which were removed recently, shortly after the emergency 0.2.x updates that patched a security vulnerability.