As our conversations with ChatGPT continued to advance, it was only a matter of time until someone decided to level up NPCs using the power of artificial intelligence.

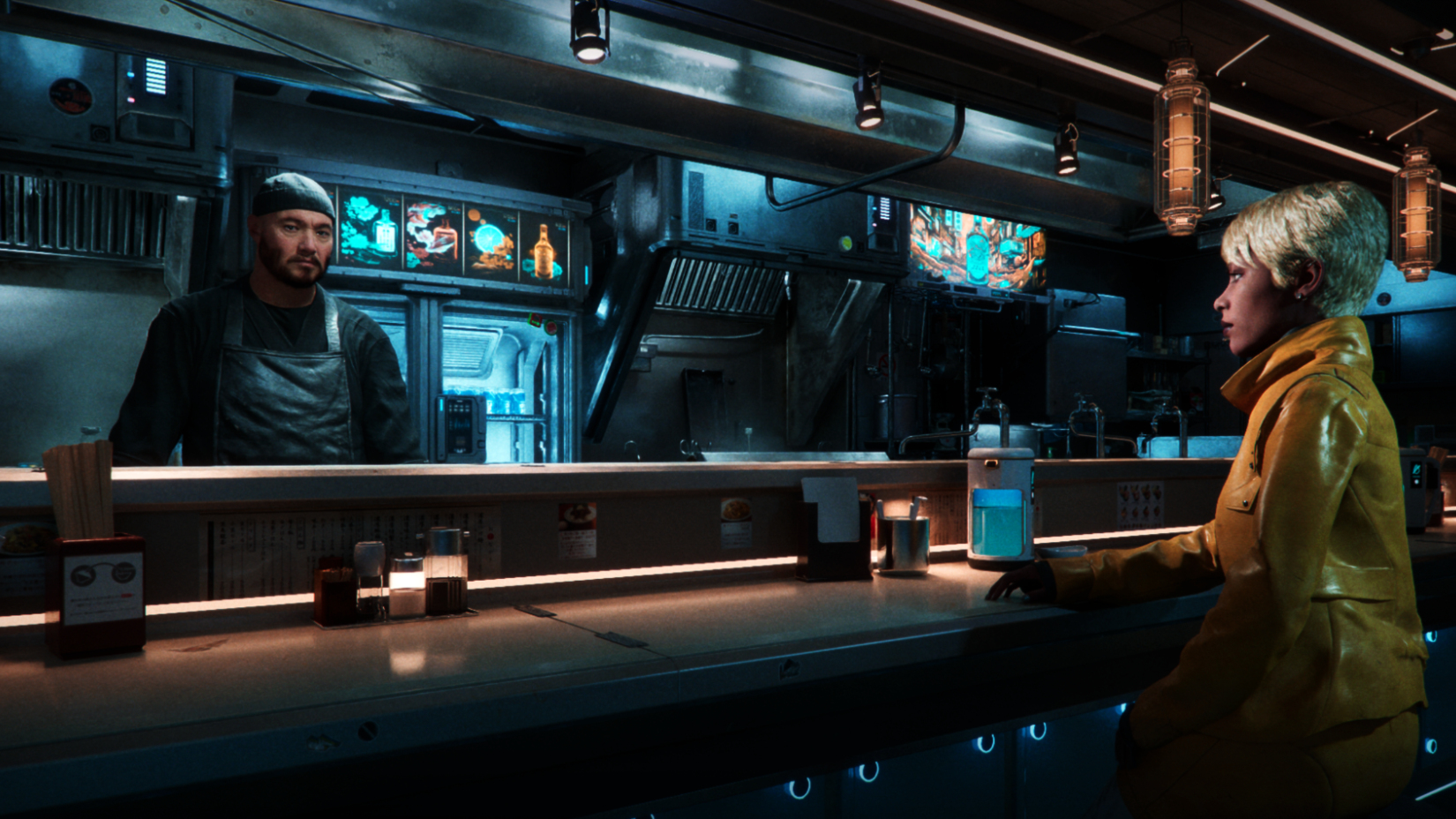

Nvidia showed off how far its generative AI platform Avatar Cloud Engine (ACE) has come along at CES 2024. In a cyberpunk bar setting, the NPC Nova responded to ACE Senior Product Manager Seth Schneider asking her how she was doing. When Schneider asked the barman Jin for a drink, the AI character promptly served him one.

The company gave us a preview of how we’ll soon be interacting with in-game characters: as if we’re having a conversation with a friend.

Others who had the opportunity to try the demo for themselves had natural conversations with Nova and Jin about different topics. They also asked Jin for some ramen and whether he could dim the lights of the bar, and Jin managed both with ease.

The platform captures a player’s speech and converts it into text, which a large language model (LLM) processes to generate the NPC’s response. The process then runs in reverse: the response is turned into speech so the player hears the game character speaking, while an animation model handles the realistic lip movements the player sees.

For this demo, Nvidia collaborated with Convai, a generative AI NPC-creation platform. Convai allows game developers to give a character a backstory and set its voice so that it can take part in interactive conversations. The latest features include real-time character-to-character interaction, scene perception, and actions.

How it works

According to Nvidia, the ACE models use a combination of local and cloud resources to transform gamer input into a dynamic character response. The models include Riva Automatic Speech Recognition (ASR) for transcribing human speech, Riva Text To Speech (TTS) for generating audible speech, Audio2Face (A2F) for generating facial expressions and lip movements, and the NeMo LLM for understanding player text and transcribed voice and generating a response.
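
To make that flow concrete, here is a minimal Python sketch of the four stages chained together. It does not use Nvidia's actual APIs: the `NpcPipeline` class and the `fake_asr`, `fake_llm`, `fake_tts` and `fake_a2f` functions are hypothetical stand-ins invented for illustration, and each one only mimics the role that Riva ASR, the NeMo LLM, Riva TTS and Audio2Face play in the description above.

```python
from dataclasses import dataclass
from typing import Callable


@dataclass
class NpcTurn:
    """Everything produced for one player utterance."""
    transcript: str
    reply_text: str
    reply_audio: bytes
    face_frames: list[str]


@dataclass
class NpcPipeline:
    """Hypothetical wrapper; each field is a pluggable stage."""
    transcribe: Callable[[bytes], str]       # speech -> text (ASR role)
    generate_reply: Callable[[str], str]     # text -> reply (LLM role)
    synthesize: Callable[[str], bytes]       # reply -> audio (TTS role)
    animate: Callable[[bytes], list[str]]    # audio -> face frames (A2F role)

    def respond(self, player_audio: bytes) -> NpcTurn:
        transcript = self.transcribe(player_audio)
        reply_text = self.generate_reply(transcript)
        reply_audio = self.synthesize(reply_text)
        face_frames = self.animate(reply_audio)
        return NpcTurn(transcript, reply_text, reply_audio, face_frames)


# Stand-in stages so the sketch runs without any real models (assumptions).
def fake_asr(audio: bytes) -> str:
    return audio.decode("utf-8")  # pretend the audio bytes are the words


def fake_llm(text: str) -> str:
    return f"You asked: {text} Coming right up."


def fake_tts(text: str) -> bytes:
    return text.encode("utf-8")  # pretend the text bytes are audio


def fake_a2f(audio: bytes) -> list[str]:
    # One placeholder frame per 10 bytes of "audio", at least one frame.
    return [f"frame-{i}" for i in range(len(audio) // 10 + 1)]


pipeline = NpcPipeline(fake_asr, fake_llm, fake_tts, fake_a2f)
turn = pipeline.respond(b"Can I get a drink?")
print(turn.reply_text)
```

Keeping each stage as a swappable function mirrors the article's point that some steps may run locally and others in the cloud; the game code calling `respond` would not need to know which is which.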

In its blog, Nvidia said that open-ended conversations with NPCs open up a world of possibilities for interactivity in games. However, it believes that such conversations should have consequences that can lead to actions. This means that NPCs need to be aware of the world around them.

The latest collaboration between Nvidia and Convai led to the creation of new features including character spatial awareness, characters being able to take action based on a conversation, and game characters with the ability to have non-scripted conversations without any interaction from a gamer.
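
As a rough illustration of a character acting on a conversation, the Python sketch below turns a player's request into a change in the game world, using the ramen and dimmed-lights requests from the demo. Everything here is an assumption made for illustration: the `Bar` class, `choose_action` and `handle_request` are hypothetical names, and the simple keyword matching stands in for whatever model picks the action in the real system, which the article does not describe.

```python
from typing import Optional


class Bar:
    """Hypothetical game-world state the NPC is allowed to change."""

    def __init__(self) -> None:
        self.light_level = 1.0          # 1.0 = fully lit
        self.served: list[str] = []

    def dim_lights(self) -> None:
        self.light_level = 0.3

    def serve(self, item: str) -> None:
        self.served.append(item)


def choose_action(utterance: str) -> Optional[tuple[str, str]]:
    """Map a player request to (action, argument), or None for small talk.

    Keyword matching is a placeholder for a model-driven decision.
    """
    text = utterance.lower()
    if "dim" in text and "light" in text:
        return ("dim_lights", "")
    for item in ("ramen", "drink"):
        if item in text:
            return ("serve", item)
    return None


def handle_request(bar: Bar, utterance: str) -> bool:
    """Apply the chosen action to the world; return True if one was taken."""
    action = choose_action(utterance)
    if action is None:
        return False
    name, argument = action
    if name == "dim_lights":
        bar.dim_lights()
    elif name == "serve":
        bar.serve(argument)
    return True


bar = Bar()
handle_request(bar, "Could I get some ramen?")
handle_request(bar, "Can you dim the lights a little?")
acted_on_small_talk = handle_request(bar, "How are you doing tonight?")
print(bar.served, bar.light_level, acted_on_small_talk)
```

The point of the separation is that the conversation layer only names an action, while the game decides what that action does to the scene.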

Nvidia said it’s creating digital avatars that use ACE technologies in collaboration with top game developers including Charisma.AI and NetEase Games, the latter of which has already invested $97 million to create its AI-powered massively multiplayer online (MMO) game Justice Mobile.
