Nvidia has announced its latest GPU architecture, Blackwell, and it's filled with upgrades galore for AI inference and a few hints at what might be in store for next-gen gaming graphics cards. In the same breath as its announcement at GTC, major tech companies were also announcing the many thousands of systems they've just snapped up with Blackwell on board.

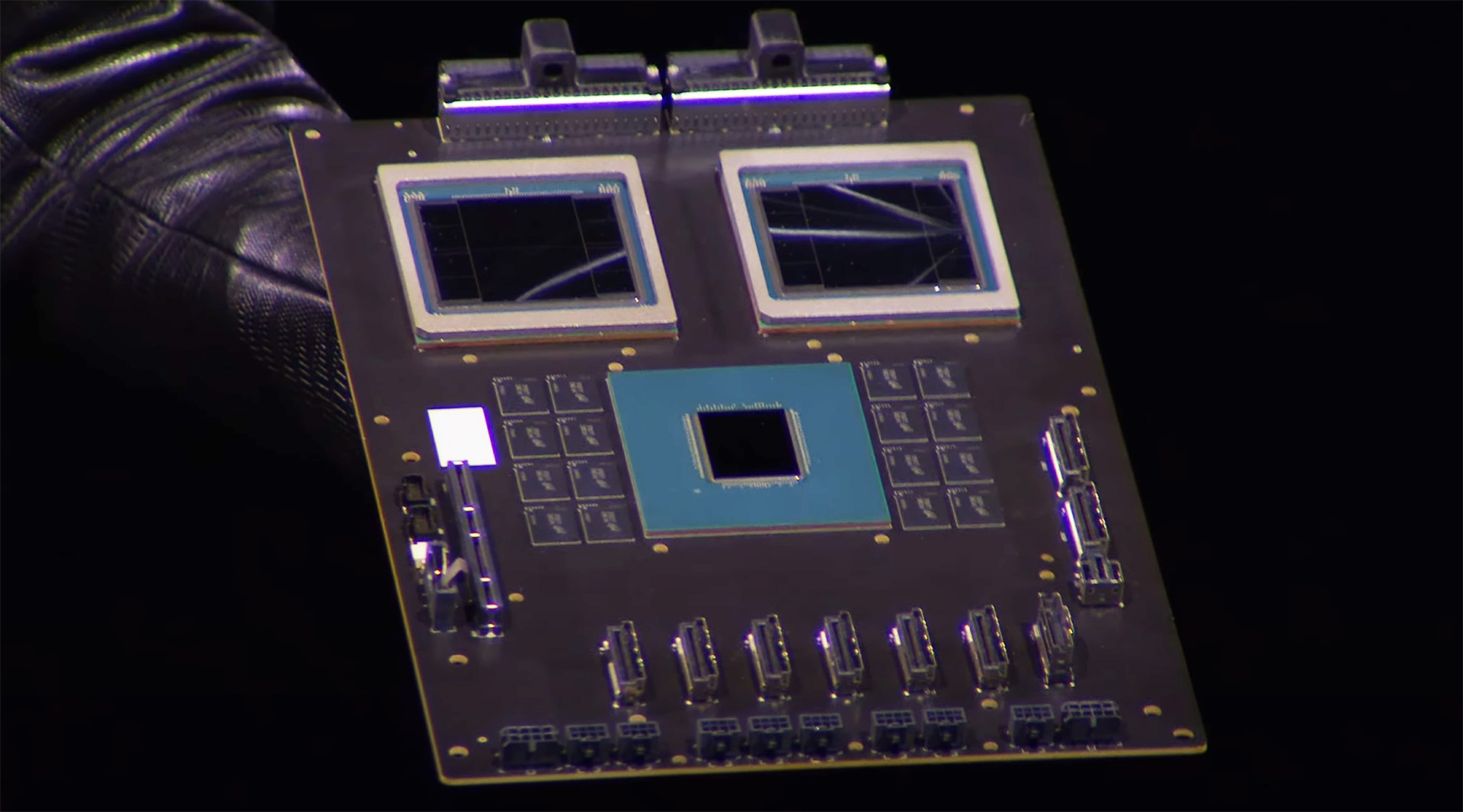

AWS, Amazon's datacenter arm, announced it's bringing Nvidia's Grace Blackwell Superchips (two Blackwell GPUs and a Grace CPU incorporated on a single board) to EC2, which is effectively on-demand compute in the cloud. It's also already agreed to bring 20,736 GB200 Superchips (that's 41,472 Blackwell GPUs in total) to Project Ceiba, an AI supercomputer on AWS used by Nvidia for its own in-house AI R&D. Sure, that's Nvidia buying its own product in a roundabout way, but there are other instances that show just how big demand is for these sorts of chips right now.

Google says it's jumping on the Blackwell train. It will offer Blackwell on its cloud services, including GB200 NVL72 systems, each one made up of 72 Blackwell GPUs and 36 CPUs. Flush with cash, eh Google? Though we don't know how many Blackwell GPUs Google has signed up for just yet, with the company racing to be competitive with OpenAI in AI systems, it's probably quite a lot.

Oracle, of Java fame to folks of a certain age or more recently of Oracle Cloud fame, has put a number on the Blackwell GPUs it's buying from Nvidia: 20,000 GB200 Superchips will be headed Oracle's way, at first. That's 40,000 Blackwell GPUs in total. Some of Oracle's order will be devoted to Oracle's OCI Supercluster or OCI Compute, two lumps of superconnected silicon for AI workloads.

Microsoft is playing coy with the exact number of Blackwell chips it's buying, but it's throwing heaps of cash behind OpenAI and its own AI efforts on Windows (to a mixed response), so I expect big money to be changing hands here. Microsoft is bringing Blackwell to Azure, though we've no exact timelines.

That's the thing: we don't have all the details about Blackwell's rollout or availability. What we do know is that Nvidia sold heaps of them right as the announcement for the architecture dropped. The exact launch times and even the specifications for the chips are still up in the air. That's more usual for enterprise chips such as these, but we don't even have the white paper in our hands and tens of thousands of GPUs (if not hundreds of thousands once you include other companies cited in Nvidia's GTC announcement, including Meta, OpenAI, and xAI) have already been sold to the highest bidder.

This level of demand for Nvidia's chips isn't at all surprising: it's fueled by the scramble for AI compute. To take just one example of many, Meta announced earlier in the year that it aims to have 350,000 H100s by the end of the year, each of which could cost as much as $40,000 by some estimates. I do wonder how Blackwell and the B200 will factor into Meta's plans, though. It will take some time for Nvidia to ramp up to full production for Blackwell, and even longer to satiate the needs of the exponentially growing AI market, and it's likely ownership of H100s will remain the marker of whether you're a big fish in AI or not for a little while longer.