When you finish reading this sentence, look away from the screen for a moment and repeat it back in your head. Do you know exactly where in your brain this inner “voice” is speaking from?

Researchers have tried to map out the regions of the brain responsible for this “inner monologue” for years. One promising candidate is an area called the supramarginal gyrus, which sits a little north of your eyeballs and slightly behind your ears.

What’s new — According to new research presented at the recent Society for Neuroscience conference, the supramarginal gyrus could help scientists translate people’s inner thoughts.

“We’re all pretty excited about this work,” says Sarah Wandelt, a graduate researcher at the California Institute of Technology and lead author of the study, which is published on the pre-print server medRxiv and has not been peer-reviewed.

Along with other recent advances in this field, this research could eventually help people who can hear and think but who have lost the ability to speak, like those with advanced Lou Gehrig’s disease. “It’s cool to think it might help those who are in the most locked-in states,” Wandelt says.

Here’s the background — The supramarginal gyrus is mainly known for its role in helping people handle tools. So when Wandelt’s team first placed their implant in the brain of their participant (a man known as “FG,” who is paralyzed from the neck down but able to speak), they were initially looking for a way to help paralyzed patients grasp objects.

But they noticed the area was also lighting up when FG read words on a screen. That’s when the researchers decided to change course and see where the hunch would lead, Wandelt explains.

What they did — Wandelt’s team then set off to capture this “inner speech” — which the study defines as “engaging a prompted word internally” without moving any muscles.

The scientists presented FG with a word, asked him to “say” the word in his head, and then had him speak the word out loud. At every stage, the electrodes in FG’s brain recorded neural activity, and the researchers used those recordings to train machine-learning algorithms to recognize the activity that corresponds to each word.

The team ran through a total of six words in English and Spanish, in addition to two made-up, “nonsense” words. When they asked FG to think of the words again, the computer could identify which word he was thinking of with up to 91 percent accuracy.
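To make the train-then-predict loop concrete, here is a minimal sketch in Python. It is not the study's method: the article does not say which features or classifier the team used, so a simple nearest-centroid classifier stands in for illustration. The "recordings" are synthetic random vectors, and the labels `word_0` to `word_7`, the feature count, and the noise level are all hypothetical.

```python
import random

def centroid(trials):
    """Average a list of equal-length feature vectors, element by element."""
    n = len(trials)
    return [sum(column) / n for column in zip(*trials)]

def squared_distance(a, b):
    """Squared Euclidean distance between two feature vectors."""
    return sum((x - y) ** 2 for x, y in zip(a, b))

def train(trials_by_word):
    """Learn one average activity pattern (centroid) per word."""
    return {word: centroid(trials) for word, trials in trials_by_word.items()}

def predict(centroids, features):
    """Return the word whose learned pattern is closest to a new recording."""
    return min(centroids, key=lambda w: squared_distance(centroids[w], features))

def simulate(num_words=8, num_features=16, trials_per_word=10, noise=0.3, seed=0):
    """Train and test on synthetic data; every number here is an assumption."""
    rng = random.Random(seed)
    words = [f"word_{i}" for i in range(num_words)]  # placeholder labels
    # Each word gets a made-up "true" activity pattern.
    prototypes = {w: [rng.gauss(0, 1) for _ in range(num_features)] for w in words}

    def sample(word):
        # One noisy synthetic recording of the given word.
        return [p + rng.gauss(0, noise) for p in prototypes[word]]

    training = {w: [sample(w) for _ in range(trials_per_word)] for w in words}
    centroids = train(training)
    # Fresh recordings, not seen during training.
    held_out = [(w, sample(w)) for w in words for _ in range(trials_per_word)]
    correct = sum(predict(centroids, x) == w for w, x in held_out)
    return correct / len(held_out)

accuracy = simulate()
print(f"held-out accuracy on synthetic data: {accuracy:.2f}")
```

Real neural recordings are far noisier than these toy vectors, so the accuracy printed here says nothing about how well an actual implant would perform.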

Because speech is a complex process that involves multiple areas of the brain, Wandelt’s strategy isn’t the only way to tackle the translation problem. “I think there are a lot of viable strategies, and it's cool that a lot of different groups are trying different things,” says David Moses, a postdoctoral researcher at the University of California San Francisco who wasn’t involved in the new study.

Moses’s team measures activity in the sensorimotor cortex to capture the signals being sent to the vocal muscles. Rather than decoding inner speech as the Caltech team does, this approach predicts the words the patient is trying to say out loud.

In 2019 and 2020, Moses’s team trained deep-learning models to recognize brain activity corresponding to 50 words in one patient. While their program handled a larger vocabulary than Caltech’s, Moses notes that the Caltech system needed impressively little training data, about 24 seconds per word. “This was very fast training,” he says.

As the number of possible word choices grows, the odds that the computer will pick the right one shrink. This means that scaling the program up to handle a larger vocabulary will be challenging. The researchers also need to make sure they can reproduce their results in other subjects. This is a difficult task because everyone’s brain is unique, and it’s tough to place the implant in precisely the same spot in different people.
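The shrinking odds are easy to put numbers on. The sketch below assumes the simplest possible baseline, a decoder that guesses uniformly at random, and it assumes the Caltech vocabulary amounts to eight choices (six words plus two nonsense words). The 1,000-word case is purely hypothetical.

```python
def chance_accuracy(vocabulary_size):
    """Probability that a uniform random guess picks the right word."""
    if vocabulary_size < 1:
        raise ValueError("vocabulary_size must be at least 1")
    return 1.0 / vocabulary_size

# 8: assumed size of the Caltech word set; 50: the UCSF vocabulary;
# 1000: a hypothetical larger vocabulary, for comparison only.
for size in (8, 50, 1000):
    print(f"{size} words: chance = {chance_accuracy(size):.1%}")
```

Under these assumptions, a random guess is right 12.5 percent of the time with eight words but only 2 percent of the time with 50, so a decoder has to extract much more information from the same signals just to hold its accuracy steady.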

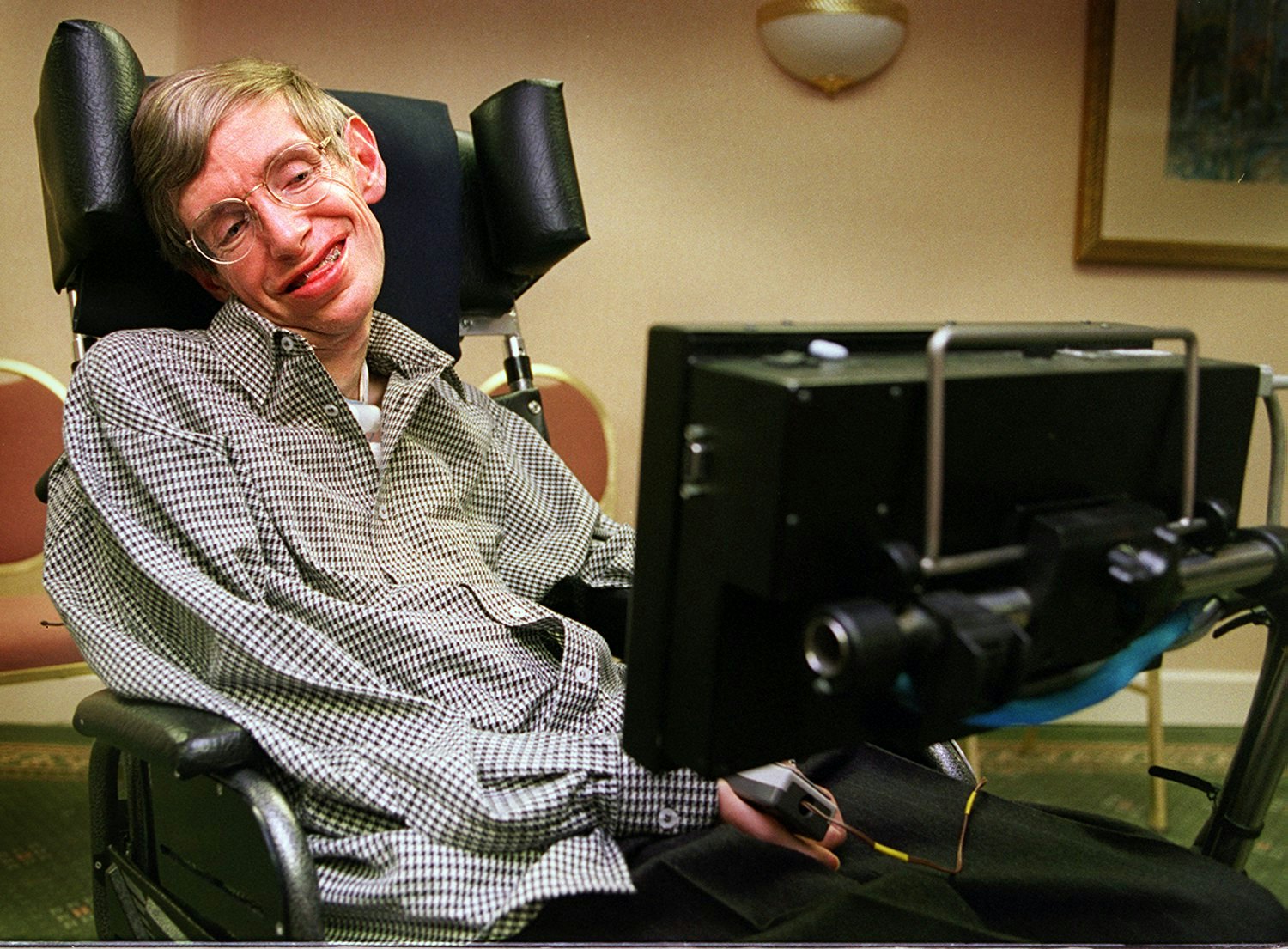

Why it matters — The holy grail for researchers like Moses and Wandelt is to translate full sentences as close to real-time as possible. For those who have lost the ability to communicate verbally, this would better reflect the experience of speaking compared to other assistive methods that need extra steps, like the device used by Stephen Hawking — he operated it by moving his cheek muscles to type words.

What’s next — Instruments like fMRI can’t take measurements fast enough to catch the kinds of signals that can be translated into speech, so researchers have to rely on risky and highly invasive surgeries to install the brain implants that can gather this data. But wireless brain implants are currently in the works, and they could give patients a bit more freedom to move around when using the device, rather than staying hooked up to a computer.

In recent years, private industry has become more interested in technology that can help non-verbal patients communicate, Moses says. Companies like Neuralink, Kernel, and Synchron are developing their own implants and high-tech devices, like a helmet that can read brain activity using near-infrared light.

You may be wondering: What if this system could one day divulge our most intimate inner conversations? The concept certainly brings to mind a hypothetical dystopia in which the government or tech companies can read our minds without our consent. But the researchers stress that their techniques can’t capture what most people consider their private thoughts.

In fact, FG had to focus intensely on each word to complete the experiment — before they could measure his internal speech, the researchers and FG had to make sure they agreed on what “inner speech” actually was. “This is quite hard to study because it relies on people having to report something about themselves,” says Wandelt. “Everyone has a different way of doing it.”

For some people, the experiment wouldn’t capture their natural inner monologue, which might consist more of visual images or quick soundbites. Instead, Wandelt and colleagues could only pick up on words slowly and one at a time. “I think if the patient was just randomly thinking about stuff it wouldn't work at all,” says Wandelt.

So a technique that can detect inner speech doesn’t count as “mind-reading,” says Moses, but “it does get one step closer.”