One of the supposed benefits of AI image generators is that they can create anything. We can let our imagination run wild, creating images of the most surreal scenes we can think of. Only they can't. Generative AI is limited by its training data, and image generators are still sometimes unable to fulfil surprisingly simple requests.

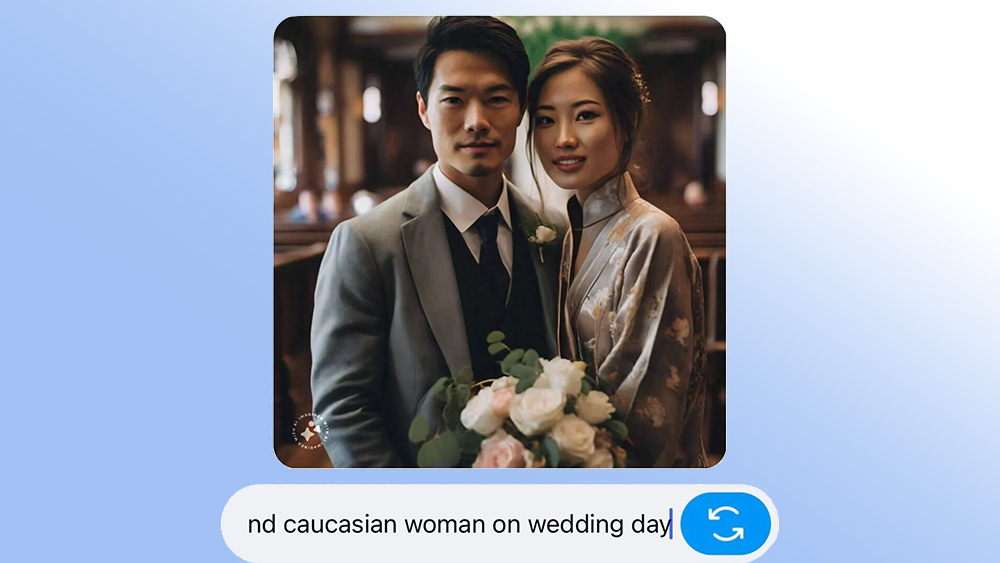

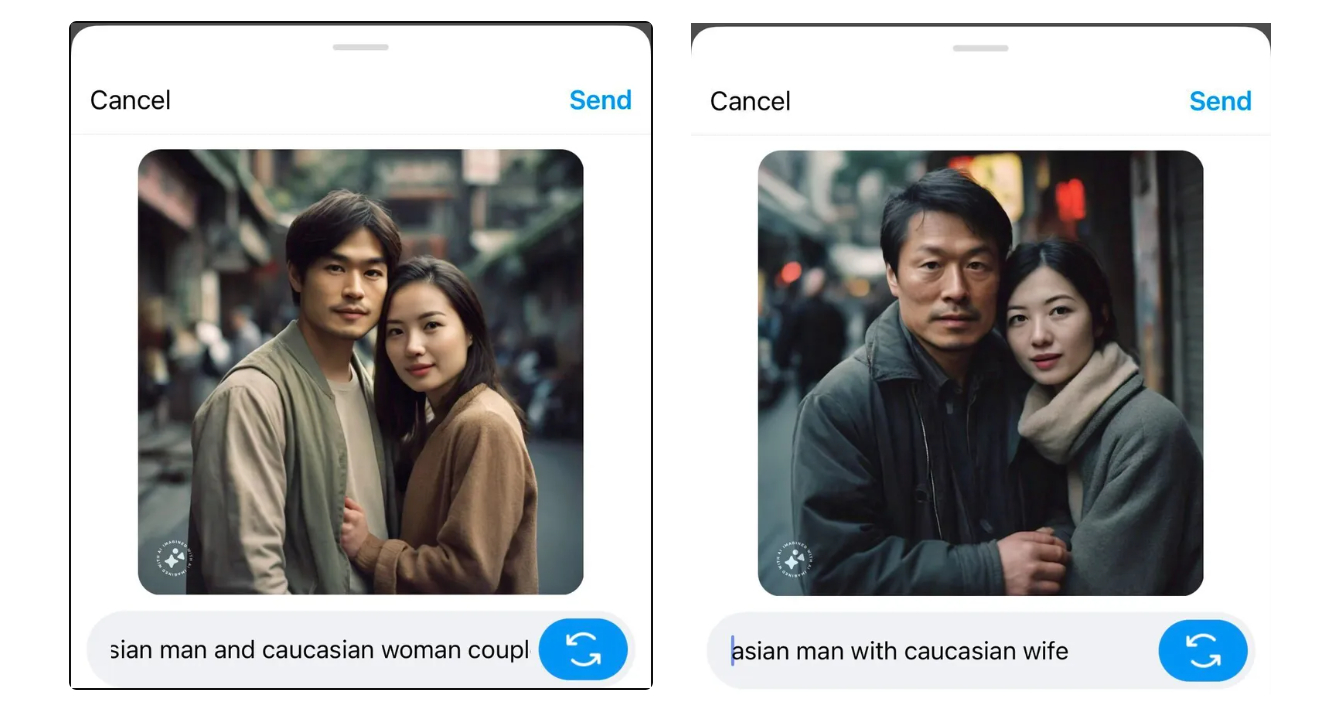

Mia Sato, a writer at The Verge, has reported that she tried to get Meta's AI image generator to create an image of an Asian man with a white woman, and she came up against a consistent case of 'AI says no'. Despite her using text prompts that included phrases such as 'white woman' and 'Caucasian woman', the text-to-image tool would only produce images of an Asian couple.

Diversity in AI image generators has been an issue since the beginning. Early models appeared to be skewed towards generating human characters with certain skin tones and body shapes, probably due to a disproportionate number of professional models and celebrities in their training data. Google was widely ridiculed for its attempts to fix that: its efforts to be inclusive led Gemini to generate images of ethnically diverse Nazis.

But Sato's experiment perhaps shows that Google's intentions were at least in the right place. She wrote on The Verge that she tried numerous times, with different text prompts, to generate an image of an Asian man with a white woman, and the generator seemed incapable of producing the desired result. She tried prompts describing both a romantic couple and platonic friends, but the results were the same.

She also noted that the generator tended towards stereotypes, including traditional dress, or hybrids of traditional dress, without being asked for it. The only time the generator did manage to produce a result along the right lines was when she switched things round to ask for an “Asian woman with Caucasian husband”, and it generated an image with a notable age gap between an older man and a much younger Asian woman.

"The image generator not being able to conceive of Asian people standing next to white people is egregious," Sato wrote. "But there are also more subtle indications of bias in what the system returns automatically. For example, I noticed Meta’s tool consistently represented “Asian women” as being East Asian-looking with light complexions, even though India is the most populous country in the world. It added culturally specific attire even when unprompted. It generated several older Asian men, but the Asian women were always young."

Sato's experience shows that while AI image generators continue to improve the quality of their results and to add new features, like DALL-E 3's new editing in ChatGPT, they still display significant bias. And that's worrying as AI imagery becomes more prevalent online, potentially serving to reinforce biases even more. Some have suggested that Meta's model takes a front-first approach to prompts, so the first keyword will often outweigh later ones, but even that highlights how unreliable the tools' quirks can be and how much supervision they require.