For a long while, I've had my eye on an upgrade for my gaming PC, specifically in the GPU department - I'll be honest, I'm obsessed with attaining that perfect powerful system to provide consistent high frame rates.

While using an RTX 3080 Ti (which, make no mistake, is still an absolute powerhouse), at the 3440x1440 resolution on Dell's Alienware AW3423DWF, I found that it couldn't quite push its way through highly demanding AAA titles like Cyberpunk 2077 (especially with ray tracing enabled).

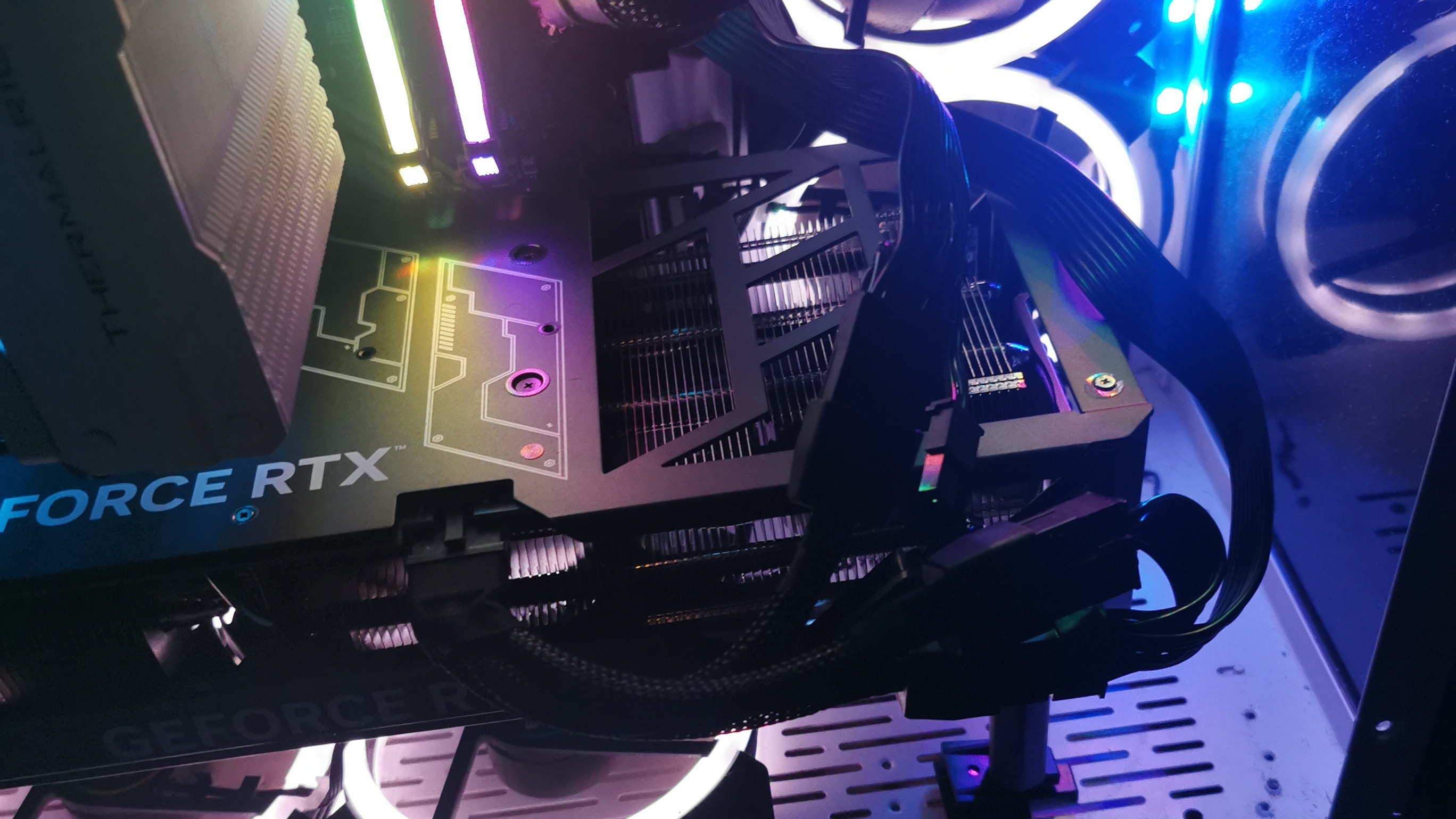

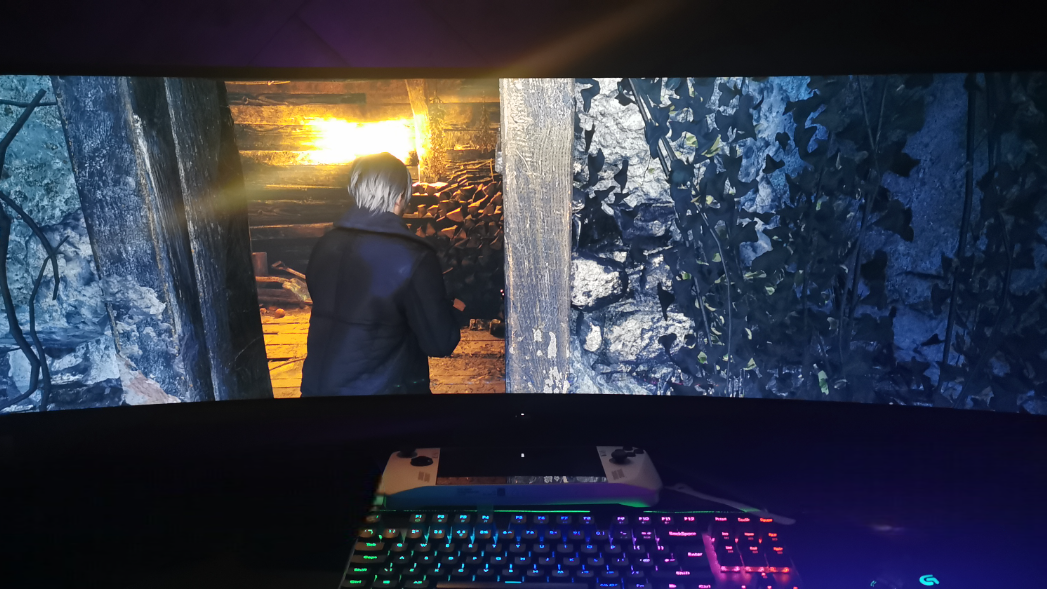

Fortunately, the lovely folks at Nvidia and Asus reached out to provide not only the ROG Swift PG49WCD OLED super ultrawide monitor ($1,199 / £1,399 / around AU$2,699) but also the TUF RTX 4080 Super OC Edition GPU for testing - if you came here for a quick answer on this specific GPU upgrade path, I can tell you without a doubt, it's worth it.

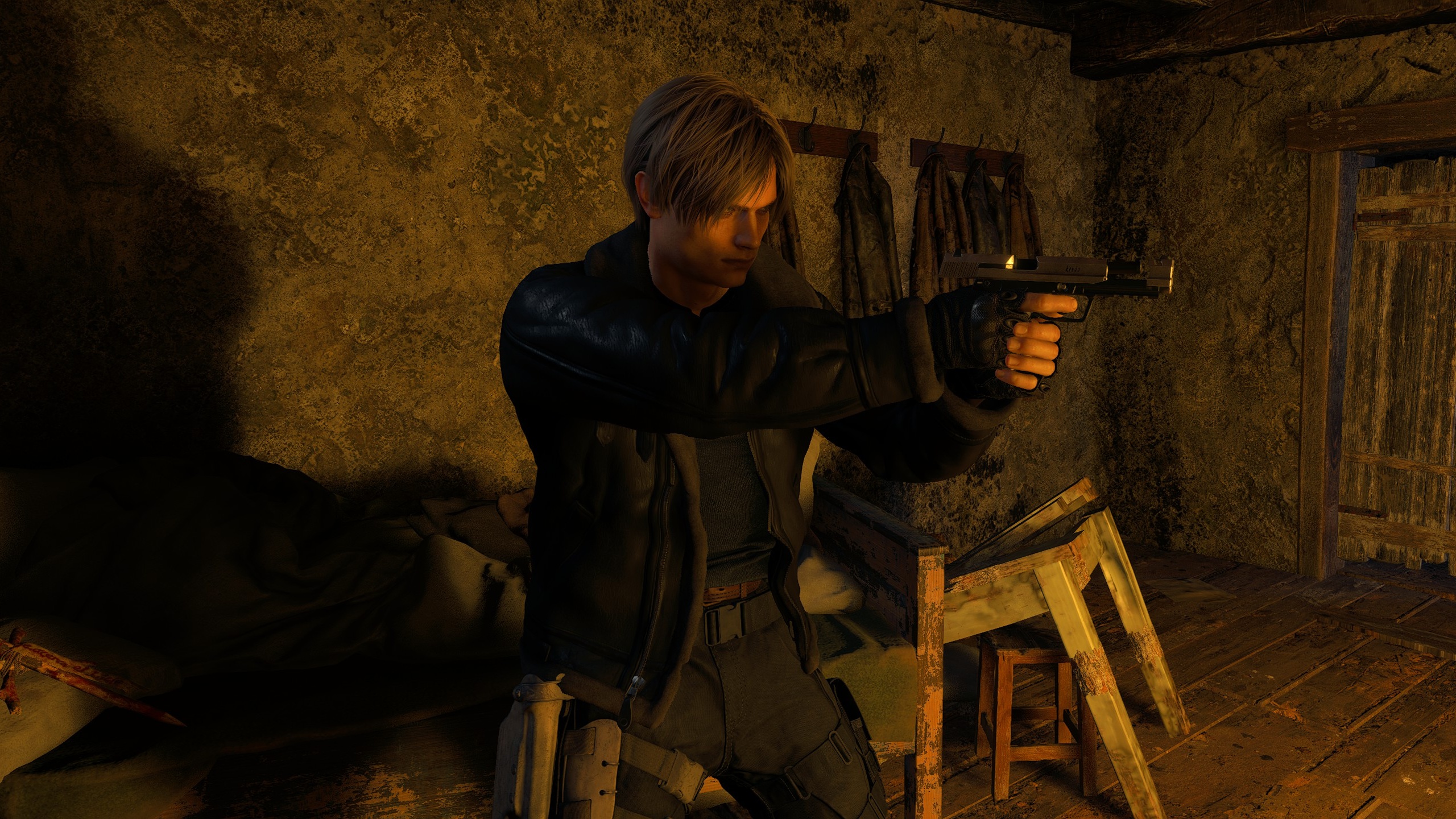

The RTX 4080 Super storms through the 3440x1440 resolution on maximum settings, and does the same with the PG49WCD's 5120x1440 resolution (especially with DLSS 3). This comes after tests in multiple games, most notably - you guessed it - the incredible Resident Evil 4 remake.

While Capcom's 2023 hit isn't necessarily a demanding title, I've always wanted to crank graphics settings to the max without worrying about the heavy performance hit I'd see with the 3080 Ti - and the RTX 4080 Super has allowed me to do just that.

What makes the jump from the RTX 3080 Ti to the 4080 Super worthwhile?

It's entirely possible to stick with Nvidia's high-end RTX 3000 series GPUs at 3440x1440, 5120x1440, or even 4K, but the problem you might run into is the need to lower your in-game graphics settings. This is especially the case since so many recent PC titles are poorly optimized (I'll never shut up about this), and the highly beneficial Frame Generation feature of DLSS 3 is only available to RTX 4000 series users. Even if you're faced with poor performance, Team Green's Frame Generation can work wonders by literally generating extra frames for higher frame rates (despite the slightly increased latency this causes).

The frame rate would wildly fluctuate between around 60 and 100 frames per second in the Resident Evil 4 remake at the 3440x1440 resolution with the 3080 Ti when using the 'High (6GB)' texture quality setting with ray tracing enabled. It didn't help that there's actually no DLSS support for the game, and enabling FSR 2 on an RTX 4000 series GPU just doesn't feel right - especially given how much better DLSS is when it comes to preserving image quality.

Testing with the RTX 4080 Super

Whilst those performance results aren't bad, you could find yourself dealing with constant frame rate dips (also depending on your CPU), which can be incredibly frustrating. I also must mention that the frame rate in the Resident Evil 4 remake specifically can fly all over the place as you enter new locations - most of my testing was done during the iconic village onslaught near the beginning of the game, which is where most of the dips occurred (likely due to the numerous enemies, destructible objects, and corpses).

In came the RTX 4080 Super to my aid! Even without access to DLSS, it outperformed the RTX 3080 Ti comfortably, keeping a consistent frame rate above 99fps at 5120x1440 using the same 'High (6GB)' texture quality and ray tracing enabled. I didn't manage to test the RTX 3080 Ti at the 5120x1440 ultrawide resolution, but the results at 3440x1440 should give you a strong indication of how it may struggle with the PG49WCD monitor's 5120x1440 panel.
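To put that resolution gap into numbers, here's a rough sketch comparing how many pixels each display asks the GPU to render per frame. Pixel count is only a loose proxy for GPU load (frame rates rarely scale linearly with it), and the function names here are purely illustrative.

```python
def pixel_count(width: int, height: int) -> int:
    """Total pixels rendered per frame at a given resolution."""
    return width * height


def relative_load(target: int, baseline: int) -> float:
    """How many times more pixels the target has compared to the baseline."""
    return target / baseline


# Resolutions mentioned in this article
ultrawide = pixel_count(3440, 1440)        # Alienware AW3423DWF
super_ultrawide = pixel_count(5120, 1440)  # Asus ROG Swift PG49WCD
uhd_4k = pixel_count(3840, 2160)           # standard 4K, for reference

for name, pixels in [
    ("3440x1440", ultrawide),
    ("5120x1440", super_ultrawide),
    ("3840x2160", uhd_4k),
]:
    extra = (relative_load(pixels, ultrawide) - 1) * 100
    print(f"{name}: {pixels:,} pixels ({extra:.0f}% more than 3440x1440)")
```

By this measure, the PG49WCD's panel has roughly half as many pixels again as the 3440x1440 display, which is why the 3080 Ti's dips at the lower resolution are a useful warning sign.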

Besides the remake, I gave the 4080 Super a run for its money, testing Cyberpunk 2077 at maximum settings using DLSS 3 in 'Quality' mode, and this easily sat above 100fps - setting the 'Overdrive' ray tracing mode on (a ludicrous setting that even the RTX 4090 struggles with) dropped this down to about 30 to 55fps. When compared to the 3080 Ti's 20 to 30fps, the difference is clear to see when both GPUs are put under heavy load in one of the most demanding games available.

Downsides of the RTX 4080 Super

If there's one gripe I have with the RTX 4080 Super itself (and the other high-end RTX 4000 cards, too), it's the power cable adapter that comes with the GPU. Intent on closing your PC's side panel? If you're using the included adapter, forget about it - not without squashing the power cable to a ridiculous degree. Considering previous stories of RTX 4090s burning due to power connectors not being fully inserted, I was never going to risk flexing a stiff cable adapter just to secure my case's side panel, ultimately putting my system at risk.

Purchasing a Seasonic 12VHPWR power cable served as a better solution (if, of course, you have a compatible Seasonic PSU), providing a far more convenient case setup for me.

Besides this, the RTX 4080 Super impressed me by taking games that would chug along on my previous setup and in many cases literally brute-forcing its way through to fantastic performance while still allowing them to look their best.

How did the Asus PG49WCD OLED fare?

As most OLEDs do, the Asus ROG Swift PG49WCD super ultrawide does an incredible job of providing deep black levels thanks to its infinite contrast ratio - this, paired with a 32:9 aspect ratio at the 5120x1440 display resolution, is the stuff of dreams.

My infatuation with the Resident Evil 4 remake will never die, and while this monitor's resolution pushes the perspective far too close to Leon, a mod known as REFramework from 'Praydog' on GitHub fixes this, adjusting the FOV appropriately.
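For a sense of why a 32:9 panel can make the camera feel so zoomed in, here's a minimal sketch of the standard field-of-view geometry. I don't know how the game or REFramework handles FOV internally, so the 90-degree horizontal FOV at 16:9 is a hypothetical starting point, and the function names are mine.

```python
import math


def vertical_fov(horizontal_fov_deg: float, aspect: float) -> float:
    """Vertical FOV (degrees) implied by a horizontal FOV and aspect ratio."""
    half_h = math.radians(horizontal_fov_deg) / 2
    return math.degrees(2 * math.atan(math.tan(half_h) / aspect))


def horizontal_fov(vertical_fov_deg: float, aspect: float) -> float:
    """Horizontal FOV (degrees) implied by a vertical FOV and aspect ratio."""
    half_v = math.radians(vertical_fov_deg) / 2
    return math.degrees(2 * math.atan(math.tan(half_v) * aspect))


# Hypothetical baseline: 90 degrees of horizontal FOV on a 16:9 display
BASE_HFOV = 90.0
base_vfov = vertical_fov(BASE_HFOV, 16 / 9)

# If the horizontal FOV stays locked at 32:9, the vertical FOV shrinks,
# cropping the top and bottom of the image so everything looks closer.
cropped_vfov = vertical_fov(BASE_HFOV, 32 / 9)

# If the vertical FOV is preserved instead, the horizontal FOV widens
# to fill the extra screen space.
widened_hfov = horizontal_fov(base_vfov, 32 / 9)

print(f"16:9 vertical FOV:                 {base_vfov:.1f} degrees")
print(f"32:9 vertical FOV (locked width):  {cropped_vfov:.1f} degrees")
print(f"32:9 horizontal FOV (kept height): {widened_hfov:.1f} degrees")
```

Under these assumptions, locking the horizontal FOV nearly halves the vertical view at 32:9, which matches that too-close-to-Leon feeling, while preserving the vertical FOV gives you the wider view an FOV fix aims for.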

Like I've said in previous articles, a 32:9 display is fantastic for creating a more immersive experience, and fortunately the PG49WCD (like many other OLED monitors) does a decent job at it. Similar to the move from the RTX 3080 Ti to the RTX 4080 Super, the jump from the Alienware QD-OLED ultrawide to Asus' 49-inch screen was well worth my time.

It single-handedly pushed my immersion up a notch (where I initially hadn't expected it to) - while the best OLED display I've personally used is still the one on the Lenovo Yoga Slim 7x laptop with its VESA DisplayHDR True Black 600 certification, this didn't take anything away from my experience with the PG49WCD despite it only being True Black 400.

The one aspect that did leave me with minor annoyances, as it does with most OLED monitors, is the OLED care pixel refresh - while this isn't necessarily an issue as it's there to prevent potential burn-in, using this tool becomes irritating after a while. What doesn't help here either is the small LED indicator below the monitor, which doesn't get bright enough to tell you whether a pixel refresh is taking place (fortunately, I later realized that refreshes happen automatically).

This is quite different with the AW3423DWF QD-OLED, as there is a bright green LED light on the power button to indicate a refresh is occurring - but on a positive note for the PG49WCD, at least the reminder doesn't appear right in the middle of your screen, disrupting gameplay.

Back to the topic of immersion: I recently purchased the Meta Quest 3S VR headset, and I can say that this widened perspective comes close enough to that experience (with the bonus of an OLED panel). There are small cases of a fisheye effect in some games (specifically Call of Duty: Black Ops 6 for me), but it isn't very noticeable.

Is the 32:9 aspect ratio too much for gaming?

Now, considering the pricing of Asus' PG49WCD monitor ($1,199 / £1,399 / around AU$2,699), it's definitely not an affordable option for most PC gamers. It's also worth noting that a large number of games do not have official support for the 32:9 aspect ratio, so I've already had to rely on modders to find a solution where possible. There is also such a thing as having too much screen space, particularly for gaming.

This isn't me criticizing the monitor, but rather letting you know that it isn't an absolute must-buy over a 3440x1440 display - it's certainly worth it, don't get me wrong, but if you're not looking to drain your wallet for the monitor and one of Nvidia's high-end GPUs to power it, you're better off aiming for the likes of the aforementioned AW3423DWF QD-OLED.
