Millions of people have watched the latest trailer for GTA 6, which leaked this weekend. It is one of the biggest gaming events of the year, with speculation running rampant about what to expect when the game is released.

Armed with nothing more than an iPhone and a handful of generative artificial intelligence apps, I decided to see if I could create a Grand Theft Auto 6 trailer in just 30 minutes.

Since joining Tom’s Guide, I’ve used AI to create a Thanksgiving meal, make a Hallmark-style Christmas movie and plan a hiking trip in New York. Producing a trailer for a AAA game in such a short amount of time might be my biggest challenge yet, but it is also a fun way to explore the potential of AI.

Where to begin?

There are two ways to approach this. The first is to use ChatGPT, Claude, or Google’s Bard to create a script and storyline, then build images around that. The second, and the one I picked, is to start with the images and see where it takes me.

As DALL-E 3, the AI image generator built into ChatGPT Plus, can be a little squeamish about creating images based on a real person, product or service, I opted to use Stable Diffusion.

SDXL 1.0 is a powerful image-generating model and I’m using it through NightCafe, a multimodal generative AI platform and community that also has several pre-built styles including an “epic game” aesthetic.

Creating the images

My first prompt, “Grand Theft Auto 6,” created some interesting images, but they were a little too much like GTA 5. So I tried again, refining the prompt to say “Grand Theft Auto 6 main character gameplay,” which got me closer to the goal.

The final prompt was “Next generation of Grand Theft Auto. Advanced future look with main character gameplay,” which, while not particularly clever, generated GTA-esque pictures.

In each generation I had it create between six and nine images and, surprisingly, they were each completely different, not just versions of the same car or person. I picked half a dozen of the best, then thought up a fresh prompt for interiors and party scenes.

Making them move

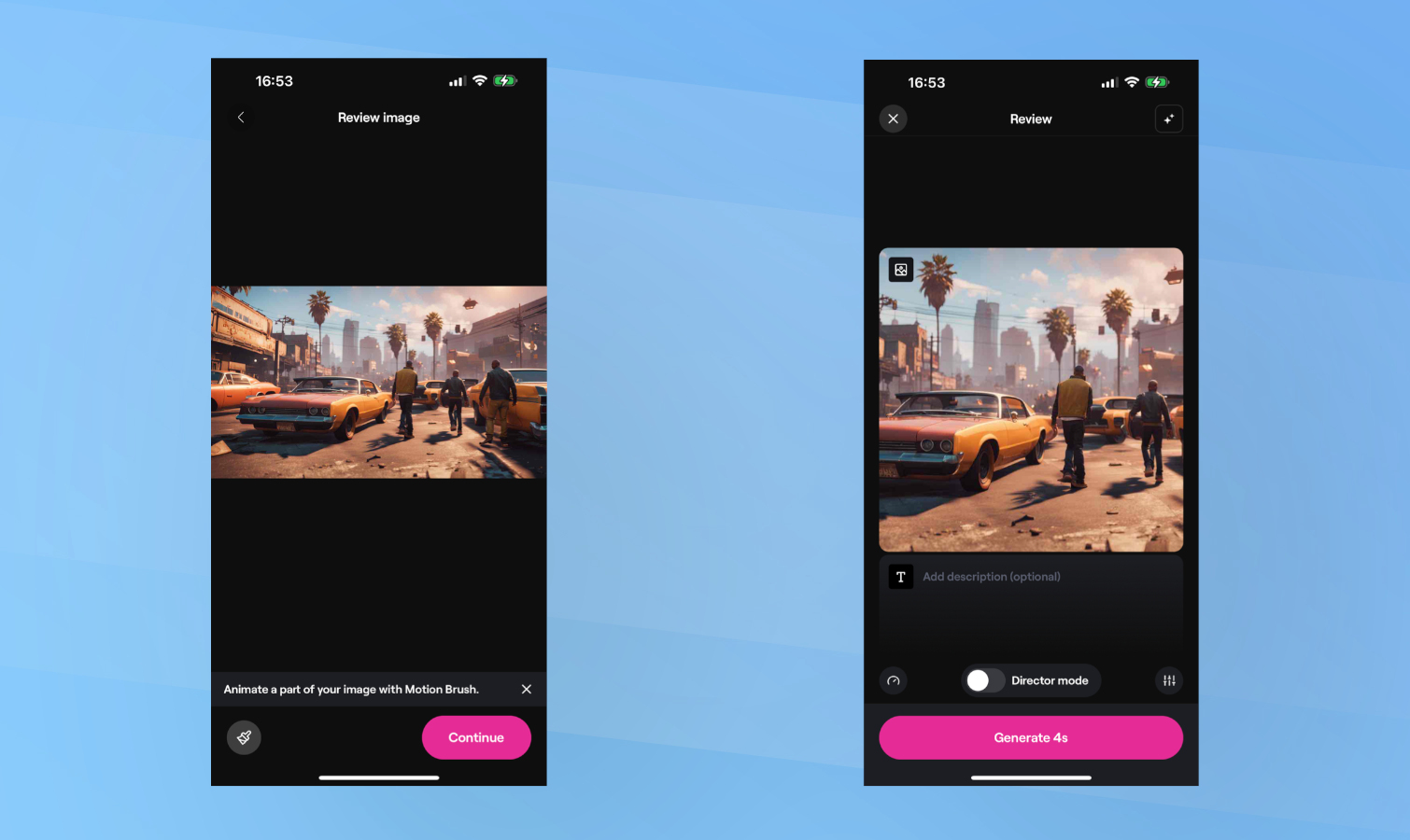

Next up is turning the still images into something that resembles a trailer. There are several possible choices for this, including Stable Video Diffusion (SVD), Pika Labs and Runway.

I’ve opted for Runway as Pika 1.0 hasn’t rolled out and SVD has limited capabilities. Runway allows you to convert images, text or videos into other videos and gives you fine control over how the result looks and how items within the video move. To save time, I left it on the default settings.

The downside to this approach is that it can generate some unexpected output. For example, there was one clip I couldn’t use where the protagonist appeared to merge with the vehicle he was sitting on. In another, the motorbike goes backwards before folding in half.

In total I made about 20 clips, with nine turning out fully usable for the video. These were made from the images generated previously, and allowed me to create the 45-second trailer.

The added extras

I added one additional opening graphic with the GTA logo to round things off. While Stable Diffusion generates impressive-looking images, it still struggles with text, so for the opening image I turned to ChatGPT Plus. This includes the DALL-E 3 model which, for the most part, can generate well-constructed, properly spelt text.

I put this into Runway to generate an opening sting that started with the logo DALL-E created, zoomed in and ended on a street scene, which created the perfect transition into the first clip.

I put the clips into Adobe Premiere Rush on my iPhone, as this is a full-featured, easy-to-use video editor with a free version. I added a hip-hop soundtrack and realised something was still missing, especially when compared to the real GTA 6 trailer.

Finishing it off

To complete the video I added a “Ryanstar Games presents” title about 10 seconds in, and went back to ChatGPT to have it write a short monologue in the voice of the main protagonist.

I then opened ElevenLabs, a text- or speech-to-voice AI tool that creates incredibly realistic synthetic voices. I entered the script from ChatGPT, chose a deep, rough-sounding voice and clicked generate, then added the resulting sound file to Adobe Premiere Rush.

The resulting video, while not perfect, is still a fascinating look at the possibilities presented by artificial intelligence. Created in just 30 minutes, it offers a glimpse of the game, plus a voiceover and even an opening graphic to pull it all together.

Given enough time to fine-tune each video generation, create more structured image prompts and even use ChatGPT to improve the quality of the prompts for SDXL 1.0, I think I could have created a more natural-feeling, exciting trailer. I would also have explored music generation models to create a fully AI-generated production.

What the output shows is that, despite being less than a year old, AI video production is improving all the time and will eventually make the work of effects artists, directors and creative professionals easier, faster and more creative, as AI carries out the heavy lifting.