Many of the best phones released this year have been turbocharged by AI features, which piles the pressure on Apple to respond and keep pace. With the release of the iOS 18.1 developer beta, I’m getting my first taste of what Apple Intelligence brings to the table. More importantly, it gives me a better idea of how these new AI experiences could change the way I use my iPhone 15 Pro going forward.

I know firsthand the benefits of having AI tools and features on my phones. For example, I still can’t stop raving about the Pixel 8 Pro’s ability to intelligently take phone calls on my behalf with Call Screen, or how Interpreter Mode on the Galaxy Z Flip 6 makes conversations in another language easier.

Not only do these AI features add value to their respective devices, but they also save me the time and frustration of handling complex processes on my own. Apple Intelligence is built on those same fundamental principles.

As a reminder, I’ve only been trying out these new Apple Intelligence features on my iPhone 15 Pro for a short time using an early-release beta, so they may not be fully polished; things can change dramatically between the beta and the final Apple Intelligence release later this year. I’ll highlight everything that stands out, including how practical and intuitive each feature is to use.

Siri: a much-needed makeover

When I think about AI, the first thing that comes to mind is an intelligent voice assistant that can help me out with mundane tasks. Out of all the new Apple Intelligence features I’m trying out with the iOS 18.1 developer beta, this new version of Siri is a refreshing change that Apple desperately needs.

Sure, Siri has a new look and animation, but the visual makeover is nothing compared to how it sounds. This iteration of Siri has a more natural conversational tone, with less of that robotic speech pattern and fewer odd pauses between words. I also like how I can keep the conversation going and even cut Siri off mid-answer to ask another question.

While this is great, Siri can still have trouble answering more detailed questions. For example, I asked Siri which team the Yankees beat in last night’s game, and it gave me the correct answer. But when I asked which pitcher got the win, I simply got a pop-up screen with search results. A quick Google search could easily fetch this answer, so I’m hoping Siri improves as the beta gets fine-tuned.

I also asked Siri a bunch of simple product knowledge questions, like what my Wi-Fi network password is, which prompted Siri to open the Passwords app to show me. In contrast, when I asked Siri to disable the gridlines in the Camera app, the assistant couldn’t do it. It’s a sign that Siri still needs refining, because a change that simple shouldn’t require me to open the Settings menu and drill down into the Camera settings.

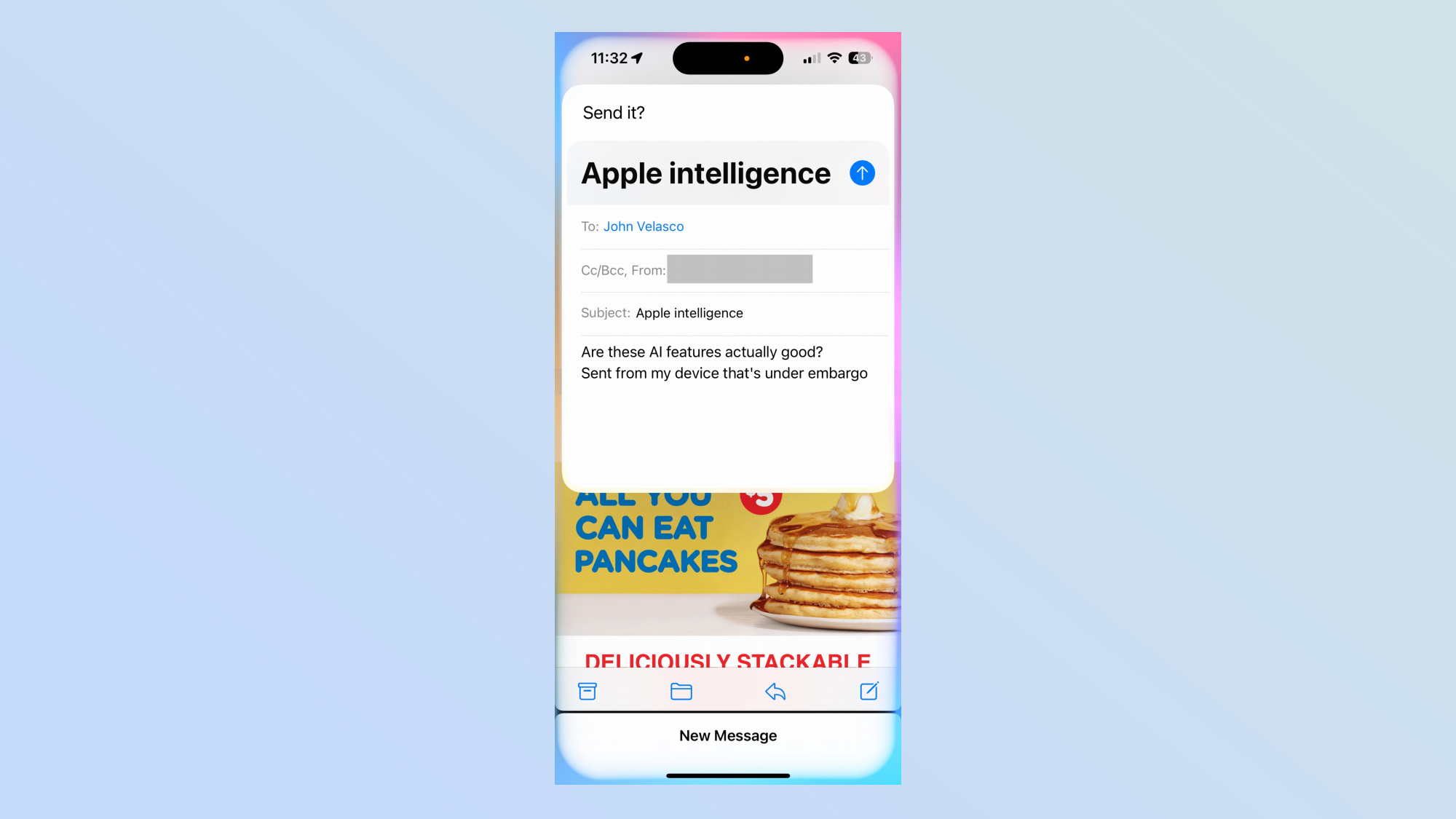

Finally, I can lean on Siri to help compose an email from scratch using good ol’ voice commands. With a simple request to write an email to a specific person, Siri walks me through all the steps to fill out the message, including the subject line. This is one way Apple Intelligence embeds itself in apps. However, when I asked Siri to get me an Uber ride to the nearest train station, the assistant simply opened the app.

Writing Tools: Could be useful, could be novel

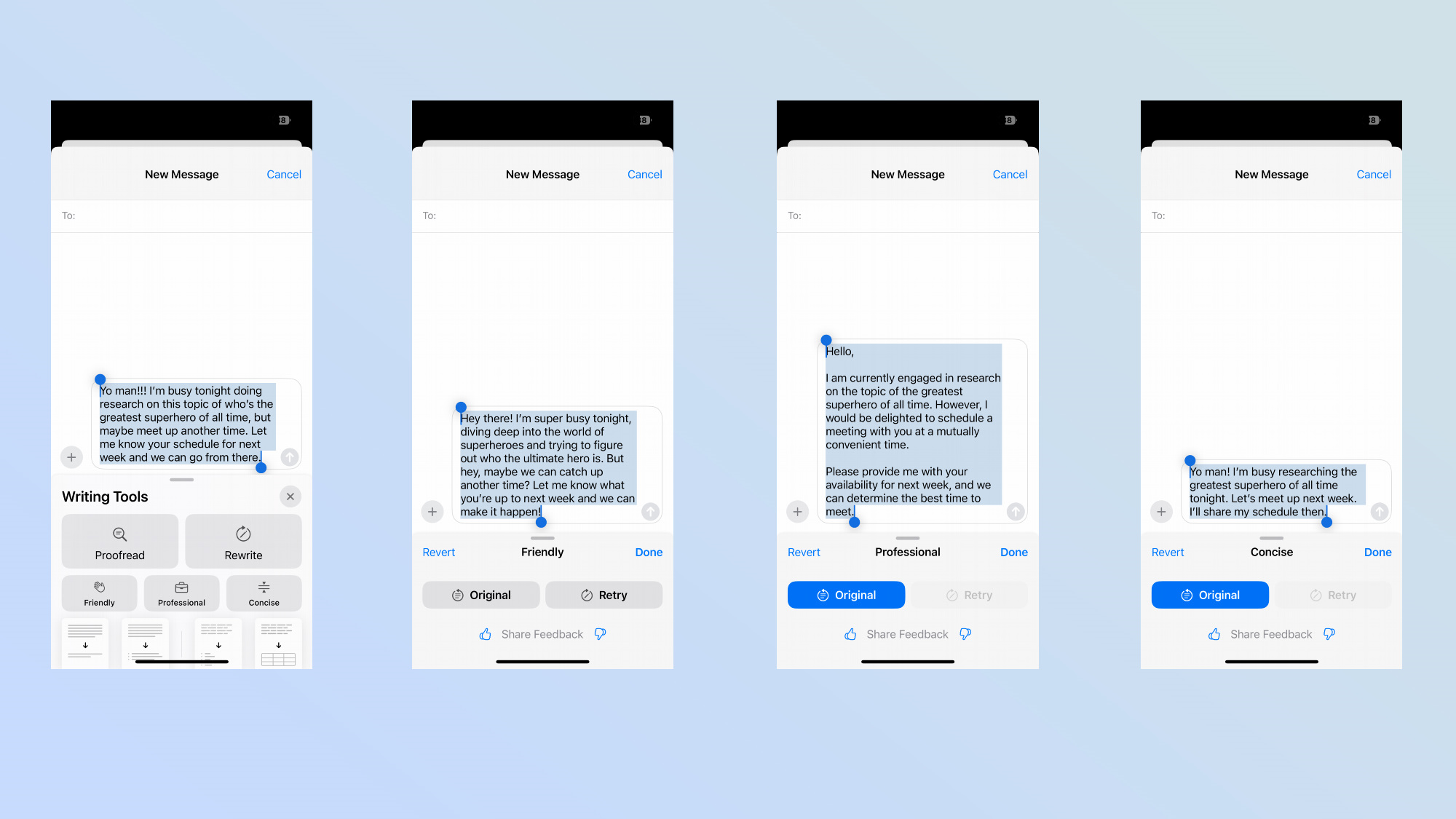

Writing Tools is a collection of features within Apple Intelligence, but the premise is no different from the implementations I’ve seen on other phones. It’s similar to the Chat Assist feature I’ve tried on the Galaxy S24 Ultra, which offers different writing styles to choose from for my messages. In this case, Apple Intelligence gives me Friendly, Professional, and Concise options.

Each option takes what I jot down and rewrites it in the style I select. As with Chat Assist on the S24 Ultra, I think these are a novelty at best and something I just can’t see myself using often. While I’ve never really found much use for AI-assisted writing styles, I can see how they could come in handy in certain situations. Just take a look at what it came up with in the screenshots above.

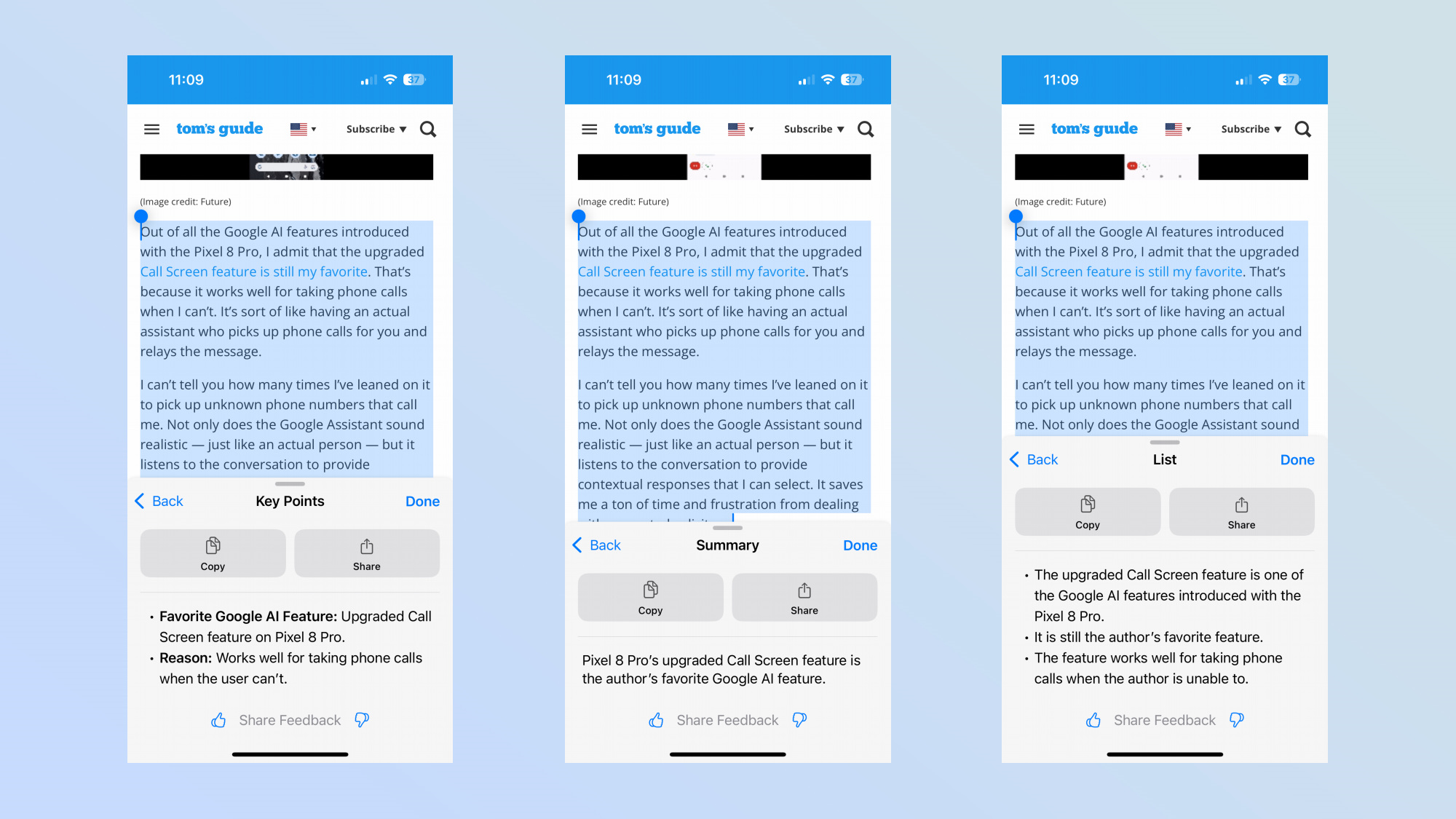

Additionally, I can take a long email or web page and have Apple Intelligence generate a summary, list, table, or key points for me. I used my long-term review of the Google Pixel 8 Pro as a test, and predictably, I think it nails it. Given two paragraphs from the section on Call Screen, Apple’s feature condenses the whole thing into one simple sentence, and it gives me three key points when I select the list format.

That said, it’s tough for me to say confidently whether Writing Tools makes a compelling case for AI. I know what I sound like when I type, so having it rewritten in a different voice just feels a bit weird.

Mail: TL;DR-style priorities

When it comes to email, the Gmail app has been my preferred choice because of its robust features and functionality. Apple is hoping to win back users by making the Mail app more helpful with Apple Intelligence.

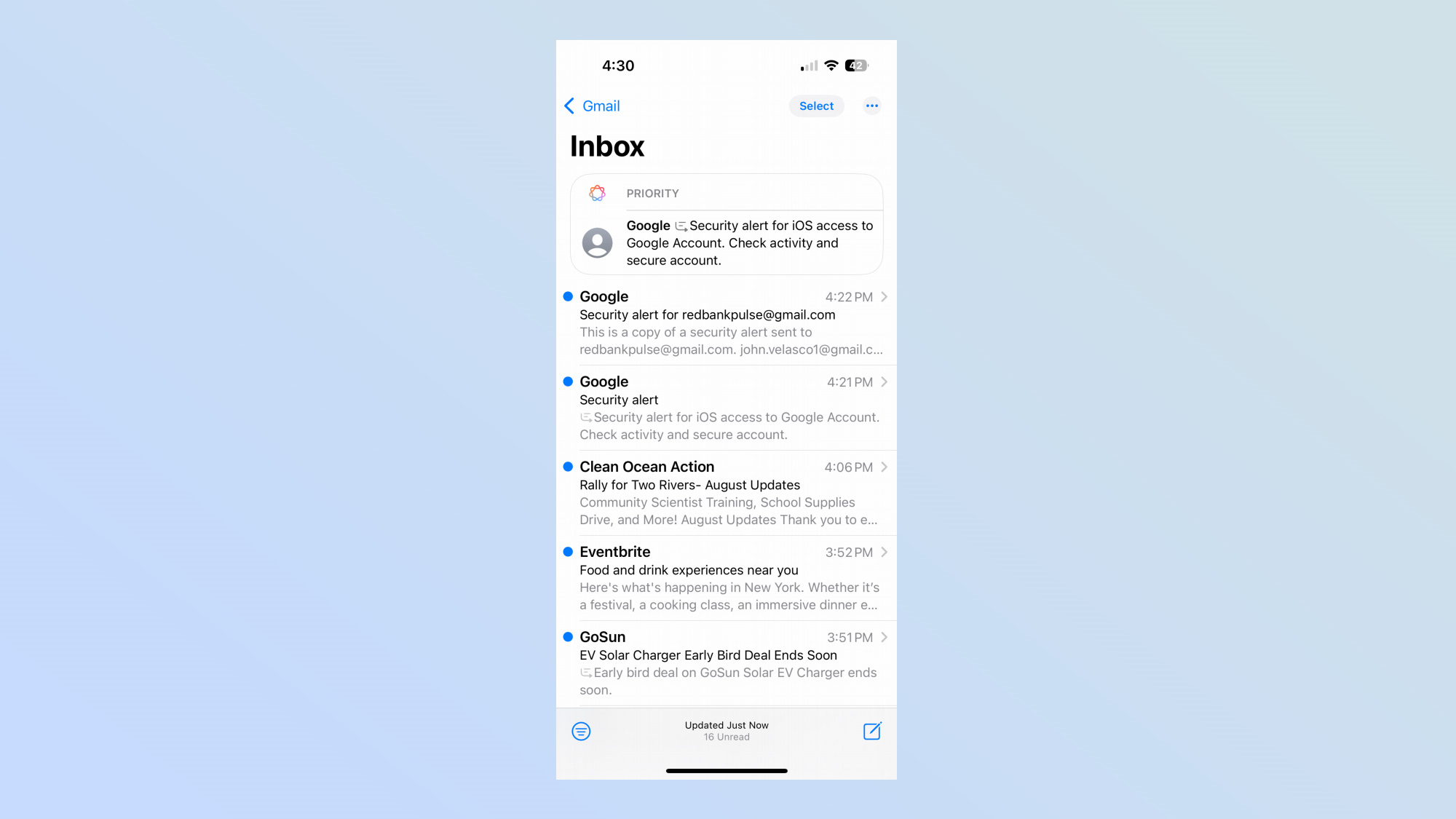

Right off the bat, the first thing I notice is the Priority Messages section at the top of each of my mailboxes: the stuff that should get my attention. It’s still early, and it will take more time and more emails before I really see the benefit of Priority Messages, because the ones I’ve seen so far are simply security alerts about recent log-ins to my accounts.

Given that security and privacy are paramount for Apple Intelligence, I guess it’s doing its job by flagging these messages. Still, I’m curious to see what other emails earn priority the more I use Mail.

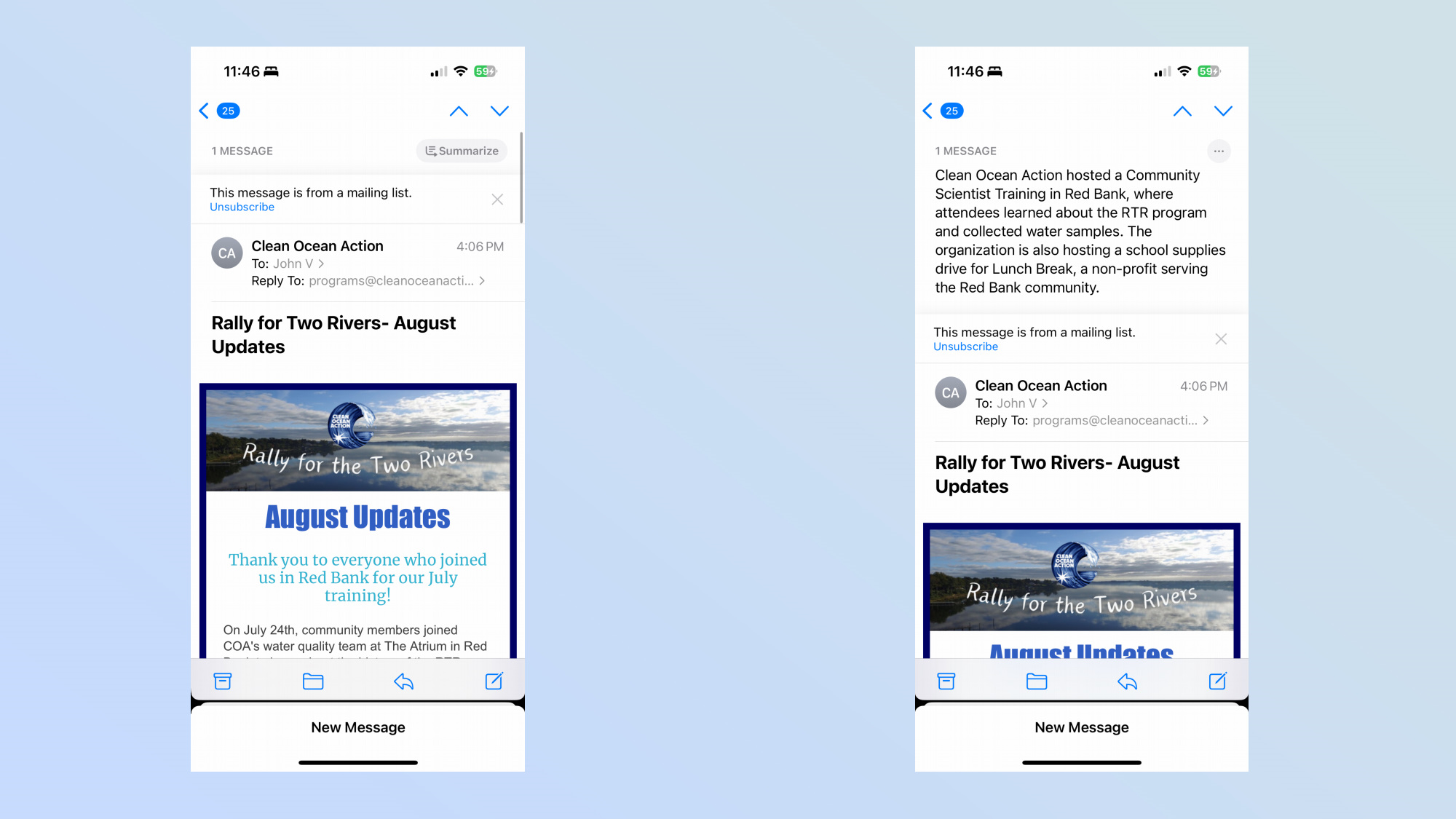

When I select an email, Apple Intelligence also gives me the option of a brief summary. Rather than reading through an email I got from a non-profit about an upcoming event, I can get a TL;DR-style summary that condenses the message into something bite-sized. Given my short attention span, I can definitely see myself using this frequently to spare myself the drudgery of reading through less important messages.

These are all good starting points for making the Mail app a more viable option for iPhone users, but I’d like to see it do more to sift through the hundreds of emails I get daily, automatically separating what needs my attention from what can be ignored. I really hate seeing more than 100 unread messages in my inbox, so it’d be nice if Apple Intelligence could manage things better than Gmail does.

Notes, Photos, and Notifications

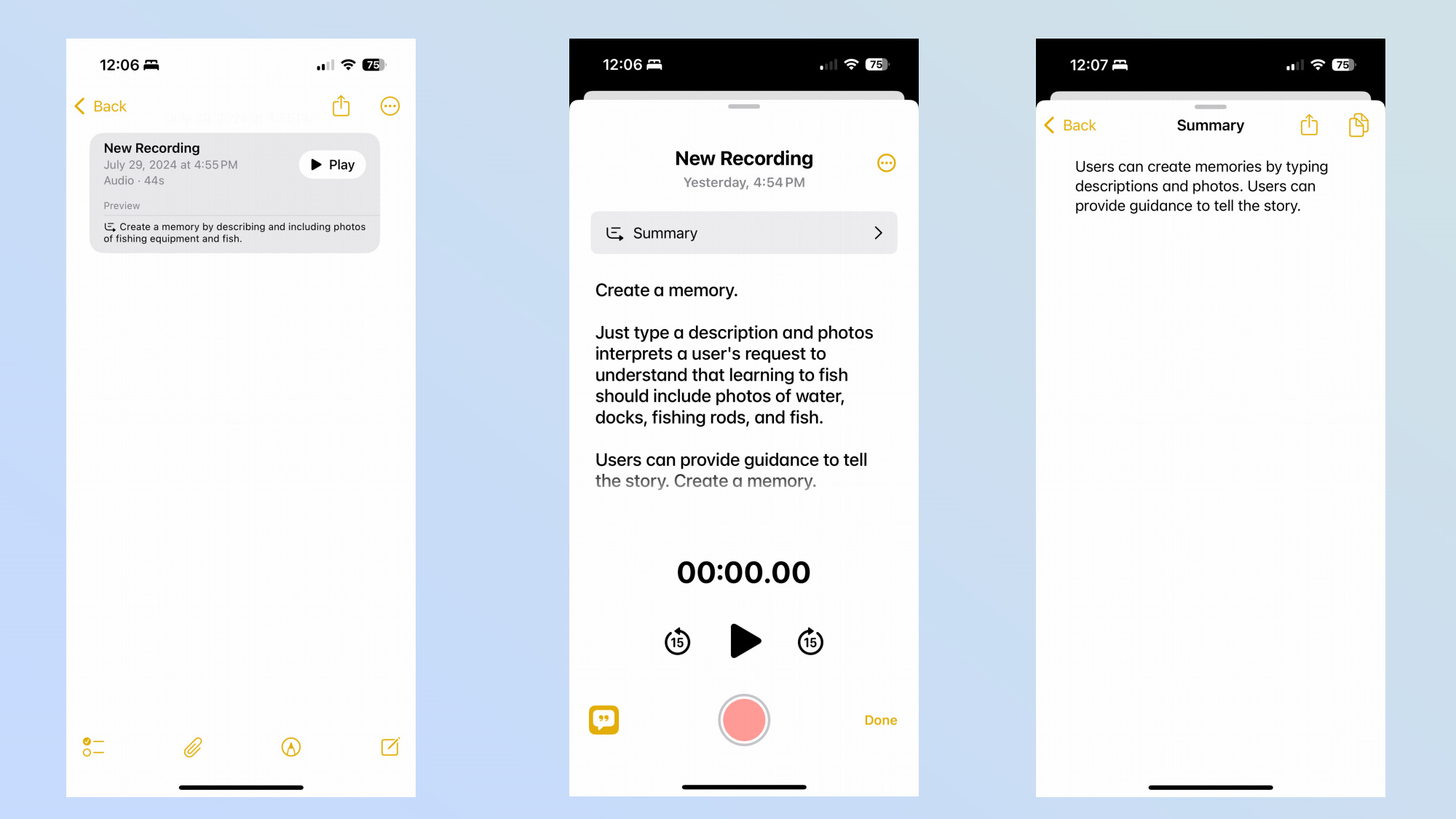

As for the rest of the Apple Intelligence features included with the iOS 18.1 developer beta, I’m still getting a feel for them. In the Notes app, I can record and transcribe audio, and Apple Intelligence generates a summary for me. When I tried it, it transcribed everything I read aloud within the Notes app and added a two-sentence summary. I can see this being handy for long presentations, because it beats having to jot down every single word myself.

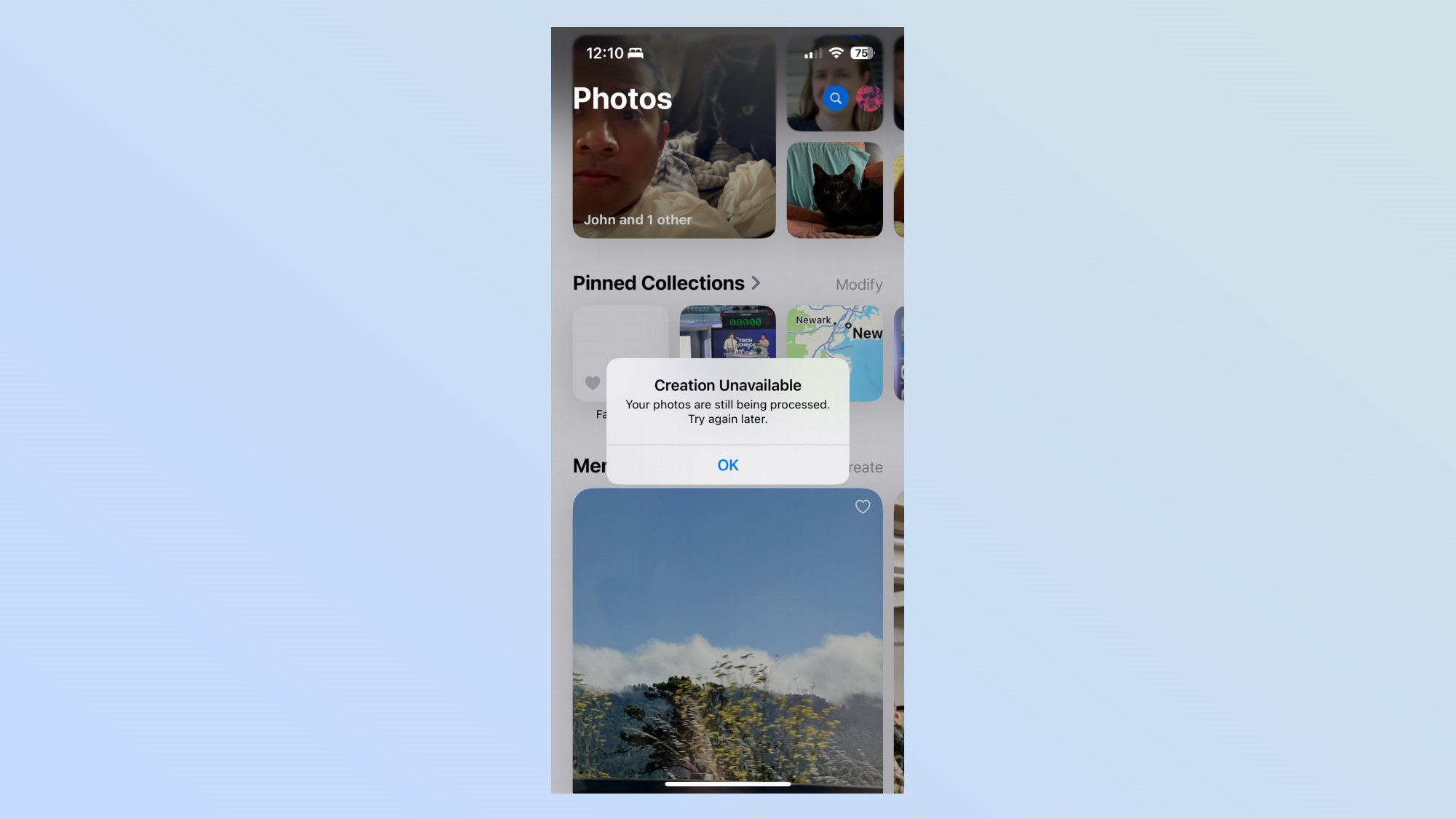

In the Photos app, there’s now an option to create a memory from the photos I’ve taken with my iPhone 15 Pro. However, I can’t try this feature yet because a message tells me my photos are still being processed. The cool thing about it is that I’d be able to describe what I want, and Apple Intelligence would then create a storybook of sorts, complete with images and audio, to show that memory.

Lastly, notification summaries are meant to take all the notifications I get from Messages and Mail and condense them into a summary in the notification panel. I haven’t seen this come up yet, but I suspect I just need a new day’s worth of notifications for the feature to strut its stuff.

Apple Intelligence: Outlook

There’s still a long road ahead before we can truly gauge Apple Intelligence’s worth. The stuff I’ve been testing isn’t groundbreaking, but it’s only a fraction of what Apple showed off during WWDC 2024, and there’s still time to tweak these features.

What I’m most excited about is the generative AI side of what Apple Intelligence is expected to offer, like creating original images from descriptions with Image Playground. I would also love to see deeper third-party integration with Siri, so I can perform more complex actions than just opening apps. These are the kinds of things that would save me time and ultimately show that my iPhone can make me more productive.