In a blog post on Tuesday, Google said that "Android is the first mobile operating system that includes a built-in, on-device foundation model," and that makes Google's Pixel phones the first capable of running AI features fully on-device.

A month ago, we excitedly reported on a claim from Bloomberg's Mark Gurman that many of Apple's initial AI features for iOS would work "entirely on device." That approach would have eased many privacy concerns about AI on the iPhone, but Gurman now reports that Apple's AI features won't run entirely on your device; instead, processing will be split between the device itself and secure data centers.

So regardless of how Apple ends up handling its AI features, Google beat the company to the punch by launching its expanded Gemini Nano model with extra on-device features. Apple won't launch iOS 18 until later this year, but Google's updated Gemini Nano model is available now on the Pixel 8 Pro.

Here's what a privacy-focused, fully on-device AI experience will look like with Gemini Nano on the Pixel 8 Pro (and eventually, the Pixel 8 and Pixel 8a).

What Google's expanded Gemini Nano model can do on-device

Google calls Gemini Nano its "AI model built for maximum efficiency on Pixel phones," and it's certainly capable of a lot.

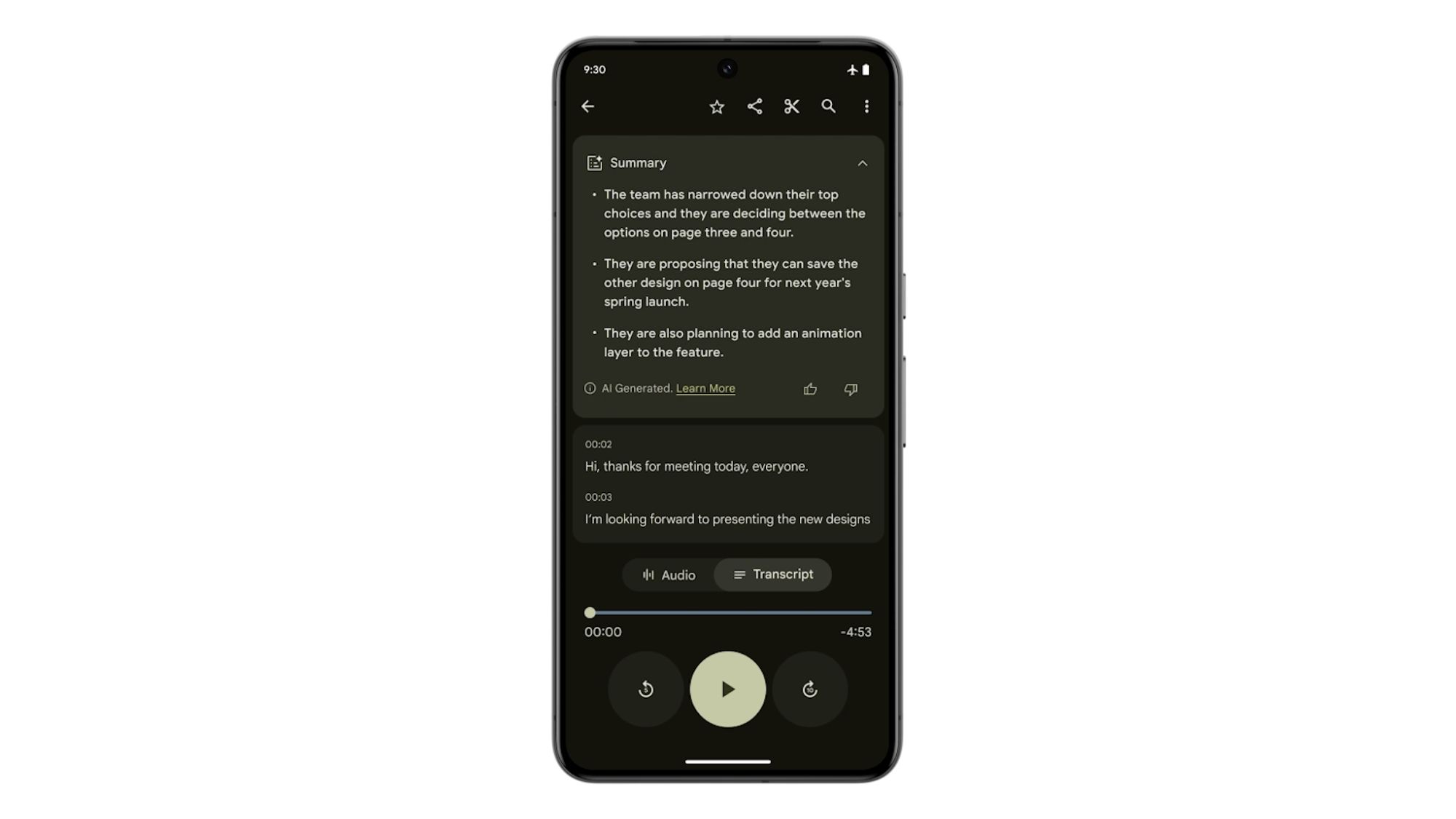

Through the Recorder app on the Pixel 8 Pro, the Summarize feature can analyze any recorded conversation or speech and create an easy-to-read summary of the main points. And with this expanded Gemini Nano model, "playing, rewinding, typing notes, [and] powering through irrelevant sections of the recording" are all done on-device, "without the need for an internet connection."

People who use Google Messages on a Pixel 6 or newer have likely already experienced the company's Magic Compose AI feature, which can paraphrase your messages in different ways. That version of Magic Compose uses cloud-based AI processing, but with Gemini Nano, it'll use on-device processing and function without an internet connection.

Google also says that Gemini Nano will gain full multimodal functions, "starting with Pixel later this year." Put simply, this means Pixel phones will soon be able to understand more complex contexts and process "sights, sounds, and spoken language." Gemini Nano will also gain more "agentive capabilities," one of the buzzwords Google used to describe the smarts its new AI possesses.

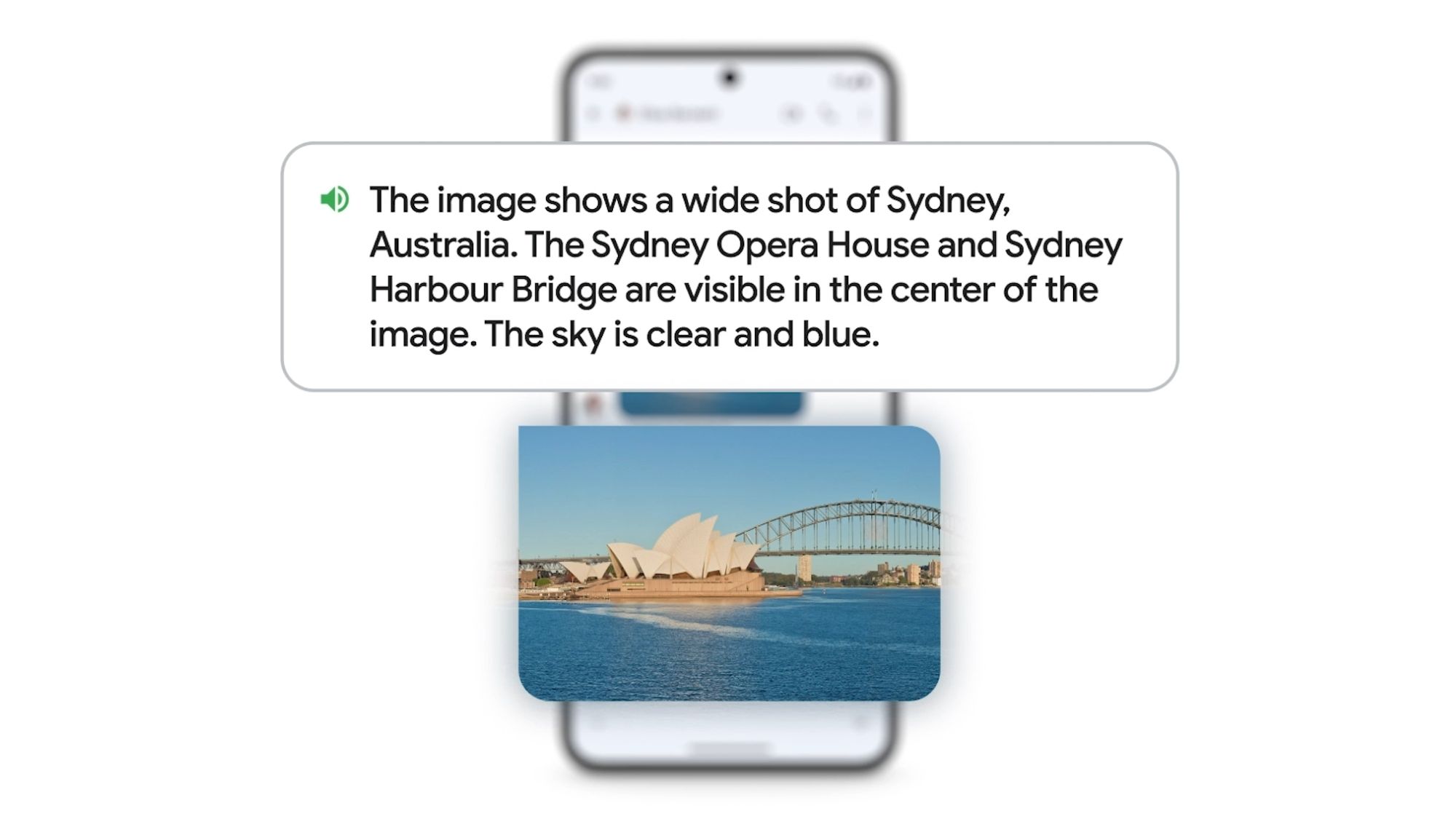

With its new multimodal capabilities, Gemini Nano will improve existing features, like TalkBack, and make new features possible, like detecting suspicious activity in phone calls.

The existing TalkBack feature helps those who are blind or have low vision use their devices more effectively with touch and spoken feedback. Gemini Nano will be able to improve TalkBack by giving more vivid descriptions of what's appearing on-screen, from "what's in a photo sent by family or friends" to "the style and cut of clothes when shopping online" — all without a network connection.

The Gemini Nano model will also be able to warn you in real time if it detects a suspicious phone conversation with a potential scammer. Google says you'd get an alert if a "bank representative" asks you to do something potentially malicious, like "urgently transfer funds" or hand over your bank PINs or passwords. That could be a lifesaver when it comes to protecting vulnerable groups from scam calls.

And because this built-in protection would run on-device, any conversation that's monitored or potentially recorded would stay private on your smartphone. This security feature hasn't launched yet, but Google says it'll "have more to share later this year."

If Google plans to share more details at its next event (likely the Made by Google event), we probably won't hear anything new until October.