Google has responded to claims that its AI Overview answers appearing at the top of search results for health queries are inaccurate. An investigation by The Guardian claimed that certain queries, such as “what is the normal range for liver blood tests,” would return misleading information.

According to the Guardian's report, the above search query into Google would return an AI Overview filled with "masses of numbers, little context and no accounting for nationality, sex, ethnicity or age of patients." In another instance, the AI result seemingly told people with pancreatic cancer to avoid high-fat foods.

The experts consulted by the site didn't pull their punches.

Anna Jewell, the director of support, research and influencing at Pancreatic Cancer UK told the site the AI Overview was "completely incorrect" and that following its advice “could be really dangerous and jeopardise a person’s chances of being well enough to have treatment.”

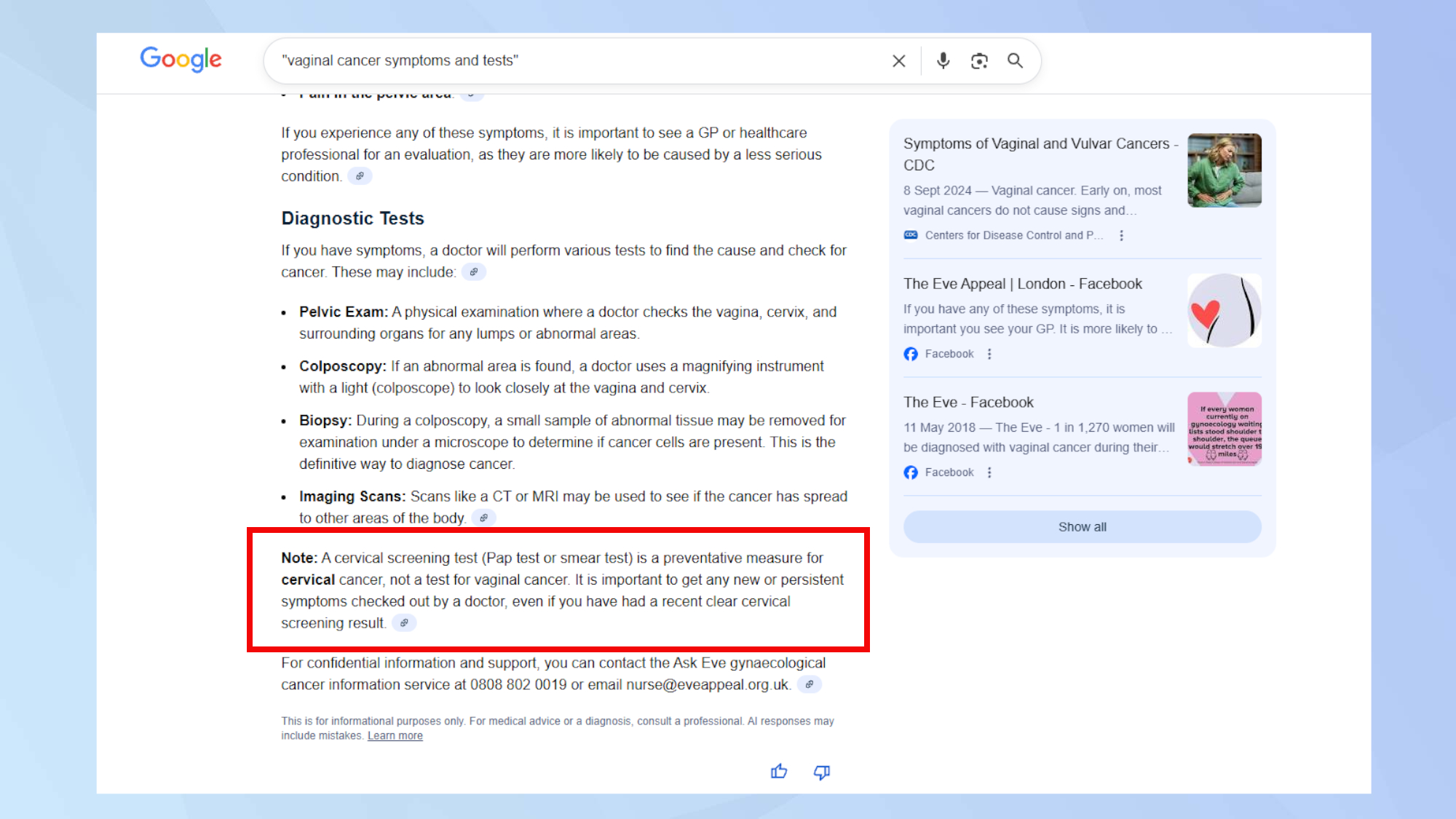

Other examples The Guardian provided include searching for "vaginal cancer symptoms and tests," which led to an incorrect AI Overview saying a pap test screens for vaginal cancer (it screens for cervical cancer), as well as searches around eating disorders.

Google responds to claims

Google acknowledged the investigation and has responded to the Guardian's claims. When I searched for the above query on liver blood tests, Google didn't surface an AI Overview at all, while searching for vaginal cancer symptoms still provides an AI Overview but it makes the distinction on pap tests.

Our internal team of clinicians reviewed what’s been shared with us and found that in many instances, the information was not inaccurate and was also supported by high-quality websites.

Google told The Guardian: “We do not comment on individual removals within Search. In cases where AI Overviews miss some context, we work to make broad improvements, and we also take action under our policies where appropriate.”

I contacted Google separately for additional comment and received the following statement: “In cases where AI Overviews miss some context, we work to make broad improvements, and we also take action under our policies where appropriate. Our internal team of clinicians reviewed what’s been shared with us and found that in many instances, the information was not inaccurate and was also supported by high-quality websites.”

Further to the above statement, Google told me that many of the examples included in the Guardian's report were partial and incomplete, making it difficult to assess the full context of the AI Overview in question.

The company said its internal team of clinicians reviewed the examples shared against the NAM credible sources framework and felt they link to well-known and reputable sources and inform people when it’s important to seek out expert advice.

In addition, when it comes to the AI Overview on pancreatic cancer, Google said that it accurately cites reputable sources like Johns Hopkins University and a pancreatic cancer organization that cites Cedars-Sinai.

Meanwhile, the topic of fatty foods in pancreatic cancer is "nuanced depending on the patient’s individual situation, and the AI Overviews goes into more detail about different types of fatty foods so users can better understand what may apply to them."

Do you trust AI when it comes to your health?

In March last year, Google announced it was rolling out new features to improve responses to healthcare queries.

"People use Search and features like AI Overviews to find credible and relevant information about health, from common illnesses to rare conditions," the company wrote in a blog post.

"Since AI Overviews launched last year, people are more satisfied with their search results, and they’re asking longer, more complex questions. And with recent health-focused advancements on Gemini models, we continue to further improve AI Overviews on health topics so they’re more relevant, comprehensive and continue to meet a high bar for clinical factuality."

Picking apart the inclusion of AI into healthcare is extremely difficult and nuanced.

Nobody would argue that showing incorrect health information in a common search result isn't bad. And studies have shown AI Overviews aren't as accurate as we'd like them to be.

But what about AI-driven algorithms that support clinicians with faster and more accurate diagnoses? Or an easily-accessible AI chatbot working, not as a substitute for a medical professional, but as a first step for someone interested in collecting more information about a symptom or feeling.

I've certainly asked ChatGPT a few health questions over the last few months to get a steer on whether or not I should seek further help. And, as it happens, OpenAI has just launched ChatGPT Health, an AI tool aiming to make medical information easier to understand.

Would you trust an AI answer when it comes to aspects of your mental or physical health? Let me know in the comments below.

Follow Tom's Guide on Google News and add us as a preferred source to get our up-to-date news, analysis, and reviews in your feeds.