ChatGPT has been the leading artificial intelligence platform since its launch in November 2022. Since then it has gained new models and regular updates to the UI, but has OpenAI made some behind-the-scenes upgrades to GPT-4o that have made it more responsive?

I’ve spent a lot of time working with Anthropic’s Claude recently. It is no secret I’m a huge fan of Artifacts and the way the Claude model responds. It is often more detailed in its output, understands what I’m asking from a single prompt, and is faster thanks to Sonnet 3.5.

However, I regularly chop and change between platforms, including Llama on Groq, Google Gemini, and the many models available on Poe. Recently I’ve noticed ChatGPT has become as good as Claude was when Sonnet 3.5 first launched, particularly at longer tasks.

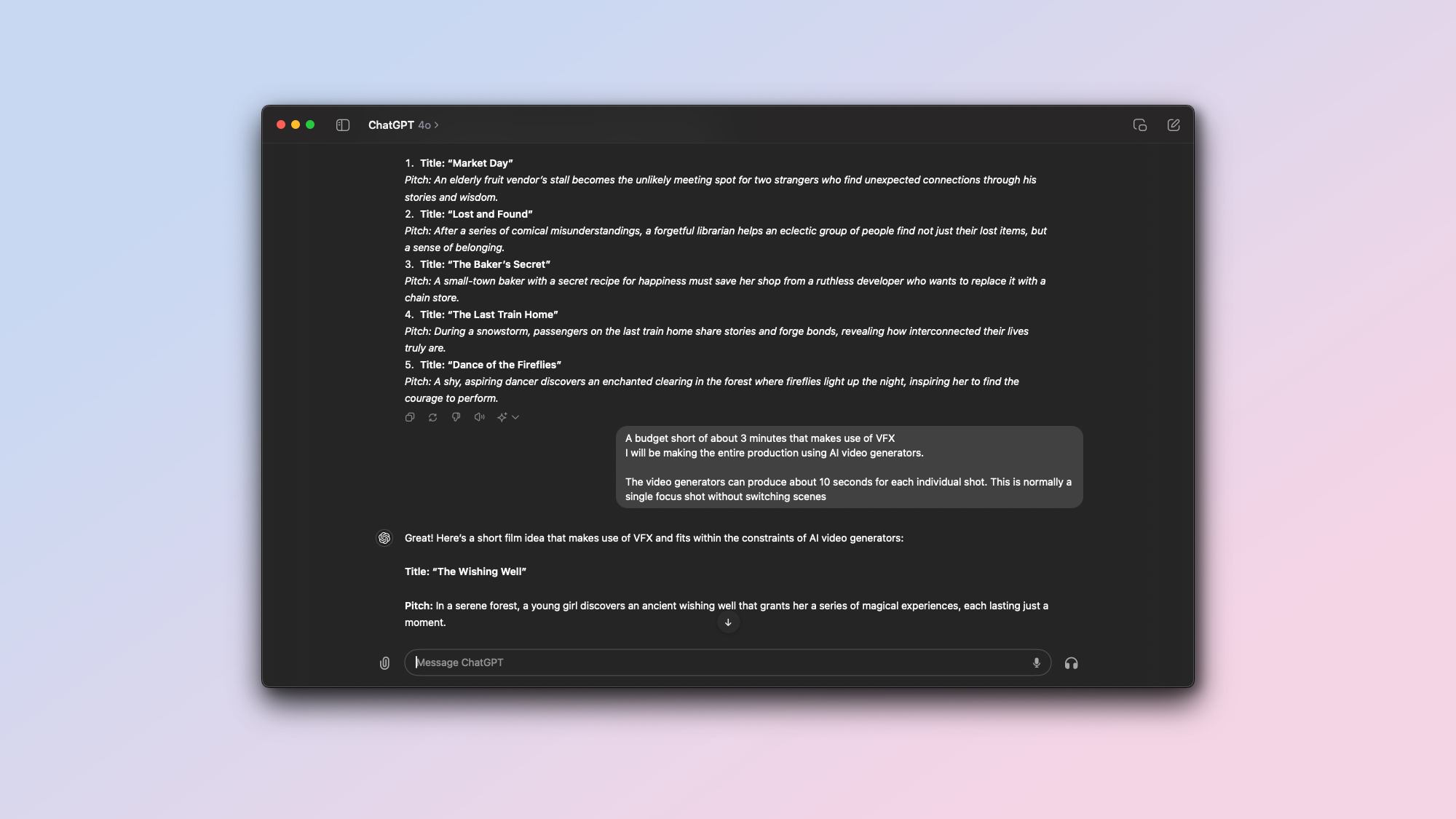

In the past week alone I’ve built an entire iOS app in an hour using ChatGPT, rewritten multiple letters and created shot-by-shot plans for AI video projects, all without ChatGPT breaking a sweat. It handles every query without getting tripped up, is very fast and seems more creative than before.

What’s changed in ChatGPT?

While new models from OpenAI like GPT-4o get all the attention, the company often releases a fine-tuned version of an existing model that can have a major impact on performance. These get little attention outside developer circles because the model label in ChatGPT doesn’t change.

Last week GPT-4o was given an upgrade, and a new model snapshot called GPT-4o-2024-08-06 was released for developers. Its main promises were cheaper API calls and faster responses, but each update also tends to bring overall improvements from fine-tuning.

These updates were likely also rolled out to ChatGPT. After all, it makes sense for OpenAI to use the cheapest-to-run version of GPT-4o in its public chatbot.

So, while this might not have the glamour of a GPT-4o launch, it seems to have led to subtle improvements. I suspect back-end infrastructure changes also play a part, allowing longer outputs and faster responses beyond what the model update alone would deliver.

It feels faster and more creative

I am basing this purely on my own experience using ChatGPT over the past week. I’ve given it the same types of queries I’ve previously used on Claude and on ChatGPT, and it ‘feels’ faster.

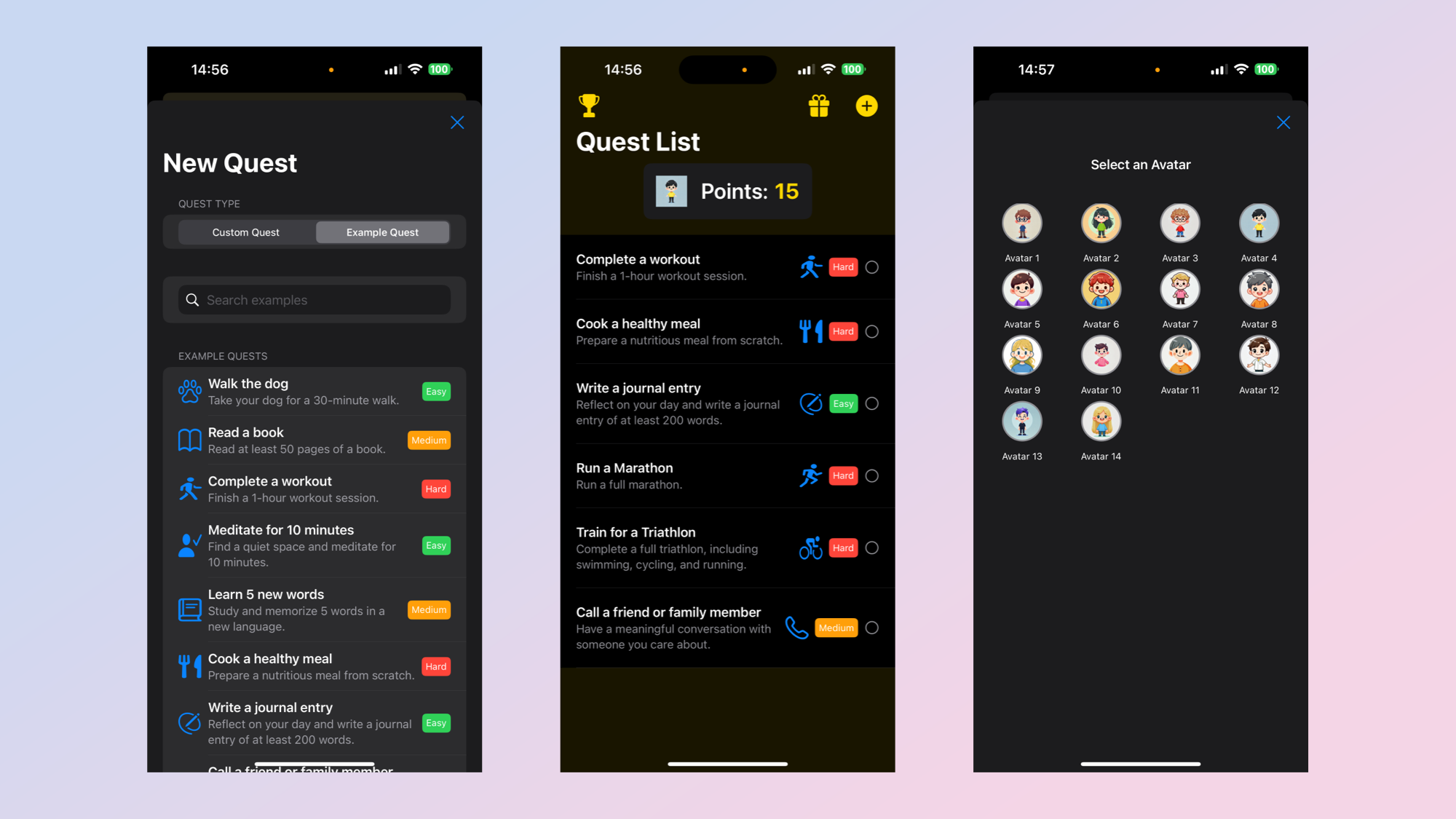

One example of this is how it handles a very long code block. I built an iPhone to-do list app that uses gamification to encourage task completion. This often requires multiple messages for each block of code, and in the past ChatGPT would truncate a response if it was too long.

Instead, it would expect you to pick out the changed elements and swap them into your own code. Lately, ChatGPT has been displaying entire code blocks for every update request without being asked.

ChatGPT still does this over multiple messages, but it uses the very clever ‘continue generating’ feature so everything appears within a single block of code. Previously the code would be split across messages, often breaking its layout or structure in the process.

I’ve also found it is more creative in its responses to tasks like ‘come up with 5 ideas for a short film about ordinary people’, or ‘re-write this letter aimed at a specific audience’.

While I can’t say for certain that ChatGPT has had an upgrade, it’s definitely had a performance boost compared with about two weeks ago, and I’m using it more than I have in a while.