Artificial intelligence will profoundly change how we work and what we create. Many fear that it will steal jobs, encroach on artistic output and strip creatives of their livelihoods. But as the gimmicky side of AI loses its buzz, its power to enhance the creative process, complementing human creativity without taking the wheel, is increasingly being recognised.

Undoubtedly (as the history of AI art has proved), artificial intelligence needs human intelligence. And, as the democratisation of this technology offers increased access and speed, there must be a collective responsibility for balanced and thoughtful integration. AI should be seen as the paintbrush, not the painter. For innovation, authenticity, empathy and true craft, the human touch will always be essential.

In this respect, AI can be a creative “co-pilot”, working alongside humans as a tool for research and ideation, transforming workflows and boosting efficiency for creatives. It can help us maximise experimentation, handle the repetitive tasks and take away toil. So, rather than replacing humans and our creative minds, can AI empower them?

We spoke to Dr Shama Rahman, neuroscientist, artist, creative technologist and founder of AI platform NeuroCreate, about what happens to the brain when we are being creative and how this has helped to uncover the benefits of integrating AI into day-to-day creative workflows.

Can you tell us about your work in creativity, neuroscience and AI?

I did a PhD looking at the neuroscience of creativity. I was very interested in breaking down the cognitive processes and the mental states that we go through during a creative process. I also founded a company, NeuroCreate, that essentially creates products that are all about augmenting creativity by building workflow tools. We created a platform called FlowCreate, which combines the way that I've used AI myself as a creative and lots of different tools into one platform. It’s specifically designed for creatives, strategists and people in the knowledge sector who work on conceptualisation. It helps take you all the way from a brief to a pitch deck. By the end of it, you can have some kind of brand strategy, design assets, a product brief and a deck to pitch to clients or other colleagues. It’s very much an ideation and innovation management platform. It takes you all the way from having a fuzzy idea to having something concrete, acting like a project manager.

How can neuroscience help us understand creativity?

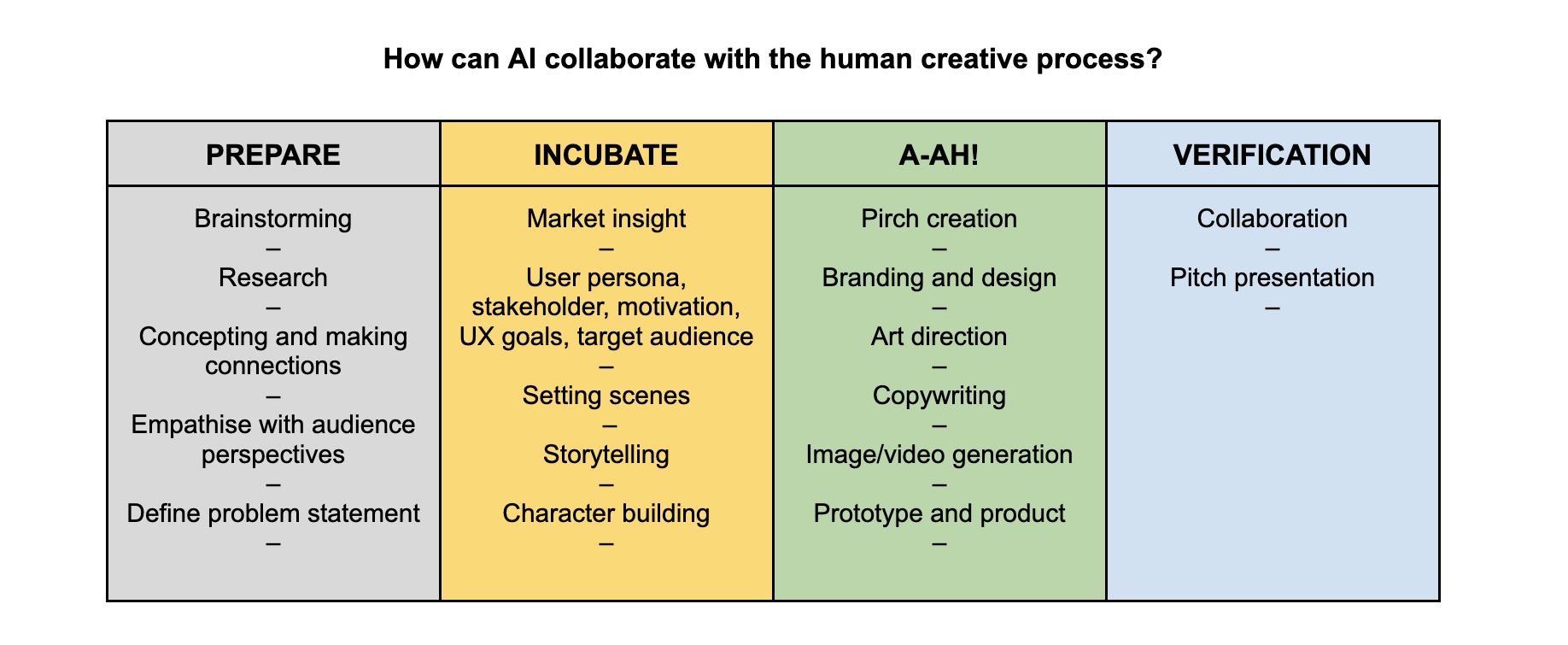

Nearly 100 years ago, Graham Wallas wrote The Art of Thought, in which he amalgamated hundreds of interviews with eminent creatives of the time, including artists, musicians and architects but also mathematicians, scientists and engineers, asking them about their creative processes. From there, using phenomenological analysis, he said there are four broad stages (in no particular order): preparation, incubation, the “aha”, and verification. These stages map quite nicely onto different mental states that can be characterised by signature brain patterns.

Neuroscientists have also been looking at this creative process, myself included. In ‘preparation’ [what you see in terms of neural mechanisms in scans] it’s the very deep part of the brain that comes up when you are focused – so when you’re researching and giving your brain lots of different pieces of information and different perspectives. In incubation, this is the part of the brain called the default mode network, [which shows up] when your mind wanders, better known as daydreaming, which, at school, you’re not meant to be doing but as a creative it's imperative. Here, what you are essentially doing is mind-wandering about those juicy bits of information you’ve just fed your brain. It is when you put the problem to one side, and may go for a little snooze, a walk, chat to friends, or cook, letting your subconscious mind get to work. Then, the back of your brain lights up when you are about to get the right answer to a riddle (and not the wrong answer, curiously) – the “aha” moment. Then, in my own work, we see the “flow” state, characterised by another brain signature.

How did this research impact your work in AI?

Mapping out the different stages in our creative process shows that it seems to be a physiologically natural thing that we as humans do. Everybody does some research. Everybody has an “aha” moment. Everybody gets in the zone. So then, the idea is, how can we design our AI systems to help us get the most out of those stages? If we’re thinking about research, it would be really good to have the AI be able to show us areas of knowledge that we have no idea about, for example. And we can really use AI to get us out of our normal habits, away from our biases, certain go-tos and other associations.

(See table above for how Shama says AI can be utilised at different stages of creativity)

What challenges have you faced working with AI and creativity?

The main challenges have come from how people have viewed AI over the years. Now, we're at a point where people are able to see more readily how it can be helpful in their workflows. There was always a bit of fear, wasn't there? Being a creative myself, I’ve always been mindful of the ethics behind it: that it's about augmenting human processes, not replacing humans. That’s how FlowCreate is designed, so that it’s not threatening, from that perspective. A few years ago, with AI, it was like, “wow, that's something new” and there was this intrigue around it. And although the intrigue hasn’t gone, AI is now something everyone is talking about. There's a much bigger seismic shift towards the acceptance of it, I would say. More people are now expecting to utilise AI, and are trying to find out how to do that, looking for ways to upskill themselves. This could be a really, really big opportunity to enhance what we're doing – for creativity, for memory, for decision-making, for cognitive abilities – if we can figure out good design in the interaction, in the products that we have, and in education around how to use it.

What do you see as the benefits of utilising AI in creativity?

There's all the stuff that we already do very well as creatives. Then there's also the stuff that we’ve formed bad habits around – holding on to a very first idea, for example. And so, can we utilise the AI to be a provocateur that can get us out of our kind of “stuckness”?

AI’s ability to allow people to collaborate is one of the biggest opportunities. In general, if we are thinking about the way to approach AI, it should be in a collaborative sense. Whenever you are collaborating with other people, they are not always going to agree on everything. With AI, there is the ability to improvise and interact with it (and each other), treating it as if it were the third person in this conversation. AI is also potentially blurring the lines of creative roles, but not in a bad way – for example, if you aren’t a designer but you want to get something out of your head and work with designers in a more collaborative way.

AI can also help show us different perspectives. In ‘incubation’, your brain finds patterns and you see different ways of looking at things. Similarly, AI, as a very good statistical tool, can also find connections, analyse things and find overall themes. Sometimes these may feel counterintuitive – which is great. That's exactly where you want the “aha”. This goes beyond the creative industries, and is about creativity and problem-solving, innovation and research, where you see something that has been missed before.

Currently, a lot of AI tools focus on helping us with the production side of things. Some of the new kinds of video AI tools coming out are really good, though they still have limitations. This allows us to focus more on the storytelling aspect and the more exciting elements of production, and less on the mundane parts. It can be another form of animation, allowing us to do things that normal video doesn't – because of all the things that ground us in reality. Suddenly, we've got something that's photorealistic and doing something insane. It’s cool from that imagination perspective. We’re constricted by our own senses and our own ways of thinking. And AI is not human – so why don't we utilise that? It can help us to unearth things that we might not see or that we might have biases about, for example, because we're limited by our own experiences.

How might we put this into practice?

AI can be used as a decision-making tool. It can help us in divergent thinking – meaning there is no right answer, but lots of possibilities. Instead of simply asking ChatGPT to give you five taglines [for a pitch], why not ask it to help you think differently, help you come up with diverse ideas? Then, as an example of convergent thinking – although you’re trying to have lots of ideas and not restrain yourself at this point – AI can help you summarise, find things in common, something counterintuitive perhaps. For example, “Help me make a decision between these five startup ideas by connecting the dots between them, and/or the pros and cons, and/or the likely best performing idea.” If you did all this yourself it might take some time, though you would get there.

What are some of the most useful tools for creatives?

We are familiar with different techniques – language AI, visual AI, sonic AI, and there are tons of others. Integrating these [into workflows or models] is multimodal AI. GPT-4o has just come out and this can integrate lots of “sensory” inputs, things like visual, video and audio, and bring them together. Another way to think about it is how we can integrate different types of AI techniques at different points in the workflow.

Some of the bigger players in language AI – working on the generation of human-like text, pre-trained using a very large set of training data – include ChatGPT, Gemini, Meta’s Llama, Anthropic’s Claude and Bing.

Looking back, “good old-fashioned AI” such as StyleGAN gave a lot of freedom with visual aesthetics, working with visuals to visuals. Currently, it's often text to visuals, so there's a little bit of frustration around how it can translate what you mean. However, in visual AI, there are loads of different companies coming out with different tools – production-based RunwayML is still the best video-based tool, in my opinion. There are also different generative AI imagery tools, such as Midjourney, Firefly and DALL-E – they all have their own aesthetic or feel, so why not use them all? You can use the same prompts and get lots of different artistic styles.

Dr Shama Rahman runs the masterclass Using AI to Enhance Creativity. For more information and future course dates, visit dandad.org/masterclasses/course/ai-creativity/

Creative Bloq's AI Week is held in association with DistinctAI, creators of the new plugin VisionFX 2.0, which creates stunning AI art based on your own imagery – a great new addition to your creative process. Find out more on the DistinctAI website.