On Wednesday, Apple announced in a press release that several exciting new accessibility features are coming to iPhone and iPad, including eye tracking. You will soon be able to control your iPhone or iPad using just your eyes, with no extra hardware or apps required. That's not all: Apple is also introducing Music Haptics, vocal shortcuts, Vehicle Motion Cues, and more.

These accessibility updates come less than a month before WWDC 2024, Apple's developer conference, where it's expected to announce iOS 18 and iPadOS 18. Both could be among Apple's most ambitious OS updates yet, with a particular focus on AI. The accessibility updates revealed on Wednesday include a peek at what might be in store at WWDC in a few weeks.

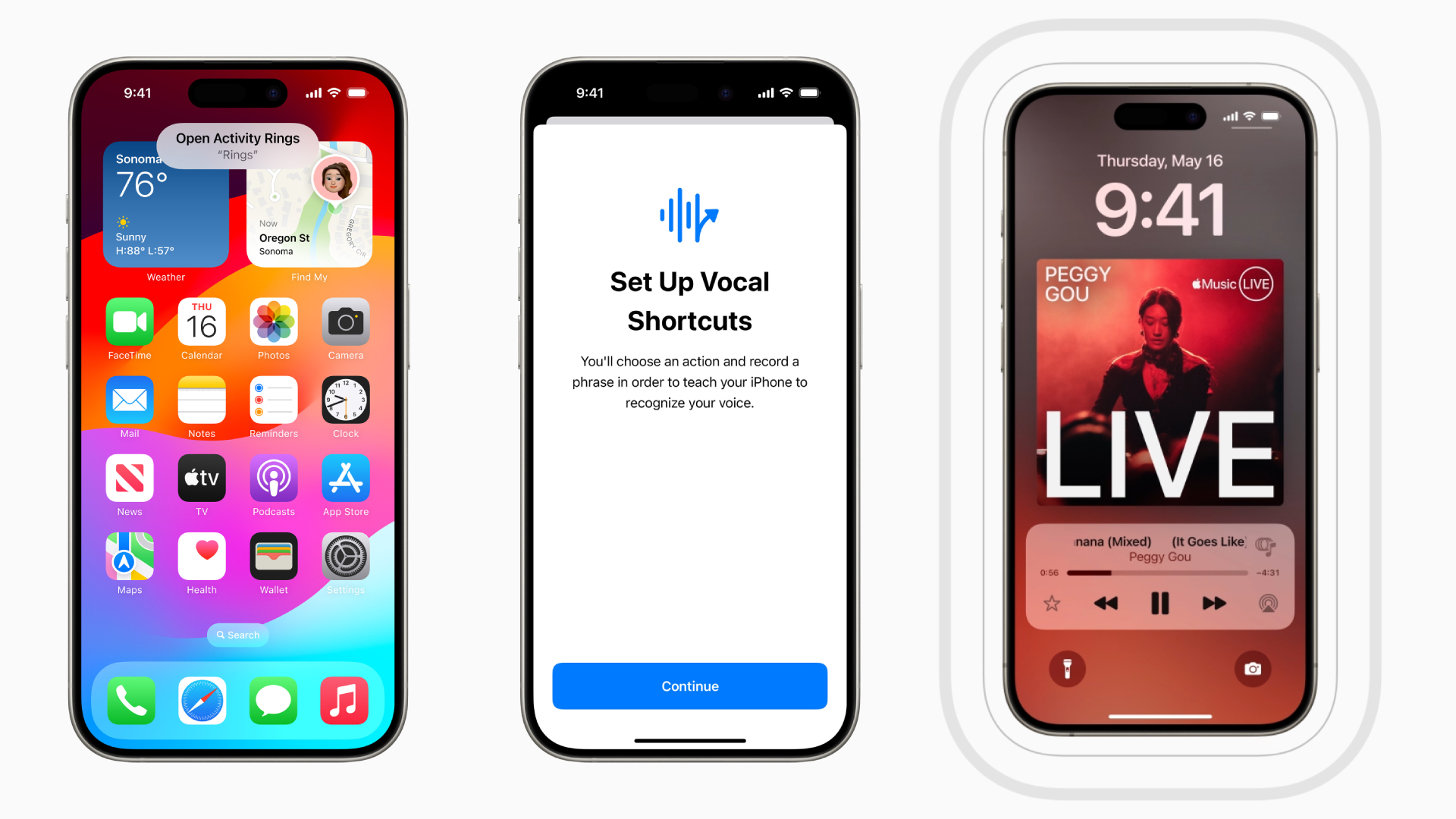

Here's a look at the new accessibility features Apple is introducing and how you can use them.

Apple announces eye tracking control for iPhone and iPad

The most exciting accessibility feature Apple announced on Wednesday is native eye tracking support for iPhone and iPad. You will soon be able to control all of iOS and iPadOS with just your eyes, including third-party apps. While anyone can use eye tracking, it's designed for people with physical disabilities or limited mobility.

Eye tracking in iOS and iPadOS uses on-device machine learning to calibrate to individual users. It's likely the first of many new AI features coming to the iPhone and iPad. Since it uses on-device machine learning, you won't have to worry about any of your eye tracking data going to a data center or the cloud. The downside is that the feature will likely be limited to iPhones and iPads with enough processing power for on-device AI, like the new iPad Pro M4.

Music Haptics, vocal shortcuts, and Vehicle Motion Cues offer quality-of-life updates

Eye tracking is a game changer for accessibility on the iPhone and iPad, but Apple didn't stop there. It also announced Music Haptics, vocal shortcuts for Siri, and Vehicle Motion Cues, as well as a couple of accessibility features for CarPlay and visionOS.

Music Haptics will allow people with hearing challenges to experience music through haptics. Using the iPhone's Taptic Engine, Music Haptics creates taps and vibrations coordinated to music tracks so people who are deaf or hard of hearing can feel music even if they can't listen to it. Music Haptics will be available first on Apple Music, but Apple is also releasing an API that will let third-party developers add it to their own apps, such as Pandora or Spotify.

Vocal shortcuts are a convenient new feature coming to iPhone and iPad that allow you to train Siri to perform tasks using custom phrases. You can create your own action and key phrase combos, which don't need to include Siri. For instance, you could train Siri to open the Apple News app when you say, "show me the news."

This feature is reminiscent of the handful of AI assistant devices that have come out recently, like the Rabbit R1. If Apple unveils even more features like this, it could easily make dedicated AI devices obsolete. It's especially interesting that vocal shortcuts are tied to Siri. Apple is expected to announce major changes to its virtual assistant at WWDC 2024, so it will be exciting to see how vocal shortcuts and other new AI features evolve with the new version of Siri.

Vehicle Motion Cues is a more niche accessibility feature, but it could come in handy for anyone who suffers from motion sickness. This feature uses moving dots around the edges of your iPhone or iPad screen to tell your eyes which way the vehicle is moving.

Motion sickness is often caused by a disconnect between what your eyes are seeing and the movement your body is experiencing. The motion of the dots around the edge of your screen attempts to repair that disconnect by telling your eyes which way you're moving, helping to prevent motion sickness when you're looking at your screen.

Speaking of cars, Apple CarPlay is also getting a minor but helpful update with the addition of voice control. You will be able to use verbal commands to control CarPlay, reducing the need to look away from the road to touch your car's screen. VisionOS is also getting a new accessibility feature: system-wide live captions. This feature can show captions for any audio on the Vision Pro, including FaceTime calls.

These features are a peek at what's coming up from Apple at WWDC 2024. We're expecting many more new AI features in iOS 18 and iPadOS 18. Apple will likely unveil both OS updates at WWDC, but it tends to roll out updates to users in the fall. So, you might have to wait until September to try out these new accessibility features and anything else announced at WWDC.

We'll be covering the latest news and rumors from Apple leading up to WWDC, so stay tuned for more details, info, and insights.