AMD's Instinct MI300 is shaping up to be an incredible chip with CPU and GPU cores and a hefty slab of high-speed memory brought together on the same processor, but details have remained scarce. Now we've gathered new information from an International Supercomputing Conference (ISC) 2023 presentation that outlines the coming two-exaflop El Capitan supercomputer that will be powered by the Instinct MI300. We also found more in a keynote from AMD's CTO Mark Papermaster at ITF World 2023, a conference hosted by research giant imec (you can read our interview with Papermaster here).

The El Capitan supercomputer is poised to be the fastest in the world when it powers on in late 2023, taking the leadership position from the AMD-powered Frontier. AMD's powerful Instinct MI300 will power the machine, and new details include a topology map of an MI300 installation, pictures of AMD's Austin MI300 lab, and a picture of the new blades that will be employed in the El Capitan supercomputer. We'll also cover some of the other new developments around the El Capitan deployment.

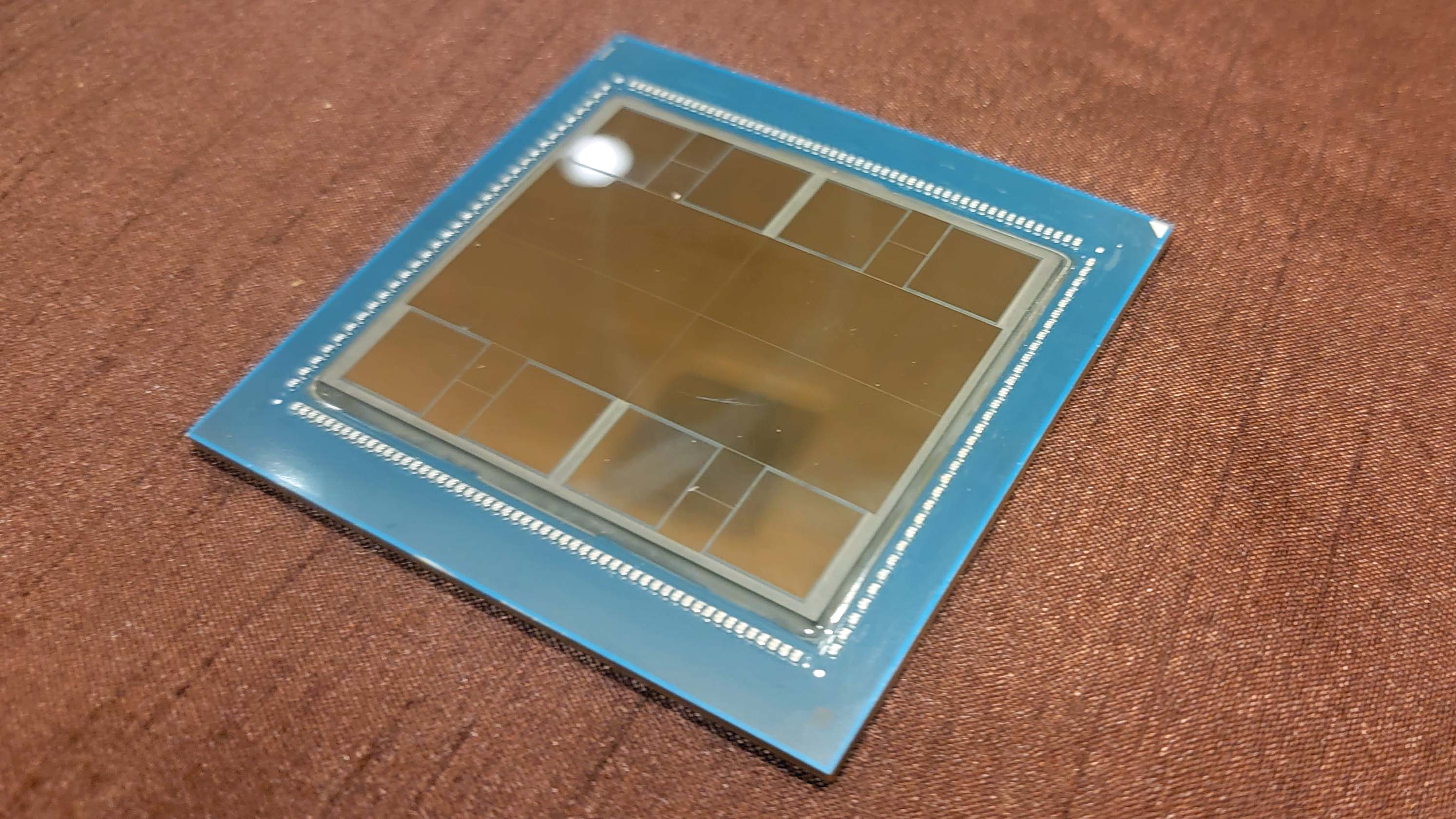

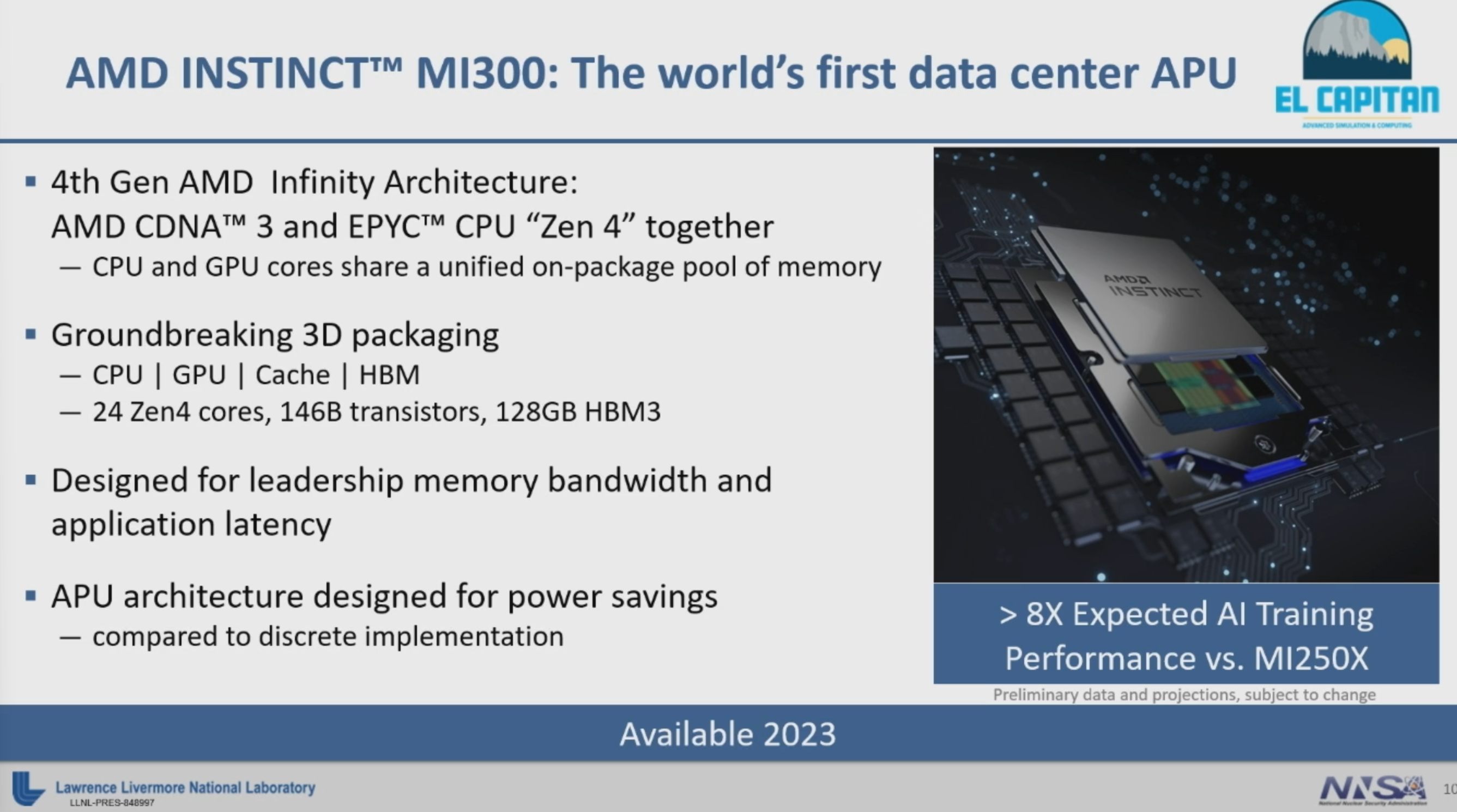

As a reminder, the Instinct MI300 is a data center APU that blends a total of 13 chiplets, many of them 3D-stacked, to create a single chip package with twenty-four Zen 4 CPU cores fused with a CDNA 3 graphics engine and eight stacks of HBM3 memory totaling 128GB. Overall the chip weighs in with 146 billion transistors, making it the largest chip AMD has pressed into production. The nine compute dies, a mix of 5nm CPUs and GPUs, are 3D-stacked atop four 6nm base dies that are active interposers that handle memory and I/O traffic, among other functions.
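The package figures above can be cross-checked with a bit of arithmetic. This is a minimal sketch using only the numbers quoted in this article; the variable names are our own, and the per-stack HBM3 capacity assumes all eight stacks are the same size, which AMD has not confirmed here.

```python
# Figures quoted in the article for the Instinct MI300 package.
compute_dies = 9       # mix of 5nm CPU and GPU dies
base_dies = 4          # 6nm active interposers
hbm3_stacks = 8
hbm3_total_gb = 128

# The 13 chiplets are the compute dies plus the base dies.
total_chiplets = compute_dies + base_dies

# Assumption: capacity is split evenly across the eight HBM3 stacks.
gb_per_stack = hbm3_total_gb // hbm3_stacks

print(total_chiplets)  # 13
print(gb_per_stack)    # 16
```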

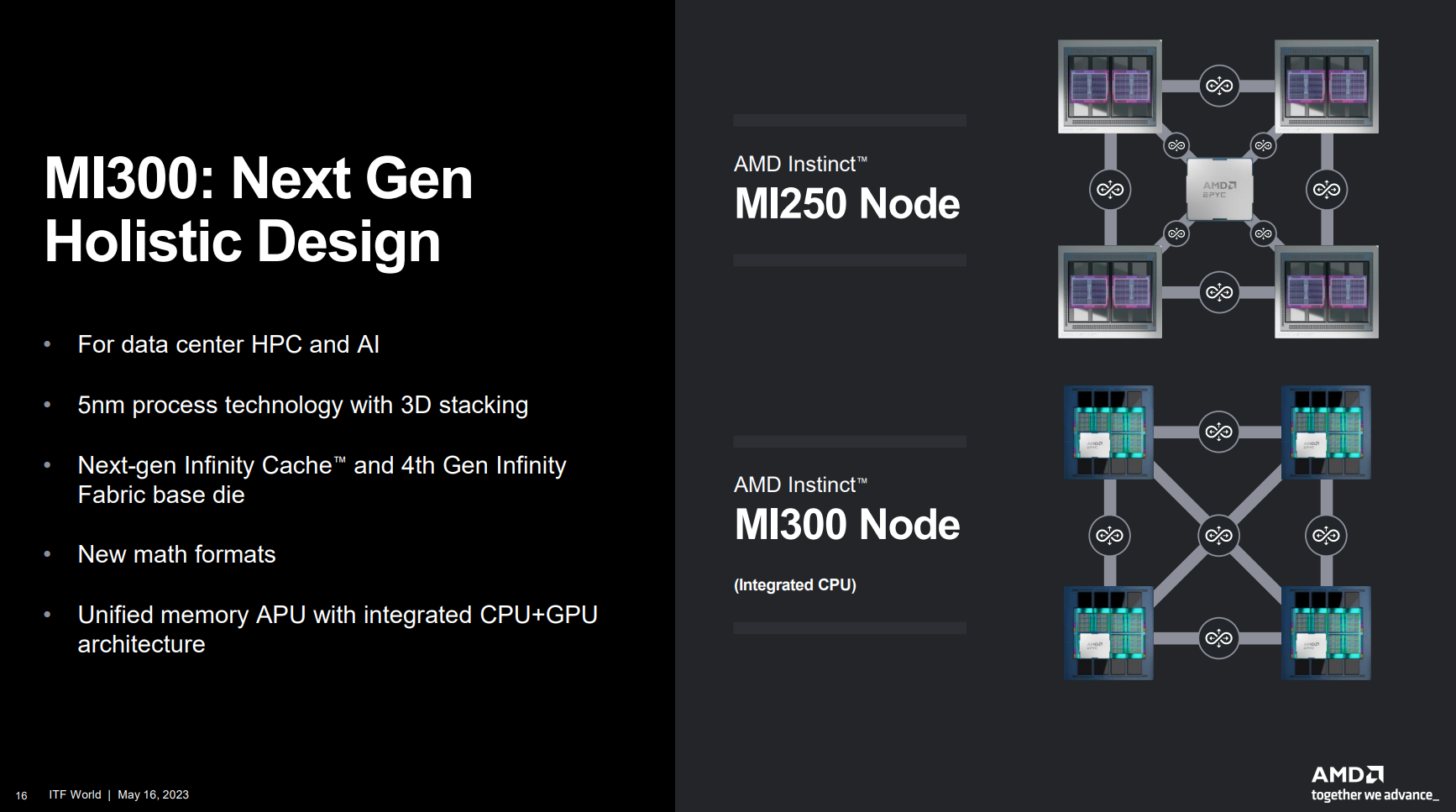

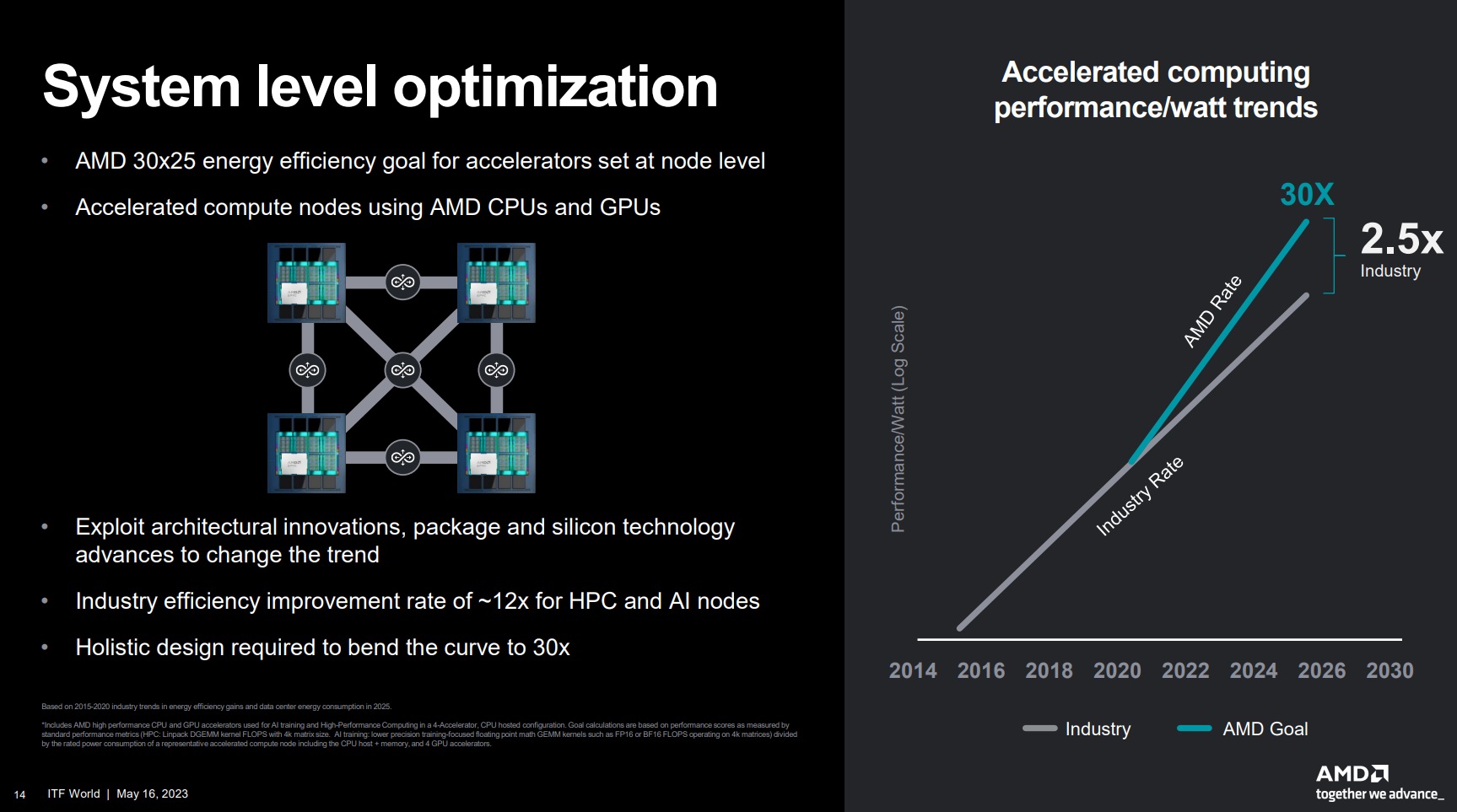

Papermaster's ITF World keynote focused on AMD's "30x25" goal of increasing power efficiency by 30x by 2025, and how computing is now being gated by power efficiency as Moore’s Law slows. Key to that initiative is the Instinct MI300, and much of its gains come from the simplified system topology you see above.

As you can see in the first slide, an Instinct MI250-powered node has separate CPUs and GPUs, with a single EPYC CPU in the middle to coordinate the workloads.

In contrast, the Instinct MI300 contains a built-in 24-core fourth-gen EPYC Genoa processor inside the package, thus removing the standalone CPU from the equation. The same overall topology remains, sans the standalone CPU, enabling a fully-connected all-to-all topology with four elements. This type of connection allows all of the processors to speak to each other directly without another CPU or GPU serving as an intermediary to relay data to the other elements, thus reducing latency and variability. Relaying data through an intermediary is a potential pain point with the MI250 topology. The MI300 topology map also indicates that each chip has three connections, just as we saw with MI250. Papermaster's slides also refer to the active interposers that form the base dies as the "fourth-gen Infinity Fabric base die."
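To see why three connections per chip are exactly what a four-element all-to-all topology needs, here is a minimal sketch that builds such a graph and counts the links. The APU labels and helper functions are hypothetical and purely illustrative; they do not come from AMD's slides.

```python
from itertools import combinations


def fully_connected(nodes):
    """Return the set of direct links in an all-to-all topology."""
    return {frozenset(pair) for pair in combinations(nodes, 2)}


def links_per_node(nodes, links):
    """Count how many direct links touch each node."""
    return {n: sum(1 for link in links if n in link) for n in nodes}


# Hypothetical labels for the four MI300 APUs in one node.
apus = ["apu0", "apu1", "apu2", "apu3"]
links = fully_connected(apus)
degree = links_per_node(apus, links)

print(len(links))  # 6 direct links in total
print(degree)      # each APU has 3 links, one to every other APU
```

With every pair linked directly, any APU reaches any other in a single hop, which is the property that removes the intermediary relay described above.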

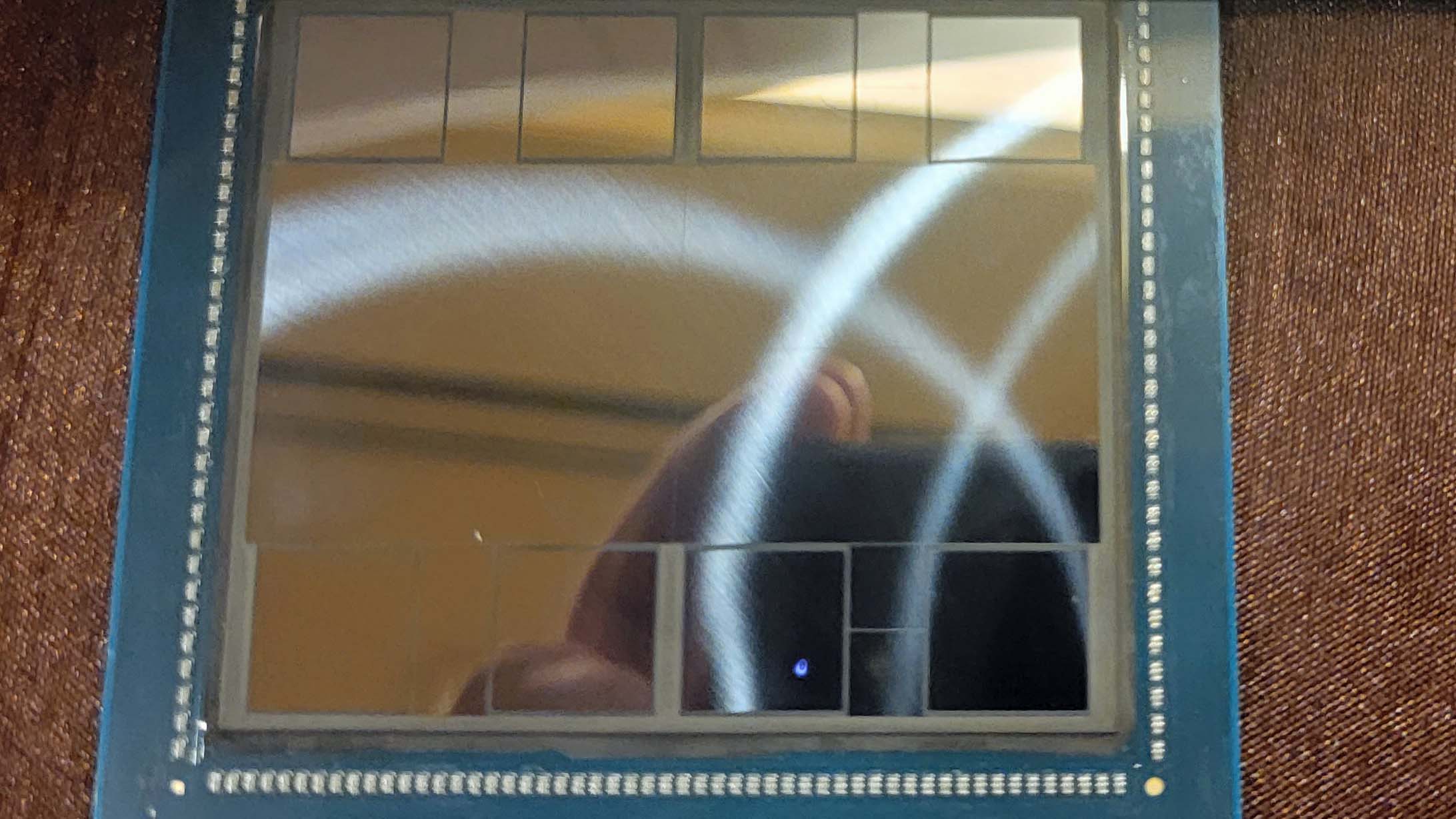

As you can see in the remainder of these slides, the MI300 has placed AMD on a clear path to exceeding its 30x25 efficiency goals while also outstripping the industry power trend. We also threw in a few pictures of the Instinct MI300 silicon we saw firsthand, but below we see how the MI300 looks inside an actual blade that will be installed in El Capitan.

AMD Instinct MI300 in El Capitan

At ISC 2023, Bronis R. de Supinski, the CTO for the Lawrence Livermore National Laboratory (LLNL), spoke about integrating the Instinct MI300 APUs into the El Capitan supercomputer. The National Nuclear Security Administration (NNSA) will use El Capitan to further military uses of nuclear tech.

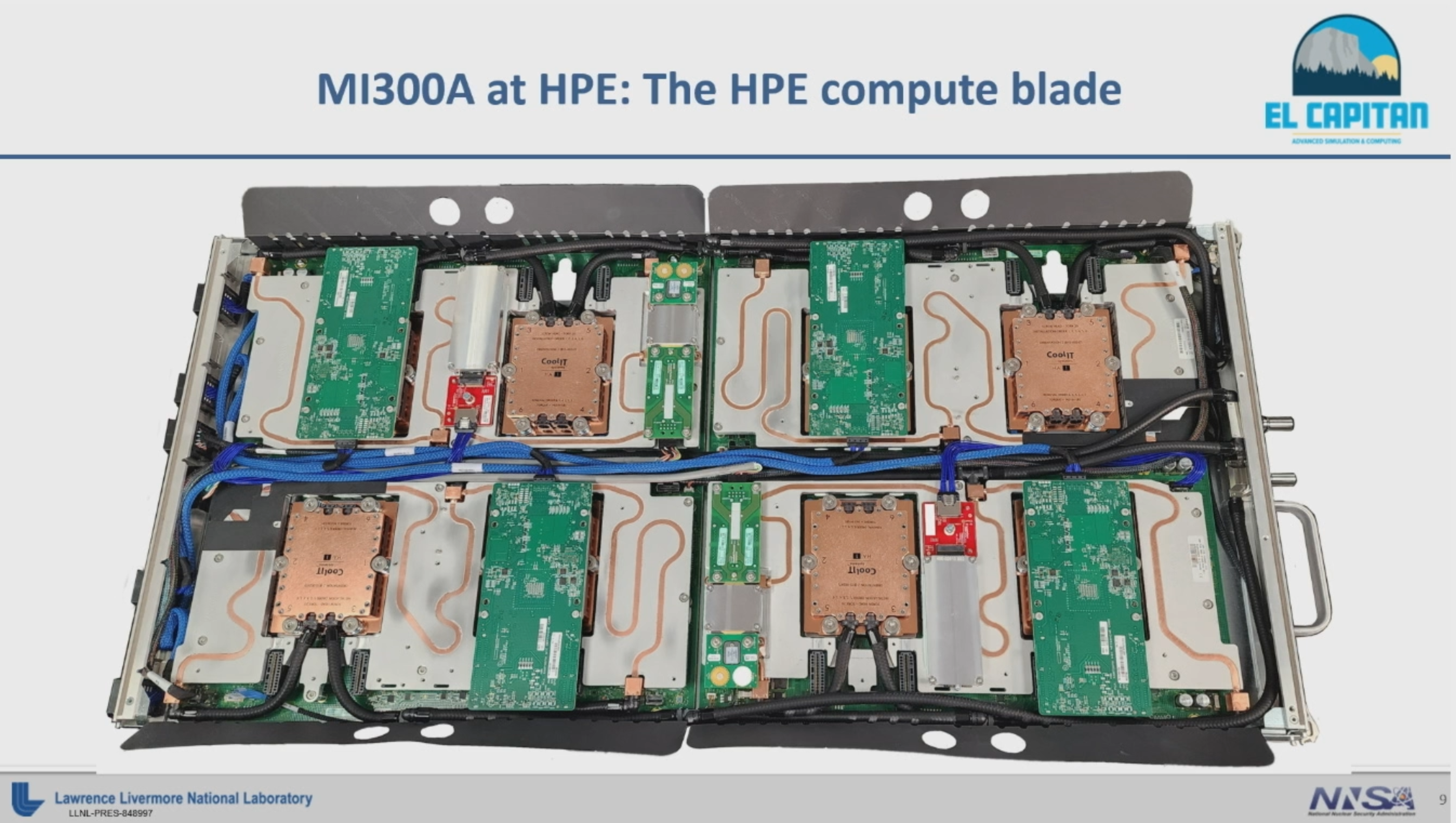

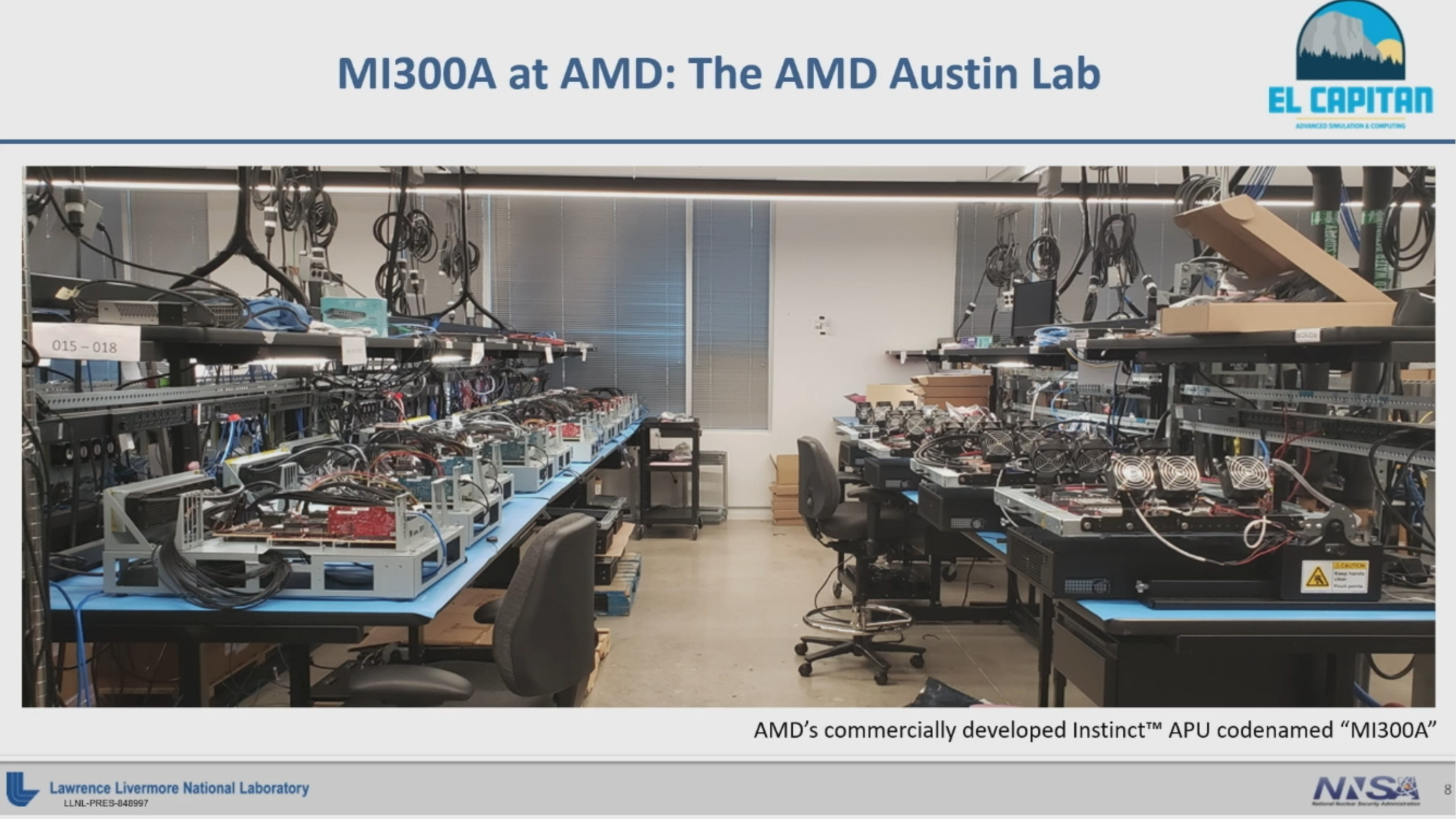

As you can see in the first image above, Supinski showed a single blade for the El Capitan system. This blade, made by system vendor HPE, features four liquid-cooled Instinct MI300 cards in a slim 1U chassis. Supinski also showed a picture of AMD's Austin lab, where the company has working MI300 silicon, thus showing that the chips are real and already under testing. That's a key point considering some of the recent missteps with Intel-powered systems.

Supinski often referred to the MI300 as the "MI300A," but we aren't sure if that is a custom model for El Capitan or a more formal product number.

Supinski said the chip comes with an Infinity Cache but didn't specify the capacity available. Supinski also cited the importance of the single memory tier multiple times, noting how the unified memory space simplifies programming, as it reduces the complexities of data movement between different types of compute and different pools of memory.

Supinski noted that the MI300 can run in several different modes, but the primary mode consists of a single memory domain and a single NUMA domain, thus providing uniform memory access for all the CPU and GPU cores. The key takeaway is that the cache-coherent memory reduces data movement between the CPU and GPU, which often consumes more power than the computation itself, thus reducing latency and improving performance and power efficiency. Supinski also said it was relatively easy to port code from the Sierra supercomputer to El Capitan.

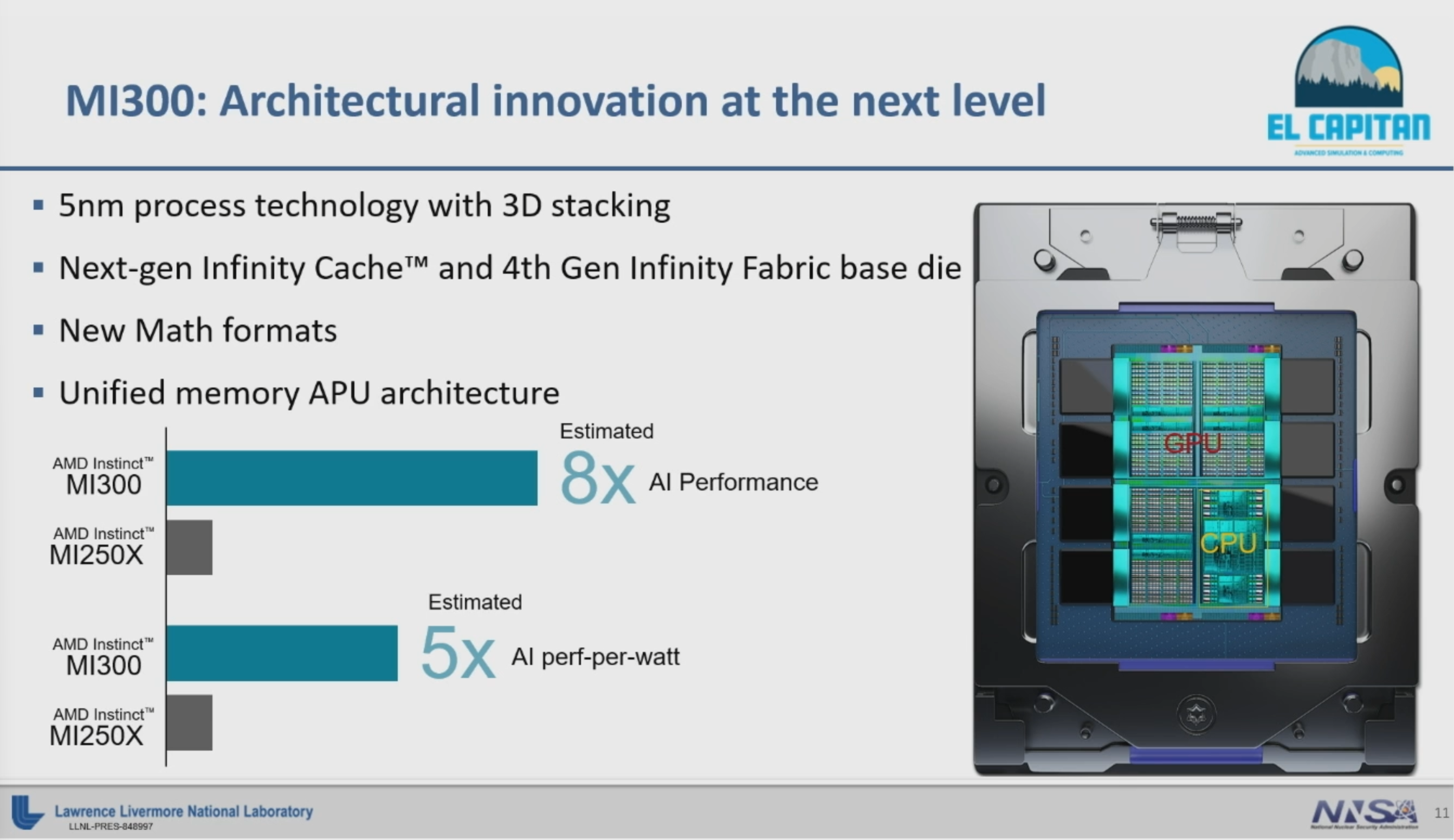

The remainder of Supinski's slides includes information AMD has already disclosed, including performance projections of 8X the AI performance and 5X the performance-per-watt of the MI250X.

HPE is building the El Capitan system based on its Shasta architecture and Slingshot-11 networking interconnect. This is the same platform that powers both of the DOE's other exascale supercomputers, Frontier, the fastest supercomputer in the world, and the oft-delayed Aurora that's powered by Intel silicon.

The NNSA had to build more infrastructure to operate the Sierra supercomputer and El Capitan simultaneously. That work included bolstering the power delivery dedicated to compute from 45 MW to 85 MW. An additional 15 MW of power is available for the cooling system, which has been upgraded to 28,000 tons by adding a new 18,000-ton cooling tower. That gives the site a total of 100 MW of power. El Capitan is expected to consume under 40 MW, and the actual value could be around 30 MW, but the final numbers won't be known until deployment.
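The site figures are easy to sanity-check. The sketch below uses only the numbers quoted above; the variable names are ours, the pre-upgrade cooling capacity is inferred by subtraction rather than stated by LLNL, and the headroom figure treats the "under 40 MW" estimate as an upper bound.

```python
# Power figures quoted in the article, in megawatts.
compute_mw_before = 45
compute_mw_after = 85
cooling_mw = 15

site_total_mw = compute_mw_after + cooling_mw
added_compute_mw = compute_mw_after - compute_mw_before

# Cooling figures quoted in the article, in tons.
cooling_total_tons = 28_000
new_tower_tons = 18_000
# Inferred, not stated in the source: capacity before the new tower.
prior_cooling_tons = cooling_total_tons - new_tower_tons

# Assumption: El Capitan draws at most its 40 MW estimate.
el_capitan_max_mw = 40
compute_headroom_mw = compute_mw_after - el_capitan_max_mw

print(site_total_mw)        # 100
print(added_compute_mw)     # 40
print(prior_cooling_tons)   # 10000
print(compute_headroom_mw)  # 45
```

The remaining compute headroom is what is left for Sierra and any other systems on the same power budget.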

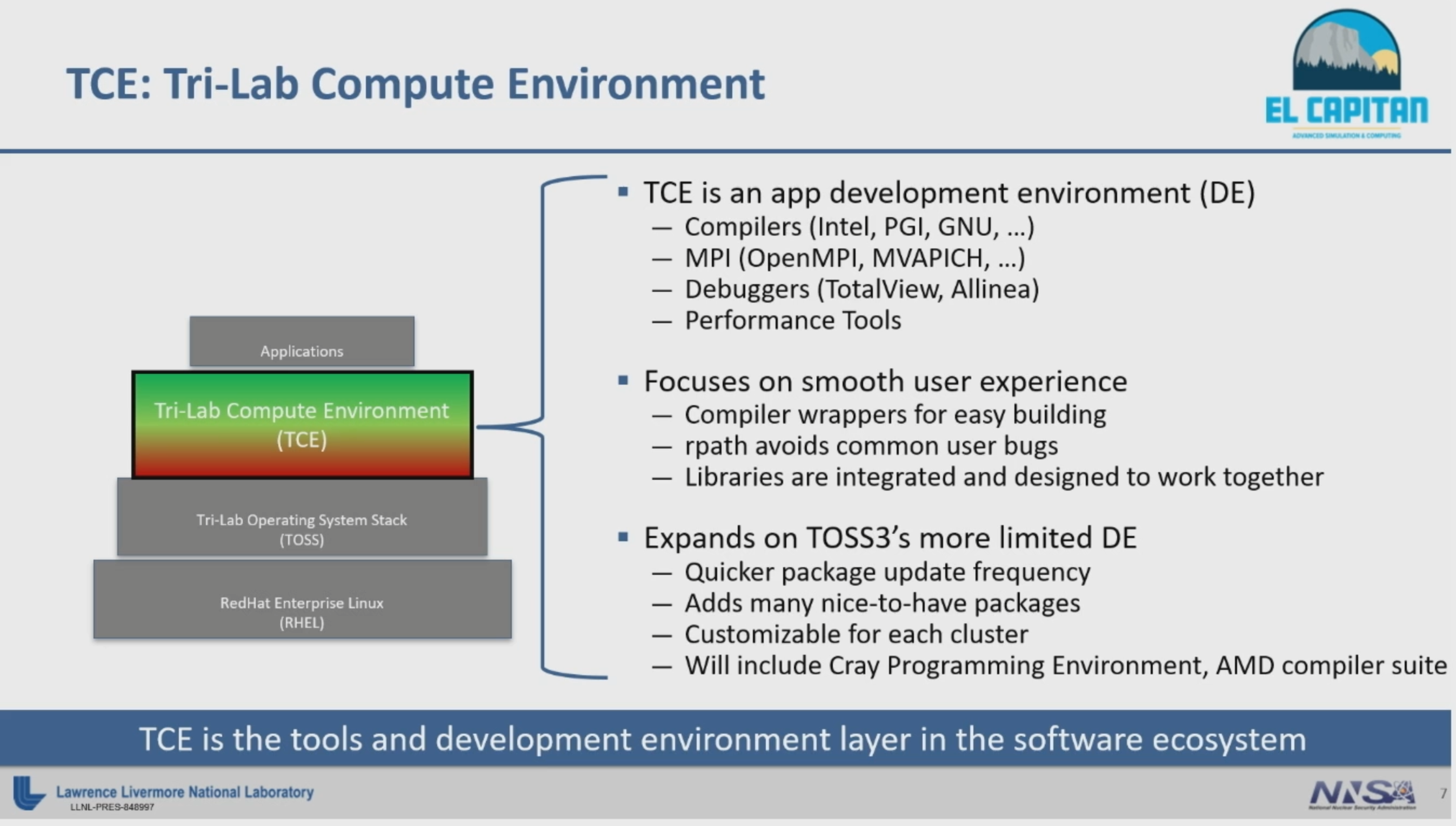

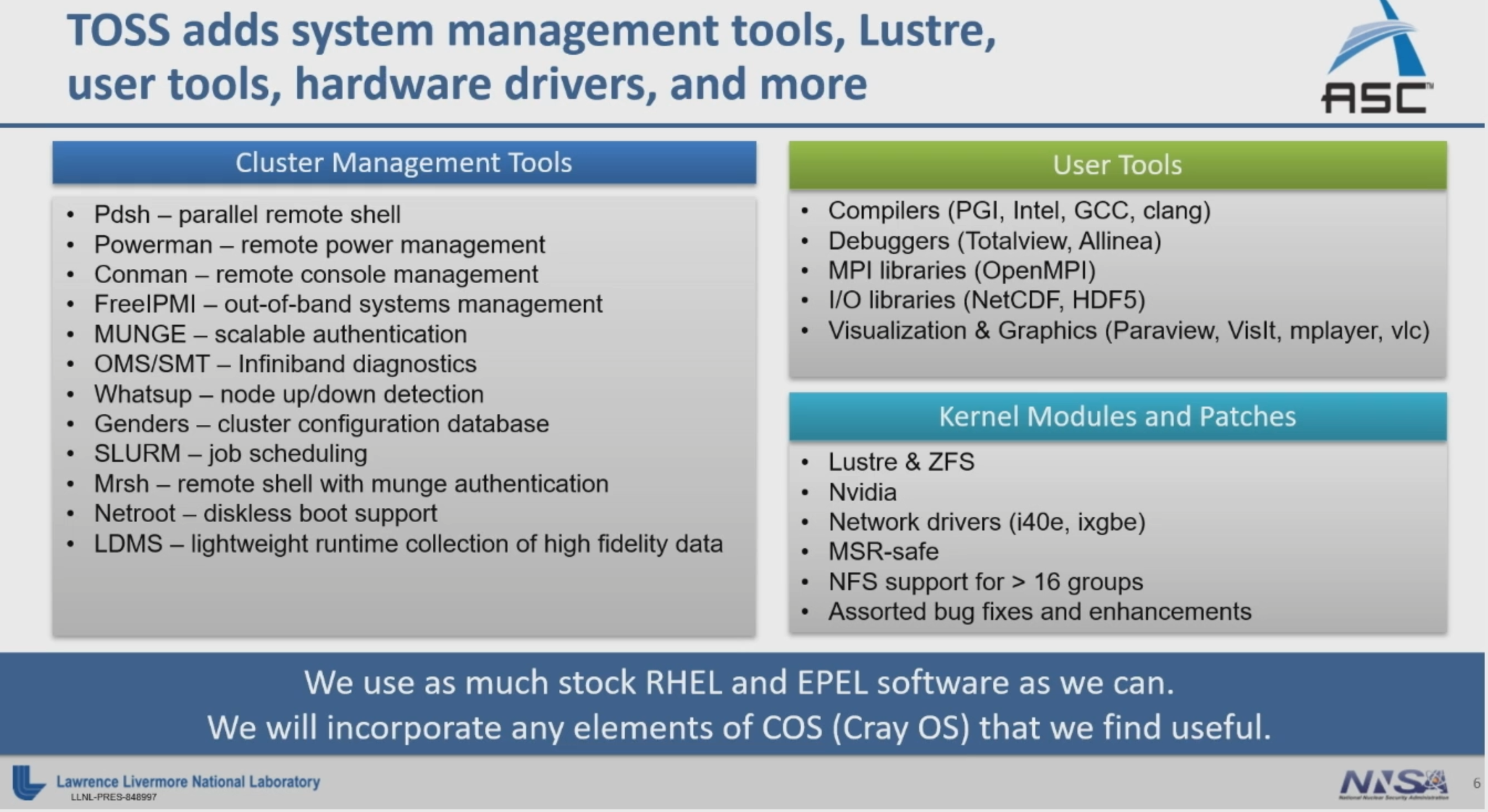

El Capitan will be the first Advanced Technology System (ATS) that uses NNSA's custom Tri-lab Operating System Software (TOSS), a full software stack built on RHEL.

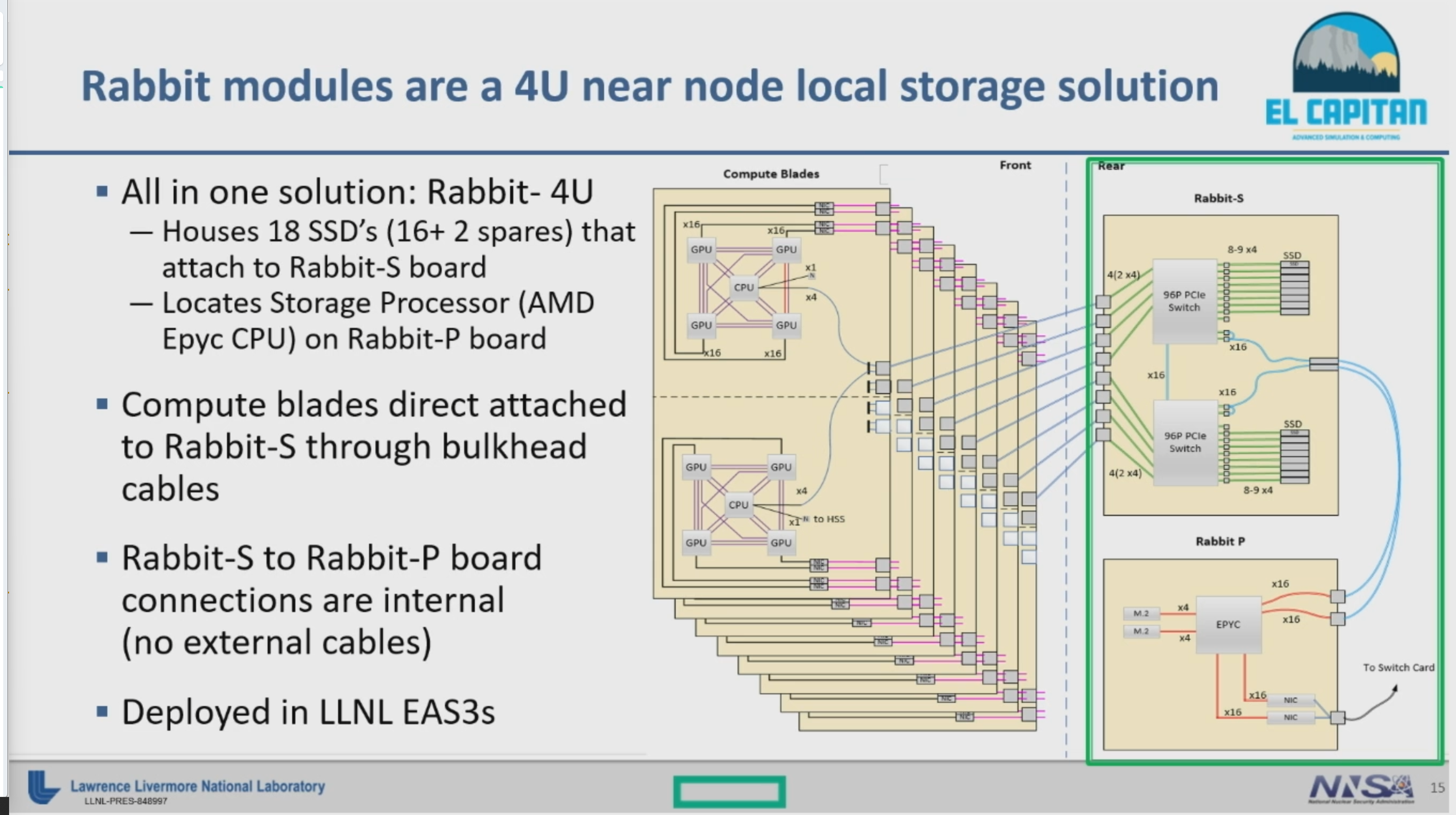

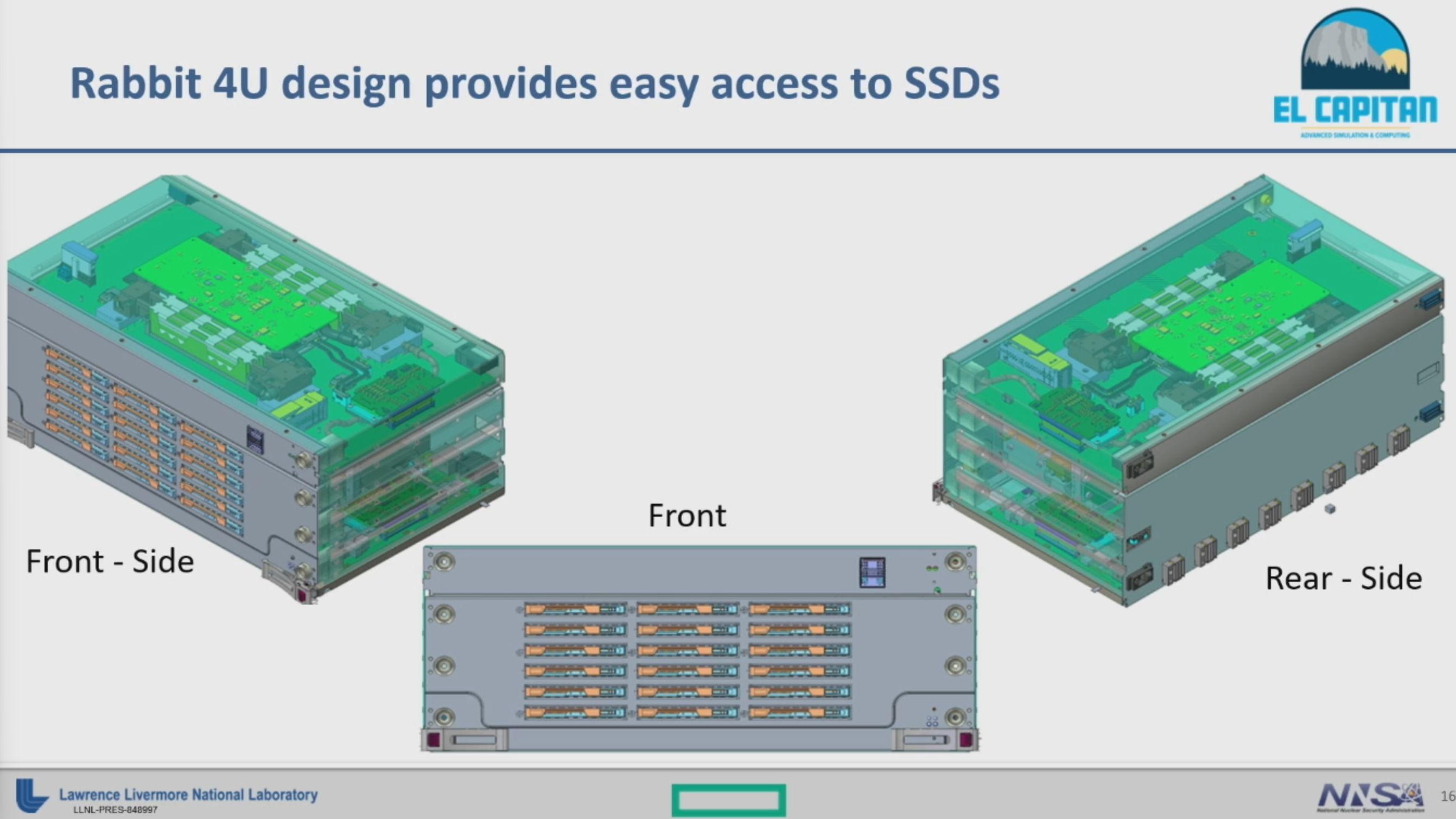

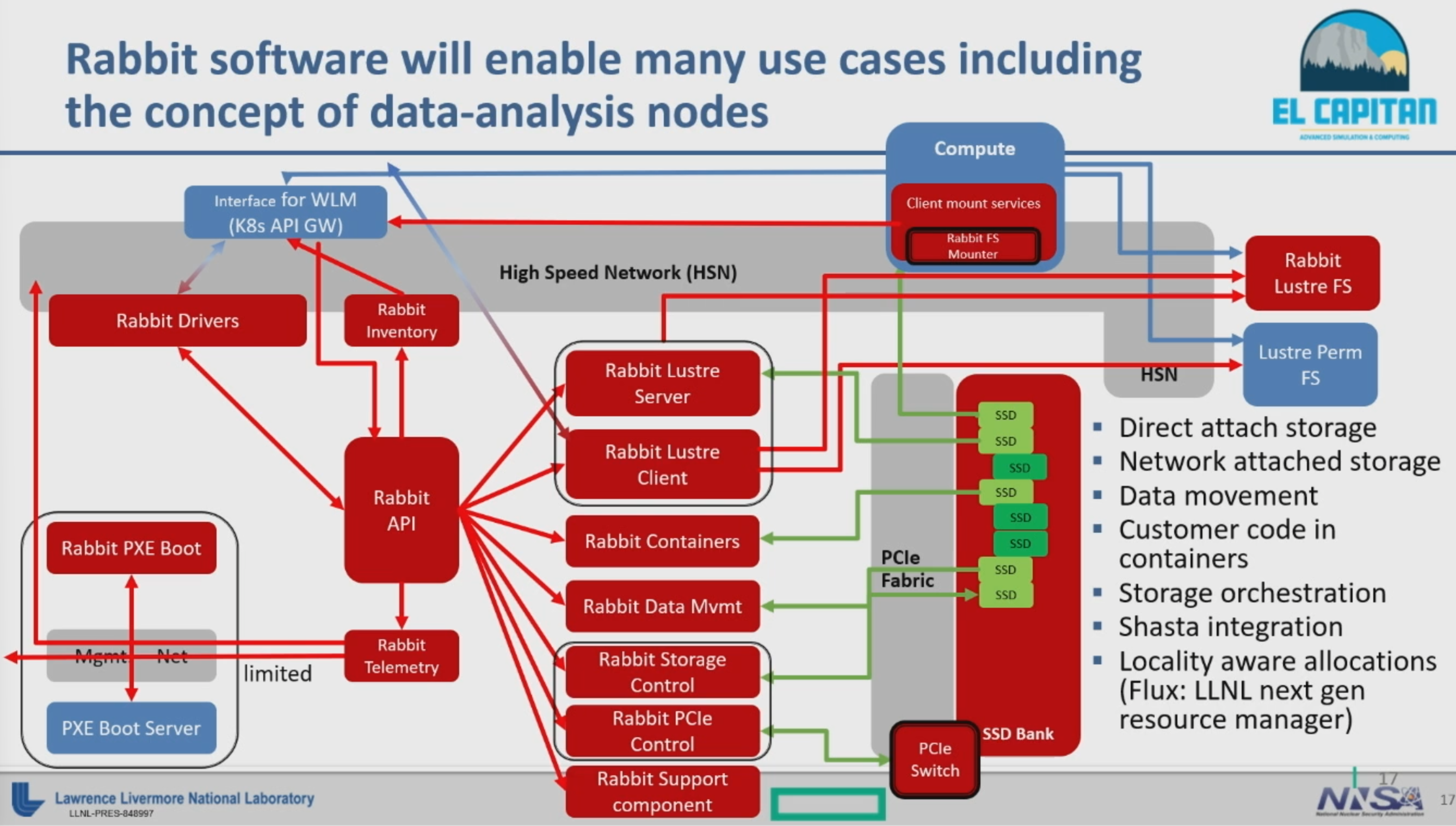

El Capitan's Rabbit Program for Storage

LLNL is using smaller 'EAS3' systems to prove out the software that will be deployed on El Capitan when it becomes operational later this year. LLNL is already testing new Rabbit modules that will host a plethora of SSDs for near-node local storage. Above, you can see the block diagrams of these nodes, but be aware that they don't use the MI300 accelerators. Instead, they have standard EPYC server processors for storage orchestration and data analytics tasks. These fast nodes appear to serve as burst buffers that absorb massive amounts of incoming data quickly, which will then be shuffled off to the slower bulk storage system.

AMD Instinct MI300 Timeline

With development continuing on a predictable cadence, it's clear that El Capitan is well on its way to being operational later this year. The MI300 forges a new path for AMD's high-performance compute offerings, but AMD tells us these halo MI300 chips will be expensive and relatively rare. These are not high-volume products, so they won't see wide deployment like the EPYC Genoa data center CPUs. However, the tech will filter down to multiple variants in different form factors.

This chip will also vie with Nvidia's Grace Hopper Superchip, which is the combination of a Hopper GPU and the Grace CPU on the same board. These chips are expected to arrive this year. The Neoverse-based Grace CPUs support the Arm v9 instruction set, and systems come with two chips fused together with Nvidia's newly branded NVLink-C2C interconnect tech. In contrast, AMD's approach is designed to offer superior throughput and energy efficiency, as combining these devices into a single package typically enables higher throughput between the units than when connecting to two separate devices like Grace Hopper does.

The MI300 was also supposed to compete with Intel's Falcon Shores, a chip that was initially designed to feature a varying number of compute tiles with x86 cores, GPU cores, and memory in numerous possible configurations. Intel recently delayed the chips to 2025 and redefined them to feature a GPU and AI architecture only, so they will no longer feature CPU cores. In effect, that leaves Intel without a direct competitor for the Instinct MI300.

Given the rapidly approaching power-on date for El Capitan and AMD's reputation for getting supercomputers done on time, we can expect AMD to begin sharing much more information about its Instinct MI300 APUs soon. AMD will host the company's Next-Generation Data Center and AI Technology livestream event on June 13, and we expect to learn more there. We'll be sure to bring you the latest from that event when it arrives.