A new research paper by scientists at Meta and the University of Oxford outlines a powerful AI-driven technique for building scalable 3D generative models. VFusion3D addresses the scarcity of 3D data for AI training and content generation. Rather than relying on large collections of existing 3D models, VFusion3D builds on a model trained on text, images, and videos.

The researchers claim that VFusion3D “can generate a 3D asset from a single image in seconds,” with high-quality, faithful results. If the generated 3D assets are up to snuff, they could save a lot of work in the gaming, VR, and design industries.

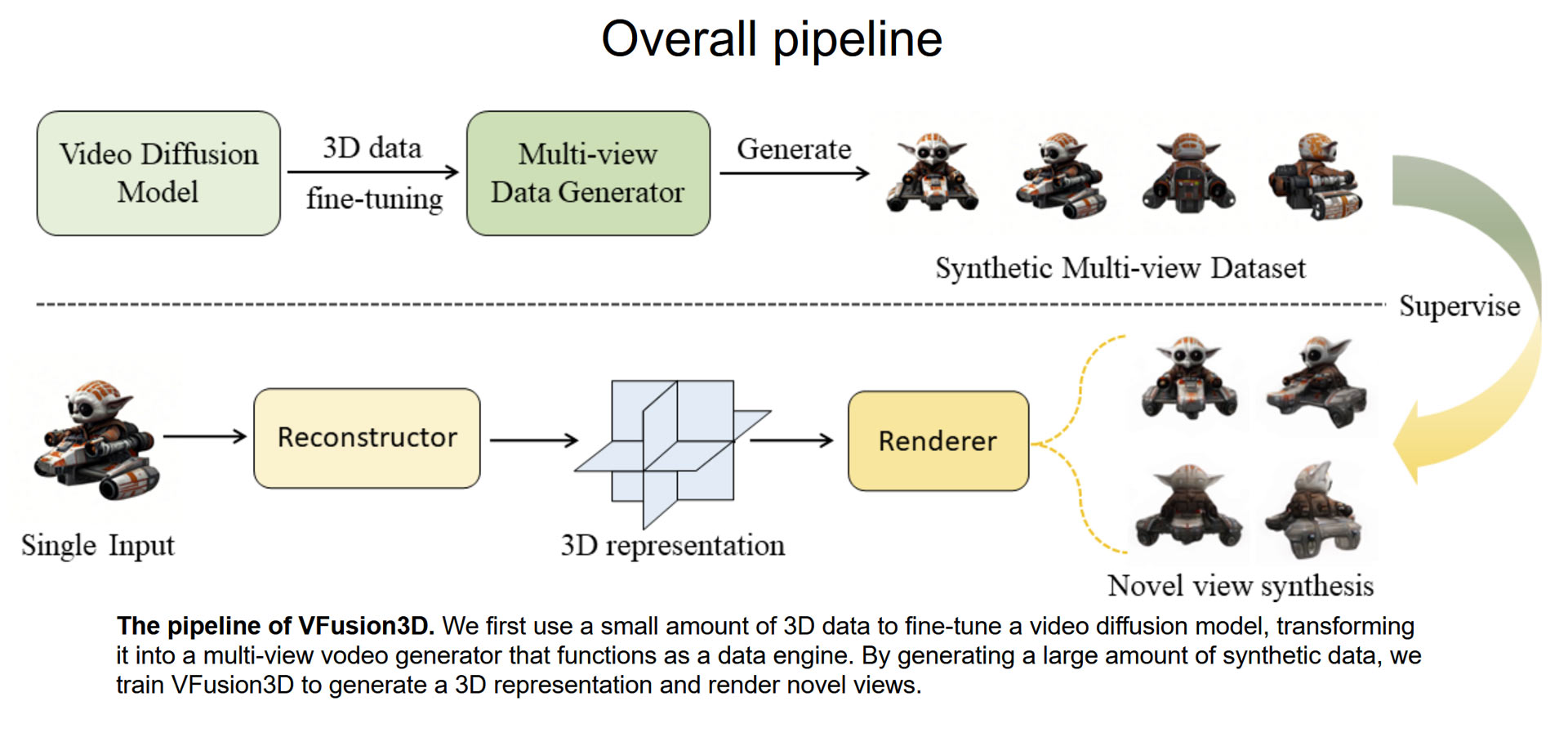

The research team, led by Junlin Han, Filippos Kokkinos, and Philip Torr, explains the pipeline it designed for VFusion3D, shown in the diagram above. The pipeline uses a small amount of 3D data to fine-tune a video diffusion model. Videos are a good source for this pipeline because they often show an object from several angles, which helps produce faithful 3D reproductions.

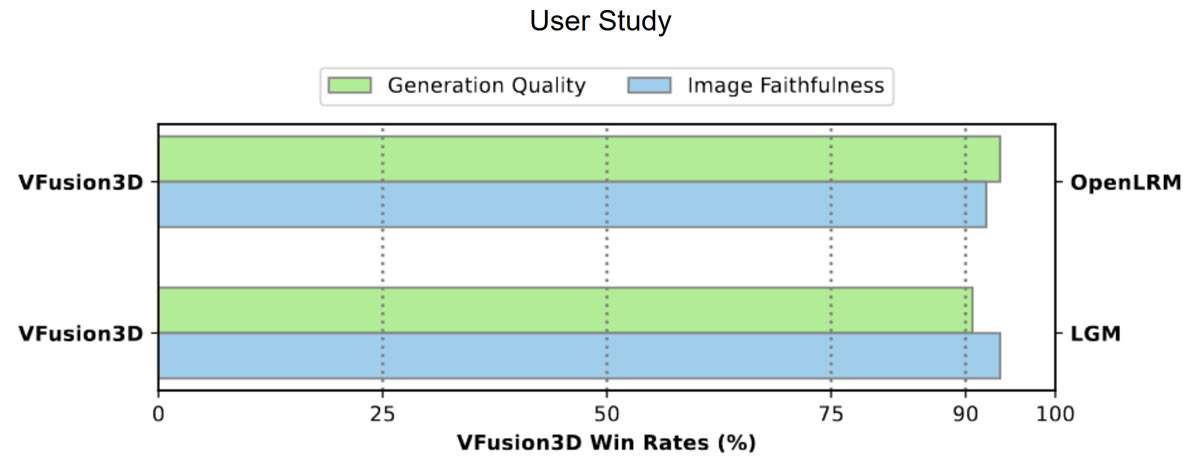

The researchers chose a video model dubbed EMU Video because it was trained on a mix of videos, including panning shots of objects and drone footage. As they say, such video sources “inherently contain cues about the 3D world.” The result is VFusion3D, which the researchers say can “generate high-quality 3D assets from a single image” irrespective of viewing angle. According to the paper, a user study backs up these claims.

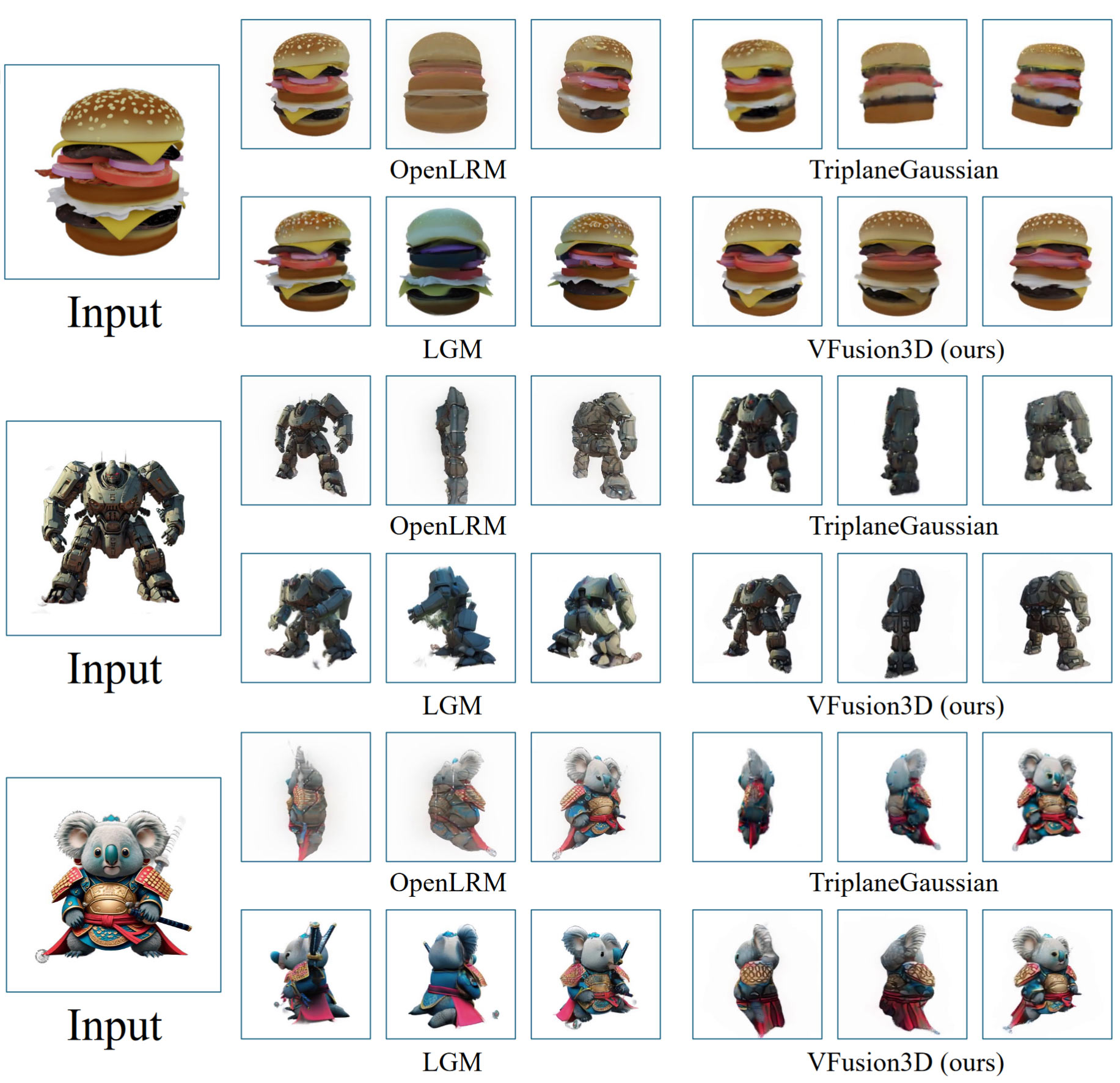

In addition to testing their new model, the scientists compared it against rival distillation-based and feed-forward 3D generative models. After all, this isn’t the first tool to target this task. Meta’s Junlin Han highlights the comparative quality and performance of VFusion3D on a GitHub project page. The same page shows a selection of animated objects generated by VFusion3D and its rivals, each next to the source (input) image. Several comparisons are included in our gallery.

If you are interested in testing VFusion3D, an online demo is available. In it, you can generate and download a 3D model from one of the example images or even upload your own source image. At the time of writing, the demo wasn’t responding, as it was “busy.”