Sora is here, and it joins o1 as one of the major announcements from OpenAI so far in its 12-day extravaganza. Between now and December 20 we're expecting something new every day: some smaller, like reinforcement fine-tuning, and some big, like Sora.

I've been a journalist for more than two decades and have covered all kinds of events and announcements, but a tech company embracing the '12 days of Christmas' as a theme is a new one for me.

In CEO Sam Altman's own words, the company will "have a livestream with a launch or demo, some big ones and some stocking stuffers." I suspect there will be more stocking fillers than major features, but time will tell.

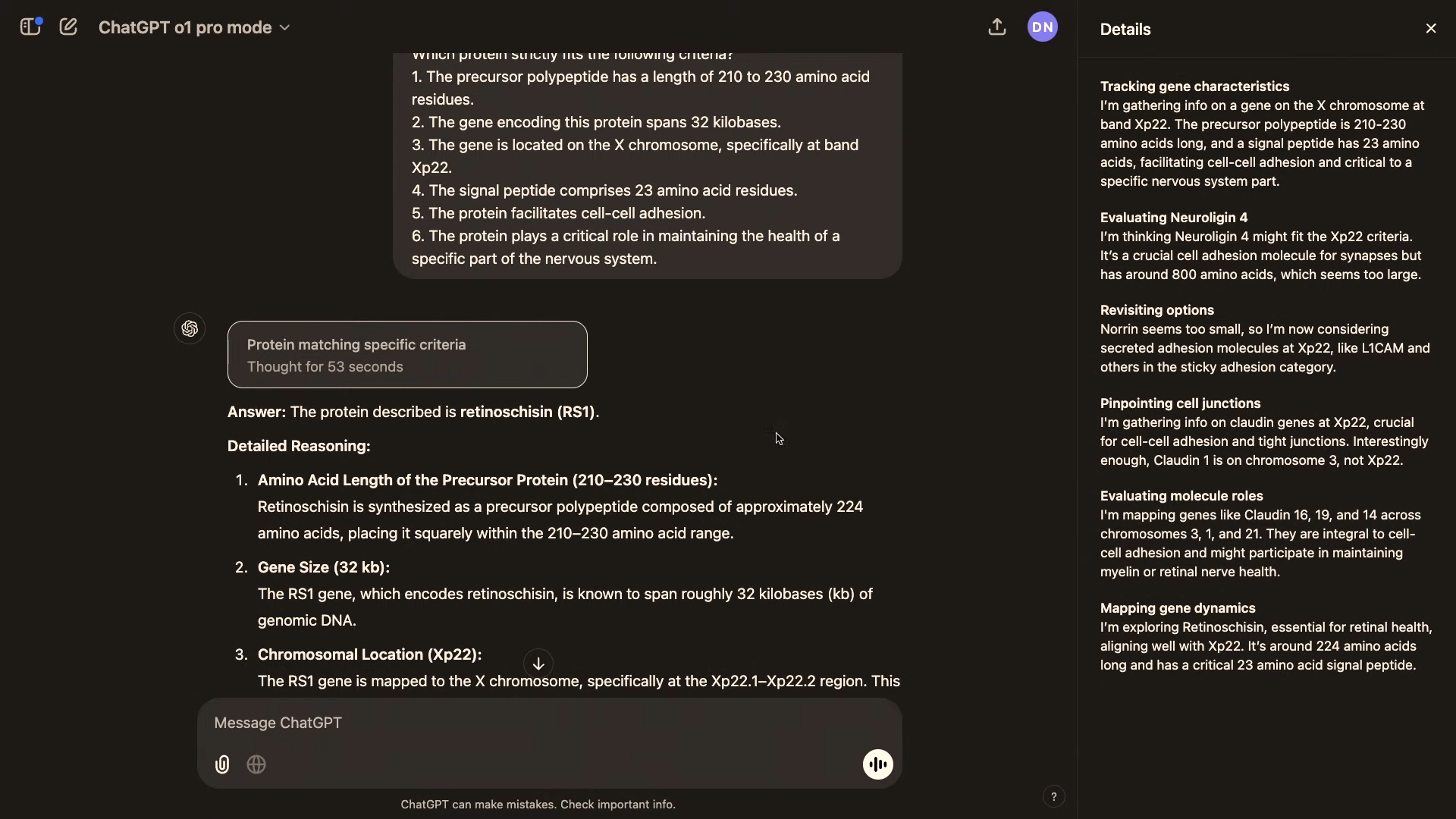

So far we've seen the launch of the o1 reasoning model and a new o1 Pro model, available through a new $200-per-month ChatGPT Pro subscription. We then got something for research scientists on Friday in the form of reinforcement fine-tuning of models.

Finally, Sora dropped yesterday, bringing a new major player into the AI video space. The big surprise was the launch of a dedicated sora.com platform, the first major consumer product from OpenAI since ChatGPT.

There are still eight announcements to go and with so much to cover — we're doing it live.

12 Days of OpenAI: Biggest News So Far

- OpenAI launches Sora: OpenAI's artificial intelligence video generation tool, Sora, is official and enables you to generate videos and images in nearly any style from realistic to abstract. It's a whole new product for the company on a separate page from ChatGPT.

- Fine-tuning AI models: In a roundtable, OpenAI devs focused on reinforcement fine-tuning, a way to tailor AI models to complex, domain-specific tasks in fields like science, finance and medicine.

- ChatGPT Pro Tier: Sam Altman and his roundtable also announced a Pro tier of ChatGPT, meant for scientific research and complex mathematical problem solving, that you can get for $200 a month (it also comes with unlimited o1 use and unlimited Advanced Voice).

- ChatGPT o1 model: OpenAI's 12 Days of OpenAI kicked off with a rather awkward roundtable live session where Altman and his team announced that the o1 reasoning model is now fully released and no longer in preview.

Live updates

OpenAI continues its 12 Days release event

OpenAI is releasing something new every weekday until December 20 and so far the focus has been on the advanced research and coding side of artificial intelligence.

CEO Sam Altman says there will be something for everyone, with some big and some "stocking stuffer" style announcements. With day one giving us the full version of o1 and day two offering a way for scientists to fine-tune o1, that promise is bearing fruit.

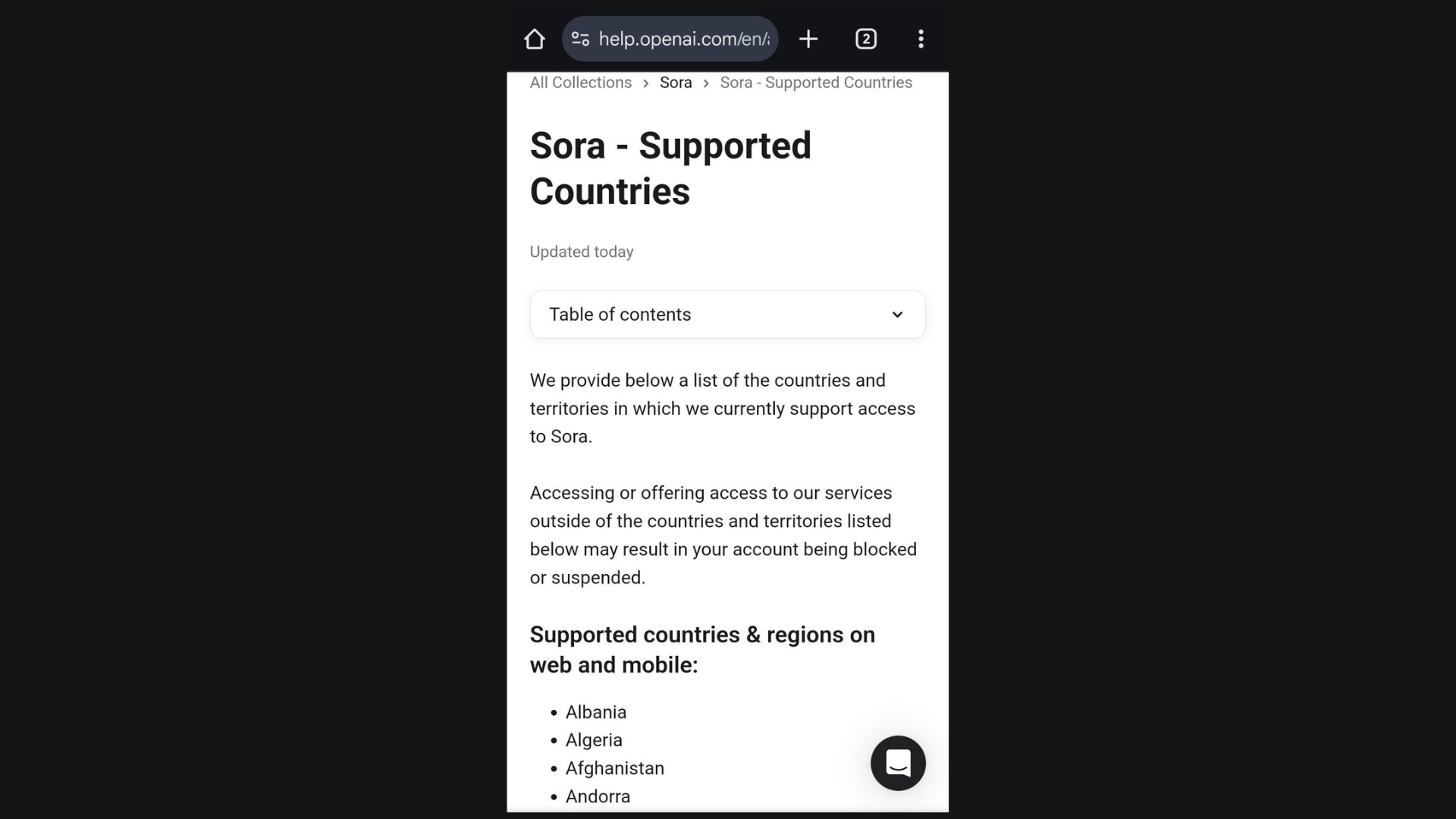

All roads point to Sora getting a release today. This includes a leak over the weekend showing a version 2 of OpenAI's AI video model, and today the documentation was updated to show which countries Sora will be available in: everywhere but the EU and UK.

Whatever the AI lab announces, we will have full coverage, and if it's something we can try, we'll follow up with a how-to guide and a hands-on with the new toy.

A Sora is brewing...

While we’re all on the edge of our seats waiting for a Sora announcement, one user on X noticed that an OpenAI page listed Sora availability by country. However, it looks like that page has since been pulled. Whoever let the cat out of the bag gave us all hope that something about Sora will be announced soon.

This mysterious page listing raises more questions than answers. Does the inclusion of availability by country suggest that Sora is poised for a global rollout sooner than we think? The page originally stated that it would not be available in the EU and UK. Could this imply features tailored for specific regions or languages? OpenAI has been tight-lipped about the details, but the leak has sparked rampant discussion on X and other platforms, with users theorizing about everything from enhanced language capabilities to integration with tools like Microsoft's Copilot. The abrupt removal of the page has only amplified the excitement, with fans now convinced that an official announcement is imminent.

For now, all we can do is wait and speculate. OpenAI has a history of building anticipation for its projects, and the Sora leak has only added to the intrigue.

Sora IS coming and MKBHD gives us a first look

Sora, OpenAI's state-of-the-art AI video generator, IS launching today, at least according to Marques Brownlee. Posting to X and YouTube about the new model, the tech reviewer shared footage generated with Sora two hours before the livestream.

OpenAI is scheduled to go live at 1pm ET with a fresh announcement as part of its 12 Days of OpenAI event. So far we've had the full o1 model and a research update. It was strongly hinted that today's announcement would be the long-awaited Sora.

If Brownlee's video is real, then we're in for something special from the OpenAI flagship, offering degrees of realism barely even dreamt of by other models. In his X thread, Brownlee describes it as particularly good at landscapes and drone shots but struggling with text and physics.

We'll find out for sure just how good it is at 1pm. I wonder if Sam Altman will be there for this one, or even if they'll move beyond the simple roundtable format.

What can we expect from OpenAI Sora?

OpenAI's Sora UI revealed! pic.twitter.com/Sig3ClobbZ (December 9, 2024)

I have been waiting impatiently for the best part of a year for OpenAI to release its AI video generator Sora to the general public. Every new video drop or project added to my impatience.

Now, it looks like we're a mere hour away from the launch of what looks to be a generation ahead of any other commercial or open-source model currently on the market.

What we don't know is how much it will cost, how you will access it or where it will be available. There are some possible leaks pointing to this on X including one from Kol Tregaskes showing some screenshots that 'may' be from a Sora site.

The screenshots show the site requiring you to commit to not using it for certain types of content, to ensure you have rights to any images you upload and to avoid creating depictions of people without permission. The UI seems very similar to Haiper, with elements of Midjourney, and appears to support both landscape and portrait formats. It will run independently of ChatGPT.

We will know for sure after 1pm ET.

Day 3 livestream is ... live

Day 3 of OpenAI's 12 Days of OpenAI announcement event is live on YouTube. By this point we pretty much know what is going to be announced, but not the specifics or how it will be delivered.

Sora is going to be the most visual of all the announcements coming out of these 12 days, and so I'm hoping for something a little more than a roundtable — but who knows.

We will have all the details and information as it happens, and with follow up stories over the coming days.

Sora confirmed — and it looks great

Sam Altman is back to announce Sora and with it we get our first 'official' look at the new video model. So far, so good. It looks impressive and Altman explains that "video is important" on the path to artificial general intelligence.

The company confirmed Sora would be launching today from sora.com. It is a "completely new product experience" but would be covered by the cost of ChatGPT Plus.

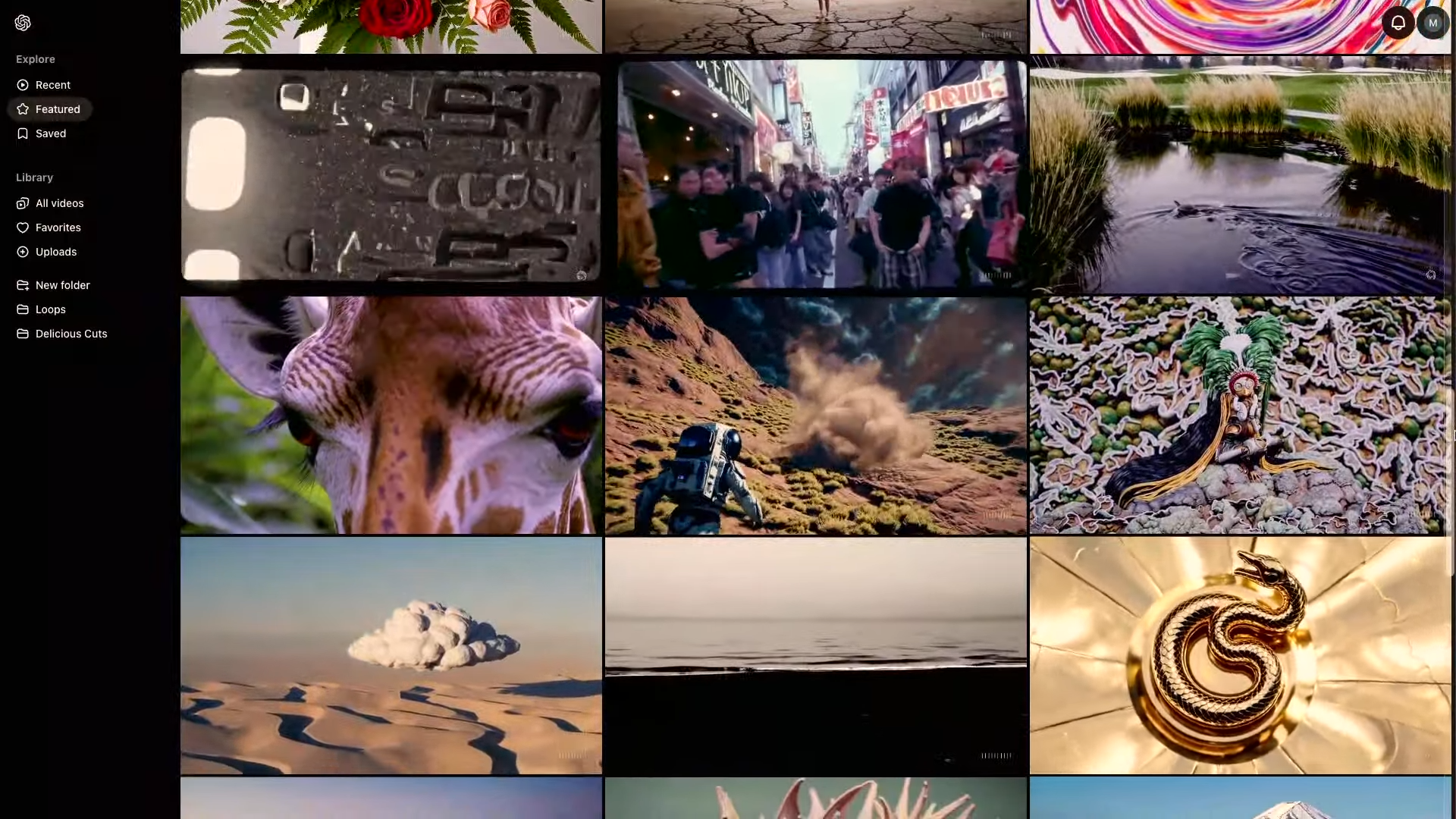

Today's announcement is Sora Turbo, which runs faster than the previous version. It includes a Midjourney-style community view with videos generated by others.

Sora works a lot like other video platforms

During a livestream demo, OpenAI generated a video of woolly mammoths, revealing the creation workflow, including a Haiper-like toolbar.

The video took a few minutes to create and included options like resolution (480p, 720p and 1080p) as well as duration of the clip starting at 10 seconds.

Videos seem to be as good as we expected from previews and you only need a ChatGPT account to sign up for Sora.com. It is available today, so I'd love to see what you create.

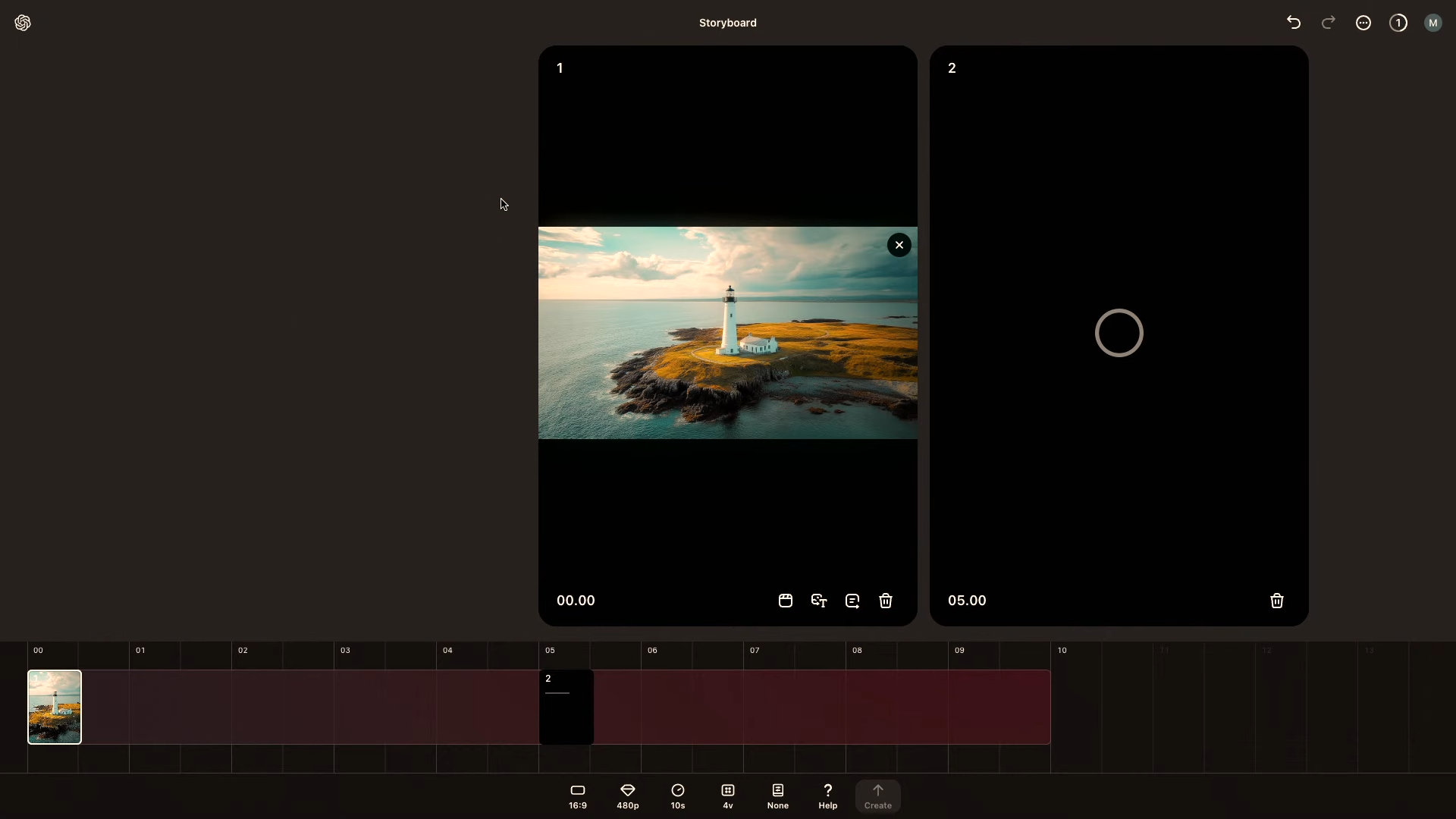

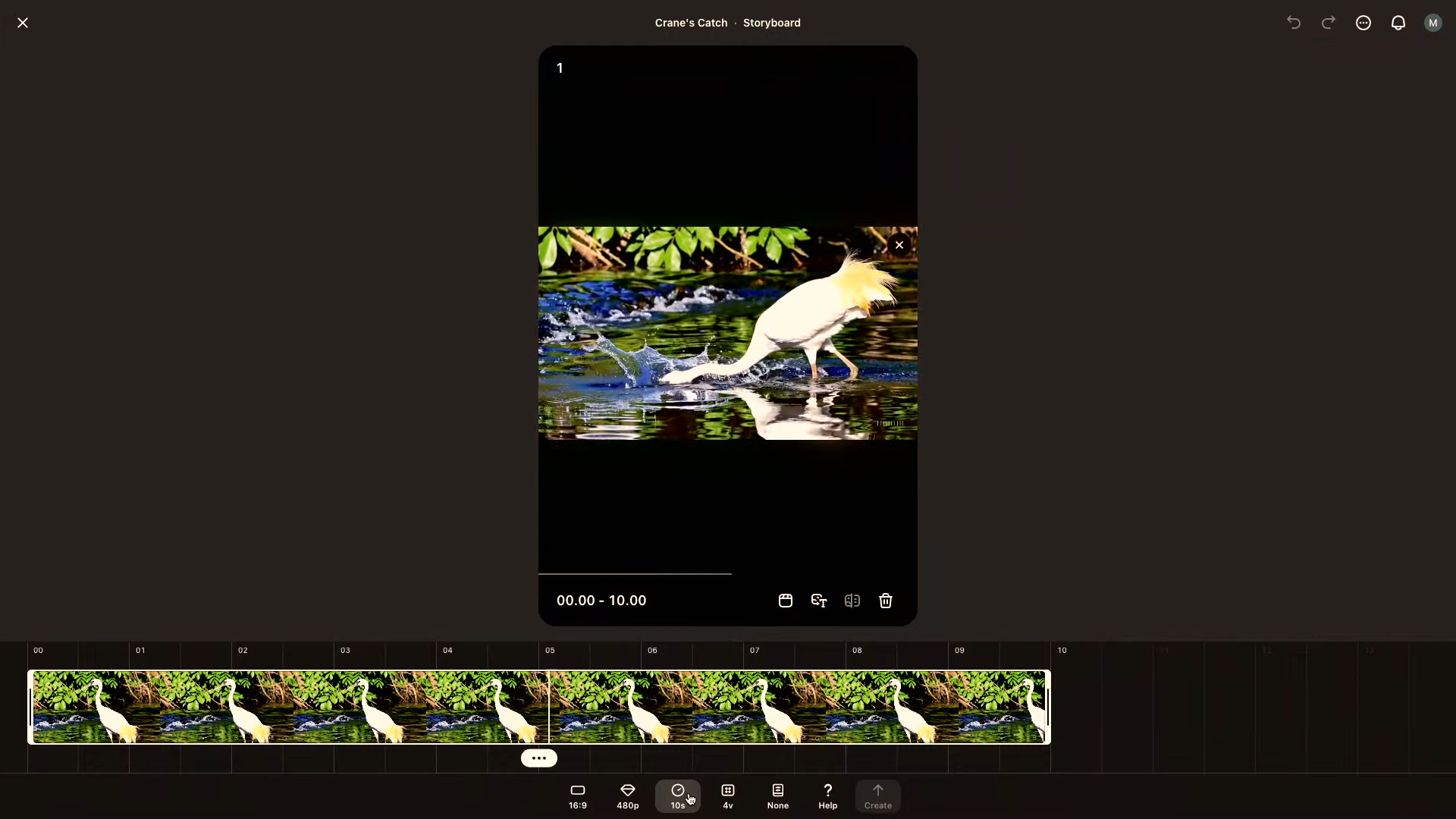

Storyboard view in Sora is a huge update

OpenAI's Sora includes a new 'Storyboard' view that lets you generate videos and place them on a timeline. Sora can then fill in the gaps from the start of the timeline to where you place the image or prompt.

If you place an image at the start and a prompt later in the timeline, Sora will generate the motion between them. So if you add the prompt 10 seconds in, it will generate the motion from your image to the prompt. This allows you to fully customize the flow of the video by adding keyframes to the timeline view.

When the video is generated you get more control over how it displays. Remix is one such feature that lets you describe changes to the video — such as swapping mammoths for robots — and it will handle the change, leaving everything else the same.

They were able to demonstrate the changes in real time, suggesting it takes less than a minute to generate lower-resolution videos, roughly in line with Runway's timing.

"Half of the story of Sora is taking a video, editing it and building on top of it," OpenAI explained during the livestream.

Sora is a whole new product for OpenAI

There was some speculation that Sora would be incorporated into ChatGPT, but OpenAI confirmed it is a completely standalone product found at Sora.com. However, if you have a ChatGPT Plus or Pro subscription, access to Sora is included.

It has a similar interface to Midjourney, with a menu down the side, but with a prompt box that floats over the top. The biggest new feature is the Storyboard view, which looks more like a traditional video editing platform.

Other key features include the ability to remix a video. In one example they turned woolly mammoths into robots. Another is blending one video into another. You can also manipulate different elements within a video and share the results with others.

You can also remix other videos shared by others in the community feed.

Access to Sora starts today

Users get 50 generations per month with ChatGPT Plus, while ChatGPT Pro includes 500 normal generations per month plus unlimited slow generations. Plus costs $20 a month and Pro is $200 per month, but Pro also includes unlimited o1 and Advanced Voice.

Sora is a standalone product but tied to a ChatGPT subscription. Any ChatGPT account can view the feed — with videos made by others — but only paid accounts can make videos.

From today it is available everywhere except the UK and EU, though those places will get access "at some point". Altman says they are "working very hard to bring it to those places".

"Sora is a tool that lets you try multiple ideas at once and try things not possible before. It is an extension of the creator behind it," the company says, adding that you won't be able to make a full movie at the click of a button.

Altman says this is early and will get a lot, lot better.

OpenAI staff share Sora examples

Sora is here for Plus and Pro users at no additional cost! Pushing the boundaries of visual generation will require breakthroughs both in ML and HCI. Really proud to have worked on this brand new product with @billpeeb @rohanjamin @cmikeh2 and the rest of the Sora team!… pic.twitter.com/OjZMDDc7ma (December 9, 2024)

OpenAI VP of Research Aditya Ramesh has shared a video made using Sora that shows jellyfish in the sky. He wrote on X: "Pushing the boundaries of visual generation will require breakthroughs both in ML and HCI."

Bill Peebles, Sora lead for OpenAI, shared a cute, looped clip of "superpup" with a city in front of him. The loop feature looks like an interesting one to try.

The Sora feed is available to view at Sora.com, even if you are in the EU or UK. If you are anywhere else in the world you can also start creating videos.

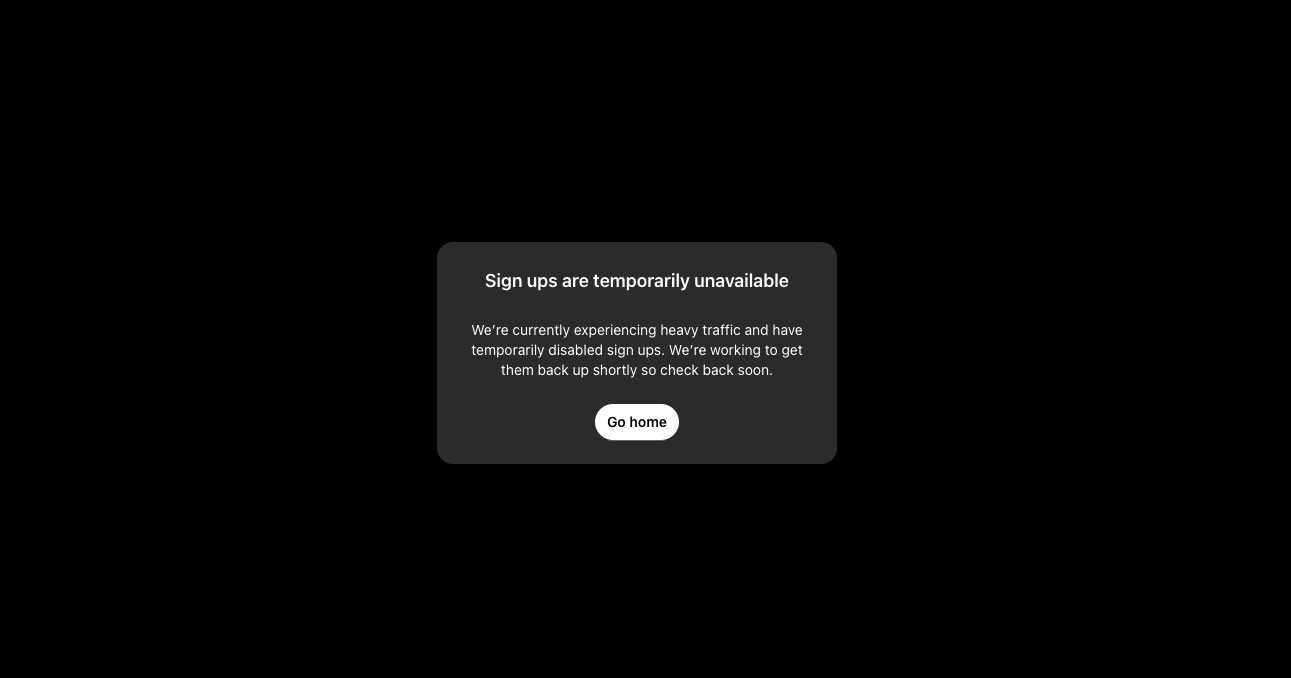

Sora access limited

We've tried logging into Sora and have had trouble getting in, either locally or via a VPN.

Users on Reddit and other social platforms are also reporting an inability to sign up for the platform. One user said that they couldn't even generate videos because the queue is full.

Additionally, those of you in Europe and the UK won't be able to access the platform (without a VPN) because of local content laws, which require extra compliance measures. OpenAI is working with the governments there, but we don't know when Sora will actually be available in those regions.

What you need to know about Sora

Sora is here, at least in the United States, and assuming you can get through the heavy traffic.

There is a lot going on with the AI video generation tool, including storyboarding, impressive capabilities and integration with ChatGPT if you are a paid subscriber.

For more details and to learn how to access Sora, check out our full breakdown of what's new and what Sora is capable of.

Check back tomorrow for Day 4 of OpenAI's 12 Days of announcements

Sora was the big bad beast of an announcement for OpenAI, but there are several more days ahead. What else could they have planned?

We'll continue to keep an eye on OpenAI this week. If you're curious, check back in with Tom's Guide to see what's coming.

For now, here's what we think could be announced tomorrow:

- Advanced search gets more advanced: We've seen rumors and leaks about Voice Engine being able to recreate human voices with just a 15-second recording. This would be a wild announcement if it happens, but I'm only 50/50 on it actually seeing a launch this year. Instead, I'd look more to small but significant tweaks like getting access to live search or being able to look through a camera (such as your webcam or phone snapper).

- Canvas improvements: Canvas has become one of our favorite writing tools, fusing the assistance of generative AI with your workload in a way that feels slick and actually helpful. We predict some updates that we saw subtly hinted at, like stacking different elements within a chat.

- ChatGPT tweaks: And finally, turning to ChatGPT itself, we're anticipating some improvements to the capability of OpenAI's bread-and-butter chatbot, including video analysis.

Sora comes with some limitations

I've created hundreds of AI-generated videos over the past few years using everything from Pika Labs to Hailuo, and each model brings its own quirks and features. But it goes beyond that: each also has its own approach to user interface and interactivity.

With Sora, OpenAI seems to have borrowed heavily from the design Midjourney adopted when it moved from Discord to the web, but with a bit of Haiper thrown in through its floating prompt box. The most unique element is the Storyboard, where you can drop clips, images and text prompts and have Sora fill in the gaps.

But, there are other limitations facing Sora users, especially those not paying $200 per month for a ChatGPT Pro subscription. Most users will be on the $20/month ChatGPT Plus.

Limits on $20 per month plan

For a start, you only get 50 generations per month, and they are limited to 720p and 5 seconds in duration. This is less than most other models offer for a similar monthly subscription, although those other models don't also include ChatGPT access.

You can also only generate video of people if you pay for the higher subscription tier, although OpenAI says it is working on adjusting guardrails and restrictions based on use.

Many of these problems will be tackled next year, though, as OpenAI is actively working on new subscription plans and payment options for different types of Sora users outside of the ChatGPT subscriptions. So you could pay $20 for ChatGPT Plus and add a separate Sora subscription to get unlimited generations, without having to shell out for the full ChatGPT Pro.

I just want it available in the UK, which the company tells me is something they're actively working on but there are content laws posing difficulties in the rollout.

OpenAI continues its 12 Days release event

OpenAI is releasing something new every weekday until December 20, and so far we've had one small announcement in the form of fine-tuning for scientists and two big ones: Sora and the full version of o1. We've also had ChatGPT Pro for $200 per month.

CEO Sam Altman says there will be something for everyone, with some big and some "stocking stuffer" style announcements. If today's announcement follows the pattern set last week then today should be a stocking stuffer rather than a big product.

Rumors point to it being an update to ChatGPT, possibly a way to run code inside a Canvas, which would be a huge improvement for developers.

Whatever the AI lab announces, we will have full coverage, and if it's something we can try, we'll follow up with a how-to guide and a hands-on with the new toy.

Hands on with Sora

After the Sora announcement today, I couldn’t wait to get my hands on the tool and try it for myself. Like many, I’ve been patiently waiting for OpenAI to finally release its advanced image and video generator. Needless to say, today’s demo on the third day of OpenAI’s ‘12 Days of OpenAI’ was very exciting.

Overall, I was impressed. I’ve used other video generators that have stunned me with similar results, but they take much longer to generate. Sora created the videos quickly: in less than 5 minutes my prompt was turned into a fairly realistic 3 to 5 second video.

The site was easy to use and all the features and what they did were clear, especially after watching them demoed today. I did find it a little tricky to navigate back home after a video was created. I ended up just using the back arrows to get back home and create a new prompt.

A rocky start for Sora

we significantly underestimated demand for sora; it is going to take awhile to get everyone access. trying to figure out how to do it as fast as possible! (December 10, 2024)

OpenAI's Sora offers users the ability to create hyperrealistic videos, but there are currently some issues with both the cost and the service itself, especially if you want to create more than a handful of videos per day.

It was recently revealed that OpenAI's video generator Sora will be included in the different paid tiers for ChatGPT, including the $20 ChatGPT Plus plan and the $200 Pro.

However, there are some limitations on the quality of video your credits can produce and on how many you get per month without paying for the more expensive Pro plan. Add to this the massive server issues that forced the company to block sign-ups, and it's been a bit of a rocky start for the new AI video platform.

In a recent post on X, user kimmonismus revealed how many videos users can make while running the Plus subscription. The plan includes enough credits to create 50 5-second priority videos at 720p.

For reference, a priority video is granted a leading position in the server queue, meaning it's created faster. Meanwhile, the $200 Pro plan offers up to 500 20-second videos at 1080p. It also gives you unlimited 'slow' generations.
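To make the gap between the two plans concrete, here is a minimal Python sketch of the arithmetic. It assumes the figures reported in the kimmonismus post above (Plus: 50 priority videos of 5 seconds at 720p for $20; Pro: 500 priority videos of 20 seconds at 1080p for $200) rather than official OpenAI numbers, and it ignores both the ChatGPT features bundled into each price and Pro's unlimited slow generations. The `SoraPlan` class and its method names are hypothetical, purely for illustration.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class SoraPlan:
    # Hypothetical model of a plan; numbers are the reported launch
    # allowances, not official OpenAI figures.
    name: str
    monthly_price_usd: float
    priority_videos: int    # priority generations per month
    seconds_per_video: int  # maximum clip length in seconds
    resolution: str

    def total_seconds(self) -> int:
        """Maximum seconds of priority video per month."""
        return self.priority_videos * self.seconds_per_video

    def price_per_video(self) -> float:
        """Subscription price divided by priority videos (ignores ChatGPT extras)."""
        return self.monthly_price_usd / self.priority_videos

    def price_per_second(self) -> float:
        """Subscription price divided by total priority seconds."""
        return self.monthly_price_usd / self.total_seconds()


# Assumed allowances, as reported on X at launch.
PLANS = [
    SoraPlan("ChatGPT Plus", 20.0, 50, 5, "720p"),
    SoraPlan("ChatGPT Pro", 200.0, 500, 20, "1080p"),
]

if __name__ == "__main__":
    for plan in PLANS:
        print(
            f"{plan.name}: {plan.total_seconds()} s/month at {plan.resolution}, "
            f"${plan.price_per_video():.2f}/video, ${plan.price_per_second():.3f}/s"
        )
```

On those assumptions both plans work out to $0.40 per priority video, but Plus buys 250 seconds of footage a month ($0.08 per second) while Pro buys 10,000 seconds ($0.02 per second), at a higher resolution.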

Best alternatives to Sora

Sora is impressive but it isn't the only option if you want to create realistic-looking AI video. There are a multitude of offerings including Runway, Kling and Pika Labs. On top of that you've got open-source offerings from Genmo and LTX Studio.

All of the platforms have their own unique features, models have their own quirks and capabilities and they all come at different price points. Some are almost indistinguishable in performance to what we're seeing from Sora Turbo, others have a more social focus, adding templates or pre-designed styles to make creation easier.

My personal favorite is Runway because I've used it the most but there is something special about the way Hailuo handles human emotion. I've compiled a list of my top five AI video generator alternatives to Sora.

The video above was created using an image generated by Grok Aurora and animated in Runway Gen-3 Turbo without any additional prompt.

You've tried Sora — here's what you can do with your videos

Now that Sora is officially here and many have had the chance to go hands-on with it, you might be wondering what you can do with your videos. Besides impressing your friends and family with your prompt skills, AI video creation is very useful.

I came up with a few ideas while playing around with the site and exploring what others have done in the feed. You could create a fun gender reveal video, an invitation to your holiday party, a graduation announcement, or even a trailer for your own book or film. I wrote a book and wanted to play around with the possibility of using Sora to bring it to life.

However, I ran into some hiccups because the AI thought utilizing the image from my book in a video was against their privacy policy. While this was really frustrating and put a damper on my plans to make the ultimate advertisement for my book, I appreciate OpenAI’s efforts to make the site as safe as possible for users.

These 5 second videos are incredibly cool and even easier to make with the right prompts. Their usefulness goes as far as our own imaginations will allow. So while you can’t create an entire feature film yet, there is plenty of room to explore new and exciting ways to make announcements, engage on social media, and explore creatively.

OpenAI takes safety concerns seriously for Sora users

With the launch of Sora, OpenAI’s new text-to-video AI tool, the spotlight once again shines on the critical issues of content safety and quality assurance in AI-generated media. OpenAI has implemented measures to address these concerns, ensuring that users can experiment creatively without compromising ethical boundaries or public trust.

To help calm anxieties and suspicions about misinformation, deepfakes and AI manipulation, Sora places a visible watermark on each video. Although watermarks can be cropped out or removed, a video without its watermark still retains metadata that tracks its origin, providing a layer of transparency and accountability. At least for now, this helps keep Sora’s outputs identifiable and traceable as AI-generated, fostering trust in the platform.

Additionally, OpenAI has established strict content guidelines. Before generating their first video, users must agree to avoid generating material involving minors, violence, explicit content or copyrighted material. While there are sure to be users who find workarounds, OpenAI says it is prepared to shut their activity down promptly. Those who violate these terms risk suspension or outright bans from the platform. These safeguards align Sora with industry standards while reflecting OpenAI's commitment to responsible AI use.

Sora enters a crowded and rapidly evolving market for AI video generation tools. Competitors like Runway, Pika, and others have already captured attention, each offering unique features and capabilities within their platforms. The heightened interest in AI video generation has fueled a race among developers to push boundaries while maintaining public trust.

As the popularity of AI video generators continues to surge, platforms like Sora must balance innovation with responsibility. The ability to create realistic, engaging videos from text prompts offers immense potential for storytelling, education, and entertainment.

OpenAI’s approach with Sora focuses on balance while encouraging creative exploration. By prioritizing safety concerns, Sora aims to lead the charge in responsible AI development and set the tone for the future of generative media.

ChatGPT suggestions for Sora prompts are surprisingly good

Once I got my hands on Sora I was like a deer in headlights, unsure where to begin with so many features and capabilities. What’s the word for AI video generation block? Whatever it is, it felt similar to writer’s block: I was overwhelmed by the possibilities. If you can relate, you might want to try a few of the prompts ChatGPT suggested to me. OpenAI’s chatbot gave me many suggestions, but here are a few of my favorites.

Fantasy worlds

If you ask me, this is what Sora does best. It takes creative liberties to explore fantastical worlds with plenty of science fiction. ChatGPT suggests these prompts:

"A glowing forest under a purple sky, with bioluminescent plants and a crystal-clear river."

"A steampunk cityscape with airships flying among towering brass buildings at sunset."

"A dragon perched on a snowy mountain peak, overlooking a medieval village."

Photorealism

Yesterday when I tried photorealistic videos, I was impressed by everything from the way the chef moved to the way the light reflected in the puddles of the city street. ChatGPT suggests these prompts:

"A bowl of fresh strawberries on a rustic wooden table, sunlight streaming through a window."

"A rainy city street at night, with neon signs reflecting on wet pavement."

"A portrait of an elderly man with deep wrinkles, wearing a worn leather hat."

Cartoon and whimsical

Without a doubt Sora shines with cartoon and Claymation type videos. Because cartoons don’t move precisely like actual humans, the glitches and minor flaws aren’t as noticeable. ChatGPT suggests trying the following:

"A group of anthropomorphic animals having a tea party in a lush garden."

"A superhero cat flying through a cityscape, wearing a cape and mask."

"A whimsical underwater world with smiling fish and a coral castle."

Nature and landscapes

Definitely give these a try. The natural landscapes in the videos I generated yesterday were pretty phenomenal. Despite a few minor flaws with consistency, Sora can showcase a number of natural landscapes and earthy scenes.

"A tranquil mountain lake at sunrise, with mist rolling over the water."

"A desert oasis with vibrant green palms and crystal-clear water."

"An underwater scene with a coral reef teeming with colorful fish and sea turtles."

These prompts can be customized further based on specific details or styles you want the AI to emphasize. But remember, if you have ChatGPT Plus, you only get 50 video generations a month, so choose wisely.

Shockingly real Sora videos to explore now

For those who don’t have ChatGPT Plus, the good news is you can still enjoy Sora from the sidelines. As I’ve been exploring the site, I’ve had so much fun checking out the variety of creative, fun, and shockingly real videos.

From a tired cat in a tie and button-down shirt drinking coffee to a lazy cat turning into a robot with superpowers, I’m pleasantly surprised by the number of cat videos. Even with AI generated videos, it's clear the internet loves cat videos.

Other videos also featured animals and were equally intriguing: dolphins swimming in the sky, a lizard lounging on a comfy bed (no thanks!) and a horse galloping through an open field. An adorable squirrel doing squats in a mini gym was one of the cutest things I’ve ever seen.

A businessman burning the midnight oil at his desk, a mysterious woman thinking, and a scuba diver exploring the ocean floor were a few more of the realistic videos I enjoyed.

While a ChatGPT Plus subscription definitely helps you get more from the site, it’s worth taking a look at what you can do before subscribing. I have to say, from my own experience, it’s definitely worth the $20 per month price tag.

What can we expect on Day 4?

If OpenAI keeps to the pattern it seems to have set over the first three days (and three days really isn't enough to say for sure), then today will be a 'stocking stuffer'.

Sam Altman said some days will be something big, other days will be smaller announcements of interest to a smaller group of people. We've had two big announcement days and one 'stocking stuffer' so far.

- Day 1 was a big update: We got the full release of o1, ChatGPT Pro for $200 per month and a new o1 Pro model for really advanced requirements.

- Day 2 was a small update: We got a new type of reinforcement fine-tuning that will let scientists and people with complex data needs train an AI model on that data and use a reasoning model to process the information.

- Day 3 was a big update: This might have been the biggest update of the lot as we got Sora, and not just the model but an entirely new platform and consumer product from OpenAI.

Based purely on that pattern I'm expecting a smallish update. Rumors are pointing to an update to ChatGPT Canvas. We will know for sure in about 30 minutes and if Sam Altman appears on screen — it might be bigger than we were expecting.

Day 4 is live: All things Canvas

Day four of the 12 Days of OpenAI is live and OpenAI's team is ready to reveal the next announcement. So far we've had the o1 reasoning model, $200-per-month ChatGPT Pro, reinforcement fine-tuning and, of course, Sora.

We'll have full live coverage here and on Tom's Guide over the rest of the event up to the final announcement on December 20.

The YouTube description says our hosts are OpenAI Chief Product Officer Kevin Weil, Lee Byron from the ChatGPT team, and Alexi Christakis, one of the people building Canvas.

Canvas comes to everyone

Everyone is getting Canvas, and it will be part of the main model rather than a separate drop-down option. You'll also be able to use it with a custom GPT and run Python code.

The presentation was the usual trio sitting around a computer, though this time one of them wore a green Santa hat.

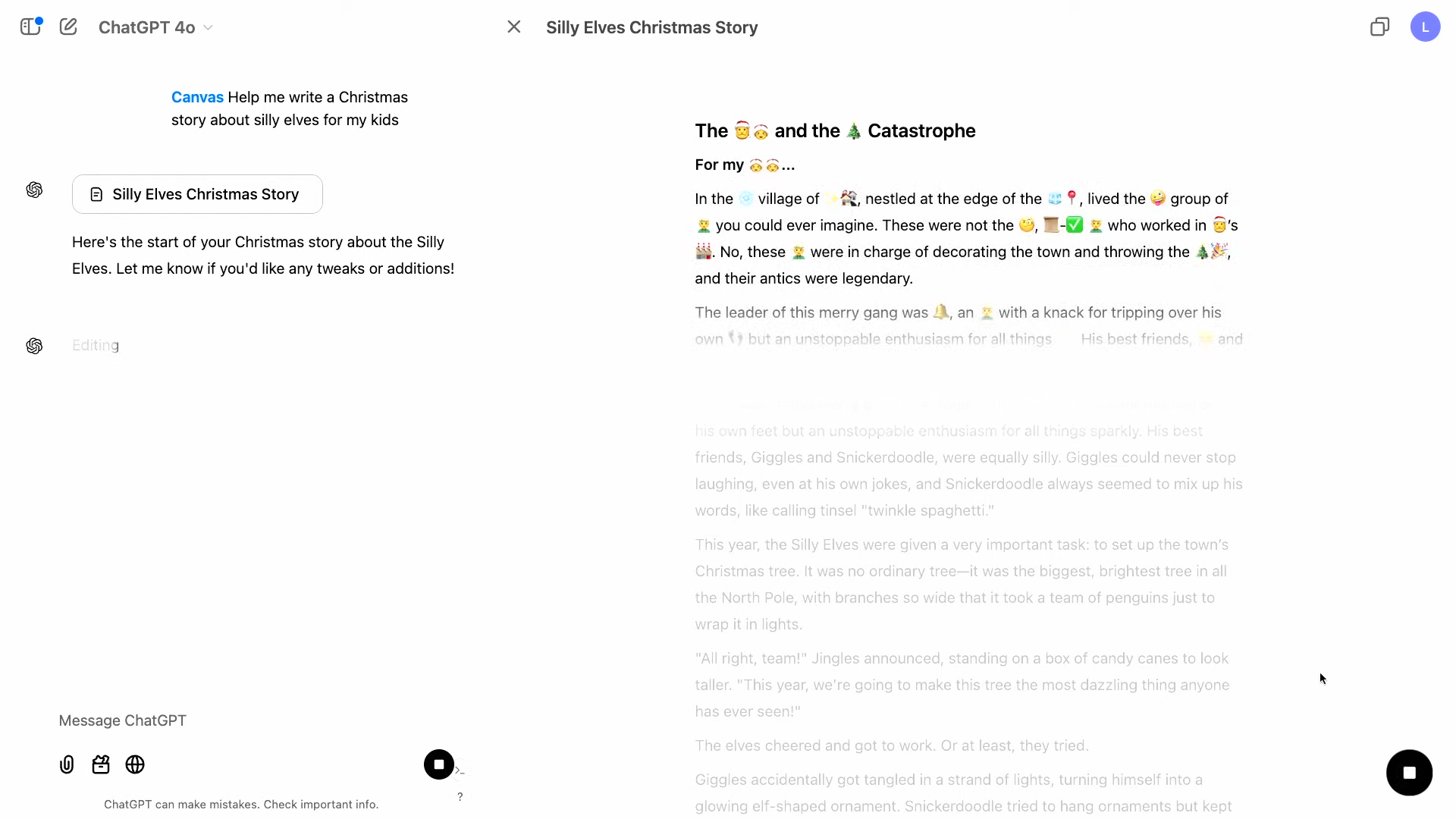

In the demo portion they asked ChatGPT to write a Christmas story about silly elves and it automatically triggered a Canvas, showing the chat on the left and Canvas on the right where it was writing the story. This kept chat separate from content.

Minimal change from Canvas beta

The biggest announcement today is that you'll be able to run Python code inside a Canvas, making it closer to the capabilities of Artifacts in Claude. Outside of that the main change is in how you access it — Canvas is coming to the main model, not just a beta version.

To use it simply ask ChatGPT to help you write content and it will trigger Canvas. If it doesn't then you can hit the function button and it will open a Canvas.

There is a new Writing helper tool as well. If you put a lot of text into a prompt box it will offer a new button to 'open in Canvas'. From that point you can edit the text you entered as if it were a text editor, or use ChatGPT to make changes.

Canvas is the perfect editor

Canvas is a useful document-writing tool, but one use the OpenAI team demonstrated during the 12 Days livestream is as an editor.

You can have ChatGPT review the document you upload and it can add comments/suggestions on a line-by-line basis for ways to improve your text.

You can then reject/dismiss a comment as you make changes based on its recommendations. After this you can download the document and share it.

Canvas as a code editor

Canvas was already able to generate and display code during the beta, but now it can use improved syntax highlighting based on the language you're using and if it is Python code it can run it from within the Canvas.

This is a brilliant way to test code on the fly before downloading it and sending it to your own code editor. Running Python code in Canvas also sends any errors or console output to the ChatGPT chat view, allowing it to quickly provide solutions.

There is even a 'fix bug' button that lets ChatGPT edit the Canvas directly and make the correction. This is a great way to generate graphs or work through complex problems.

You can also show any changes you've made to the document and roll back to earlier versions. It isn't clear how advanced the code can get, but the team say it can run almost any Python module, so it may be able to create games.

Breathing new life into custom GPTs

OpenAI launched custom GPTs more than a year ago and has been slowly making new models and features available to them. The latest is Canvas, which could be significant because a custom GPT can also use functions from other applications.

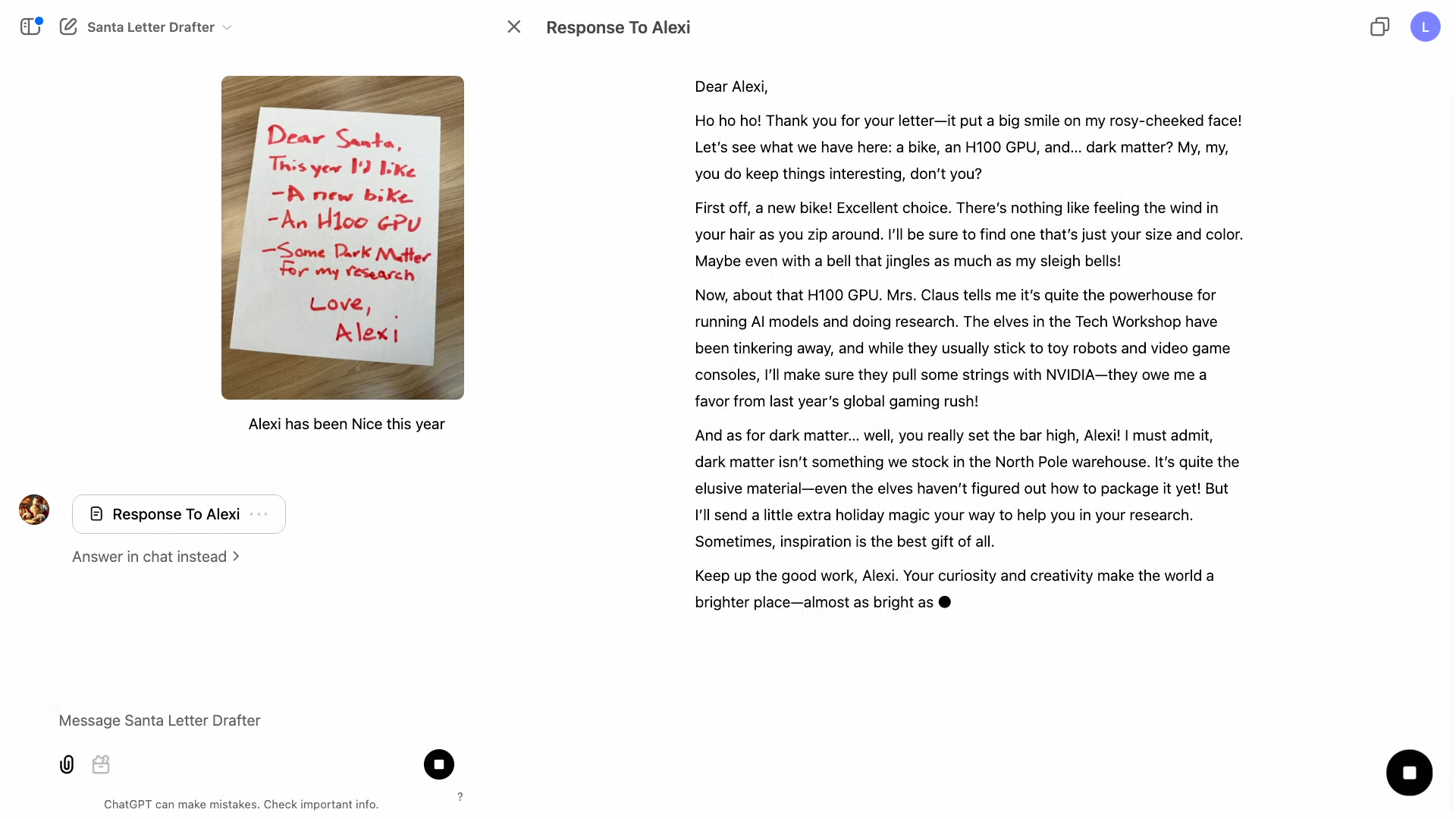

During the demo, the team loaded a photo of a handwritten "letter to Santa" into a new "Santa Letter Drafter" GPT, which read the letter and opened a Canvas.

In the Canvas, it drafted a reply from Santa that you can edit or save to send to your children. While this is a silly example, it does demonstrate the power of bringing other functionality into Canvas.

This could be used with an education GPT to improve writing skills, or even to help you learn another language through a collaborative Canvas, where you and the AI work on the text together and the AI corrects your mistakes.

The obligatory 'joke' to end the livestream

Every one of the four livestreams so far has ended with an obligatory 'bad joke', and this one was no better than the others.

How does Santa take photos?

With a North Polaroid

Everything Canvas

Today's livestream was led by OpenAI Chief Product Officer Kevin Weil, Lee Byron from the ChatGPT team, and Alexi Christakis, one of the people building Canvas.

They announced that everyone using the main ChatGPT model can now access Canvas, the new editing tool that turns ChatGPT's output into a living document. The tool keeps your chat with the model separate from the content it produces. Additionally, there is a Writing helper tool that will prompt you to open Canvas if it detects a lot of text.

The biggest news is that you can run Python code in the editor. The OpenAI crew showed off how Canvas can generate and display code with improved syntax highlighting, then catch errors when it runs. It will also suggest solutions, with a button to fix bugs directly.

Lastly, they used Canvas to respond to an image of a letter to Santa, showing how the tool integrates with other ChatGPT functionality.

Sam Altman posts about Canvas

"canvas is now available to all chatgpt users, and can execute code! more importantly it can also still emojify your writing." (December 10, 2024)

OpenAI CEO and co-founder Sam Altman posted about today's announcement over on X.

He noted that the writing and editing tool is available to everyone, not just paid subscribers.

And, very importantly, he called out Canvas's ability to add emojis to your writing. Very important information, ya know.

What's new with Canvas?

We've covered Canvas in today's live blog, but we also have a more detailed breakdown of all the new features in ChatGPT's writing and editing tool.

If you're curious for more information, check it out here.

Check back tomorrow for Day 5 of OpenAI's announcements

OpenAI has had several big announcements in the first four days of its 12 Days of OpenAI extravaganza.

There will be more through Dec. 20.

Sam Altman has said that some days will be bigger than others. We kicked off this week with the big Sora announcement, and Canvas felt like more of a medium-sized one.

Tomorrow may fall more into the stocking stuffer category.

Curious to see what's next? Check back in with Tom's Guide tomorrow morning at 10 AM ET to see what's new in the world of OpenAI.

With that in mind, here are a couple of things that OpenAI may announce tomorrow:

- Advanced Voice gets more advanced: We've seen rumors and leaks about Voice Engine being able to recreate human voices from just a 15-second recording. This would be a wild announcement if it happens, but I'm only 50/50 on it actually launching this year. Instead, I'd look for small but significant tweaks like access to live search or the ability to see through a camera (such as your webcam or phone snapper).

- ChatGPT tweaks: Turning to ChatGPT itself, we're anticipating some improvements to the capabilities of OpenAI's bread-and-butter chatbot, including video analysis.