What you need to know

- YouTube highlights its new "altered or synthetic" content disclosure, which creators must fill out when uploading videos.

- If a user's video features people, footage, or scenarios that have been digitally altered, they must say "yes" to disclose this information.

- Videos with AI-generated or "altered" content will receive a label, which users will begin noticing "in the weeks ahead."

YouTube is highlighting the arrival of a new AI disclosure requirement that creators must adhere to when uploading videos.

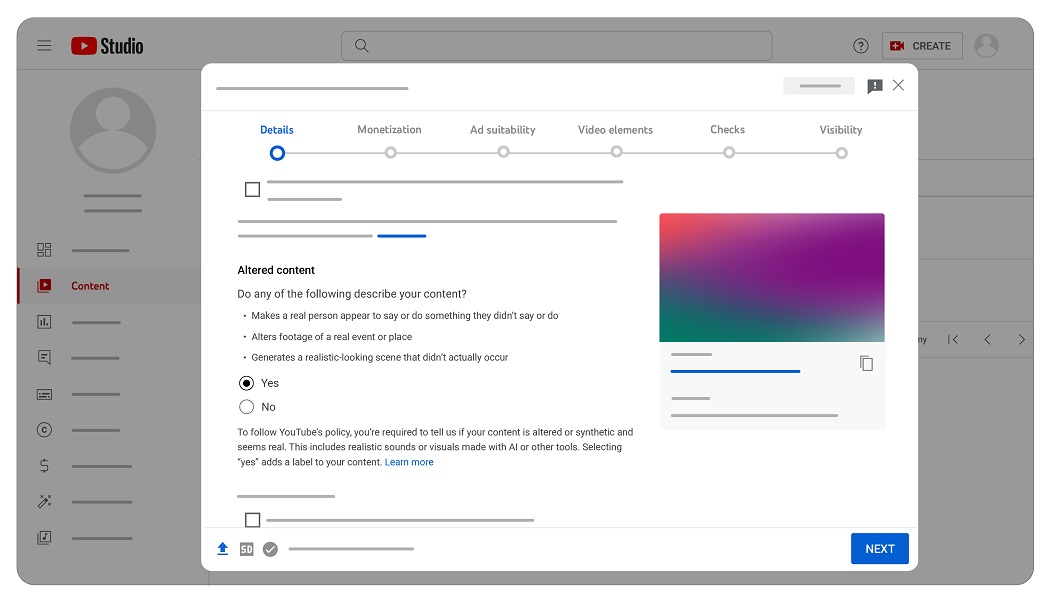

According to YouTube, the platform is introducing a new set of "Altered content" details today (Mar. 18) that creators must fill out. The company adds that creators must "disclose to viewers when realistic content – content a viewer could easily mistake for a real person, place, or event – is made with altered or synthetic media, including generative AI."

This new section will ask creators whether the people, footage, or scenes in their videos were digitally altered. If the answer is "yes," YouTube states it will apply a label to the video or Short. Additionally, users can find these labels in a video's description box.

Disclosing that a video contains altered or synthetic content will not hinder its discoverability on the platform. However, the platform states that videos from creators who have not properly labeled them will receive a label "that creators will not have the option to remove."

If such behavior continues, YouTube states it will look to remove content, suspend a creator's account, or apply other penalties.

Content that is clearly fantastical and imaginative, like animations, does not fall within the scope of altered content, and neither do color adjustments, special effects, or beauty filters. Moreover, creators do not have to worry about penalties if they use AI to generate scripts or captions.

More information about this new disclosure can be found on YouTube's help page. YouTube viewers will notice these new labels appear "in the weeks ahead" on all devices.

YouTube states that its latest AI and altered content disclosure is part of its efforts to clamp down on misinformation. The company also hopes to "increase the transparency" regarding digital content.

It's no surprise that YouTube is going down this path, as Google agreed to proceed with measures to help prevent societal risks from AI. The White House urged big tech companies like Google to get a better handle on AI-generated content and the problems it can cause for everyday users. Through its AI safety practices, Google agreed to provide watermarks, metadata tags, and more to inform users when content was synthetically created.

Users are likely to continue to see these new tags, as YouTube is working on several generative AI features. The company started rolling out a test of its new conversational AI for Premium members, alongside "Dream Track," which lets creators digitally sample popular artists' voices.