Over the past few weeks, I've seen an uptick in Xbox customers reaching out to me via email or direct message for help overturning unfair bans. I wondered if it was coincidental, but I've also seen the topic come up more often across social media, which makes me think Xbox has a ticking time bomb on its hands.

When you sign up for Xbox services on your Xbox Series X or Xbox Series S, or indeed any online gaming service, you accept a Terms of Service agreement which essentially denies you any rights to the content you've purchased. Even if you "buy" a game from any of these platforms, whether it's Xbox or not, you don't actually own anything. You own a license to rent the item in question in perpetuity, or at least until Apple, Google, PlayStation, Microsoft, or whoever decides you no longer deserve it.

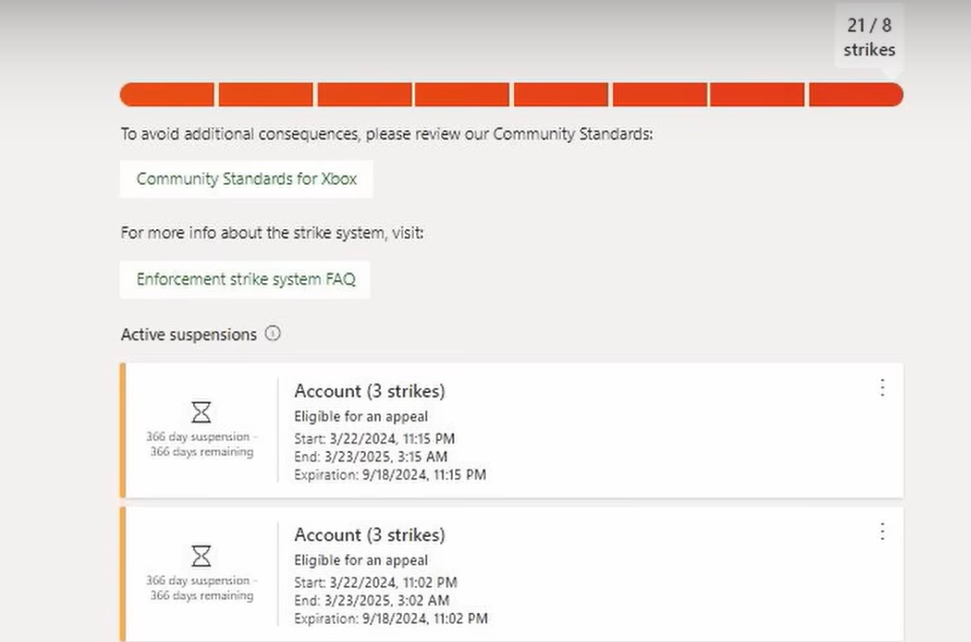

How do they decide whether or not you deserve access to the content you've purchased? Well, increasingly, these companies are using artificial intelligence. And we're seeing an uptick in unfair bans, with users left with absolutely no real path to appeal. This is not acceptable.

Microsoft fired hundreds of customer support employees over the past few years

As part of Microsoft's recent Activision-Blizzard "restructuring," the company culled vast swaths of the firm's customer service team. Activision-Blizzard was one of the few gaming publishers that actually did invest heavily in human customer service support in-house, even though this service too was heavily understaffed, overworked, and oft-criticized by users. Little did these users know, things were about to get a whole lot worse.

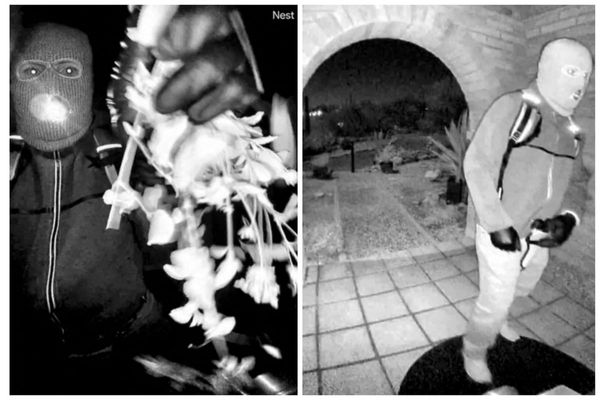

It's hard to say for sure if the two events correlate, but over the past few weeks I've seen more and more players reaching out to me via various channels, asking for help getting their accounts unbanned. I assume that, in part, it was due to our coverage of a YouTuber named GhillieYT who had their account unfairly banned in Minecraft. Content creators are increasingly being targeted in "mass reporting" events that Microsoft's automated systems take as legit, resulting in permanent account closures.

Frankly, Microsoft isn't paying us to do its customer service, and it absolutely shouldn't fall to us to back channel to help with these situations. Microsoft does have systems in place, reviewable by human beings, to get these situations resolved, but they are underbaked and, you guessed it, understaffed. YouTubers and big content creators have a platform they can use to draw attention to their unfair bans and get them overturned. Many thousands, even millions, of others do not.

I've also been approached by Call of Duty players who claim to have been "shadow banned" unfairly. Shadow banning in Call of Duty is a practice by which cheaters, chat abusers, and the like are placed into a matchmaking pool separate from the general population. Microsoft also enables the Xbox DVR clip auto-upload feature by default, yet it will ban you for auto-sharing 18+ rated clips from sexy games like Baldur's Gate 3 or Cyberpunk 2077, despite the content from those games existing on its own servers.

And now, there's a new rabbit hole of automated-banning that is going to cause Microsoft another big headache.

Players in FFXIV are being banned for using innocuous, in-game terms

Perhaps we should have seen this coming, but Microsoft is issuing permanent and total account bans to players in FFXIV, the MMO from Square Enix, for using the phrase "Free Company." For those who don't know, Free Companies are essentially FFXIV's clan or guild system, where players can band together as mercs to take down the game's big scary beasties. Microsoft doesn't seem to care, however.

A viral tweet from Envinyon details how Reddit user /u/TGB_B20kEn was handed a two-month account suspension for using the phrase "Free Company" in an LFG posting via Xbox. This simply is not acceptable.

pic.twitter.com/9woWS5KrtA (March 30, 2024)

If Microsoft is truly a serious gaming company, it needs to accept that human beings use words, and those words often have specific contexts. If its automated systems are too stupid to understand the most basic, innocuous contexts that words and phrases might have, then it needs to throw the entire system into the garbage. Why assume the worst, if you're not going to moderate context appropriately?

I have literally no idea in what universe Microsoft thinks that "Free Company" denotes something harmful. It was suggested that the system assumed it could be interpreted as some kind of solicitation for "company," but seriously, if Microsoft isn't going to use human moderators in its system, it should give people the benefit of the doubt first.

The whole system needs a total policy overhaul. For every unfairly banned individual that is able to get attention on their case, there are probably hundreds who suffer in silence. Even if these are edge cases, this is not acceptable. And unless it's changed, it'll keep happening, and make people feel increasingly fearful about using Xbox Live in a social context.

We pay for this

One of the most archaic things about console gaming is the fact that we have to pay to play online. We are told that the fee subsidizes things like customer service, moderated gameplay, and a less cheat-susceptible environment, but none of that is true today. What exactly are we paying for now? We're not paying for customer service, that's for sure. We're not paying to avoid cheaters, since Microsoft now forces competitive console FPS players to share matchmaking pools with more hack-prone PC players. And we're sure as hell not paying for intelligent moderation systems.

Microsoft's obsession with automation has been a blight on the company's consumer operations for the best part of a decade. I myself recently got locked out of a second Microsoft 365 Business Account, and it took well over two weeks of constant calls to get it escalated and fixed, being passed around to different departments who didn't want to take responsibility to help me out. And that's their business-grade service.

It's increasingly apparent that Microsoft thinks it can have its cake and eat it from a customer service perspective: the most minimal investment possible, while also having a "safe," sanitized environment that won't cause PR headaches. Well, I'm here to cause a headache, because the system in place is increasingly not fit for purpose.

Microsoft's logic is that toxic environments probably drive people away from playing. They're probably right. But what if the toxic environment stems from worrying that playing well in a competitive match will get you mass reported and banned for no reason, leaving you in a Kafkaesque nightmare of broken customer support bots? Toxic players are bad, but so are toxic moderation practices. Both can drive people away from the platform, and Microsoft should take note.

Either put trained humans in charge of issuing bans, or ease these absurd, draconian moderation practices, Microsoft. You can't build a social gaming platform made up of human beings, paying customers, and hand off your duty of care to robots that are too stupid to tell the difference between an FFXIV "Free Company" and "free company xoxox link in bio."