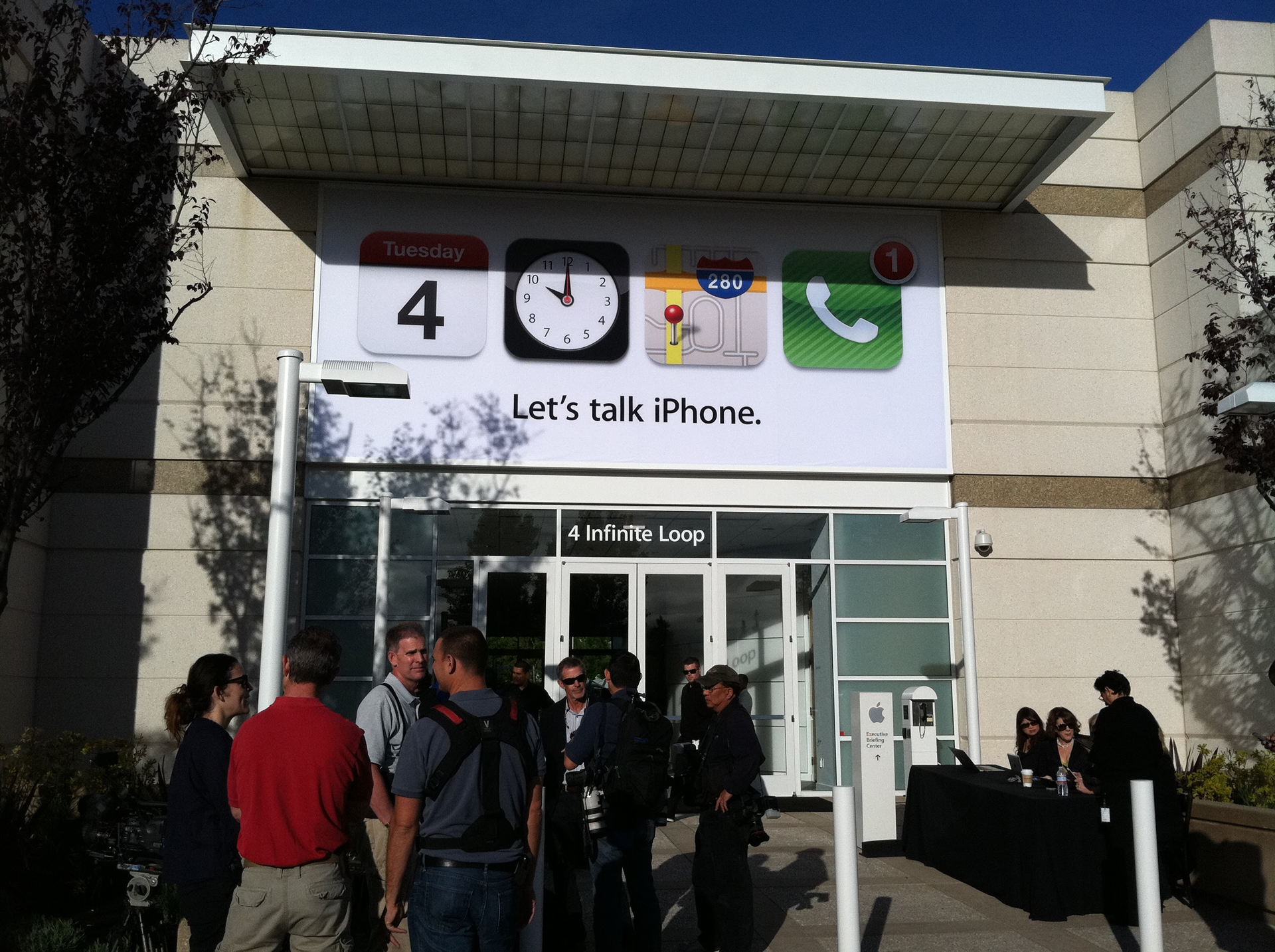

“Flawless and stunning.” That was how I described the very first Siri demonstration I saw 13 years ago at the iPhone 4S launch in Apple’s original One Infinite Loop headquarters.

It was a momentous unveiling that was quickly overshadowed by Steve Jobs’ tragic death from cancer just a day later. But before that news broke, we were digesting multiple significant Apple developments: It was Tim Cook’s first product launch as CEO (I recall that though his approach was very un-Jobs-like, he seemed perfectly at ease). We expected an iPhone 5, but Apple surprised us with the iPhone 4S. It seemed like a half-measure. However, the “S” meant more than “Second.” It was for “Siri,” Apple’s brand-new “Intelligent Assistant.”

Apple told us that Siri had a form of artificial intelligence inside it but never described it as “AI.” My reaction to the first Siri was less about its nascent intelligence (to intuit the context of your question) than its uncanny ability to easily understand everything we said. That came by way of Nuance, a company with decades of voice recognition experience (it built Dragon NaturallySpeaking). Microsoft eventually acquired Nuance in 2022, and these days, Apple spends little time talking about Siri’s ability to understand speech, a table-stakes ability for most chatbots.

In the more than a dozen years since its introduction, Siri’s ability to understand and respond to our queries has improved, mostly thanks to a series of “brain transplants.” It has a more natural voice, doesn’t need the “Hey Siri” prompt, and can even maintain the thread of a conversation over a couple of queries (though it still runs out of road pretty quickly). Even so, this description of the original Siri’s capabilities from an Oct. 4, 2011, Mashable post pretty much encapsulates what it can accomplish today:

“Because it is capable of parsing natural human language, it can respond to a question such as ‘What is the weather like today?’ with an answer it finds online, such as a weather report. It can read messages, search Wikipedia, make a calendar appointment, set a reminder, search Google Maps and more.”

And that’s a problem.

Falling behind

Siri was impressive right up until Amazon introduced Alexa in 2014. Suddenly, Siri seemed limited by its smartphone environment. Eventually, Siri and Alexa appeared to reach some parity but were ultimately upstaged by a series of powerful AI chatbots that started arriving in 2022.

Since then, our expectations for what an “Intelligent Assistant” should be and can do have shifted. It’s like we were looking at our trusted horse, Siri, and thinking how good it was for getting around, and then race cars arrived. Siri’s still trotting along, but most of us are strapping in, taking off, and leaving our first voice assistant in the dust.

News that Apple might be talking to OpenAI about integrating ChatGPT into iOS 18 is intriguing and might make sense, especially since Apple has failed to move its own AI needle in any demonstrable way over the last 18 months.

I have no doubt that WWDC 2024 will be Apple’s most AI-focused developers’ event ever. It’s also likely that Siri will get a full brain transplant and a new body, making it all but unrecognizable from the Intelligent Assistant I first encountered in 2011. But the idea that Tim Cook and OpenAI CEO Sam Altman will take the WWDC stage, join hands, and proclaim that Siri is now a ChatGPT product, or at least that Siri’s brain is more GPT-4o than the original Apple-programmed Siri (note: Apple bought the company that made the OG Siri), does not add up.

Apple could use OpenAI’s large language models to inform Siri’s intelligence. It might also be using Google’s Gemini. I don’t suspect it’s using both, but I think that whatever Apple does use, it won’t spend much, if any, time talking about the core LLM behind the new Siri.

The Apple way

As I understand it, the Apple way is about control and ownership. Apple works with countless partners to build its phones, laptops, watches, and tablets, but the boxes say, “Designed by Apple.” It never touted that it used Gorilla Glass or worked with TSMC to build any of its A-series chips (A16 Bionic, for instance). If you press the company, it might tell you that even if it does work with these companies, it insists on bespoke components that could not be compared to similar hardware in any competitors’ products, including those who use the same partners.

There is reasonable concern that Apple does not have the generative AI skills to build a new from-the-ground-up Siri. But that ignores the teams Apple has built (sometimes by grabbing AI experts from competitors like Google) specifically to tackle these AI issues.

Whatever Apple’s plan, it can’t afford to play it safe. Siri may have been first, but it’s now embarrassingly behind. The time for digging deep just into your phone is over. Getting great music recommendations is not enough. Siri must transform from an Intelligent Assistant to something bigger, an idea of what Generative AI could be when married to one of the industry’s best ecosystems.

That’s the advantage Apple has over Google, Microsoft, Amazon, and even OpenAI: a collection of hardware and platforms that know each other as well as they know themselves. A Siri that becomes a platform that can range across all these OSes and hardware types will be the formidable competitor we’ve been waiting for. They’ll call it SiriOS, and we will welcome it with open arms.

If Apple doesn’t do this (or something like it), it’s time to admit defeat. Siri can’t finish what it started.