The Apple Watch is one of those devices you don’t realize you need, or why you might need it, until it's been on your wrist for a few days. The device is due for an update at today's "Glowtime" event at Apple Park, hopefully alongside some new information on Apple's AI tech.

I recently got the Apple Watch Ultra 2 and gave my wife my older one. She’s already finding it invaluable for one simple feature: notifications. Having access to Siri and a quick overview of your life without having to pick up your phone is also useful, which got me thinking that Apple may already have the ultimate AI wearable.

The Meta Ray-Ban glasses, or any good AI glasses, stand out as a perfect form factor: they can put sound into your ears through the arms and mount ‘eye-level’ cameras to give the AI a view of the world. But wearing glasses isn’t ideal if you don’t need them.

A watch, by contrast, just sits there on your wrist and, with the right band, is easy to forget you have on. The Watch Ultra 2 already has some impressive hardware that puts it on the path to AI wearable status, but a few things are missing, including a camera and beamforming microphones.

What the Apple Watch needs to be an AI wearable

Technically, the Apple Watch Ultra 2 is already an impressive AI device. When I was researching this story, many of the features I initially thought it would need to be AI-friendly turned out to be in place already. Without a camera, though, it falls at the first hurdle.

Some of the existing and impressive features include a second-generation ultra-wideband chip that can be used to gather spatial data. AI could use that data in location-awareness features, such as automatically turning on the lights when you enter a room or sending your coffee order to the barista.

Double-tap gesture recognition, where the Watch interprets small finger motions to perform tasks, is also a useful feature that could be expanded further, although this is more about making the watch standalone than purely AI-focused. However, the suite of sensors for health monitoring, on-device Siri and a chip with a neural engine do hint at what is to come.

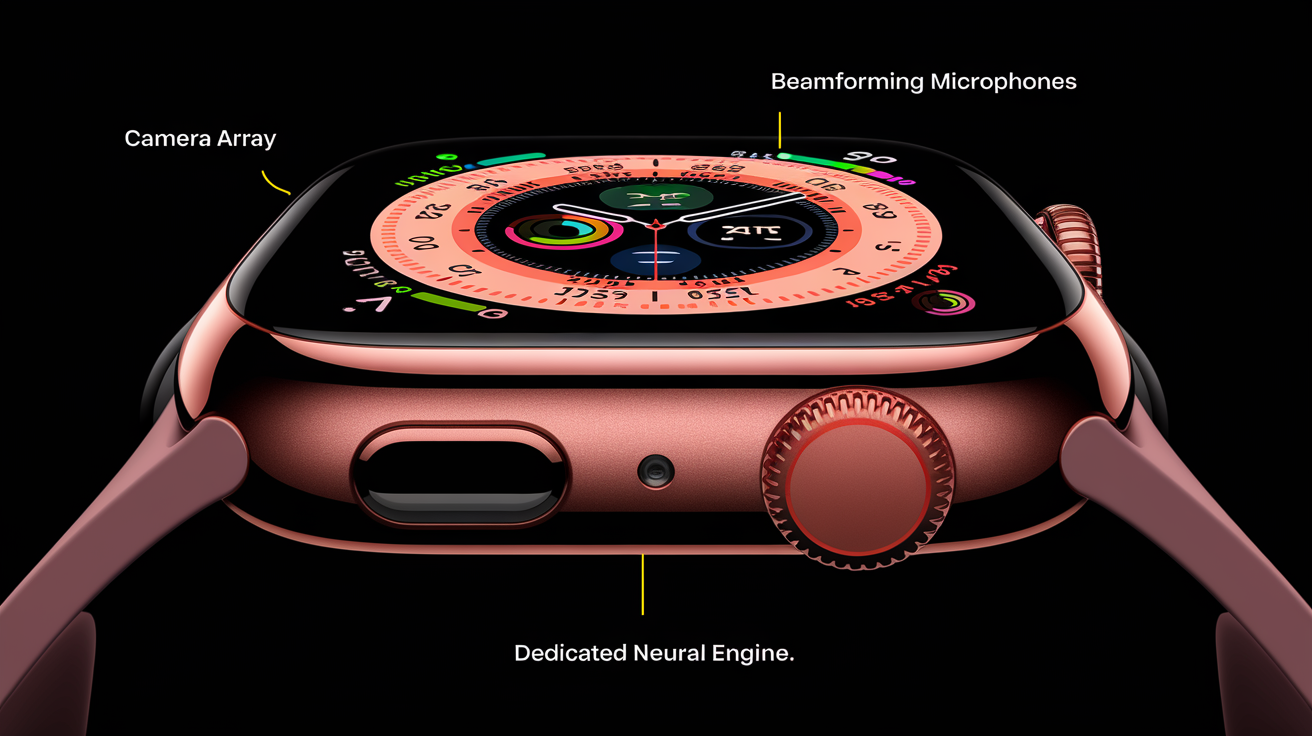

1. Camera array for gesture and AI vision

The biggest missing piece in the Apple Watch, in terms of making it an AI device, is a camera. I’d go a step further and add a camera array, which would allow for multi-angle gesture detection, AI vision capabilities and 3D gesture control.

Having a series of cameras positioned strategically around the watch would allow it to capture depth and motion from different perspectives. This data could then also be fed into the AI model to provide it with a better view of the world and enable improved advice and analysis.

It would also allow for touch-free control of the watch and fitness tracking that could see actual movements without manual input, and it could help with accessibility. Combined with AI vision, these depth-sensing cameras could also provide contextual information about what the watch can see, such as buildings, food and products.

2. A dedicated neural engine

Apple has been putting neural engines in pretty much everything it makes since well before ChatGPT was a twinkle in Sam Altman's eye, but if Apple wants to make the Watch true AI hardware, it needs to up its game.

Currently, the Watch Ultra 2 boasts an S9 chip with a neural engine for some machine-learning tasks. This is mostly focused on health-related tasks rather than aiding a wider AI assistant.

Having a dedicated engine for Siri and Apple Intelligence would be more energy efficient, speed up on-device processing and enable some offline functionality, including Siri responses and image analysis. It would also offer improved personalization and generally make the Watch more responsive and adaptive, without relying so much on a nearby iPhone.

3. Beamforming microphones

The current microphone setup in the Watch Ultra 2 is great for everyday voice commands, but it is far from perfect. Beamforming microphones would make voice recognition more accurate in noisy environments (including while out exercising) and allow the AI to filter out ambient noise.

For a good voice assistant to work, it needs to be able to hear you easily in any environment. I've found my watch sometimes struggles when I'm walking by heavy traffic or even in a gym with loud music. Multi-directional beamforming could solve some of this, as the AI could filter out those noises and pick up just the voice.

This would be particularly useful for runners and cyclists who could fire commands over to the watch without having to stop what they are doing, even when it is particularly windy.

The microphones would detect your voice clearly from a distance, so you also wouldn't need to move your wrist in order to speak to Siri. Obviously, this would also require more targeted speakers so you could hear the response, although in that case you could just get a pair of AirPods.

Final thoughts

Overall, the Apple Watch is already a very useful device. It lets me make a quick call, see my notifications, keep track of my health and fitness levels and interact with Siri. It also already makes heavy use of artificial intelligence in performing many of these tasks.

As Siri improves and becomes more conversational, as more devices get smart capabilities that an AI like Siri can interact with, and as smart assistants generally get smarter, the Watch could be well placed to take its position as the ultimate AI device.

Forget the Rabbit R1, the maligned Humane AI Pin or even the next generation of creepy, always-listening ‘Friends’. The Apple Watch is up there with Meta’s Ray-Bans as the innovation we need in AI hardware. It just isn’t there yet.