California State Senator Steve Padilla has introduced legislation proposing a four-year ban on AI chatbots in children's toys, citing concerns over safety, privacy and the risk of harmful interactions. The 59-year-old lawmaker said rapid advances in artificial intelligence have outpaced the rules designed to protect young users.

The move aims to give regulators, parents and developers time to better understand how AI-driven toys affect children and teenagers under 18. Padilla stressed that products designed for play should not expose minors to untested technology or unchecked data collection.

The proposed bill, known as Senate Bill 867, reflects growing unease among experts and parents who question whether AI companions can be trusted around children without strong and reliable safety measures. The temporary ban would also allow clearer standards to be developed before such toys reach homes and classrooms.

A Cautionary Pause on AI Tools and Chatbots

In a press release posted online, Padilla said that artificial intelligence tools and chatbots will eventually become a significant part of everyday life. However, he stressed that the potential risks of these innovations must be taken seriously, particularly where children's safety is concerned.

'Our safety regulations around this kind of technology are in their infancy and will need to grow as exponentially as the capabilities of this technology does', Padilla stated. 'Pausing the sale of these chatbot integrated toys allows us time to craft the appropriate safety guidelines and framework for these toys to follow. Our children cannot be used as lab rats for Big Tech to experiment on.'

Safety and Privacy Concerns

AI chatbots, while marketed as educational or entertaining, can expose children to unexpected risks. According to Gizmodo, experts warn that these devices can collect sensitive data, engage in unsupervised conversations and even be manipulated into delivering inappropriate content.

OneSafe CEO Chris Shei drew parallels with AI in cryptocurrency, where chatbots have been exploited to scam users or extract sensitive information. The company emphasised that AI can be deceptively persuasive in both finance and play, noting that safety measures even in adult-focused applications are still evolving.

Children's toys equipped with AI could similarly become pathways for privacy breaches or manipulative interactions, raising questions about consent and data protection.

The Real Impact on Children

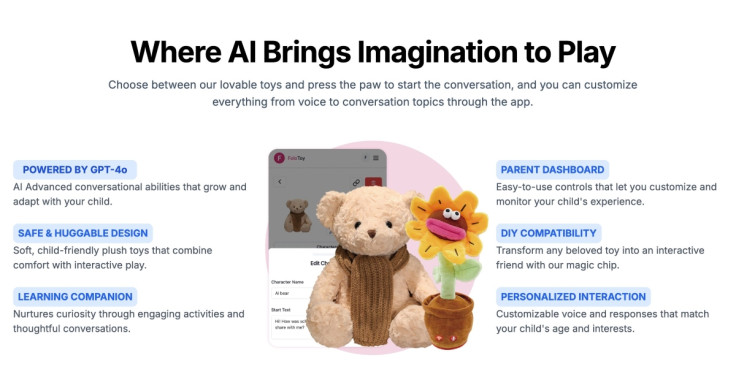

Even though AI chatbots in toys remain uncommon, troubling interactions have already been reported, according to TechCrunch. Reports say that some AI-enabled toys, including FoloToy's teddy bear Kumma, discussed 'sexual fetishes' and where to find knives in conversations with children last year. The Kumma incident prompted OpenAI to restrict the toy's access to GPT-4o.

As a result of such issues, Barbie-maker Mattel, which partnered with OpenAI in June 2025, postponed the release of its AI-assisted product.

Many specialists express unease over children forming emotional attachments to AI companions that cannot be reliably monitored or moderated. Psychologists warn that early reliance on artificial intelligence for social interaction may affect emotional growth and trust.

Furthermore, educators caution that while AI can be educational, unsupervised access could introduce misinformation or subtly influence children's behaviour.

How the Toy Industry is Reacting

Given these potential threats, toy manufacturers are watching closely. Some companies have voluntarily paused the integration of AI features into new products, citing the need to review privacy policies and safety protocols. Others argue that a ban could stifle innovation and delay the development of beneficial technologies.

Industry analysts suggest that temporary regulatory pauses, such as the proposed four-year ban, could encourage the creation of AI systems that are inherently safer, more transparent, and easier to monitor.

Shaping the Future of AI and Play

Senate Bill 867 is part of a broader national debate about artificial intelligence and its impact on vulnerable populations. If passed, it would mark one of the first instances of a US state imposing a moratorium on AI in consumer products aimed at children.

Experts say the next four years could set critical standards for responsible AI integration, helping to ensure that technological innovation does not come at the expense of safety, privacy or trust.

For now, California may lead the way in balancing technological potential with human-centric safety measures.

Originally published on IBTimes UK