Viewers of Secret Invasion, the latest Marvel-Disney TV series, may look twice at the show’s opening credits. The Halloween-green landscapes blur from one image to another like a nightmarish apparition. The sequence immediately went viral, but not for the reason the producers might have hoped. Rather than awe, there was outrage: Artificial intelligence had replaced the work of animators.

Secret Invasion’s credits won’t be winning an Oscar any time soon, but they are the first sign of a shift in movie and TV production that threatens artists, and one that might just redefine our entire culture in the process.

Hollywood’s growing interest in AI has come up against a surprisingly effective obstacle: Hollywood writers. The picket lines organized by the Writers Guild of America (WGA) have disrupted the entertainment industry as fast as you can say “Netflix.” The writers strike put late-night live shows on hold, punted major Marvel and Star Wars movies years down the line, and left prestige TV crews scrambling. Part of the reason WGA members have halted work is that they fear generative AI’s ability to produce scripts within seconds, an attractive prospect to a cash-conscious studio looking to cut costs.

Right now, tools like GPT-4 can synthesize a college essay, write a basic computer program, or even produce simple (slightly boring) songs and scripts. But they can’t generate an entire Hollywood blockbuster from scratch — yet.

In a recent interview with NPR, one striking writer, Lanett Tachel, recalls reading a “terrifying” script generated by OpenAI’s ChatGPT: “The structure was there… But it had no depth. It had no spirit. It didn’t have nuance. It wouldn’t understand how to handle race, certain jokes, things like that.”

“[AI] cannot be the genesis of any creation,” she continued. “We create these worlds.”

Considering these limitations, how long will it really take for an AI tool to produce a blockbuster movie on the scale of Mission: Impossible, from script to screenplay to cinematography, and from soundtrack to credits to an edited and finished audiovisual masterpiece?

5-Year Plan?

Nathan Lands, founder of generative AI company Lore, tells Inverse it will be sooner than you think. He points out that generative AI was a sleeping technology with little major progress in the field until November 2022 and the release of OpenAI’s ChatGPT.

“If these things have been developed for a year and they’re going to keep dramatically improving, that’s probably where you’ll start to see things that could go into films in two to three years,” Lands says.

Now, “things” are not an entire movie. Lands is talking about using AI tools in special effects or to produce b-roll that’s seen for a second or two between shots of the action hero.

But in the last six months, we’ve seen huge strides in the use of just ChatGPT, never mind all the other more powerful generative AI tools that seem to come out every other day, so it stands to reason we could see something similar happening in stealth mode for movies. The exponential speed of adoption of large language models leads Lands to believe it’s only a matter of time before an AI progresses from writing a script to generating a full-blown blockbuster.

“I think it’s probably closer to three to five years, probably closer to five, where you might be able to literally type in things and have a full-blown fantastic movie,” Lands says.

But to make that deadline, AI tools need to overcome a massive hurdle: They need to be able to sustain a narrative.

Plot Twist

The problem with generative AI tools today (and with their human prompters, perhaps) is that giving them the right guidance to sustain a contained story arc and consistent visuals over time is extremely difficult. Stephen Parker should know. He’s part of the team at Waymark, a Detroit-based video company that made a 12-minute film created entirely using images generated by OpenAI’s image-making AI, DALL-E 2.

“This was not a short, small project,” Parker tells Inverse. “It took three-and-a-half months and a team of seven-plus contractors that whole time to make this 12-minute film.”

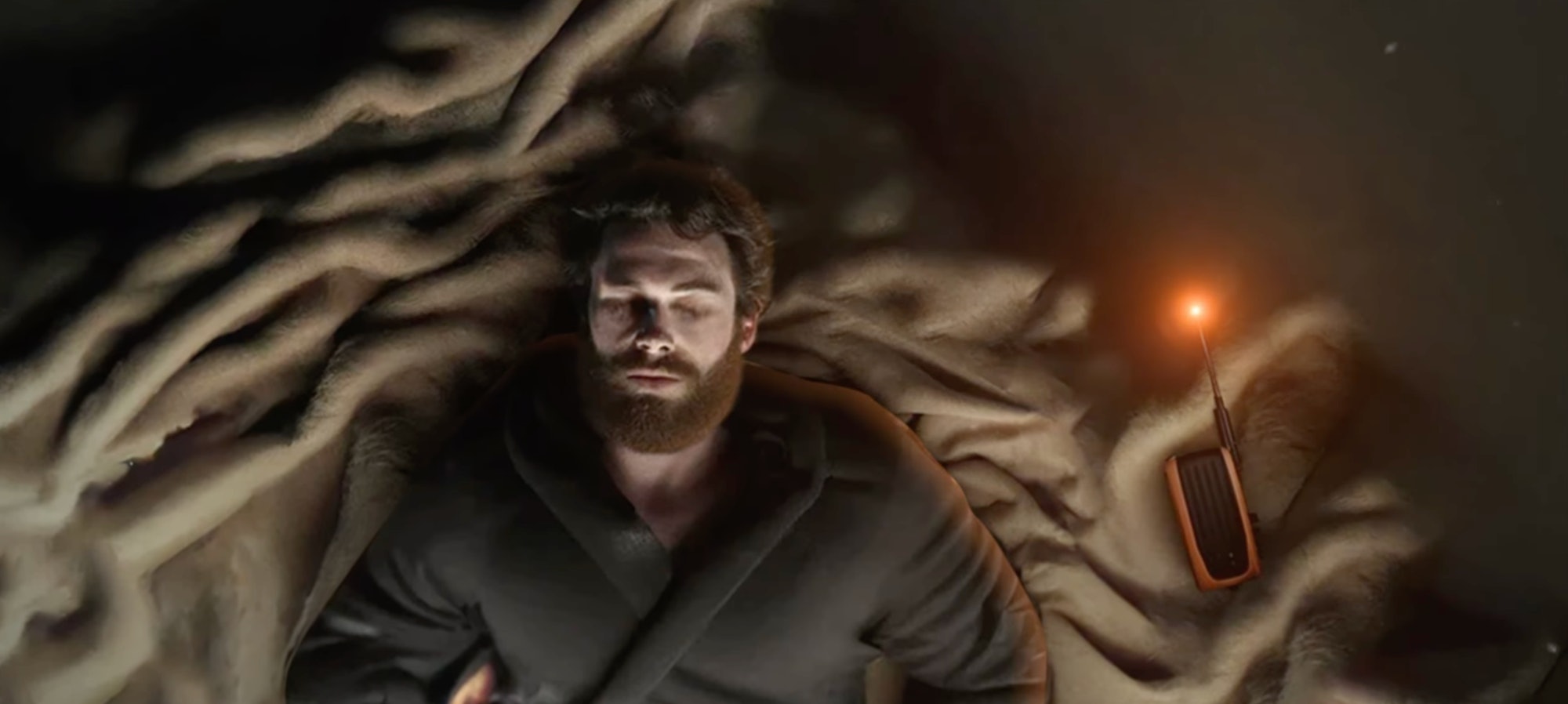

The film, The Frost: Part One, is uncanny at best and disturbing at worst. The reason why isn’t just the plot. It’s the imagery, too.

“We found it far easier to get emotional shots of dogs [than humans],” Parker says. DALL-E 2 also proved difficult to coax into remembering key elements of a scene. A pivotal plot point in the film, for example, is set in a room at the United Nations’ headquarters. Parker and his team wanted to produce seven different shots within that space, but needed the background, people and furniture within the room to be consistent.

“We kind of got frustrated by the scene,” says Parker. The wide shot would look different to the close-ups, and in turn, they would look different to reverse angles. In the end, the team hit on a workable solution that involved detailed prompts for DALL-E to create visual anchors throughout the different scenes to essentially build up the AI’s memory.

“We ended up using this transponder as this MacGuffin throughout the film that gets passed along and people are using to respond to the signal,” Parker says. “We need these small little elements of continuity, or a sort of structural continuity to a shot or set, in order to bring it together in the edit.”

The transponder ultimately gives the viewer a sense they are watching a cohesive movie, Josh Rubin, who directed The Frost, tells Inverse. It worked, to a degree. It’s important to point out that Waymark isn’t a movie studio. But it remains the case that on a traditional film, you don’t need to keep reminding the entire cast, crew, designers, editors, directors, and producers (even the set itself) what’s going on.

“Just like the beginning of movies, there’s going to be a lot of duds. There’s going to be a lot of bad stuff that comes out,” Rubin says. “But whether it’s in three years, whether it’s five years, two years, whatever, there’s going to be someone somewhere that nails it.”

In Pursuit of Imperfection

That sort of timeline is one others studying the field agree with. “I think we’re likely to see a fully generated AI movie within a year, but it’s not going to be very good at all,” Irena Cronin, CEO of Infinite Retina, a consultancy studying the rise of AI, tells Inverse.

“Give it about three years, and you’ll have something that is more on the level of an independent movie that was made maybe sometime in the ’90s,” she says.

The team behind The Frost agree.

“I don’t know that I have a definitive answer for you on timeline,” says The Frost’s Parker. “My current estimate is three years, and I don’t think that that has moved too greatly from the beginning of our process making [The Frost] earlier this year.”

Within five years, Cronin believes the ecosystem of AI tools will begin specializing in servicing Hollywood to the extent a true “blockbuster” may be possible.

“I say at the most, it’ll take five years before you could see something that is quite acceptable, and might even be really good for like Netflix or Apple or something like that,” Cronin says.

What that does to the existing Hollywood system is equally uncertain — which is why Tinseltown is being struck by strikes.

“You have people in Hollywood who are freaked out, and they think it’s going to happen within a year or two,” Cronin says — but she cautions against seeing this as the death of the movie industry as we know it now.

“It’s not necessarily going to edge out the studio system for a while even after that,” Cronin says, “because it’s going to be pretty clear those movies that have been made by humans versus those that are made by AI for a while.”

Watching The Frost, it’s hard to disagree.

Cronin points to a favorite example of hers: the 1974 neo-noir mystery Chinatown.

“There’s lots of people even today, they look at the script and say, ‘Oh, that could have been written better, or it could have been written like this, or it’s taking too long for that to happen,’” Cronin says. For example, Jack Nicholson’s character, J.J. Gittes, spends much of the film with his face covered in gauze and bandages, a choice some critics have called out for running against the Hollywood convention of clearly showing the leading man’s face throughout a film. It works, even though the prevailing wisdom suggests it shouldn’t.

Yet despite the flaws, the movie is critically acclaimed: it has an 8.2 rating on IMDb, even if its director, Roman Polanski, is highly problematic. It’s the foibles that come from an act of human creation that make movies like Chinatown special, says Cronin.

“If you do not give the AI directions that it shouldn’t be done perfectly,” Cronin says, “it’s going to make a really boring kind of movie.”