Of all the ways AI is changing music production, its ability to model vocals is having the biggest impact, and that trend looks set to go much further. Stratospheric, even. Once you start talking about AI’s ability to model famous singers, you’re talking ‘money’, and we all know what happens then. Usually trouble!

Where we are now is something of a calm before the storm. There have been high-profile cases of AI models of famous singers making big news stories, but for now it’s “wait and see”. In the meantime, AI modelling websites are making money by selling access to models of famous singers, and very few of them say anything about those artists getting a cut of the profits.

Of the sites we’ve tested, only a few, such as Kits and Voice-Swap, mention royalty splits with the affiliated artists whose voices their AI models are based on, and at least one of them also gives those artists the final say on commercial releases.

Another site, Musicfy, also makes clear that it’s aware of some of the ethical concerns raised by using AI models of real, famous voices. “AI voice cloners’ potential applications span from entertainment to accessibility,” the site says. “However, their use demands careful consideration due to the ethical implications involved. It’s essential to wield this technology responsibly, recognising that great power comes with great responsibility.”

So it feels a little like we’re all waiting for some kind of legal clarification, or maybe even a high-profile court case, to move things forward. If you were one of these high-profile artists, would you be happy with your voice being used in a song, speech or novelty placement without any recompense?

While we wait for that outcome, artists are not sitting on their hands. Former MusicRadar interviewee Holly Herndon has been very ‘vocal’ in the field of AI, championing its advantages and even offering a model of her own voice, Holly+. Grimes has also offered to help artists and fans who want to use a model of her voice, as long as there’s a royalty split. It’s hard not to think this is a solution that would please everybody, with the AI modellers taking their cut along the way.

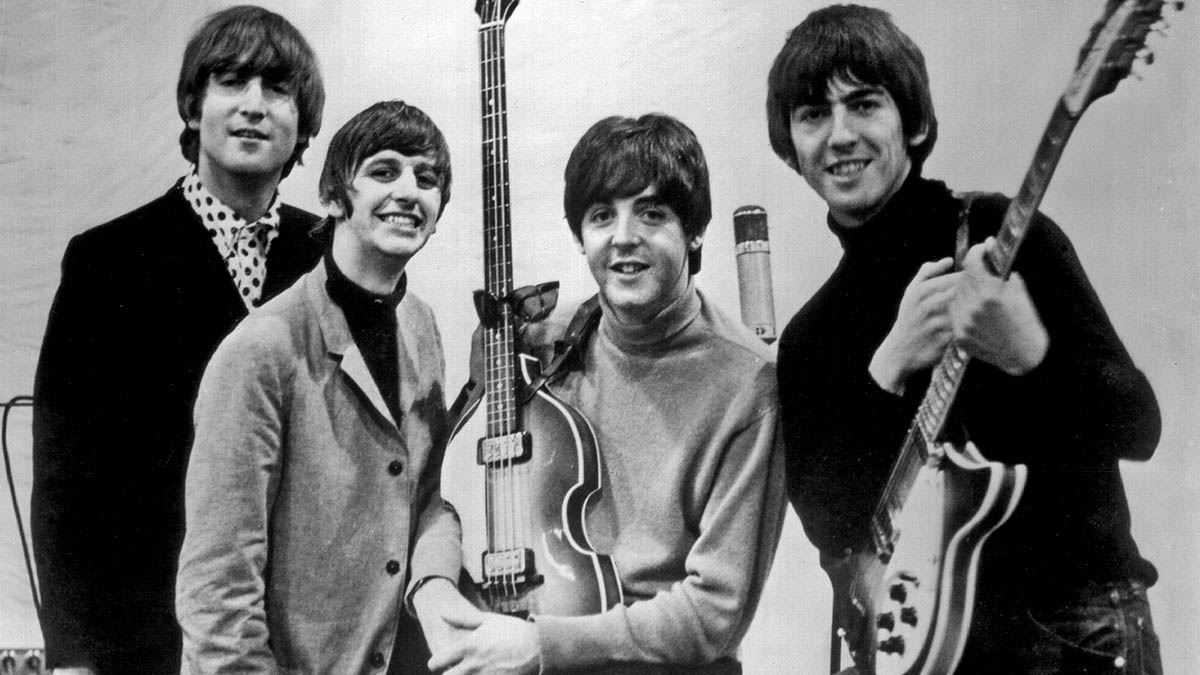

Whether there is a market for albums by AI artists is another matter. Done well and sensitively, there could be a market (or at the very least a lot of curiosity) for ‘new music by’ bands who have long ceased to exist or refuse to reform. With that in mind, we’ve put AI technology to the test by putting together a fake Beatles track using the AI vocal modelling platform Lalals. We’re calling it YesterdAI…

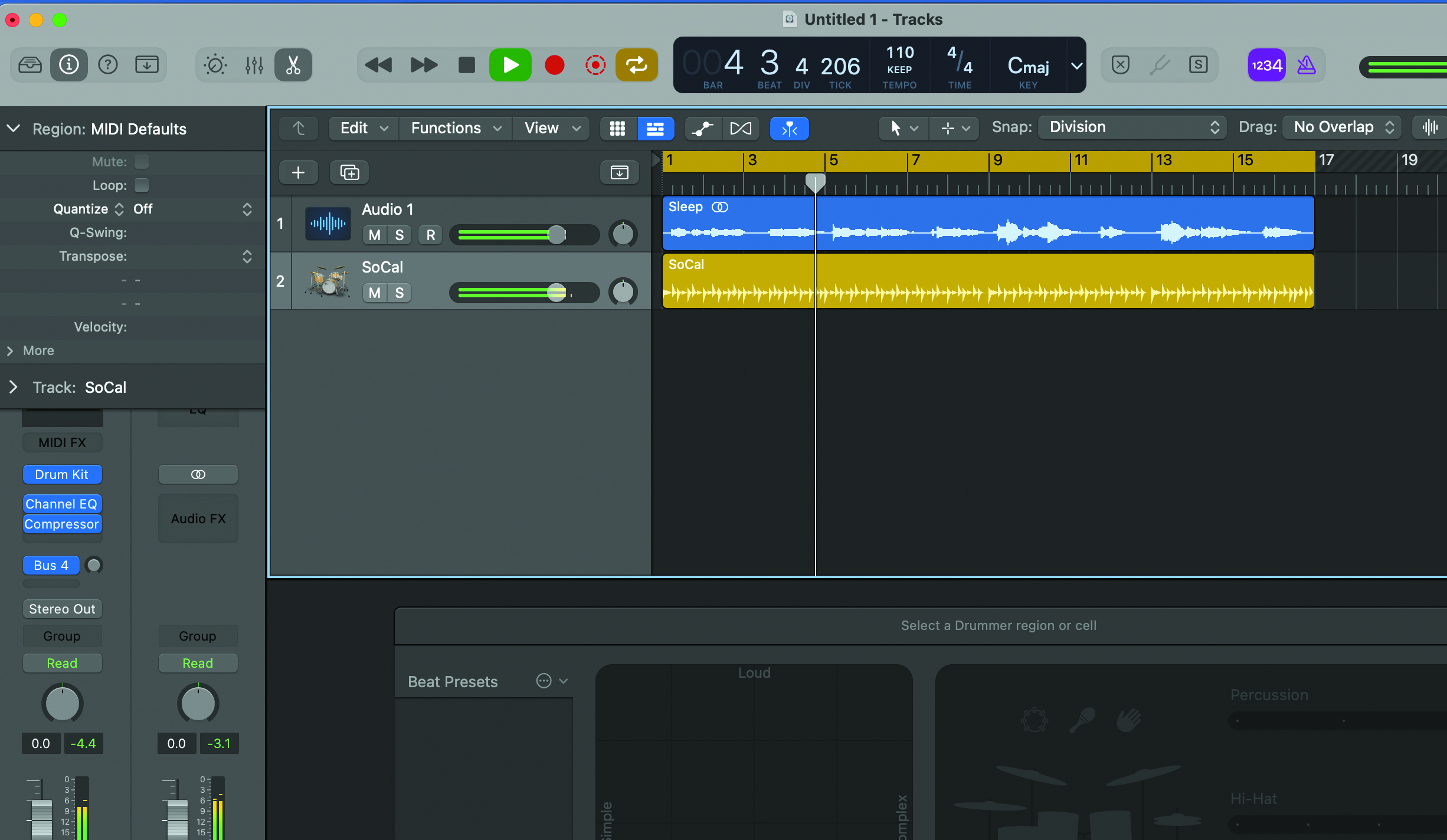

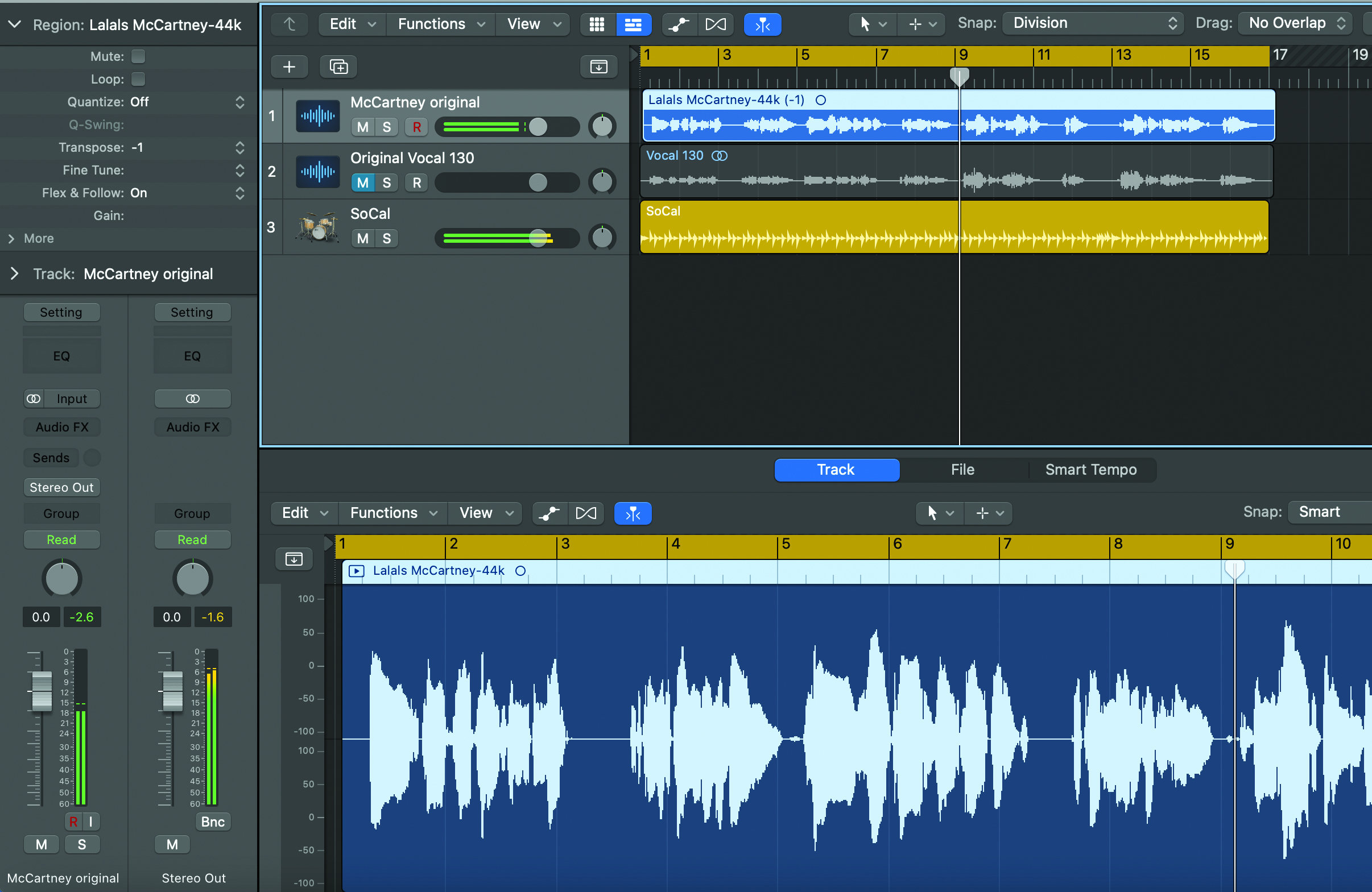

Yes, we really did decide to give this a go. What could possibly go wrong? We chose Lalals.com as our AI platform and Logic for the tweaking. Here’s what happened. First we grabbed some vocals, sung by the excellent Donna Marie, from the Computer Music Magazine vocal pack given away last year, and loaded them into Logic.

We then upped the tempo from the original 110bpm to a more early-Beatles 130bpm using Logic’s Timestretch, and created a Drummer track to help with our timing. Then we simply exported the new, faster audio track.
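For anyone curious about what that tempo change means numerically, a time-stretch from 110bpm to 130bpm speeds the audio up by a factor of 130/110 (about 1.18x) and shortens it to 110/130 (about 0.85) of its original length, with pitch left unchanged. Here’s a minimal Python sketch of that arithmetic; the function names and the 8-bar, 4/4 example phrase are our own illustrative assumptions, not anything taken from Logic or the vocal pack:

```python
def stretch_ratio(source_bpm: float, target_bpm: float) -> float:
    """Duration multiplier when time-stretching audio from one tempo to another.

    A faster target tempo gives a multiplier below 1 (shorter audio).
    A time-stretch changes length only; pitch stays the same.
    """
    return source_bpm / target_bpm


def bar_length_seconds(bpm: float, beats_per_bar: int = 4) -> float:
    """Length of one bar in seconds at the given tempo."""
    return beats_per_bar * 60.0 / bpm


if __name__ == "__main__":
    ratio = stretch_ratio(110, 130)
    print(f"duration multiplier: {ratio:.4f}")  # 0.8462
    print(f"speed-up: {1 / ratio:.4f}x")  # 1.1818x
    # Hypothetical 8-bar phrase in 4/4, before and after the stretch
    before = 8 * bar_length_seconds(110)
    after = before * ratio
    print(f"8 bars: {before:.2f}s -> {after:.2f}s")  # 17.45s -> 14.77s
```

Working in bars rather than raw seconds makes it easy to check that the stretched audio still lines up with a 130bpm grid.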

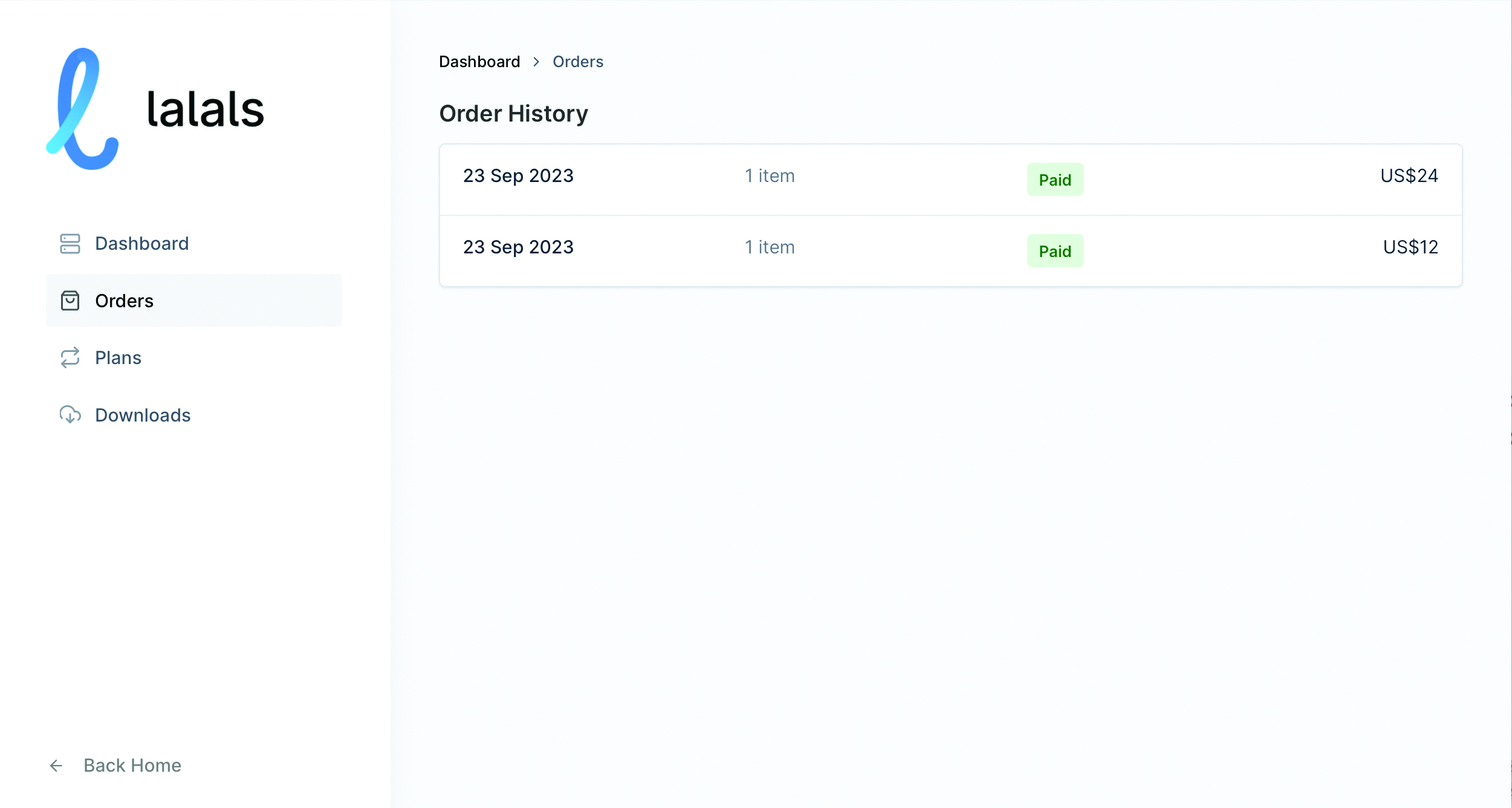

We went with Lalals.com because it has the best-known singer AI models, including both an AI John Lennon and an AI Paul McCartney. We signed up for the $12/month Basic subscription, only to find that both models require a Pro account, and upgrading cost us another $24 rather than the $12 difference (this was refunded later).

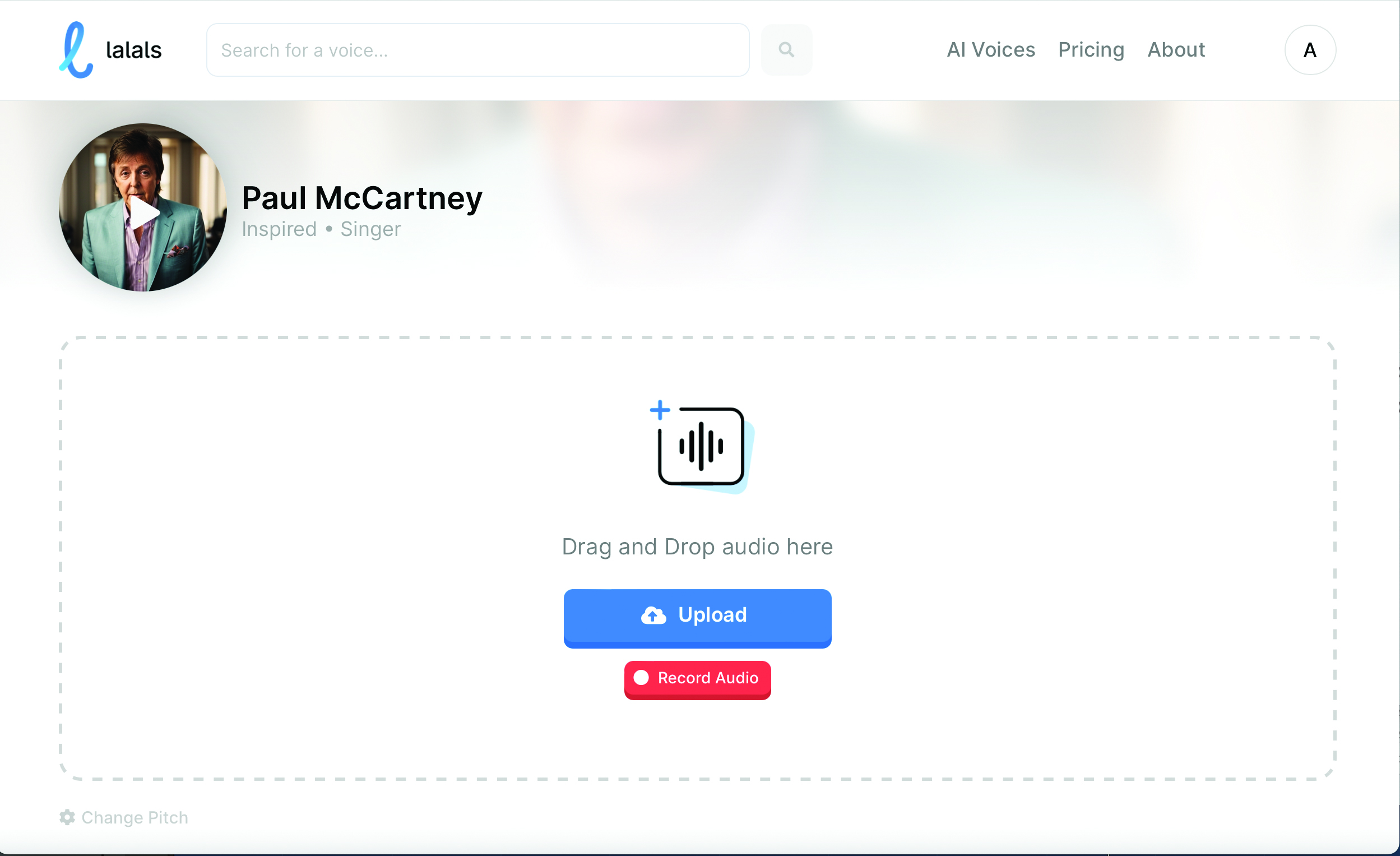

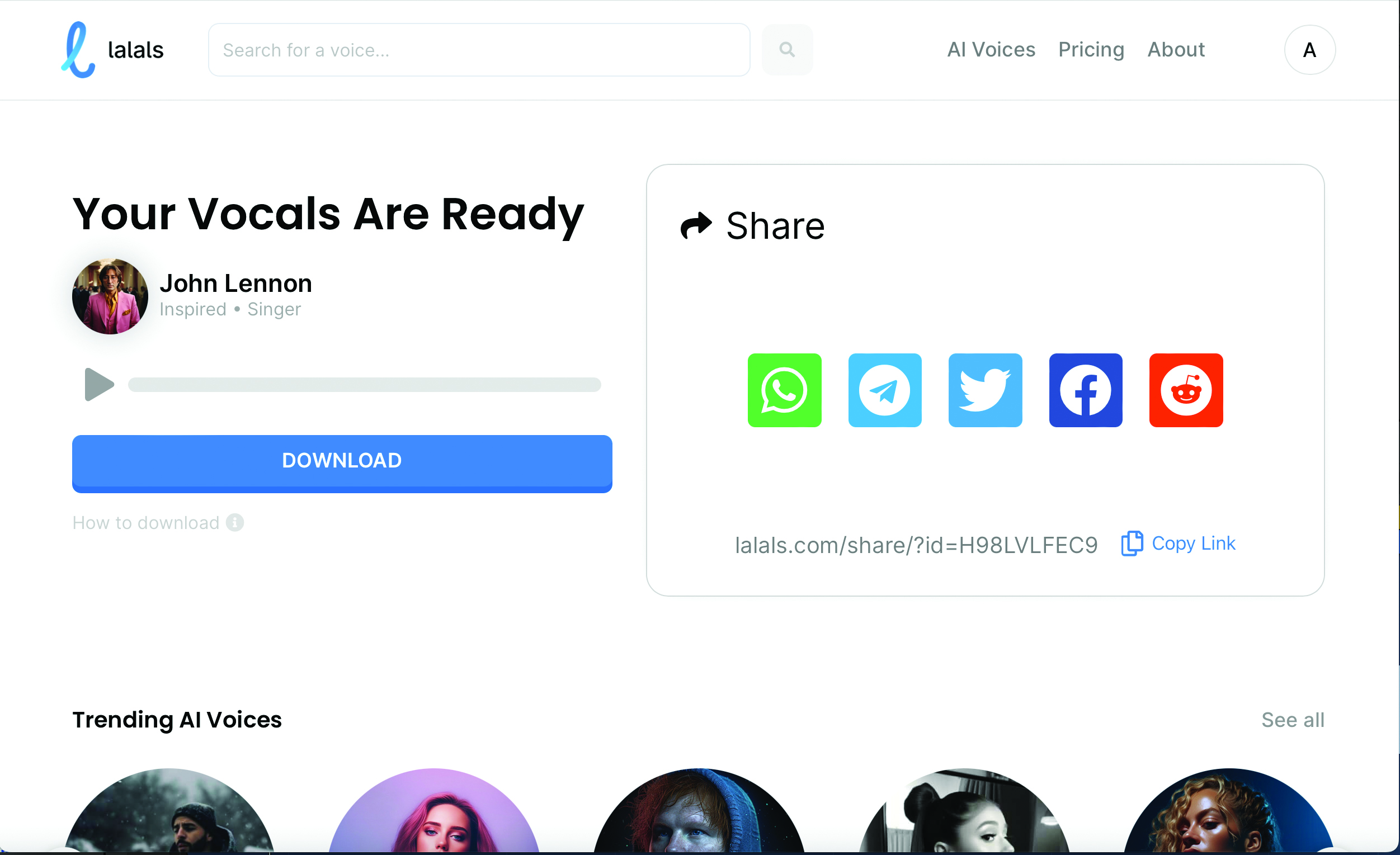

Next we search for the Paul McCartney model and upload the Donna Marie vocal we saved at 130bpm. Processing takes about as long as the audio itself, around 30 seconds.

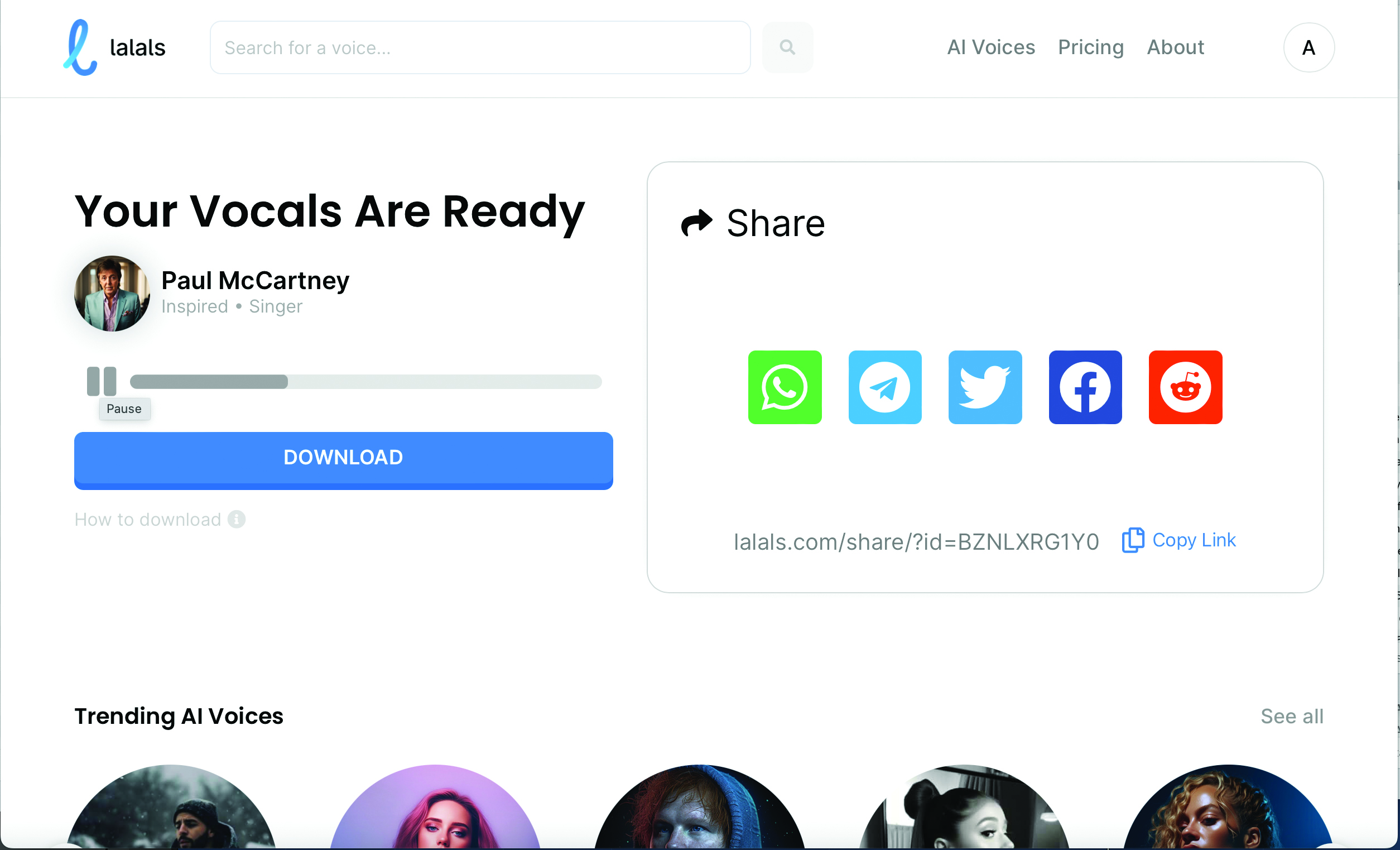

“Your Paul McCartney vocals are ready,” Lalals then tells us: words we never expected to write. We give them a play and, so far, we’re not impressed. They’re a little high (probably because our source was a female vocal) and American-sounding, but there is a touch of Paul in there.

We then load our McCartney vocal into Logic and experiment with pitching it down to make it more realistic. Taking it down two semitones is a definite improvement, but any further and it starts to sound worse.
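Semitone shifts are multiplicative. Assuming equal-tempered semitones, each one changes every frequency in the vocal by a factor of 2^(1/12), so pitching down two semitones multiplies frequencies by 2^(-2/12), roughly 0.891. A short Python sketch, using hypothetical helper names of our own:

```python
def semitone_ratio(semitones: float) -> float:
    """Frequency multiplier for a shift of `semitones` in 12-tone equal temperament.

    Negative values shift down, positive values shift up; 12 semitones is an octave.
    """
    return 2.0 ** (semitones / 12.0)


def shift_frequency(freq_hz: float, semitones: float) -> float:
    """Return a frequency after shifting it by the given number of semitones."""
    return freq_hz * semitone_ratio(semitones)


if __name__ == "__main__":
    # Multipliers for the downward shifts we tried on the 'McCartney' vocal
    for st in (-1, -2, -3, -4):
        print(f"{st:+d} semitones -> x{semitone_ratio(st):.4f}")
    # Illustrative note only: A3 (220 Hz) pitched down two semitones
    print(f"220 Hz -> {shift_frequency(220.0, -2):.1f} Hz")
```

As an example, a 220Hz A3 pitched down two semitones lands at roughly 196Hz, a G3.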

Back on Lalals.com, we repeat the process, this time using the site’s John Lennon AI model. Lennon is also a Pro model, so you’ll need the $24/month subscription. Processing is similarly quick.

Again it sounds too high (the lesson here is probably to use source vocals of the same sex as your target AI model), but some Lennon character comes through as it progresses. It’s also free of artefacts, which is impressive given how quickly it was processed.

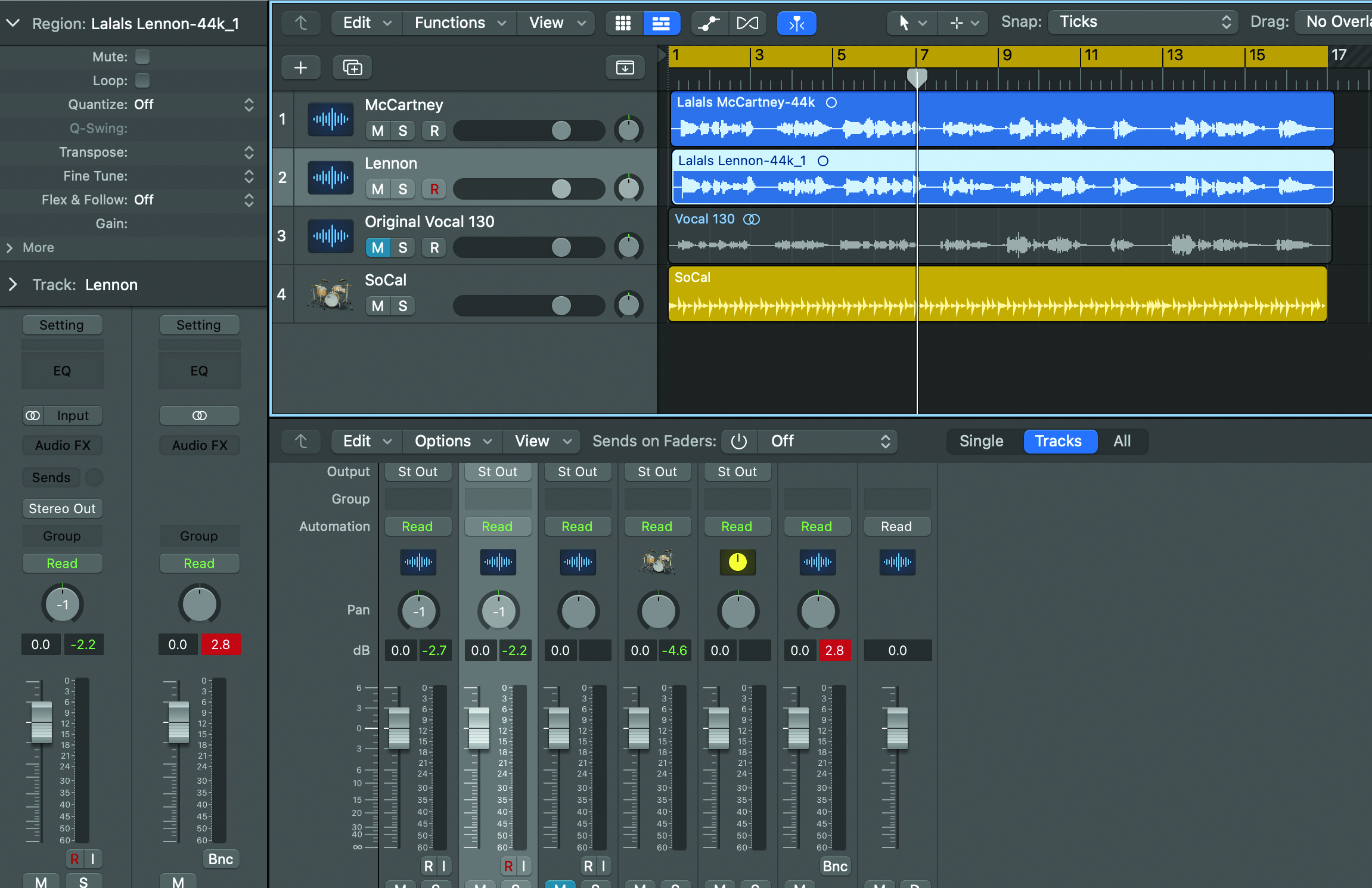

Back in Logic, we load in the ‘Lennon’ vocal to sit alongside the ‘McCartney’ one… as you do. Played side by side, the two blend into what sounds like a single vocal, which isn’t what we’re after. Shifting their pan positions makes them more distinguishable. And then there are those classic harmonies.
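Panning separates the parts by giving each vocal a different balance of left and right level. Pan laws vary between DAWs and we aren’t claiming this matches Logic’s, but a common choice is the constant-power (sine/cosine) law sketched below in Python, where a centred source sits about 3dB down in each channel. The function names and pan positions in the example are hypothetical:

```python
import math


def constant_power_pan(pan: float) -> tuple[float, float]:
    """Left/right linear gains for a pan position from -1.0 (hard left) to 1.0 (hard right).

    Uses a sine/cosine law so that left**2 + right**2 == 1, keeping the
    total power constant as the source moves across the stereo image.
    """
    if not -1.0 <= pan <= 1.0:
        raise ValueError("pan must be between -1.0 and 1.0")
    angle = (pan + 1.0) * math.pi / 4.0  # maps -1..1 onto 0..pi/2
    return math.cos(angle), math.sin(angle)


def gain_db(gain: float) -> float:
    """Convert a linear gain to decibels (silence is -inf dB)."""
    return 20.0 * math.log10(gain) if gain > 0 else float("-inf")


if __name__ == "__main__":
    # Hypothetical positions: 'Paul' a little left, 'John' a little right
    for name, pan in (("Paul", -0.4), ("John", 0.4)):
        left, right = constant_power_pan(pan)
        print(f"{name}: L {gain_db(left):.1f} dB, R {gain_db(right):.1f} dB")
```

Keeping the power constant means a vocal doesn’t appear to get louder or quieter just because it has been moved away from the centre.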

At this point we try shifting ‘John’s’ pitch to harmonise with ‘Paul’s’, but it doesn’t work at larger intervals (four semitones or more), so we settle on returning ‘Paul’s’ vocal to its original pitch and taking ‘John’s’ down three semitones, as Lennon tended to sing the lower parts. It’s better, but it’s not there yet.
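Assuming both ‘Lennon’ and ‘McCartney’ were generated from the same Donna Marie vocal, shifting one copy by a fixed number of semitones produces a parallel harmony at a constant interval: three semitones apart is a minor third, four is a major third. A fixed chromatic shift keeps every note exactly the same distance apart, so, unlike a harmony written in the song’s key, some notes may fall outside the key. The hypothetical Python helper below simply names the interval between two shifted copies:

```python
# Interval names within one octave, indexed by distance in semitones
INTERVAL_NAMES = [
    "unison", "minor second", "major second", "minor third", "major third",
    "perfect fourth", "tritone", "perfect fifth", "minor sixth",
    "major sixth", "minor seventh", "major seventh", "octave",
]


def interval_between(shift_a: int, shift_b: int) -> str:
    """Name the interval between two copies of the same melody, each
    pitch-shifted by a whole number of semitones.

    Distances beyond an octave are folded back into one octave
    (so 15 semitones reports as a minor third); exact multiples of
    12 report as an octave.
    """
    distance = abs(shift_a - shift_b)
    if distance == 0:
        return INTERVAL_NAMES[0]
    folded = distance % 12 or 12  # 12, 24, ... map to "octave"
    return INTERVAL_NAMES[folded]


if __name__ == "__main__":
    # 'Paul' at original pitch (0), 'John' shifted down by 0 to 5 semitones
    for john_shift in range(0, -6, -1):
        print(f"John {john_shift:+d}: {interval_between(0, john_shift)}")
```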

We then try to mix our way out of trouble, using ChromaVerb to add a touch of reverb to the vocals, which helps to make them distinct. But by now we’ve realised that we should have started with a male vocal, and one not sung in an American accent!

Finally, add some Liverpool drums from Logic’s library and some guitars, and you have, well, something that approximates the Beatles. It’s an interesting exercise, though. Try it again in 2025 and the results will likely be a lot closer to perfect.