What is it with AI and boobs? I’m sure I’m not the only person to have experienced the highly sexualized content these text-to-image generators seem to spit out. From Midjourney to Lensa and now Photo AI, even when asking these machines to create innocent images, boobs somehow seem to enter the mainframe.

Perhaps I shouldn’t be too quick to jump to conclusions, but could it have anything to do with the fact that men dominate the tech industry and are mostly the ones behind AI photo generators? When we get down to the nitty-gritty of how AI neural engines are trained, it still confuses the hell out of me.

As I understand it, open-source models such as Stable Diffusion, the AI model that Lensa is built on, are trained on images from all over the internet. The vast set of images used by Stable Diffusion is called LAION-5B and was scraped from the web, but the people who develop these models must have some control over how that content is used.


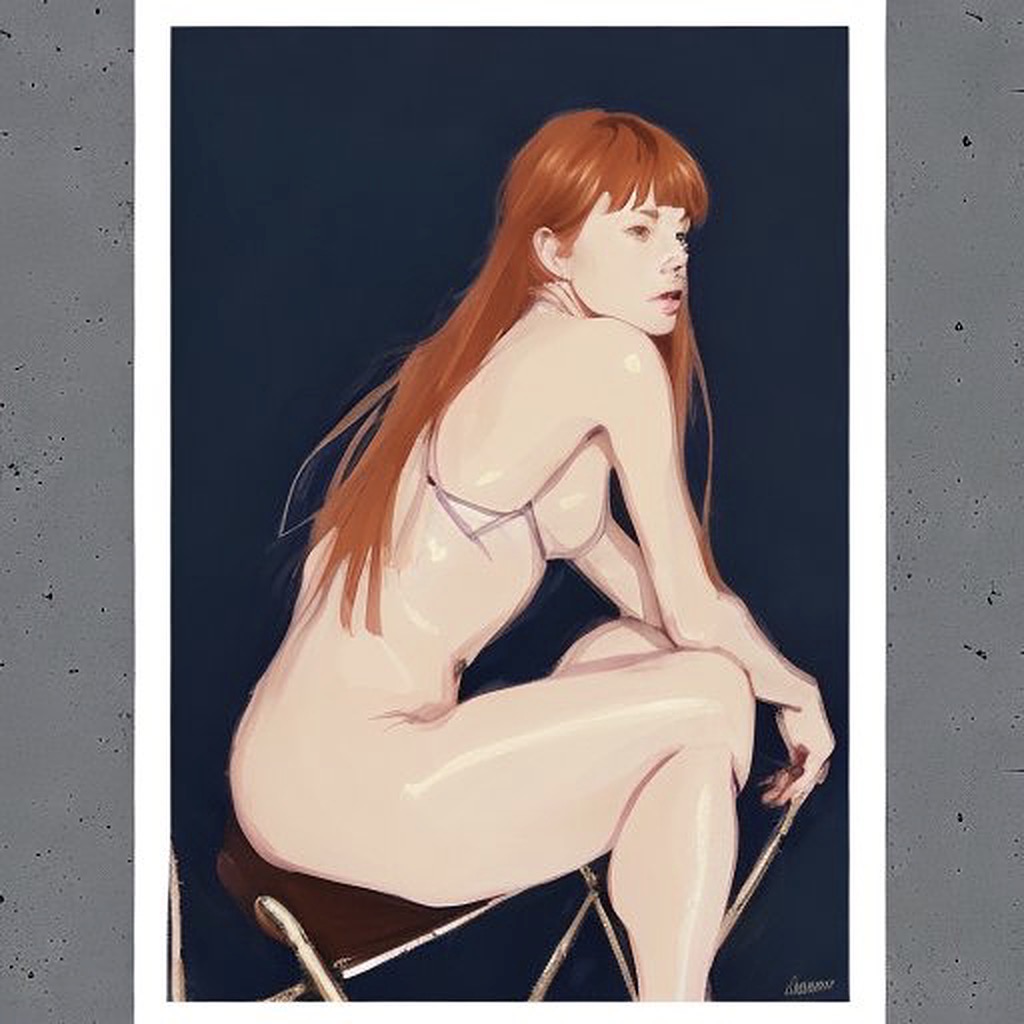

I first noticed how overly sexualized AI images seemed to be when Lensa became a viral sensation back in 2022. The Magic Avatar feature was the most noteworthy. Despite the fact I had only uploaded photos of my face, some of the images it output were of very scantily clad digital characters that looked like me, but with much bigger breasts and an unrealistically large bum. I was shocked at how overtly sexualized the images were. It didn't take much of a leap to imagine how deepfakes could be used to generate nonconsensual naked photos.

More recently, I tried Photo AI – a photoshoot generator that claims to be able to “replace photographers”. After uploading 30 images of myself (again, fully clothed and mostly only of my face), I used the Photoshoot Design feature to generate a set of photos of me standing in front of the Taj Mahal wearing a blue yoga outfit. I specifically used parameters that I hoped would give tame results, so I was somewhat dumbfounded when one of the images it output was a completely topless shot.

I have several issues with both platforms, but let’s dissect the Lensa case first. We already live in a world where women are held to ridiculously high beauty standards. Certain figure types are deemed more beautiful than others (not something I believe in; I say all figures are beautiful, but I am not everyone). Gender bias has been created by society over years of oppression and objectification, but it seems that not only are these computers contributing to it, they are in fact exaggerating it.

When it comes to Photo AI, my biggest issue was that I specifically set the location to the Taj Mahal – an Islamic mausoleum complex, which includes a mosque, located in Agra, India. In India, a predominantly Hindu country, nudity, and female nudity in particular, is widely deemed shameful and entirely inappropriate. That may seem a little hypocritical given that India is the birthplace of the Kama Sutra and that the temples at Khajuraho feature intricate, erotic carvings, but since the era of British control in the 1850s, India has become a much more reserved country.

We reached out to Photo AI's founder, Pieter Levels, to ask how such an image could've been generated from a text prompt that specified no nudity, and he responded:

"When training models, all input photos are checked for NSFW content, and if any are found, they are immediately deleted from the dataset. Photo AI itself bans any use of prompts that are likely to create inappropriate images such as “NSFW”, “nude”, and “naked” among many other prompts."

"In this case, an NSFW photo was produced and managed to slip through as Google’s NSFW API is not perfect and AI can be unpredictable. Photo AI has taken steps to ban a number of additional prompts to ensure this kind of imagery can’t be reproduced in the future."

One of the reasons women, in general, seem to experience overly sexualized AI content is that there is, unfortunately, just a lot more imagery of naked or partially naked women on the internet than there is of men. Even in 2023, there is plenty of evidence of patriarchal sexism embedded in the images (and metadata) that make up the web: images that imply men are professional and business-like and are judged on their success, while women are so often judged on their physical appearance.

Melissa Heikkilä, a senior reporter at MIT Technology Review, wrote an article in 2022 about her feelings on being overly sexualized by AI. She described it as being “crushingly disappointing”, especially since her [male] colleagues had been “stylized into artful representations of themselves”. Interestingly, Heikkilä tested the image generator again, but on the second round told the app she was male. The images it generated were vastly more professional, suggesting that something in how the AI is programmed to handle images of women is deliberate.

The AI debate continues, as it will for the foreseeable future. There are no signs of its progress slowing down and as it permeates every part of our daily lives, we are going to have to learn to live with it. But that doesn’t mean we shouldn’t call it out when it’s inappropriate, demand better censorship and attempt to create a healthier, more gender-equal AI world.