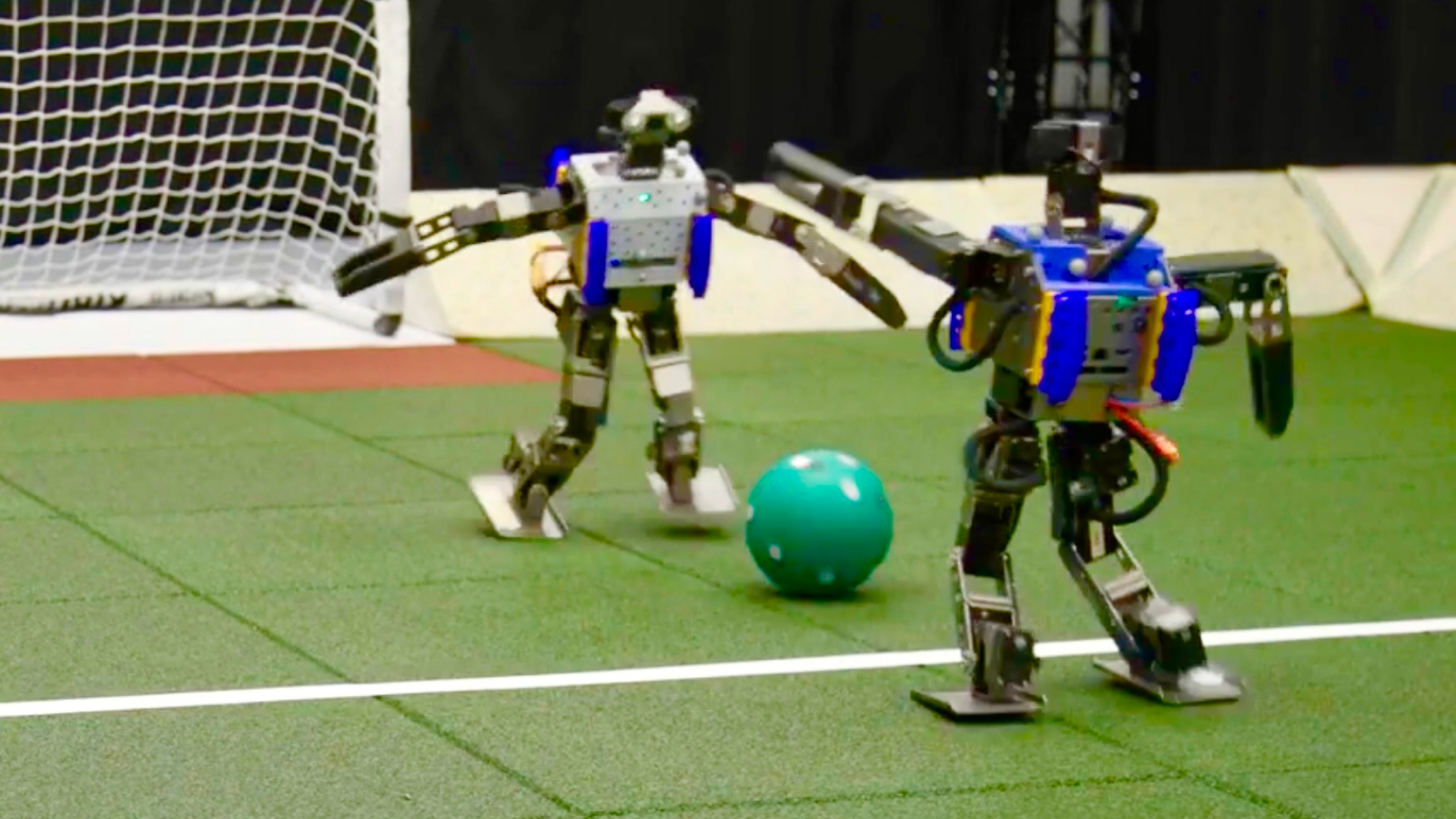

Scientists have trained miniature humanoid robots to play soccer using a new artificial intelligence (AI) training method — and footage shows the robots demonstrating their skills in a one-on-one game.

In the short clips, two bipedal robots play several games in which one is attacking a goal and the other is defending it.

They kick the ball around, block shots and scurry across the pitch, waving their arms and falling over along the way. Still, they try to keep their balance and recover quickly after a fall.

It might not look like it at first, but they're also playing highly strategically, learning how the ball moves and anticipating their opponent's actions as they try to outwit and outpace them, the scientists said in a study published April 10 in the journal Science Robotics.

Humanoid robots are difficult to configure because every movement needs to be programmed, which requires scientists to compile enormous amounts of data. Many modern humanoid robots, however, have been given a helping hand by AI, which lets them learn for themselves so that not every single motion has to be pre-scripted or programmed. For example, the Figure 01 robot can learn just by watching video; scientists previously taught it to make coffee by showing it only 10 hours of training footage.

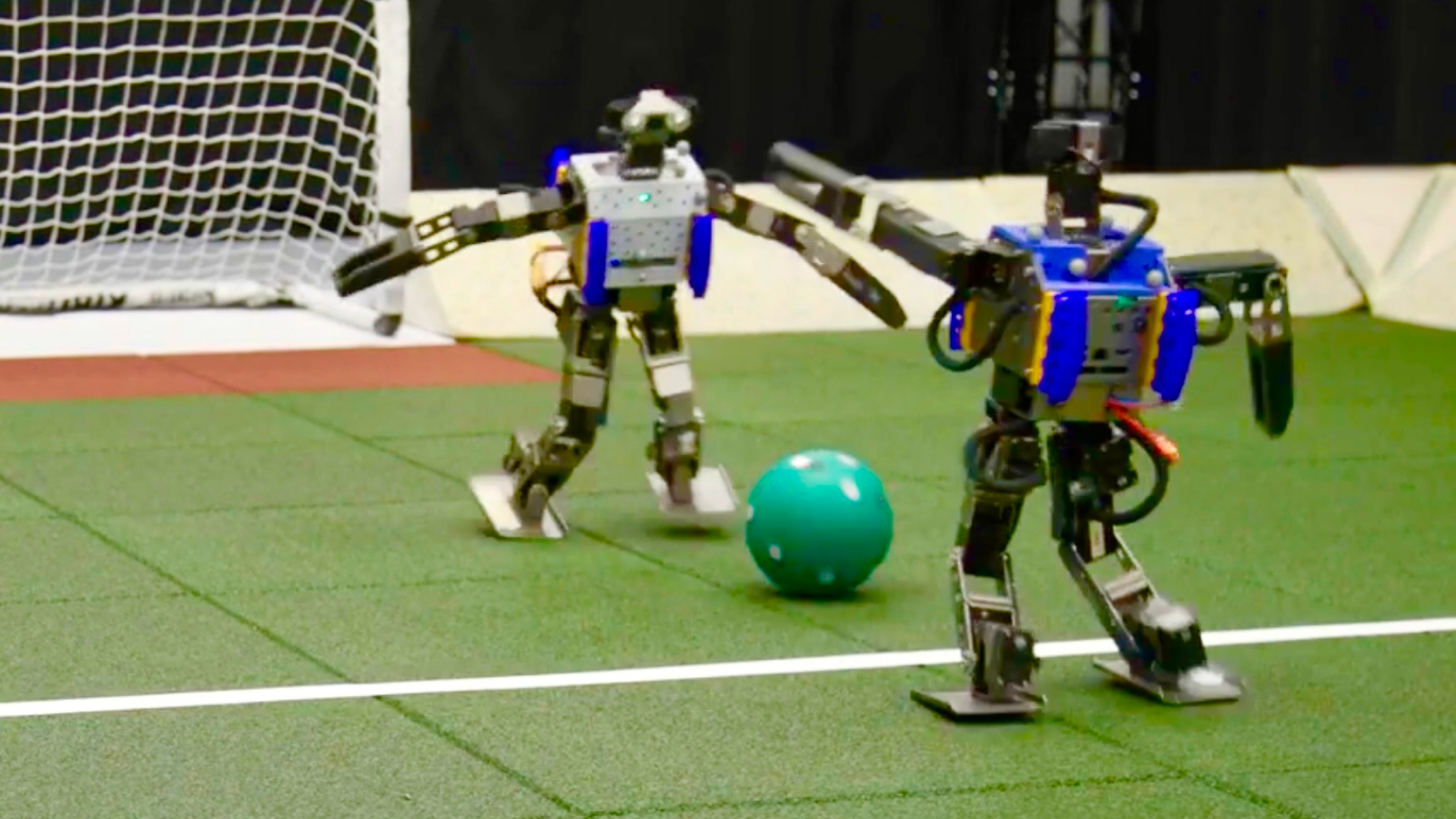

In the new study, Google DeepMind scientists trained their miniature Robotis OP3 robots to play soccer using a technique called "deep reinforcement learning." This is a machine learning training technique that combines several different AI training methods.

This cocktail of techniques included reinforcement learning, which hinges on giving the robot a virtual reward when it completes a goal; supervised learning; and deep learning, which uses neural networks: layers of simple computing units that act like artificial neurons, arranged in a way loosely inspired by the human brain.
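To make the "virtual reward" idea concrete, here is a minimal Python sketch of plain reinforcement learning (tabular Q-learning) on a made-up, one-dimensional pitch where the only reward comes from reaching the goal. It is an illustration under simplifying assumptions, not DeepMind's method: the pitch, the reward and every parameter value are hypothetical, and a simple lookup table stands in for the deep neural network a deep reinforcement learning system would use.

```python
import random

# Hypothetical toy "pitch": positions 0..GOAL along a line; reaching GOAL scores.
GOAL = 5
ACTIONS = (-1, 1)  # index 0 = step left, index 1 = step right


def step(state, action):
    """Move one square (clamped to the pitch) and return (next_state, reward, done)."""
    next_state = min(max(state + action, 0), GOAL)
    if next_state == GOAL:
        return next_state, 1.0, True  # virtual reward for completing the goal
    return next_state, 0.0, False


def best_action(q_row, rng):
    """Index of the highest-valued action, breaking ties at random."""
    top = max(q_row)
    return rng.choice([i for i, v in enumerate(q_row) if v == top])


def train(episodes=500, alpha=0.5, gamma=0.9, epsilon=0.1, max_steps=100, seed=0):
    """Tabular Q-learning: learn which action earns the most long-term reward."""
    rng = random.Random(seed)
    # q[s][i] estimates the discounted future reward of taking ACTIONS[i] in state s.
    q = [[0.0, 0.0] for _ in range(GOAL + 1)]
    for _ in range(episodes):
        state = 0
        for _ in range(max_steps):
            # Epsilon-greedy: usually exploit the best-known action, sometimes explore.
            if rng.random() < epsilon:
                a = rng.randrange(len(ACTIONS))
            else:
                a = best_action(q[state], rng)
            next_state, reward, done = step(state, ACTIONS[a])
            # Nudge the estimate toward reward + discounted value of the next state.
            target = reward if done else reward + gamma * max(q[next_state])
            q[state][a] += alpha * (target - q[state][a])
            state = next_state
            if done:
                break
    return q


def greedy_policy(q):
    """Best learned action at every non-goal position."""
    return [ACTIONS[max(range(len(ACTIONS)), key=lambda i: q[s][i])] for s in range(GOAL)]


print(greedy_policy(train()))
```

Running it prints the learned policy, which should be to step right toward the goal from every position. Nobody scripted that behavior; it comes from the reward signal alone, which is the core idea the robots' far more complex training builds on.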

The robots started out with a baseline of scripted skills before the scientists applied their deep reinforcement learning technique.

In their matches, the AI-trained robots walked 181% faster, turned 302% faster, kicked the ball 34% faster and took 63% less time to recover from falls when they lost balance, compared with robots not trained using this technique, the scientists said in their paper.
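The percentages are easier to picture as multipliers. The short sketch below assumes "X% faster" means an increase of X% over the comparison robots (so 181% faster is roughly 2.8 times the speed) and "X% less time" means the new time is (100 − X)% of the old one; the article does not spell out that convention, and the function names are hypothetical.

```python
def speed_multiplier(percent_faster):
    """Assumed reading of "X% faster": new = old * (1 + X/100)."""
    return 1 + percent_faster / 100


def time_multiplier(percent_less):
    """Assumed reading of "X% less time": new = old * (1 - X/100)."""
    return 1 - percent_less / 100


# Figures reported for the trained robots versus robots without this training.
for label, pct in [("walking", 181), ("turning", 302), ("kicking", 34)]:
    print(f"{label}: {speed_multiplier(pct):.2f}x the baseline speed")
print(f"getting up: {time_multiplier(63):.2f}x the baseline time")
```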

The robots also developed "emergent behaviors" that would be extremely difficult to program, such as pivoting on the corner of their foot and spinning around, they added.

The findings show that this AI training technique can be used more generally to create simple but safe motions in humanoid robots, which could then lead to more sophisticated movements in changing, complex situations, the scientists said.