Of all the things I expected to read in my morning feed of tech news, a report from the US White House stating that tech companies and governments need to stop using certain programming languages to combat cybercrime wasn't top of my list. But that's exactly what has happened and the document in question, Back to the Building Blocks, lays out the changes required and the reasons behind them.

The first thing that needs to go, according to the report, is the use of memory-unsafe programming languages to create the applications and codebases on which large-scale critical systems are reliant. Languages such as C and C++ are classed as being memory-unsafe as they have no automatic system to manage the use of memory; instead, it's down to the programmers themselves to prevent problems such as buffer overflows, either by checking the code directly or by using additional applications.
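To make the difference concrete, here's a minimal sketch in Rust, one of the memory-safe languages mentioned in this article. It isn't taken from the report; the names `BUF_LEN` and `copy_into_buffer` are made up for illustration. It shows the classic buffer-overflow scenario, copying input into a fixed-size buffer, where the language's bounds checking turns an out-of-range write into a refusal rather than silent memory corruption.

```rust
// Hypothetical example: BUF_LEN and copy_into_buffer are illustrative names.
const BUF_LEN: usize = 8;

/// Copies `input` into a fixed-size buffer, refusing input that would not fit.
fn copy_into_buffer(input: &[u8]) -> Option<[u8; BUF_LEN]> {
    let mut buf = [0u8; BUF_LEN];
    // `get_mut` returns None for an out-of-range slice instead of
    // handing back memory beyond the end of `buf`.
    let dest = buf.get_mut(..input.len())?;
    dest.copy_from_slice(input);
    Some(buf)
}

fn main() {
    // Fits: 5 bytes into an 8-byte buffer.
    println!("{:?}", copy_into_buffer(b"hello"));
    // Too long: 16 bytes. The write is rejected rather than performed.
    println!("{:?}", copy_into_buffer(b"0123456789abcdef"));
}
```

In C, the equivalent copy with an unchecked length would compile and could quietly overwrite whatever sits next to the buffer, which is exactly the sort of bug programmers have to catch themselves.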

Agencies such as the NSA, CISA, and FBI recommend that the likes of C#, Python, and Rust should be used, as these are all deemed memory-safe. Rewriting every piece of critical software is a monumental task and the report suggests that even just reworking a handful of small libraries will help. At the very least, all new applications should be developed using a memory-safe language.

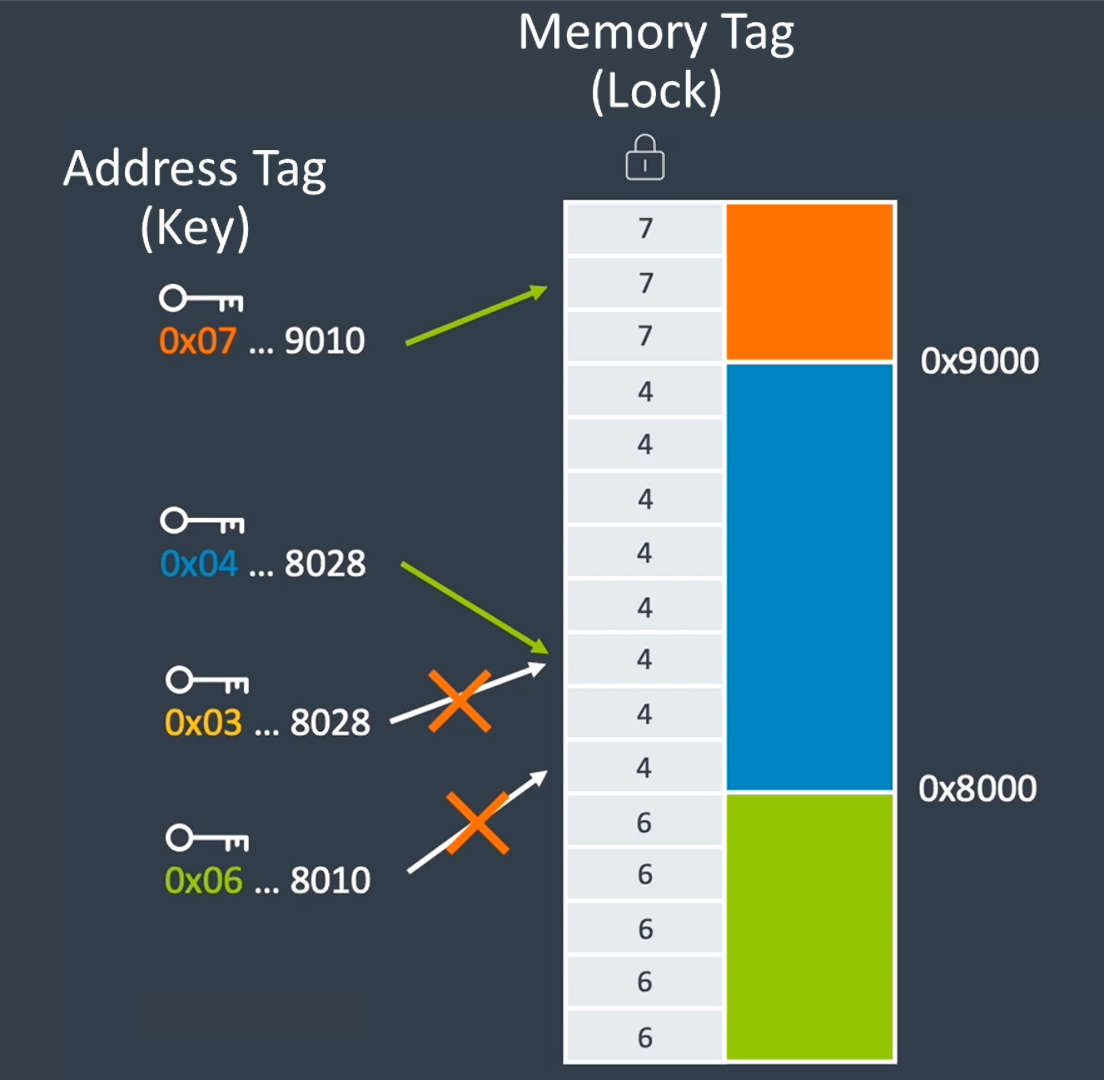

And it's not just about software, as choosing the right hardware matters a lot, too. Pick any one of the latest processors from AMD, Intel, Nvidia, or Qualcomm and you'll see that they're packed with all kinds of features to improve their memory security. One such example is a memory tagging extension, which checks that code only accesses the memory locations it's supposed to. There's a performance impact to using it, of course, but this is true of all such measures.
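As a rough illustration of the idea behind memory tagging, here's a toy software model in Rust. It is only a sketch under my own assumptions, not how real hardware is programmed: the names `TaggedMemory`, `TaggedPtr`, and `GRANULE` are hypothetical, and real implementations do this check in the processor itself. The principle is that each small block of memory gets a tag, each pointer carries a tag, and an access only succeeds when the two match.

```rust
// Toy model of memory tagging. All names here are hypothetical.
const GRANULE: usize = 16; // bytes covered by one tag in this model

struct TaggedMemory {
    data: Vec<u8>,
    tags: Vec<u8>, // one tag per granule
}

#[derive(Clone, Copy)]
struct TaggedPtr {
    addr: usize,
    tag: u8,
}

impl TaggedMemory {
    fn new(size: usize) -> Self {
        TaggedMemory {
            data: vec![0; size],
            tags: vec![0; (size + GRANULE - 1) / GRANULE],
        }
    }

    /// "Allocates" the granules covering [addr, addr + len) by stamping
    /// them with `tag`. The caller must keep the range inside the memory.
    fn allocate(&mut self, addr: usize, len: usize, tag: u8) -> TaggedPtr {
        let first = addr / GRANULE;
        let end = (addr + len + GRANULE - 1) / GRANULE;
        for g in first..end {
            self.tags[g] = tag;
        }
        TaggedPtr { addr, tag }
    }

    /// Loads one byte, but only if the pointer's tag matches the memory's tag.
    fn load(&self, ptr: TaggedPtr, offset: usize) -> Result<u8, String> {
        let addr = ptr.addr + offset;
        let byte = *self.data.get(addr).ok_or("address out of range")?;
        if self.tags[addr / GRANULE] != ptr.tag {
            return Err(format!("tag mismatch at address {}", addr));
        }
        Ok(byte)
    }
}

fn main() {
    let mut mem = TaggedMemory::new(64);
    let a = mem.allocate(0, 16, 0x3);
    let _b = mem.allocate(16, 16, 0x7);
    // Inside allocation `a`: the tags match, so the load succeeds.
    println!("{:?}", mem.load(a, 4));
    // Past the end of `a`, into the neighbouring allocation: tags differ.
    println!("{:?}", mem.load(a, 20));
}
```

The extra lookup and comparison on every access in this model also hints at why such checks come with a performance cost.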

The report goes on to state that developers should rely on so-called formal methods, which are mathematical techniques for designing, writing, and testing code, acting as a reliable means to ensure that applications are as robust as possible.

I noticed there was one area not covered in the report, though, and that's the use of generative AI to create code just from a few input words. Such models have been trained on code examples already in the wild, so to speak, and if a lot of that is memory-unsafe or contains vulnerabilities, then there's a good chance that the AI-generated code will too.

This was a missed opportunity by the US government to highlight the risks of using generative AI in this manner. If it's not properly addressed, we could reach a point where the problem is near impossible to unravel: as the models continue to be trained on existing code, there's an increased chance the training data will be tainted by AI-generated code, with each generation building on the last without the vulnerabilities ever being removed.

A significant challenge that the report points out is how one measures just how cyber-secure an application or codebase is. Even relatively simple pieces of software can easily run into millions of lines of code, using hundreds or thousands of libraries. Checking all of that by hand just isn't feasible, but the task of creating software to do the assessment is equally demanding.

This is especially problematic for open-source software. Various quality metrics can be monitored, and a business can easily set up a system to ensure that this happens regularly and allocate dedicated staff to the role. Open-source projects, by contrast, rely heavily on volunteers to do the same.

The report doesn't provide any solution to this and simply states the research community shouldn't ignore the issue, though I hasten to add that the problem is so complex that no single report could ever hope to address it.

There are a couple of other aspects the Back to the Building Blocks report covers but it ends with an interesting observation: "Software manufacturers are not sufficiently incentivized to devote appropriate resources to secure development practices, and their customers do not demand higher quality software because they do not know how to measure it."

The recommended solution to the first part of that statement is that "cybersecurity quality must also be seen as a business imperative for which the CEO and the board of directors are ultimately accountable." In other words, making software cyber-secure is the responsibility of large companies, not the individual user of said software.

Whether this report gains any traction within the tech industry is anyone's guess at this stage, but it's good to see government bodies taking the matter seriously. Is there anything we could do that would make a difference? Yes, by protesting with our wallets. Don't give your hard-earned money (or personal data) to companies that aren't actively making their products as secure as possible.

Easier said than done, of course, and that's probably true of everything covered in the White House's report.