Between mini-PCs, laptops and handhelds, there are tons of sub-desktop PCs with gaming-capable CPUs which rely on integrated GPUs to push polygons. Wouldn't it be neat to bolt on a discrete GPU and unlock higher settings, higher resolutions and higher frame rates, on a lovely high-refresh monitor? Maybe even chuck a nice ray-traced cherry on top?

If only it were that simple. Sadly, external GPUs are still something of a dark art.

They have a reputation for being lossy in terms of quality and performance. Why is that, and are the losses acceptable or significant? Do the costs make sense? Is it cheaper to scratch-build a PC with a PCIe x16 slot that gets 100% out of a GPU? In that event, should you reserve your laptop for sightseeing and have a second, stay-at-home machine purely for desktop gaming? What kind of ports do you need to plug an eGPU into, and does your target machine even have them? Is it fiddly to set up? Is it reliable? Is there a single best solution available? What's the post-purchase support like? Does that even exist? Aaargh!

With the exception of Razer's Core X eGPU enclosures and a few one-off Asus oddities, none of the big beasts of PC gaming hardware have really made a go of it with eGPUs. Which is hardly surprising when there isn't an established and measurable market in place to mitigate your R&D costs. There are smaller manufacturers with a greater appetite for risk though, and I'm excited by the Cambrian explosion in APU-driven PC design currently happening in the Far East.

Mini-PC makers such as Geekom, Ayaneo, Minisforum, and Beelink are experimenting with new form factors at a crazy rate; barely a week goes by without some beguiling new announcement or launch, and we're seeing eGPU options appearing among designs. There's evolution afoot, which feels disruptive and not a little thrilling.

This article isn't designed to persuade you that an eGPU is some sort of silver bullet, or a golden ticket to a glorious high-frame rate world, or the future of PC gaming. The aim here is to kick the tyres of current eGPU tech, weigh up the benefits, spotlight the issues, look ahead to future solutions, and figure out if an eGPU is something you have a use-case for.

PCI Express delivery

The ability of any GPU to deliver to its full potential is contingent on the interface it uses to connect with your PC. It needs enough bandwidth to send and receive the data it works with unimpeded. But before we examine those interfaces, let's take a snapshot of how PCI Express works, and where an eGPU gets involved in the system.

The PCI Express bus is the data thoroughfare connecting your CPU and system memory with pluggable devices such as SSDs, GPUs and wireless cards. It consists of bi-directional pathways called lanes which pipe data around the system, and per-lane bandwidth doubles with each new generation. PCIe 3.0 had a theoretical maximum of 8 GT/s (giga-transfers per second) per lane, which doubled to 16 GT/s with PCIe 4.0 and 32 GT/s with PCIe 5.0. Gen4 is still the most prevalent version in motherboards; many modern mobos offer PCIe 5.0 support for M.2 drives, but only a few currently have Gen5 GPU slots. Which is fine right now, as PCIe 5.0 graphics cards don't even exist yet. For a deeper dive on the PCIe 5.0 state of play, you'll want to bookmark Nick's excellent exposé for later consumption.

The standard interface for graphics cards is the x16 PCIe slot. In a PCIe 4.0 system, that's 16 lanes, each capable of piping 16 GT/s to and from the PCIe bus. That combined 256 GT/s is a crazy potential amount, more than even the fattest GPUs need to deliver the goods unimpeded; they don't even touch the sides. Put simply, until PCIe 5.0 graphics cards appear, bellowing demands for greater bandwidth, the PCIe 4.0 x16 slot still offers buckets of transfer headroom.
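
To put numbers on that lane arithmetic, here's a minimal Python sketch. It assumes the nominal per-lane rates quoted above and the 128b/130b line encoding used since PCIe 3.0, and it ignores packet and protocol overhead, so treat its GB/s output as a theoretical ceiling rather than real-world throughput. The function names are our own, purely for illustration.

```python
# Nominal per-lane signalling rates in GT/s for each PCIe generation.
PER_LANE_GT_S = {3: 8.0, 4: 16.0, 5: 32.0}

# PCIe 3.0 onwards uses 128b/130b encoding: 128 payload bits per 130 sent.
ENCODING_EFFICIENCY = 128 / 130


def raw_rate_gt_s(gen: int, lanes: int) -> float:
    """Combined signalling rate across all lanes, in GT/s."""
    return PER_LANE_GT_S[gen] * lanes


def usable_gb_s(gen: int, lanes: int) -> float:
    """Approximate payload bandwidth in GB/s, in each direction.

    Each transfer moves one bit per lane, so GT/s ~ Gbit/s before encoding.
    Packet and protocol overheads are ignored, so real throughput is lower.
    """
    return raw_rate_gt_s(gen, lanes) * ENCODING_EFFICIENCY / 8


if __name__ == "__main__":
    for lanes in (16, 8, 4):
        print(f"PCIe 4.0 x{lanes}: {raw_rate_gt_s(4, lanes):.0f} GT/s, "
              f"~{usable_gb_s(4, lanes):.1f} GB/s per direction")
```

On those assumptions, a PCIe 4.0 x16 slot tops out at roughly 31.5 GB/s in each direction, versus roughly 7.9 GB/s for a four-lane link.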

How does all this relate to eGPUs, you might wonder? Well, the current interfaces by which you can externally connect a graphics card to a PC employ just four PCIe lanes. So the question becomes: how is performance impacted when a GPU only gets a quarter of the bandwidth it would get in a regular desktop PC?

Thunderbolt vs. OCuLink

The two principal interfaces currently used by eGPUs are Thunderbolt and OCuLink. Each uses four PCIe lanes, but that doesn't make them equal. Each has its pros and cons.

Thunderbolt was designed by Intel in 2010, and eGPU enclosures really became viable with Thunderbolt 3, which has a maximum 40 GT/s transfer rate across four PCIe lanes; that's the bit we're most interested in. There are some benefits to Thunderbolt 4, the current version, but greater bandwidth than Thunderbolt 3 isn't one of them; it still caps out at 40 GT/s. As far as eGPUs are concerned, then, you can expect broadly the same performance boost regardless of whether you plug into Thunderbolt 3 or 4.

The beauty of Thunderbolt lies in its ubiquity, and the fact it can carry power as well as data, granting hot-swap functionality and powering or charging pluggable doodads. It's widely adopted in laptops and PCs and terminates in a USB Type-C connector. That's not to say all USB-C ports support Thunderbolt though; the system needs specific Thunderbolt hardware controllers to enable the protocol's transfer speeds, as is the case with USB4. The downside is that while Thunderbolt offers a connection to the PCIe bus, the data encoding/decoding process managed by the controllers introduces latency between the device and the bus, and this has an impact on gaming performance.

OCuLink (or Optical Copper Link, to give it its full title) is a different beast. It's been around for 12 years and was designed as a competitor to Thunderbolt, though it's really more of a companion standard to PCI Express. Where Thunderbolt places micro-controllers between device and bus, OCuLink connects directly to the bus and is therefore not subject to Thunderbolt's latency.

The current generation is OCuLink 2, developed to match and interface with PCIe 4.0, so each lane carries 16 GT/s. This means a four-lane OCuLink cable is capable of transferring 64 GT/s, compared to Thunderbolt's 40 GT/s. In short, it's a more direct, higher-bandwidth, lower-latency connection to the PCI Express bus than Thunderbolt.
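
Setting the headline figures for every interface in this test side by side makes the gap easier to see. This sketch simply divides each nominal rate, as quoted in this article, by the PCIe 4.0 x16 baseline. It doesn't model protocol overhead or Thunderbolt's controller latency, so real-world gaps may well be larger than these raw ratios suggest; the names used are illustrative only.

```python
# Nominal aggregate rates in GT/s, as quoted in this article. These are
# headline link figures; protocol overhead and Thunderbolt's controller
# latency are not modelled.
INTERFACES_GT_S = {
    "Thunderbolt 3/4": 40.0,
    "OCuLink (PCIe 4.0 x4)": 64.0,
    "PCIe 4.0 x8": 128.0,
    "PCIe 4.0 x16": 256.0,
}


def share_of_baseline(rates: dict[str, float], baseline: str) -> dict[str, float]:
    """Express each interface's rate as a fraction of the baseline's rate."""
    base = rates[baseline]
    return {name: rate / base for name, rate in rates.items()}


if __name__ == "__main__":
    shares = share_of_baseline(INTERFACES_GT_S, "PCIe 4.0 x16")
    for name, share in shares.items():
        print(f"{name:24} {share:6.1%} of an x16 slot")
    # How much more headline bandwidth OCuLink offers than Thunderbolt
    ratio = INTERFACES_GT_S["OCuLink (PCIe 4.0 x4)"] / INTERFACES_GT_S["Thunderbolt 3/4"]
    print(f"OCuLink / Thunderbolt: {ratio:.2f}x")
```

On paper, that puts OCuLink at a quarter of an x16 slot and Thunderbolt at around 16%, with OCuLink offering 1.6 times Thunderbolt's headline rate.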

The downside of OCuLink is that it was never adopted as an industry standard by PC and laptop manufacturers in the same way as Thunderbolt, so OCuLink ports are as rare as hen's teeth. Unlike USB or Thunderbolt, it doesn't carry power, only data, which massively limits the range of pluggable devices that can use it. It also lacks hot-swap support, so every time you connect a device via OCuLink you'll need a system reboot to use it. So while it's a manifestly better data connection, it isn't as device-friendly, versatile and all-round useful as Thunderbolt. No wonder it's still a rarity as an external I/O port in PCs and laptops. As a result, no manufacturers have taken a punt on developing consumer-friendly OCuLink eGPU enclosures akin to Razer's Thunderbolt-compliant Core X eGPU boxes; with so few OCuLink ports out there, the potential market is just too small.

AliExpress, however, is awash with OCuLink GPU docks, cables and M.2-to-OCuLink converter cards, which can theoretically make any machine with a spare M.2 slot talk to an external GPU. Homebrew communities have adopted these components with some success, but quite often this involves some invasive surgery, namely drilling a hole in your device's case to feed an OCuLink cable out from the M.2 slot to an external GPU dock. It's fiddly, messy, and the results aren't guaranteed. Consumer-friendly OCuLink setups are rare, but we've procured one for this test.

Who's better, who's best?

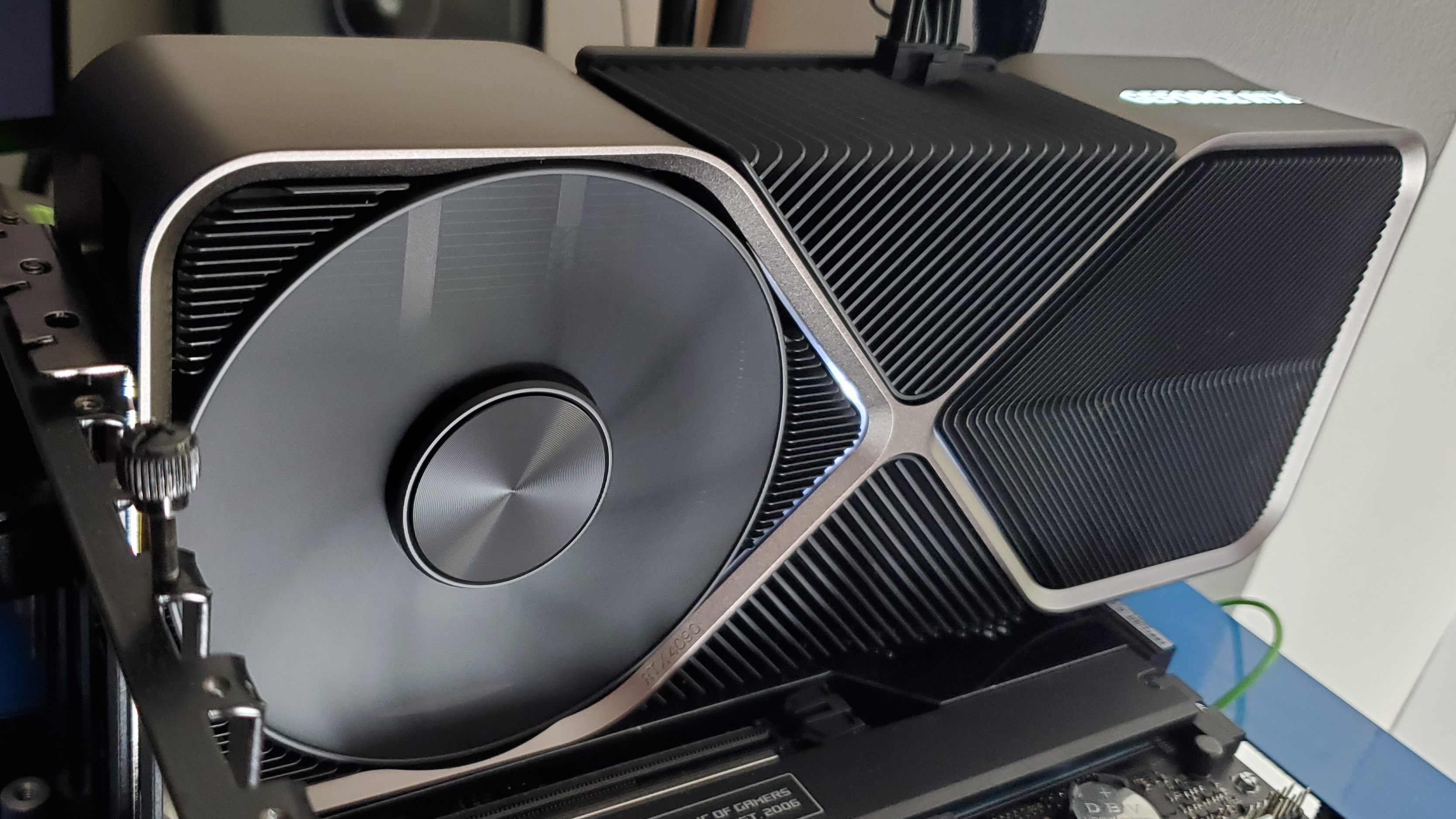

To get a handle on how eGPU performance differs between the two interfaces, and how they both compare with a desktop PC using the same GPU, we assembled four platforms to benchmark a series of games at 1440p and ultra settings. These platforms test two GPUs—the RTX 4070 Ti and the RTX 4090—connected to PCs using Thunderbolt, OCuLink, x8 PCIe and x16 PCIe.

It's worth highlighting that these systems aren't all equal in terms of their other compute components. While Thunderbolt and OCuLink were tested on the same machine, we had to turn to different PCs to test PCIe x8 and x16 connections; there's simply no single machine that lets you test all these interfaces while keeping every other component identical. However, all systems feature modern, performant CPUs and 32 GB of DDR5 RAM, and all use PCI Express 4.0. So, while there's definitely some margin for error, the systems are similar enough to give us a ballpark picture of performance across the interfaces.

eGPU test systems

System 1: eGPU via Thunderbolt

Minisforum AtomMan X7 Ti

CPU: Intel Core Ultra 9 185H

RAM: 32 GB DDR5 5600

GPU: RTX 4070 Ti / RTX 4090 via Razer Core X Chroma over USB4 (Thunderbolt)

Notes: The X7 Ti has a pair of Type-C USB4 ports supporting Thunderbolt devices and speeds, which is what we need to test performance using the Razer Core X Chroma. Happily, it also has an OCuLink port, enabling us to get a direct comparison between the two using the same base machine.

System 2: eGPU via OCuLink

Minisforum AtomMan X7 Ti

CPU: Intel Core Ultra 9 185H

RAM: 32 GB DDR5 5600

GPU: RTX 4070 Ti / RTX 4090 via Minisforum DEG-1 Dock over OCuLink

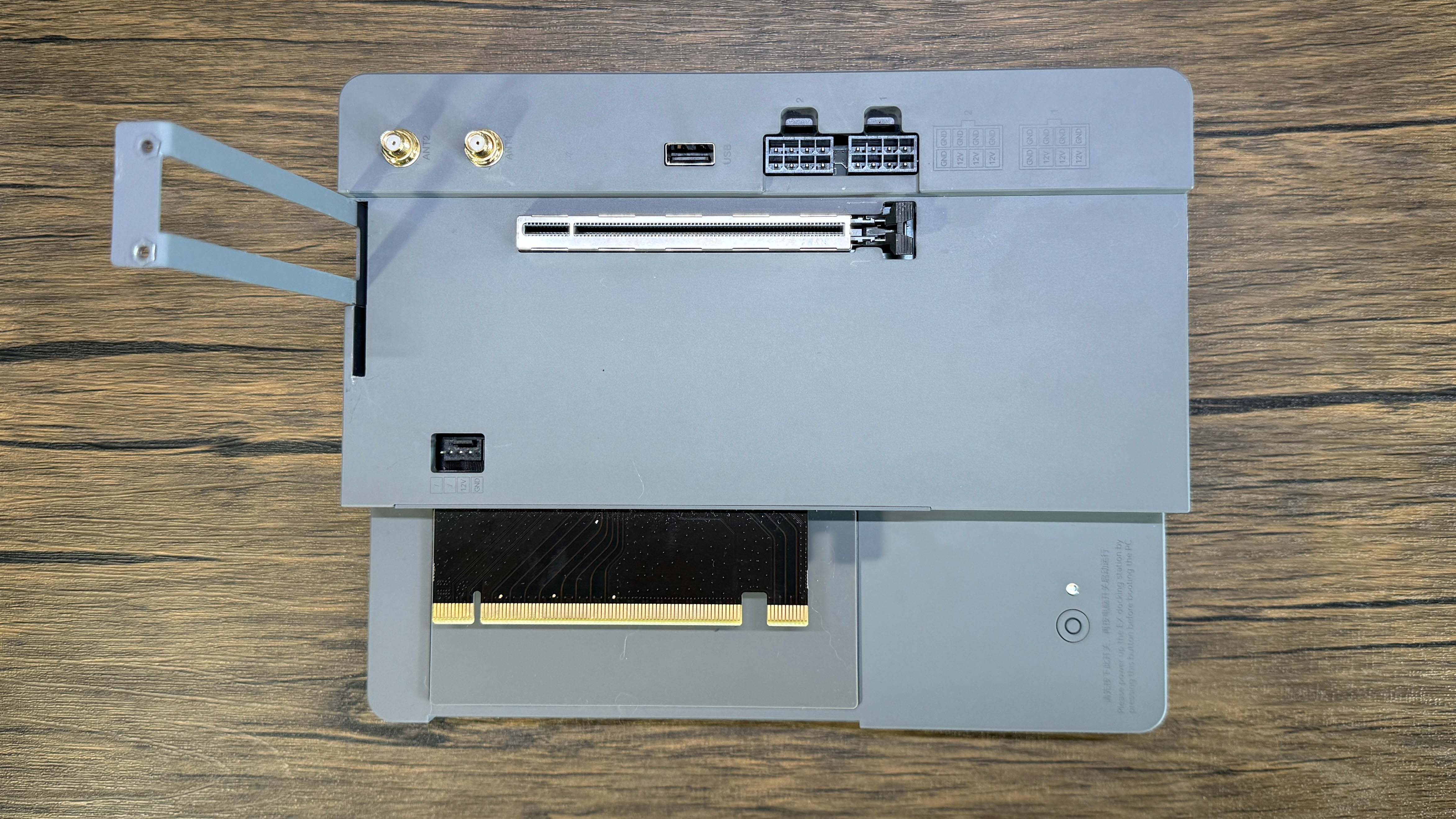

Notes: A number of Minisforum's recent mini-PCs have OCuLink ports, so it makes sense that the company complements these with the DEG-1 OCuLink GPU dock, and it's the tidiest OCuLink setup I've clapped eyes on. However, the GPU and dock both need a PSU to power them; we used Corsair's small form-factor SF650, though the DEG-1 also has mounting points for a standard ATX PSU.

System 3: PCIe x8

Beelink GTi 12

CPU: Intel Core i9-12900H

RAM: 32 GB DDR5 4800

GPU: RTX 4070 Ti via Beelink EX Dock over PCIe x8

Notes: The Beelink GTi 12 features an x8 PCIe interface on the underside and bolts neatly onto the EX dock, which can be bought with it as a bundle. The EX dock has its own internal 600 W PSU, which is ample, but it doesn't support the triple-slot design or the power and cabling requirements of the RTX 4090, so we only tested the dual-slot RTX 4070 Ti in this setup.

System 4: PCIe x16

My desktop PC

CPU: Ryzen 9 7900

RAM: 32 GB DDR5 5600

GPU: RTX 4070 Ti / RTX 4090 via motherboard PCIe x16 slot

Notes: As a desktop system, this setup uses a full-fat x16 PCIe slot, so we'd naturally expect it to outperform the eGPU contenders, all of which are limited to four or eight PCIe lanes. We treat the benchmarks generated on this machine as baselines against which we can judge the performance of the eGPU setups.

RTX 4070 Ti performance

Analysis: RTX 4070 Ti

Given what we know regarding the lower bandwidth and higher latency of Thunderbolt in comparison to OCuLink, it's no surprise to see it coming last in all benchmarks at 1440p. It performs around 25% worse than OCuLink in many scenarios, with lower 1% lows and more jarring frame rate drops. It's prone to much worse stuttering too; generally speaking, it's easy to ignore stuttering frames if they make up 0.5% or less of the total frames generated, but as they climb past 1% and upwards they become more noticeable and irksome. It's abundantly clear there's some bottlenecking at work.
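
The following Python sketch illustrates how metrics like average fps, 1% lows and a stutter percentage can be derived from a frame-time capture; it is not necessarily how the figures in our charts were calculated. It assumes the 1% low is the average frame rate of the slowest 1% of frames (one common definition), and that a frame counts as a stutter when it takes more than twice the median frame time. That threshold is a hypothetical choice, as is the function name.

```python
import statistics


def frame_metrics(frame_times_ms: list[float], stutter_factor: float = 2.0) -> dict[str, float]:
    """Summarise a capture of per-frame render times (in milliseconds).

    Assumed definitions, for illustration only:
    - "1% low" = average fps of the slowest 1% of frames (at least one frame).
    - "stutter" = a frame taking more than stutter_factor x the median frame time.
    """
    n = len(frame_times_ms)
    if n == 0:
        raise ValueError("need at least one frame time")

    # Average fps over the whole capture: frames divided by elapsed seconds.
    avg_fps = 1000.0 * n / sum(frame_times_ms)

    # Slowest 1% of frames, converted from mean frame time back to fps.
    slowest = sorted(frame_times_ms, reverse=True)[: max(1, n // 100)]
    one_percent_low_fps = 1000.0 / statistics.mean(slowest)

    # Percentage of frames that blow past the stutter threshold.
    threshold_ms = stutter_factor * statistics.median(frame_times_ms)
    stutter_pct = 100.0 * sum(t > threshold_ms for t in frame_times_ms) / n

    return {
        "avg_fps": avg_fps,
        "one_percent_low_fps": one_percent_low_fps,
        "stutter_pct": stutter_pct,
    }


if __name__ == "__main__":
    # Made-up capture: mostly smooth 10 ms frames with ten 40 ms hitches.
    capture = [10.0] * 990 + [40.0] * 10
    print(frame_metrics(capture))
```

Fed that made-up capture, it reports roughly 97 fps on average, a 1% low of 25 fps and 1% stutter: a respectable average hiding exactly the kind of hitching described above.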

That's not to say OCuLink is perfect. It enjoys higher frame rates and better 1% lows, placing it midway in performance terms between Thunderbolt and a full PCIe x8 or x16 system using the same card. It's still prone to some stutter though, which we can attribute to bottlenecking, as it only grants these graphics cards four lanes to work with. It's feasible that if you stick a lesser GPU in either a Thunderbolt or OCuLink setup, the lower data demands of that GPU will better match the interface's throughput, reducing bottlenecking and stutter, and below a certain grade of GPU possibly eliminating them.

The surprise result here is just how closely the x8 and x16 setups compare with the RTX 4070 Ti. The x16 system has a small but discernible lead in most games, which we can perhaps attribute to the newer CPU in that system, but neither direct PCIe interface exhibits noteworthy stutter, with the exception of the eight-lane setup's Homeworld 3 effort. I'm certain this is down to its 65W-limited i9 CPU though, as indicated by the in-game benchmark, which noted several instances of CPU binding. All in all, it's fairly safe to conclude that an x8 PCIe 4.0 GPU slot offers all the bandwidth the RTX 4070 Ti needs to deliver in full.

RTX 4090 performance

Analysis: RTX 4090

Oookay, it's time to talk about diminishing returns. We've already concluded that both Thunderbolt and OCuLink, limited to four PCIe lanes, can't get the most out of the RTX 4070 Ti, a problem exacerbated for Thunderbolt by its higher latency and lower bandwidth.

Looking at Cyberpunk, the RTX 4090 in our x16 PCIe slot sees a 45% frame rate uplift over the RTX 4070 Ti with no stutter. For OCuLink this drops to a 22% uplift, and for Thunderbolt, it's a miserly 19%. With a little variance per title, these sorts of percentages are seen across all the games we benchmarked.
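
Those uplift percentages are easier to compare when expressed as a share of what the same upgrade achieves in the x16 slot. This short sketch uses only the Cyberpunk figures quoted above; the function and variable names are our own, and `uplift_pct` is there so you can derive the same kind of figure from your own before-and-after frame rates.

```python
# Cyberpunk RTX 4070 Ti -> RTX 4090 frame rate uplifts quoted above, in percent.
UPLIFT_PCT = {"PCIe x16": 45.0, "OCuLink": 22.0, "Thunderbolt": 19.0}


def uplift_pct(before_fps: float, after_fps: float) -> float:
    """Percentage frame rate gain from upgrading one card to another."""
    return (after_fps / before_fps - 1.0) * 100.0


def share_of_baseline_uplift(uplifts: dict[str, float], baseline: str) -> dict[str, float]:
    """Fraction of the baseline's uplift that each interface actually delivers."""
    base = uplifts[baseline]
    return {name: u / base for name, u in uplifts.items()}


if __name__ == "__main__":
    for name, share in share_of_baseline_uplift(UPLIFT_PCT, "PCIe x16").items():
        print(f"{name:12} keeps {share:.0%} of the x16 uplift")
```

By that measure, OCuLink keeps roughly 49% of the desktop uplift and Thunderbolt roughly 42%, so more than half of what the RTX 4090 could add goes missing over a four-lane link.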

To make matters worse, the RTX 4090's much higher throughput clearly needs more than four lanes to send and receive data unimpeded. This results in some furious stutter, particularly in the Thunderbolt setup. Let's just say you don't want to be playing Total War: Warhammer 3 at 1440p on an RTX 4090 over Thunderbolt… 26-28 fps and 3.5-4% stutter is a downright horrible experience.

The conclusion we can draw from all this is that the bigger and more expensive the GPU, the worse the returns you'll see in terms of performance. Neither OCuLink nor Thunderbolt is worth the outlay for a high-end GPU. The four-lane pipeline can't handle the much larger throughput without clogging up and creating a stutter-fest, so you're just throwing good money after bad.

It's just a shame that our x8 PCIe setup isn't capable of using the RTX 4090. It would have been academically interesting to note whether the difference between eight lanes and 16 lanes made a major difference when handling such a beast of a card.

Mulling it over

It's plain to see that limiting a high-end GPU's pipeline to four PCIe lanes nobbles its ability to deliver in full, as our x8 and x16 comparison results clearly show.

However, less powerful cards in these eGPU setups come closer to their full potential, with less hitching at our chosen resolution and settings. If I were in a situation where I had a laptop or mini-PC as my main or only machine, I might consider an eGPU setup, but I wouldn't shell out much on the GPU. I reckon an RTX 3070 or RTX 4060 feels about right. Much more powerful than that, and you just know you're throwing away sweet frames that you've paid dearly for. I'd aim for midrange at best.

OCuLink saw some reasonable results out of the 4070 Ti at 1440p, though with lower 1% lows and higher stutter than you'd expect from an x8 or x16 desktop machine. Again, you'll get a lower frame rate but likely a smoother result with a lower-end card. And while a Thunderbolt eGPU setup delivers much higher frame rates than an integrated GPU, it falls short of OCuLink's cleaner performance delivery. Once, it was the only eGPU game in town. Nowadays, with PCIe 4.0 cards, I wouldn't touch Thunderbolt with a bargepole. Let's stick it in the cupboard where we keep PS2 mice, the AGP port, and Windows Vista.

This is also worth noting. If you have a laptop with an OCuLink port and are considering something like Minisforum's DEG-1 dock, or an all-in-one eGPU box like the GPD G1, to bolster its gaming performance, you'll also want to output the signal from the GPU directly to an external monitor. If you use the laptop's built-in panel, you're effectively doubling the data burden on that four-lane OCuLink connection, as the signal needs to be piped from the GPU back up the line and over the PCIe bus to the laptop's display. We didn't test this, but the outcome is predictable: higher latency, lower frame rates and 1% lows, and troublesome stutter.
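
To get a feel for how much extra traffic that return trip adds, here's a back-of-the-envelope Python sketch. It assumes uncompressed 4-byte (8-bit RGBA) frames copied back over the link in full, and it reuses the approximate PCIe 4.0 x4 payload rate derived from 64 GT/s with 128b/130b encoding. Real drivers may handle the copy differently, so treat the output as a rough order of magnitude; the names are illustrative only.

```python
def framebuffer_gb_s(width: int, height: int, fps: float, bytes_per_pixel: int = 4) -> float:
    """Bandwidth needed to copy every rendered frame back to the host, in GB/s.

    Assumes uncompressed frames at bytes_per_pixel (4 = 8-bit RGBA) and
    1 GB = 1e9 bytes. Real drivers may compress or otherwise differ.
    """
    return width * height * bytes_per_pixel * fps / 1e9


# Approximate usable PCIe 4.0 x4 bandwidth per direction: 64 GT/s * 128/130 / 8.
OCULINK_GB_S = 64 * 128 / 130 / 8

if __name__ == "__main__":
    for fps in (60, 120, 144):
        need = framebuffer_gb_s(2560, 1440, fps)
        print(f"1440p @ {fps} fps: {need:.2f} GB/s "
              f"(~{need / OCULINK_GB_S:.0%} of one direction of the link)")
```

On those assumptions, 1440p frames work out to roughly 0.9 GB/s at 60 fps and 1.8 GB/s at 120 fps, about 11% and 22% of one direction of the link respectively: traffic that simply wouldn't exist if the GPU drove an external monitor directly.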

A word on costs

As stated, I wouldn't consider a Thunderbolt enclosure as an eGPU solution. It'll run games better than the integrated GPU of an APU, but it's the worst-performing solution in terms of what it can get out of a discrete graphics card. Recent options also start at around the £200/$200 mark, and that's without the GPU or the PSU to power it. So I'm writing it off.

The DEG-1 OCuLink dock we used for this test will set you back £95/$99 and comes with an OCuLink cable. You still need a PSU and a GPU, of course. If you're doing this on a budget and shopping for a second-hand GPU, I think you could set a budget of around four hundred bucks and come out of it with an OCuLink setup running an RTX 4060, for reasonable 1440p performance at medium to high settings.

The Beelink GTi 12 and EX Dock bundle we tested here is an interesting one. It's an eight-lane setup which, as we've seen, is all an RTX 4070 Ti needs to deliver the goods in full, and it's well-specced all round. But this isn't a bolt-on eGPU like a Thunderbolt/OCuLink dock or enclosure; it's basically an off-the-peg desktop PC without a GPU that just doesn't look like one. Even so, it's banging value for money as the basis for a system. Whack in a second-hand RTX 4070 and you're looking at a killer 1440p system for around a grand. Just be mindful that buying the dock on its own won't work as an eGPU solution for anything other than Beelink's GTi-series mini-PCs. The two connect using a full-sized x8 PCIe interface, which you won't find on any other mini-PCs, laptops or handhelds.

Final thoughts

So, TL;DR: if you want some extra grunt for your laptop, mini-PC or handheld, it's hard to recommend a Thunderbolt setup. You'll get better frame rates than your iGPU provides, but it's just too lossy, with a risk of noticeable stutter, and a waste of your GPU's potential. It's possible that the throughput of a low-end GPU will be much less affected by Thunderbolt's latency and four-lane limit, but that kind of defeats the purpose of getting an eGPU in the first place.

OCuLink fares rather better, and might be a consideration if your target machine already features an OCuLink port. You can convert any machine with a spare x4 M.2 slot to run an eGPU over OCuLink, but be prepared to get involved with some custom eGPU communities and to put some elbow grease into what's going to be a fiddly job. I'd only suggest attempting this if you're seriously DIY-minded and relish encountering and solving problems. Plus, the four-lane limit means that throwing a high-end card in an OCuLink setup is a recipe for stutter stew. Aim for midrange at best.

Type-C Thunderbolt 5 and USB4 v2 ports are beginning to make their way into laptops, and that's intriguing, as they double Thunderbolt 4 and USB4's PCIe data throughput to 80 GT/s. And while that's a solid bandwidth figure, 16 GT/s fatter than OCuLink's 64 GT/s, the jury's out on whether they'll suffer the same encode/decode latency issues as the previous generation. There's no knowing until we get them up on the test bench, so, as they say, watch this space.