Picture the scene. You’re walking around an open-world game and finally find a merchant with the item you really need. Now, instead of a boring scripted interaction, the merchant can go into depth about their troubled childhood, or you could bargain with them to get a better deal.

This is one future of gaming being put forward by Nvidia and Ubisoft as they collaborate on a digital humans project dubbed NEO NPCs.

These are non-playable characters built on top of a large language model like the one behind ChatGPT, giving them the ability to reason and hold a conversation.

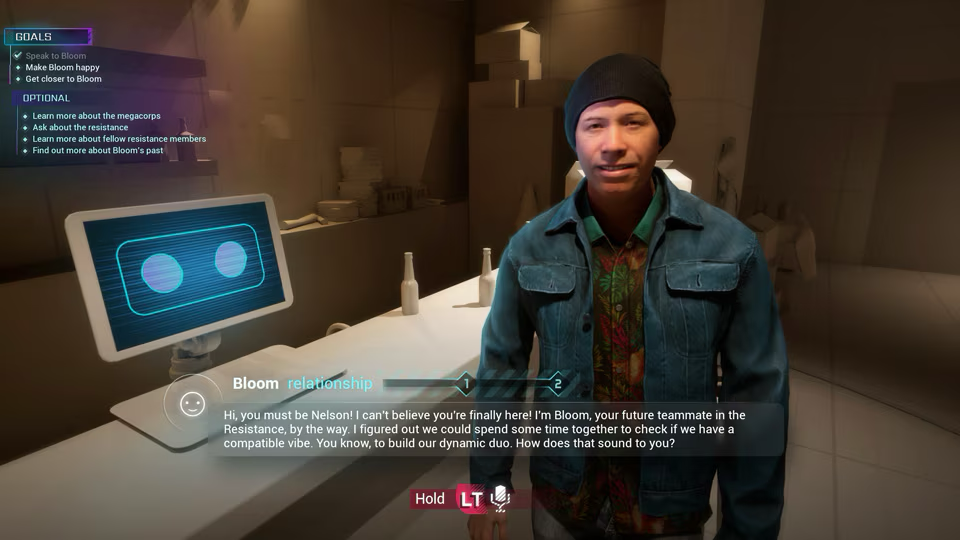

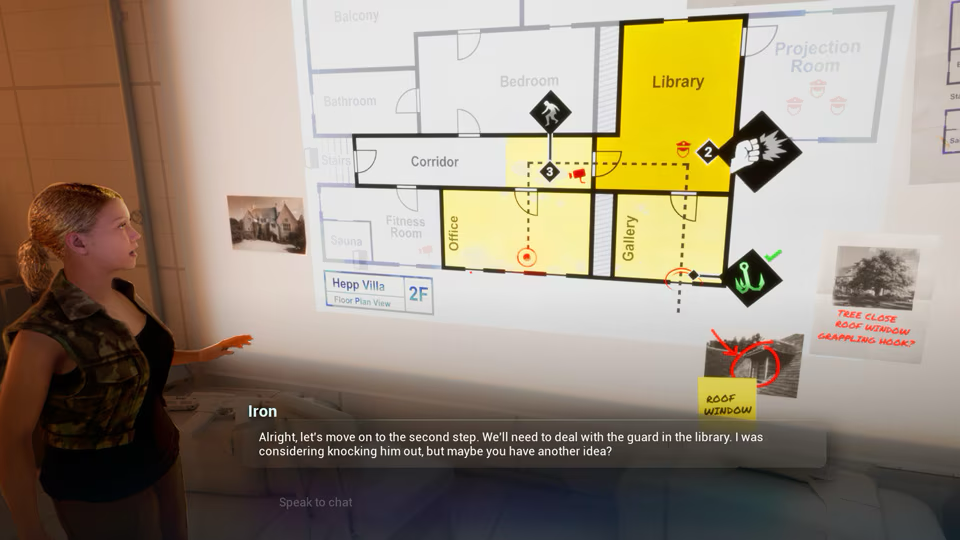

Ubisoft demoed two of these NEO NPCs at GDC. They had an intrinsic knowledge of their surroundings, could respond interactively and even featured facial animation and lip synching.

What are digital humans?

Put simply, digital humans are realistic, though not necessarily human-like, avatars and characters built using a combination of AI technologies.

With the addition of a large language model or other foundation AI techniques they are capable of engaging in realistic conversations and having human-like interactions. If you’ve ever conversed with an AI chatbot — imagine that with a face and voice.

There is no single model for creating these versatile characters. Nvidia’s approach uses a range of technologies, such as the Nvidia Avatar Cloud Engine (ACE) for facial animation and speech, alongside other models that provide lip synching and the large language model “brain”.

It isn’t just gaming, either. Digital humans are being used across healthcare, financial services, retail and even the media to create new forms of interaction between company and consumer.

For example, they have been developed as virtual healthcare agents that can converse with patients, and customer service avatars for brands — putting a digital face on the label.

How are Ubisoft using digital humans?

Artificial intelligence is a growing part of the gaming industry. A recent study by Unity found that 62% of game studios are already using AI in their workflow, with others exploring its use in gameplay. One way this could manifest is by improving the way NPCs operate.

Nvidia has been working in this space for some time and is starting to explore the concept of embodied AI (with virtual agents and robots). On its own, though, that wouldn't add much to NPCs. The missing piece comes from AI startup Inworld, which has a large language model built for bringing virtual characters to life.

This has given rise to Ubisoft’s new NEO NPCs, a form of digital human that lets the Ubisoft narrative team craft a full background, knowledge base and even conversational style unique to every character within a game, including those players rarely interact with.

Two of the first are Bloom and Iron, who use their unique history and character traits to interact with players in new ways. Built into a demo universe, they can talk about the area they’ve been placed in, draw on their background, and analyze the conversation to offer the player insight.

Why is this such a big deal?

While this does open up some impressive new possibilities for gameplay and development, even making it easier to create NPCs since they don’t need individual scripts and conversation trees, it does raise some issues around work for voice actors, writers and other creatives.

There is also the creepiness factor to consider. My colleague Tony Polanco was given a demo of the Avatar Cloud Engine at CES, showcasing a pair of AI-powered digital humans called Jin and Nova. They held a full conversation with both the player and each other.

I could easily find myself standing there listening to them for hours. Or, as Tony wrote back in January: “Not having to suffer through the same boring dialogue sounds great, but it could also become a huge time-sink. Since the conversations are dynamic, it’s possible to spend hours talking to an NPC and not even play the main game.”

I could see a company like Roblox investing in this type of technology, offering it up to companies and brands to provide AI representatives in their virtual world, or to developers wanting to have more interactivity when not many players are online.

When can I play with a digital human?

Aside from the fact you should ask the digital human if you can play with them first, it will likely be a while before truly AI-driven NPCs appear in commercial games. A lot of the heavy lifting will be done in the cloud, but some of it will rely on local AI chips.

We’re seeing rumors of AI chips coming to the PlayStation 5 Pro, and Intel has an entire chip range with a built-in NPU (neural processing unit) powering laptops branded as AI PCs, but developers still need to work on full integration. I think within a year we may be getting the first indie AI NPCs.

Ubisoft is confident it is coming, calling it the future of gaming. Who knows, maybe the next Assassin’s Creed will have fully interactive NPCs.

“Gen AI will enable player experiences that are yet to be imagined, with smarter worlds, nuanced characters, and emergent and adaptive narratives,” said Guillemette Picard, Ubisoft's SVP of Production Technology.