As A.I.-generated fake images go viral, more and more people are urging tech companies to pump the brakes and implement safety measures around the technology.

More than 1,300 people have signed an open letter published yesterday urging A.I. labs to pause, for six months, the training of A.I. systems more powerful than OpenAI’s newly released GPT-4. Signatories included Elon Musk, who cofounded OpenAI and was its largest initial donor; Apple cofounder Steve Wozniak; Stability AI founder and CEO Emad Mostaque; Connor Leahy, the CEO of A.I. lab Conjecture; and the deep learning pioneer and Turing Award winner Yoshua Bengio. There were also a handful of people who said they hailed from Google and Microsoft, which have both rolled out new A.I.-powered search engine assistants in recent weeks (both of which I have been using).

The letter's message was clear: “A.I. systems with human-competitive intelligence can pose profound risks to society and humanity.” It goes so far as to say that advanced A.I. “could represent a profound change in the history of life on Earth, and should be planned for and managed with commensurate care and resources.” Sufficient planning and management are not happening, the signatories argue.

Concerns over responsible A.I. usage have compounded as companies including Microsoft, Meta, Google, Amazon, and Twitter have laid off members of their responsible A.I. teams during recent cuts, as the Financial Times reported. While the affected employees are a small handful relative to the broader cuts happening across the tech industry, the layoffs come at a rather inopportune time.

Generative A.I. tools have been in wide public use ever since OpenAI unleashed ChatGPT into the world last fall. Microsoft and Google have only just released their search engine chatbots (OpenAI-powered Bing and Bard, respectively) to the public, unleashing brand-new, very powerful, consumer-facing technology and sparking substantial criticism over how these bots spin conspiracy theories or spew misinformation.

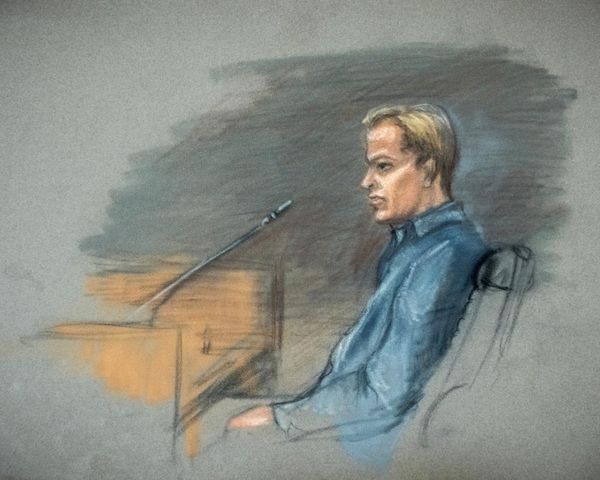

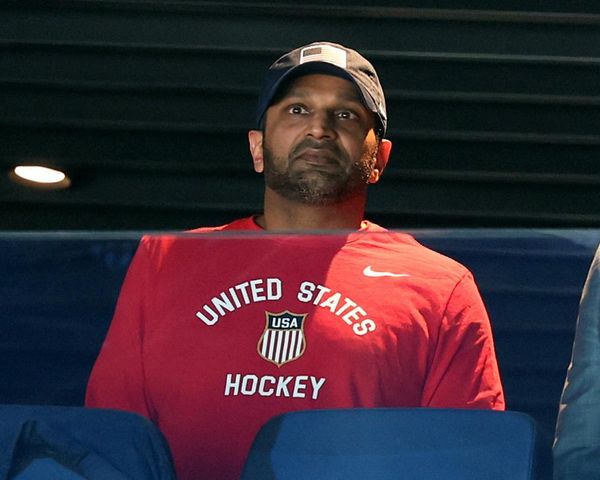

Now, as fake, A.I.-generated images of Trump getting arrested or of the Pope wearing a puffer jacket go viral, they are raising questions about misinformation becoming much more rampant. But critics take things a step further: Should artificial intelligence surpass human intelligence, it could be dangerous for the future of democracy and society. As OpenAI CEO Sam Altman now-infamously said at a venture capital event earlier this year: “I think the worst case is lights-out for all of us.” (No one from OpenAI has signed the letter, although new signatures are still being vetted.)

If signatories have their way, further development of A.I. models will wait another six months while A.I. labs and policymakers jointly develop a set of safety protocols and work to accelerate the development of things like regulatory authorities dedicated to A.I., auditing and certification, and provenance and watermarking systems to “help distinguish real from synthetic and to track model leaks.”

A six-month pause may not be a realistic ask for companies that have poured billions of dollars into their models and are plunging headfirst into improving them. But plenty of people think it’s absolutely necessary.

See you tomorrow,

Jessica Mathews

Twitter: @jessicakmathews

Email: jessica.mathews@fortune.com


Jackson Fordyce curated the deals section of today’s newsletter.