A new artificial intelligence tool lets you generate a virtual world from a simple prompt. Named Holodeck after the recreational and training facility on the Enterprise in Star Trek, it can generate anything from an arcade to a spa, in the style of your choice.

Researchers from several leading universities were involved in the project. It uses multiple AI models and a library of open-source 3D assets to generate the virtual environment.

As well as building virtual worlds from text, the Holodeck technology can be used to help other artificial intelligence tools learn to navigate previously unexplored environments. This is vital as robots, search-and-rescue devices and vehicles become more autonomous.

How does the Holodeck work?

🛸 Announce Holodeck, a promptable system that can generate diverse, customized, and interactive 3D simulated environments ready for Embodied AI 🤖 applications. Website: https://t.co/v7yN1EuAvb Paper: https://t.co/4JlZfmlKrp Code: https://t.co/OmRDLKIZQj #GenerativeAI [1/8] pic.twitter.com/IodCNlNNzN (December 18, 2023)

Holodeck is built on top of a library of pre-labeled open-source 3D assets. When a user enters a text prompt, it uses OpenAI’s GPT-4 “for common sense knowledge about what the scene might look like,” then generates the spatial requirements and the necessary code.

Once the text has been converted, Holodeck draws from the 3D assets to create the world. The examples shown in the preview include the “office of a professor who is a fan of Star Wars” and “an arcade room with a pool table placed in the middle.”
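To make the idea of drawing from a pre-labeled asset library more concrete, here is a minimal Python sketch. It is not Holodeck's code: the asset names, the labels and the word-overlap scoring are all assumptions made up for illustration, and the real system may select assets in a very different way.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Asset:
    name: str
    labels: frozenset


# Hypothetical, hand-written asset library; not Holodeck's real data.
LIBRARY = [
    Asset("pool_table_01", frozenset({"pool", "table", "game"})),
    Asset("arcade_cabinet_03", frozenset({"arcade", "cabinet", "game"})),
    Asset("office_desk_02", frozenset({"office", "desk", "table"})),
    Asset("bookshelf_07", frozenset({"bookshelf", "office", "storage"})),
]


def retrieve(query, library=LIBRARY):
    """Return the asset whose labels share the most words with the query.

    Plain word overlap is an assumption chosen for simplicity.
    Returns None when no label matches at all.
    """
    words = set(query.lower().split())
    scored = [(len(words & asset.labels), asset) for asset in library]
    best_score, best_asset = max(scored, key=lambda pair: pair[0])
    return best_asset if best_score > 0 else None


if __name__ == "__main__":
    for request in ("pool table", "arcade cabinet", "spaceship"):
        print(request, "->", retrieve(request))
```

The point of the sketch is only that labels on each asset let a text request be matched to a 3D model without a human picking it by hand.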

Using GPT-4 solves the problem of positioning objects correctly within an environment. It does so by having the OpenAI model create spatial constraints that are fed back into the code.
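As a rough illustration of how spatial constraints can drive object placement, the Python sketch below searches a small grid for positions that satisfy a hand-written list of constraints. Everything in it is an assumption: the constraint names ("center", "against_wall", "apart_from"), the grid size and the brute-force search are invented for this example, and the constraints are typed in by hand where the real system would get them from GPT-4.

```python
from itertools import product

ROOM_W, ROOM_D = 6, 4  # room size in grid cells (hypothetical)

# Hypothetical constraints, written by hand here in place of LLM output.
CONSTRAINTS = [
    ("pool_table", "center"),
    ("arcade_cabinet", "against_wall"),
    ("arcade_cabinet", "apart_from", "pool_table", 2),
]


def satisfied(constraint, placement):
    """Check one constraint against a {object name: (x, y)} placement."""
    name, kind, *args = constraint
    x, y = placement[name]
    if kind == "center":
        return (x in (ROOM_W // 2 - 1, ROOM_W // 2)
                and y in (ROOM_D // 2 - 1, ROOM_D // 2))
    if kind == "against_wall":
        return x in (0, ROOM_W - 1) or y in (0, ROOM_D - 1)
    if kind == "apart_from":
        other, min_dist = args
        ox, oy = placement[other]
        return abs(x - ox) + abs(y - oy) >= min_dist
    raise ValueError(f"unknown constraint kind: {kind}")


def solve(objects, constraints):
    """Brute-force search: return the first placement meeting every constraint."""
    cells = list(product(range(ROOM_W), range(ROOM_D)))
    for combo in product(cells, repeat=len(objects)):
        if len(set(combo)) < len(combo):
            continue  # two objects would share a cell
        placement = dict(zip(objects, combo))
        if all(satisfied(c, placement) for c in constraints):
            return placement
    return None  # no layout satisfies all constraints


if __name__ == "__main__":
    print(solve(["pool_table", "arcade_cabinet"], CONSTRAINTS))
```

Brute force only works for a handful of objects on a tiny grid. It is used here because it keeps the logic easy to follow, not because it reflects how Holodeck searches for a layout.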

In human evaluations of the model, testers found that Holodeck performed particularly well at creating residential scenes.

What is Embodied AI?

Embodied AI refers to AI systems, such as robots, that perceive and act within the world around them. It requires an understanding of ever-changing information that isn't included in pre-trained datasets.

One of the use cases for Holodeck is in enabling these robots to create a virtual copy of the real-world environment they are in and use that to help navigate from room to room.

Yue Yang, a PhD student at the University of Pennsylvania and lead author on the Holodeck project, explained that “3D simulated environments play a critical role in Embodied AI, but their creation requires expertise and extensive manual effort, restricting their diversity and scope.”

To solve the problem, they created a mechanism that automatically builds these 3D environments from a minimal amount of information. Holodeck can match a user prompt and generate a diverse range of scenes, add objects to the scene and change the style of the environment.

What happens next?

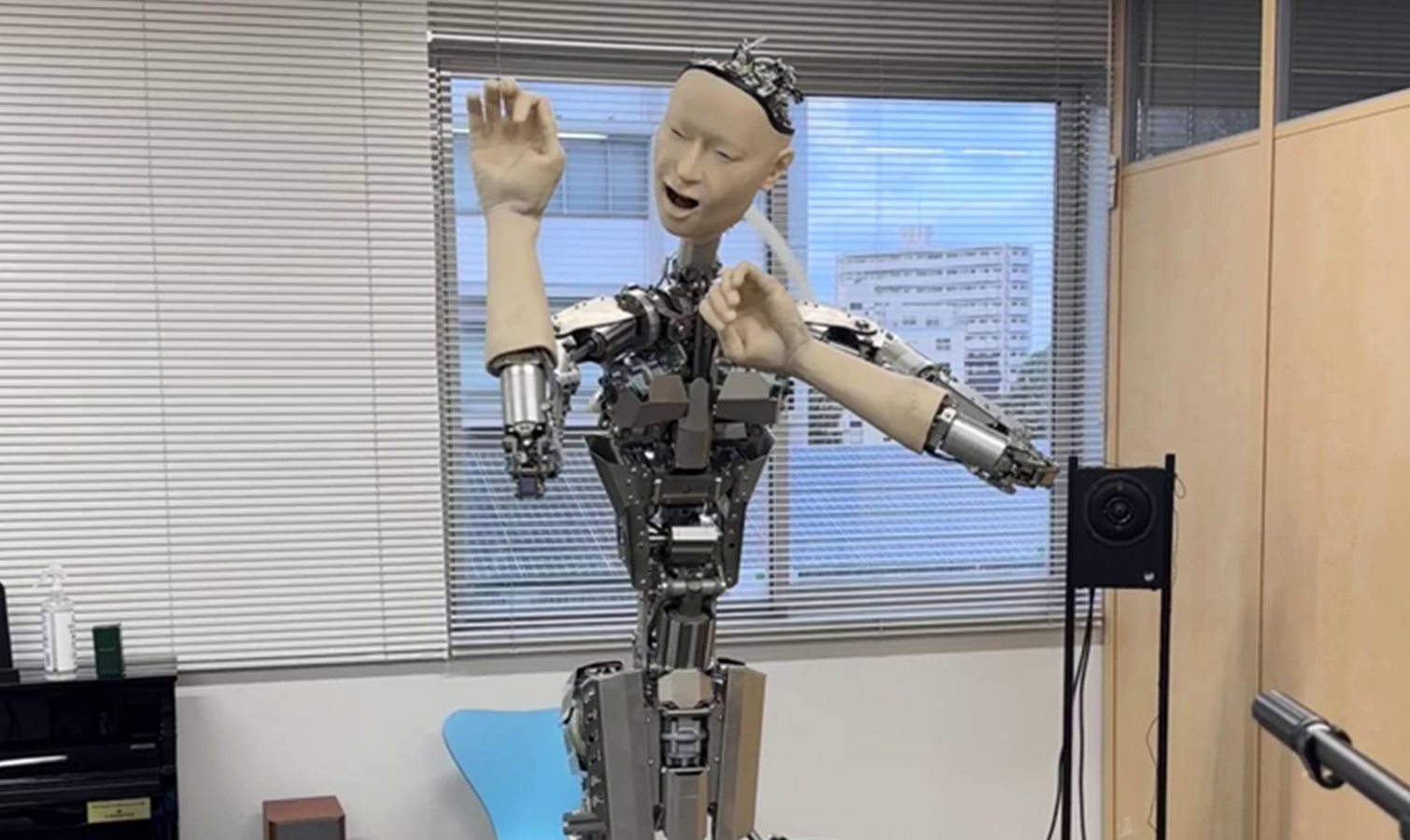

This is one of several research projects exploring ways to link the digital and physical worlds. Last week I wrote about a study that uses GPT-4 to allow humanoid robots to create new movements without having someone hardcode the processes.

We are also seeing leaps forward in the way driverless vehicles can use machine learning and computer vision technologies to navigate previously unmapped regions.

This could be the start of a useful metaverse. Not one where humans clumsily hang out in a virtual office pretending not to notice the clunky headset, but one in which virtual agents act on our behalf in a direct copy of the real world.

Either that, or it could just be the next step in generating a metaverse "on the fly", similar to Minecraft, where a world is created in response to the way you play.