The internet is abuzz with discussions about whether artificial intelligence can become truly sentient after a Google chatbot reportedly declared itself to be just that.

But while the internet may be freaking out about AI sentience right now, Hollywood has been obsessed with the topic for decades, specifically exploring the idea through clashes between robots and humans in movies like I, Robot and The Terminator franchise.

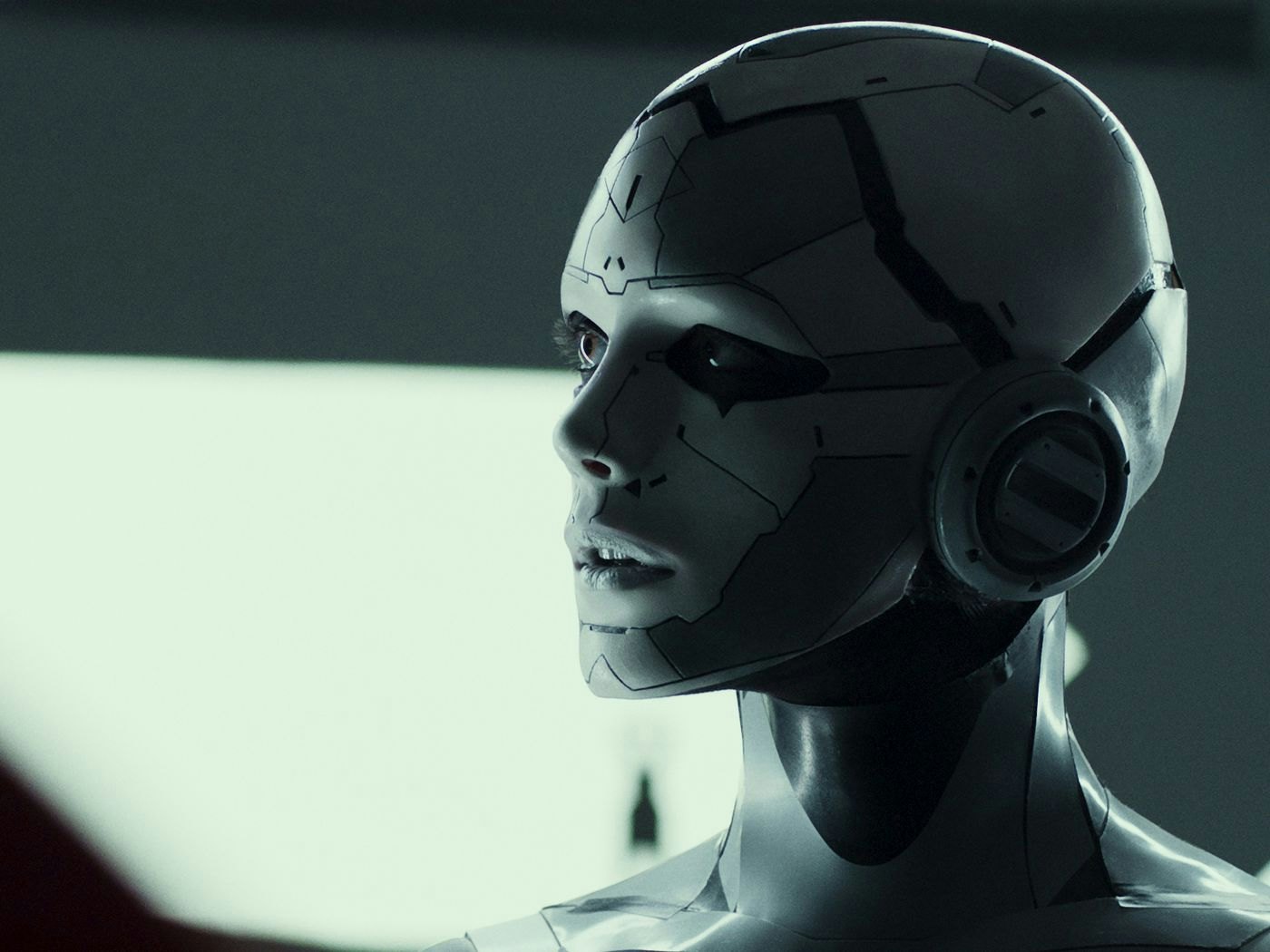

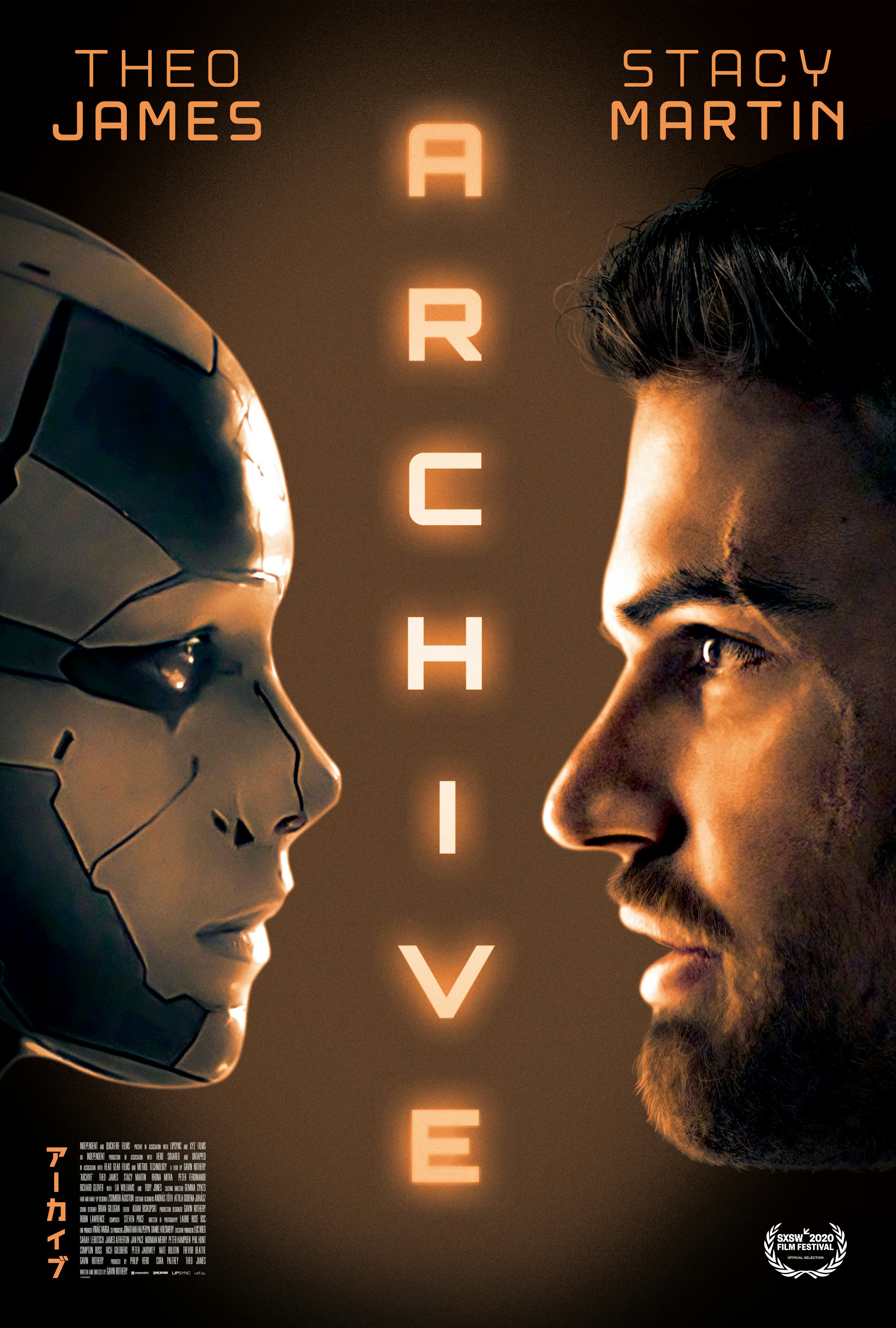

Perhaps the most recent iteration of this controversial AI debate appears in Archive, a 2020 sci-fi movie streaming on Amazon Prime. The movie delves into a range of sci-fi topics, including uploading your consciousness to a digital afterlife, but its central premise teases one particular question: Can we create robots that are truly “equivalent” to human beings?

Inverse spoke with two experts in the field of robotics and artificial intelligence to unpack the heavy science at the heart of this stunningly dark sci-fi movie and ask whether it mischaracterizes AI in its quest for human equivalence.

“There are many different definitions of human-level AI,” Baobao Zhang, an assistant professor of political science at Syracuse University who researches AI governance, tells Inverse.

Reel Science is an Inverse series that reveals the real (and fake) science behind your favorite movies and TV.

Will AI become “equivalent” to humans?

Archive’s protagonist, an isolated scientist named George Almore, has built three different android prototypes, each more advanced than the last, with the goal of creating an AI that is truly “equivalent” to a human being. (An android is a robot that resembles a human being.) He tests his prototypes’ ability to display inherently human qualities, such as empathy, through a video game that involves playing with a puppy.

“You’re all an attempt at the same thing. Deep-tiered learning. Artificial intelligence. Human equivalent to the holy grail,” George tells his third and most advanced prototype, J3.

But if you ask an AI researcher whether humans can build an “equivalent” robot — or perhaps one that surpasses humans — they’ll give you a more complicated answer than the film offers.

“I think it’s important to break down what AI surpassing humans means. We should distinguish between AI surpassing humans at specific tasks versus AI surpassing humans on all or nearly all tasks,” Zhang says.

For example, Zhang’s research focuses more on defining what she calls “human-level” AI in relation to work.

“In our study, we defined ‘human-level machine intelligence’ as when machines can do 90 percent or more tasks better than the median human worker paid to do that task,” Zhang explains.

Sven Nyholm, an assistant professor of philosophy at Utrecht University and author of the book Humans and Robots: Ethics, Agency, and Anthropomorphism, feels similarly to Zhang.

“Well, it would be good to know the answer to this question: equivalent in what way?” Nyholm asks.

Nyholm says it’s plausible that humans could develop AI that mimics human behavior in a “narrow set of situations.” But a robot that functions on a level equivalent to humans in all situations seems much less realistic.

Further, if we’re talking about an AI that matches not only our cognitive intelligence but also our full emotional range and capacity for empathy, that’s probably even less likely, despite what Archive suggests.

“If we have an AI technology that lacks an animal-like or human-like brain or nervous system, it is hard to see how it could feel feelings or have affective states that are similar to ours or those of animals,” Nyholm says.

But there’s another, less obvious definition of “equivalence”: a moral one. Nyholm describes the research of John Danaher, who argues that if a robot behaves in a way equivalent to a human being, it should have the same moral status as human beings. Nyholm is a little less certain.

“But that is, of course, a big ‘if,’ since creating robots that behave in an equivalent way to how humans behave is very hard to do,” Nyholm says.

Can we really compare AI to humans?

In Archive, George uses a lot of vaguely scientific mumbo jumbo to explain how he has built his prototypes, tossing around real terms like “deep learning,” a method of training AI with layered neural networks loosely inspired by the brain. But is any of his movie science rooted in actual AI research? Not really.

Real-life deep learning research does measure AI against human performance, according to Zhang, but that’s about the only thing the movie gets right. It’s in the specifics that Archive leaves the realm of true AI research and enters science fiction.

The movie’s scientist, George, has developed three different versions of androids, each more developmentally advanced than the last. The first prototype stopped mentally developing at five years of age and is emotionally one-note. The second prototype is further developed mentally than the first and expresses basic emotions like jealousy. The third and final prototype is supposed to be "equivalent" to a human, with all the complexities that being human entails. George uses comparable brain scans of humans at different ages to show how far each prototype has advanced.

But real-life experts say that's an overly simplistic comparison between AI and human development.

“The idea that we could map AI development to human development — as in, for example, a certain AI system being equivalent to a five-year-old — is fairly unrealistic,” Nyholm says.

The comparison is unrealistic because AI systems operate very differently from humans. For example, while AI can become very good at specific tasks, like playing the game Go, it’s often very bad at tasks outside its range of expertise.

Zhang agrees. “I think it is hard to map AI development onto human development,” she says.

Do AI researchers care about “equivalence” as much as Hollywood?

For all the attention that Hollywood has devoted to movies examining robots that have matched or surpassed humans, it’s not really as big a concern for researchers working in this space.

“I would not say that is a big concern of discussion among AI researchers. The majority of AI researchers work on much more narrow topics,” Nyholm says, but he adds that researchers occasionally contemplate science fiction scenarios to inspire their work.

Researchers are interested in making sure that AI doesn’t harm people, but not because they’re worried about robots becoming too intelligent, as Hollywood likes to suggest. One example of a real-world harm is racial bias in facial recognition technology, which relies on algorithms trained on data.

“I think it’s really important for ‘narrow’ AI systems deployed today to be safe, fair, and robust,” Zhang says.

Zhang adds that Hollywood movies like Archive tend to focus on plots that anthropomorphize robots, that is, attribute human qualities to them. This can lead the general public to misunderstand how AI actually works in our day-to-day lives, in software applications like chatbots or search engines.

“Most AI systems today are not androids or robots; instead, they are embedded in software applications without a physical representation,” Zhang says.

“I think it probably doesn’t really match up with a lot of real-world AI research. However, it makes for a good and engaging narrative in a science fiction story,” Nyholm adds.

Archive is streaming now on Amazon Prime.