Occasionally a tech story makes its way out of our nerdy little bubble and into the big wide world. Outages, bugs, and cybersecurity incidents happen in the tech industry, but the damage usually isn't large enough to warrant the sustained interest of the general public.

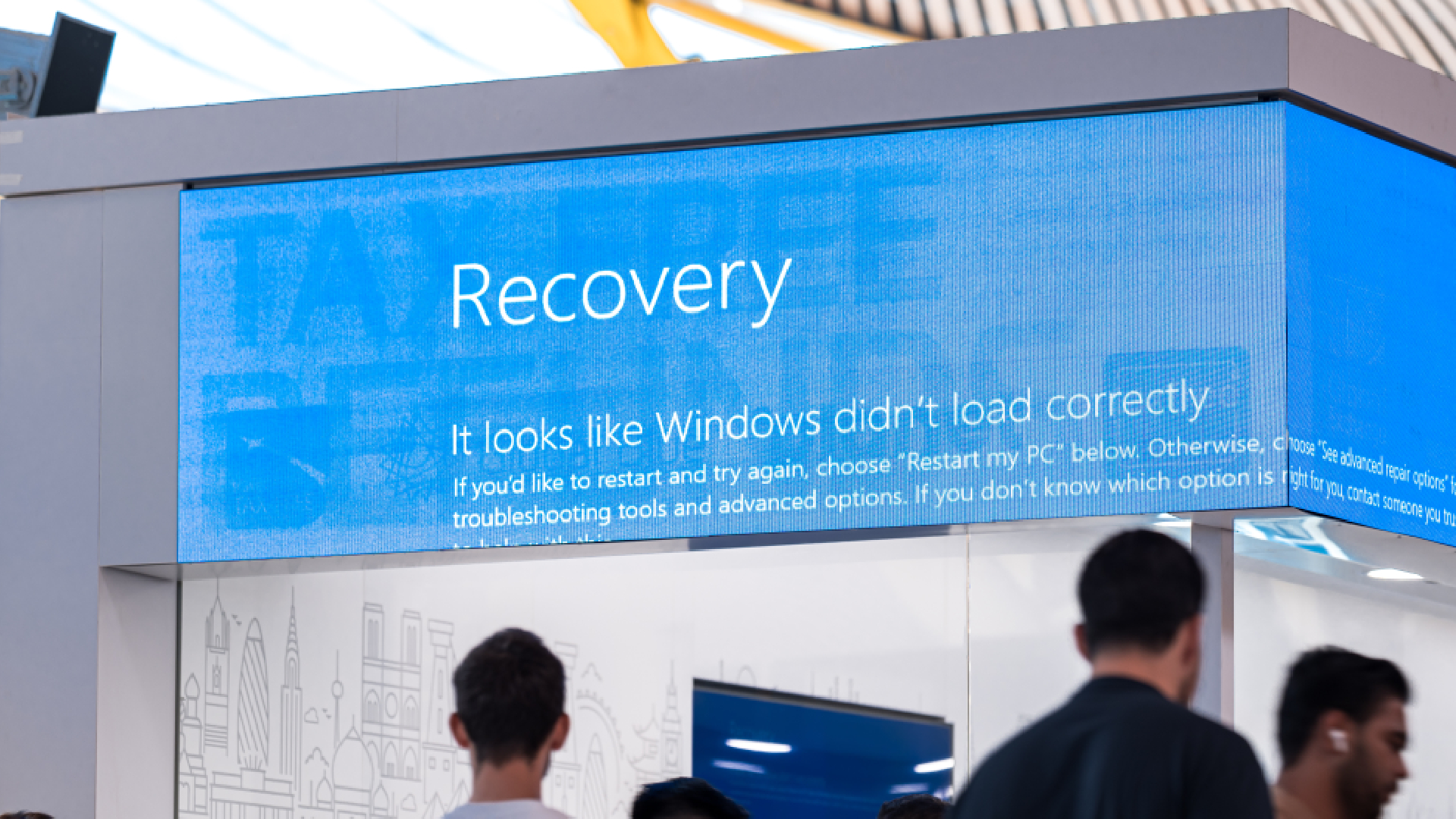

The CrowdStrike outage was different. Health services, flights, and global banking operations were significantly affected. All-too-familiar blue screens of death cropped up on billboards in Times Square. And there were a million headlines, many of which name-dropped Microsoft and borked Windows PCs as the root cause of the issue.

Of course, that's not wrong, to a certain extent. It was indeed Microsoft Windows machines that unceremoniously fell over en masse. However, it was quickly revealed that, while Microsoft certainly had its part to play, another, lesser-known company was the cause of the issue behind the issue: CrowdStrike.

Previously not particularly well known to the general population (at least, not to the same extent as Microsoft), CrowdStrike, a Texas-based cybersecurity firm, found itself embroiled in a global disaster. Now that the dust has settled, more information has emerged about exactly what happened and why.

And while we certainly have more answers, they prompt some troubling questions. What does this sort of outage say about the stability of our ever-connected world? How could such a disastrous bug make its way into so many systems at once? And what's to prevent events like it, perhaps on an even larger scale, happening in the future?

What happened?

On Friday, July 19, at nine minutes past four in the morning, UTC, CrowdStrike pushed a content configuration update for a Windows sensor on systems using its Falcon cybersecurity platform. The update itself seemed innocuous enough. CrowdStrike regularly tweaks its sensor configuration files, referred to by the company as "Channel Files", as they're part of the protection mechanisms that can detect cybersecurity breaches.

This particular update was what the company refers to as a Rapid Response Content update. These updates are delivered as "Template Instances", which are themselves instantiations of a Template Type. Template Instances map to specific behaviours for sensors to observe and detect, and are a crucial part of Falcon's cybersecurity protection capabilities.

Updating them fast enough to keep pace with ongoing cybersecurity developments, and doing so without error, is no easy task. Still, these updates normally go through an extensive testing procedure. At the end of that procedure sits a Content Validator, which performs validation checks on the content before publication.
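A content validator is, at heart, a set of checks that content must pass before it ships. CrowdStrike's technical root cause analysis traced the problem to a mismatch between the 21 input fields a Template Type defined and the 20 input values the sensor code actually supplied. The sketch below is a hypothetical, heavily simplified model of a check that would flag that kind of mismatch; `TemplateInstance`, `validate`, and the criterion values are invented for illustration and are not CrowdStrike's code.

```python
from dataclasses import dataclass, field
from typing import List, Optional


@dataclass
class TemplateInstance:
    """Hypothetical, simplified stand-in for a Rapid Response Template Instance."""
    name: str
    # One matching criterion per input field; None means "wildcard" (match anything).
    criteria: List[Optional[str]] = field(default_factory=list)


def validate(instance: TemplateInstance, inputs_supplied: int) -> List[str]:
    """Return a list of problems; an empty list means the instance passes."""
    problems = []
    for index, criterion in enumerate(instance.criteria):
        # A non-wildcard criterion on a field the sensor never supplies would
        # force the sensor to read past the end of its input array at runtime.
        if criterion is not None and index >= inputs_supplied:
            problems.append(
                f"{instance.name}: field {index} has criterion {criterion!r}, "
                f"but only {inputs_supplied} inputs are supplied"
            )
    return problems


# Usage: 21 fields defined, 20 inputs supplied by the (hypothetical) sensor code.
ok = TemplateInstance("ok", ["pipe-a"] + [None] * 20)   # 21st field is a wildcard
bad = TemplateInstance("bad", [None] * 20 + ["pipe-b"])  # 21st field is not
print(validate(ok, inputs_supplied=20))   # []
print(validate(bad, inputs_supplied=20))  # one problem reported
```

The point of the design is that the check compares the content against what the consuming code will actually provide, rather than only checking that the content is well-formed on its own terms.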


According to CrowdStrike, it was this Content Validator that failed in its duties.

"Due to a bug in the Content Validator, one of the two Template Instances passed validation despite containing problematic content data. Based on the testing performed before the initial deployment of the Template Type (on March 05, 2024), trust in the checks performed in the Content Validator, and previous successful IPC Template Instance deployments, these instances were deployed into production."

For a more detailed breakdown, CrowdStrike has since released an external technical root cause analysis (PDF) of the exact causes. Ultimately, however, its testing system let through an erroneous file, which was then pushed right to the heart of many machines at once.

What happened next was disastrous. When the content in Channel File 291 was received by the sensor on a Windows machine, it caused an out-of-bounds memory read, which in turn triggered an exception. This exception caused a blue screen of death.
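An out-of-bounds read simply means code reading a slot past the end of the data it was given. In kernel-mode native code, nothing stops that read, and a fault there is fatal to the whole machine. Python checks bounds and raises `IndexError` instead, which makes it a convenient, safe way to illustrate the difference between a matcher that trusts its inputs and one that checks them first. Both functions below are hypothetical sketches, not the Falcon sensor's logic.

```python
from typing import Optional, Sequence


def match(criteria: Sequence[Optional[str]], inputs: Sequence[str]) -> bool:
    """Naive matcher: trusts that every criterion has a matching input value."""
    for index, expected in enumerate(criteria):
        if expected is None:  # wildcard: nothing needs to be read
            continue
        # Unchecked read. In kernel-mode native code this would be an
        # out-of-bounds memory read; Python raises IndexError instead.
        if inputs[index] != expected:
            return False
    return True


def match_checked(criteria: Sequence[Optional[str]], inputs: Sequence[str]) -> bool:
    """Defensive matcher: rejects criteria that reference inputs never supplied."""
    extra = criteria[len(inputs):]
    if any(c is not None for c in extra):
        raise ValueError(
            f"{len(criteria)} criteria but only {len(inputs)} inputs supplied"
        )
    return match(criteria[: len(inputs)], inputs)


inputs = ["value"] * 20                 # 20 values supplied
criteria = [None] * 20 + ["pipe-b"]     # 21st criterion is not a wildcard

try:
    match(criteria, inputs)
except IndexError:
    print("naive matcher read past the end of its inputs")

try:
    match_checked(criteria, inputs)
except ValueError as err:
    print("rejected before reading:", err)
```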

Worse still, these machines were then stuck in a boot loop, in which the machine crashes, restarts, and then crashes again. For some, this was a mild irritation and a bad day at the office. For others, however, the stakes were much higher.
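A boot loop can be pictured as a race that the machine keeps losing. In the toy model below, every boot either loads the faulty channel file and crashes, or, with an invented probability `p_fix_first`, picks up a corrected file before the crash and stays up. The parameter and function name are hypothetical; real machines did not follow a fixed probability, but the model shows why a machine with no way to win the race never escapes on its own, and why even a small chance of winning turns recovery into a game of repeated reboots (the number of boots follows a geometric distribution with mean of roughly 1 / p).

```python
import random
from typing import Optional


def reboots_until_recovered(p_fix_first: float, max_boots: int,
                            rng: random.Random) -> Optional[int]:
    """Toy model of the boot loop.

    On every boot the sensor either loads the faulty channel file and the
    machine crashes, or (with hypothetical probability p_fix_first) the
    corrected file arrives first and the machine stays up.
    Returns the boot number that succeeded, or None if every boot crashed.
    """
    for boot in range(1, max_boots + 1):
        if rng.random() < p_fix_first:
            return boot
    return None


# With p = 0.0 the machine never escapes on its own: a pure boot loop.
print(reboots_until_recovered(0.0, max_boots=15, rng=random.Random(1)))  # None

# With a small p, recovery is a matter of luck; the mean is roughly 1 / p.
rng = random.Random(42)
trials = [reboots_until_recovered(0.1, max_boots=1000, rng=rng) for _ in range(10_000)]
successes = [t for t in trials if t is not None]
print(sum(successes) / len(successes))
```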

As Windows systems began crashing around the world, some 911 dispatchers were reduced to working with pen and paper. In Alaska, emergency calls went unanswered for hours, with similar issues affecting multiple emergency services worldwide. Doctors' appointments and medical procedures were cancelled as the systems behind them failed. Some public transport systems ground to a halt. Flights, banks, and media services went down with them.

Microsoft and CrowdStrike pulled the update before it could spread any further, with the latter releasing an update file without the error—but the damage was done, and chaos was well underway.

For a time, quick fixes were suggested. Microsoft's Azure status page advised users to repeatedly reboot affected machines, noting that some of Microsoft's customers had rebooted their systems up to 15 times before the system was able to grab a non-broken update. Other options included booting affected machines into Safe Mode and manually deleting the erroneous update file, or attaching known-working virtual disks to repair VMs.
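CrowdStrike's published workaround for the manual route was to boot into Safe Mode or the Windows Recovery Environment, go to the `C:\Windows\System32\drivers\CrowdStrike` directory, and delete the file matching `C-00000291*.sys`. In practice this was done by hand at a command prompt, machine by machine. The Python sketch below only makes that step concrete; the function name is hypothetical, and it defaults to a dry run that lists matches without deleting anything.

```python
from pathlib import Path
from typing import List

# Filename pattern given in CrowdStrike's published workaround.
CHANNEL_FILE_291 = "C-00000291*.sys"


def remove_channel_file_291(driver_dir: Path, dry_run: bool = True) -> List[Path]:
    """List files in driver_dir matching the pattern; delete them unless dry_run."""
    matches = sorted(driver_dir.glob(CHANNEL_FILE_291))
    for path in matches:
        if not dry_run:
            path.unlink()
    return matches


if __name__ == "__main__":
    target = Path(r"C:\Windows\System32\drivers\CrowdStrike")
    for path in remove_channel_file_291(target, dry_run=True):
        print("would delete:", path)
```

Matching on the `C-00000291` prefix, rather than deleting the whole directory, leaves the sensor's other channel files in place.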

CrowdStrike's CEO issued a public apology. IT workers dug in for the weekend, setting to work fixing boot-looping machines. Eventually, Microsoft released a recovery tool, along with a statement estimating that 8.5 million Windows devices were affected, and that it had deployed hundreds of engineers and experts to restore affected services.

By the time it was over, insurance firm Parametrix estimated that affected firms among the top 500 US companies by revenue (excluding Microsoft) faced around $5.4 billion in financial losses, of which only an estimated $540 million to $1.08 billion was insured.
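Working only from the Parametrix estimates quoted above, the insured share and the uninsured remainder come out as follows:

```latex
\begin{aligned}
\text{insured share} &= \frac{0.54}{5.4} = 10\% \ \text{ to } \ \frac{1.08}{5.4} = 20\% \\
\text{uninsured losses} &\approx 5.4 - 1.08 = 4.32 \ \text{ to } \ 5.4 - 0.54 = 4.86 \quad (\text{USD billion})
\end{aligned}
```

In other words, somewhere between 80 and 90 percent of the estimated losses fell on the companies themselves.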

So what's to prevent such a disaster from happening again? Well, from CrowdStrike's perspective, its testing procedures are under review. The company has pledged to improve its Rapid Response Content testing, and add additional validation checks to "guard against this type of problematic content from being deployed in the future".
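CrowdStrike's post-incident review also committed to a staggered deployment of Rapid Response Content, starting with a small canary group before widening to larger portions of the fleet. A minimal sketch of that idea follows. The ring names, fleet fractions, and crash-rate threshold are invented for illustration, and a real rollout would also need to wait and observe each ring before moving on.

```python
from dataclasses import dataclass
from typing import Callable, List, Tuple


@dataclass
class Ring:
    """One hypothetical deployment stage; fraction is the share of the fleet."""
    name: str
    fraction: float


def staged_rollout(rings: List[Ring],
                   crash_rate: Callable[[Ring], float],
                   max_crash_rate: float) -> Tuple[List[str], bool]:
    """Deploy ring by ring, halting as soon as a ring looks unhealthy.

    Returns the rings that received the update and whether the rollout halted.
    """
    reached: List[str] = []
    for ring in rings:
        reached.append(ring.name)          # update goes out to this ring
        if crash_rate(ring) > max_crash_rate:
            return reached, True           # later, larger rings are spared
    return reached, False


RINGS = [Ring("canary", 0.001), Ring("early", 0.01), Ring("broad", 0.1), Ring("all", 1.0)]

# A faulty update that crashes every machine is stopped at the canary ring.
print(staged_rollout(RINGS, lambda ring: 1.0, max_crash_rate=0.01))
# → (['canary'], True)
print(staged_rollout(RINGS, lambda ring: 0.0, max_crash_rate=0.01))
# → (['canary', 'early', 'broad', 'all'], False)
```

The trade-off is speed: every extra ring delays protection for the rest of the fleet, which is exactly the tension a "Rapid Response" update is trying to avoid.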

But there's a wider problem here, and it's partly to do with The Cloud.

A weak link in the chain

In the vast, interconnected world we currently live in, it's become increasingly impractical for huge service providers such as Microsoft to handle something as vital as cybersecurity, and cybersecurity updates across all of their networks, in-house. This creates a need for third-party providers, and those providers have to be able to update their services, deep within the system, en masse and at speed, to keep up with the latest threats.

However, this is essentially like handing someone the keys to your house to come and check the locks while you're away, and hoping they don't knock over your Ming vase in the process. With the best will in the world, mistakes will be made, and without overseeing things yourself (or in Microsoft's case, itself), responsibility is left to the third party to ensure that nothing is broken in the process.

And if that third party fails, it's you who ultimately takes the blame. Or in this case, Microsoft, at least in terms of public perception. It might have been CrowdStrike's name in the headlines, but right next to it was Microsoft, along with images of blue screens the world over, an image tied so distinctively to the perception of Windows instability that it's become representative of the term 'system error' in many people's minds.

There were a lot of smug Linux folk out there crowing about how happy they were to not be using Microsoft's ubiquitous OS, even though CrowdStrike had bricked a bunch of Linux-based systems just a month earlier. But because of the pervasiveness of the Windows ecosystem, that failure didn't have the widespread institutional damage or media attention that the Microsoft-related error carried.

"How we did this in the old days: When I was on Windows, this was the type of thing that greeted you every morning. Every. Single. Morning. You see, we all had a secondary 'debug' PC, and each night we'd run NTStress on all of them, and all the lab machines. NTStress would…" (David W Plummer, July 20, 2024)

David W Plummer tweeted an interesting comparison between how things work now and how they worked in his days as a Microsoft engineer. Essentially, while Windows builds and drivers themselves still have to pass WHQL (Windows Hardware Quality Labs) testing, and that process is rigorous, a cloud-based system may need to download and act on code or content that hasn't been tested by Microsoft specifically. If that falls over, it can still take the system down with it.
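A toy model of that gap: certification pins a specific driver binary, but content fetched later is never part of what was certified, so it can change the driver's behaviour without changing the driver. Here a SHA-256 digest stands in for certification and the `"malformed"` rule stands in for bad content; both are simplifications for illustration, since real Windows driver signing relies on certificates rather than one hard-coded hash.

```python
import hashlib
from typing import List

# Stand-in for a driver binary that passed certification; its digest is pinned.
CERTIFIED_DRIVER = b"driver-binary-v1"
CERTIFIED_DIGEST = hashlib.sha256(CERTIFIED_DRIVER).hexdigest()


def run_driver(binary: bytes, content: List[str]) -> str:
    """Toy model: certification covers the binary, not content fetched later."""
    if hashlib.sha256(binary).hexdigest() != CERTIFIED_DIGEST:
        return "refused: driver binary changed since certification"
    # Content updates arrive at runtime and are never part of the pinned digest,
    # so their effect depends entirely on the vendor's own checks.
    for rule in content:
        if rule == "malformed":
            return "crash"
    return "running"


print(run_driver(CERTIFIED_DRIVER, ["rule-a"]))      # running
print(run_driver(CERTIFIED_DRIVER, ["malformed"]))   # crash
print(run_driver(b"driver-binary-v2", []))           # refused: ...
```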

Interconnectivity and the butterfly effect

And then there's the problem of interconnectivity as a whole. Many vital systems are now so wholly dependent on cloud providers and online updates that, despite staging and rigorous testing procedures, a small mistake can magnify itself quickly. In this case, so quickly that it was able to take down millions of machines in one fell swoop, and many of those machines were crucial for maintaining a vast network of others.
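The way one failure magnifies itself can be pictured as a walk over a dependency graph: once a component goes down, everything that relies on it is at risk, and then everything that relies on those. The sketch below makes the pessimistic assumption that any failed dependency takes its dependents down too; the system names are hypothetical, and real dependencies are rarely this all-or-nothing.

```python
from collections import deque
from typing import Dict, List, Set


def cascade(dependents: Dict[str, List[str]], first_failure: str) -> Set[str]:
    """Breadth-first walk of everything downstream of first_failure.

    Pessimistic assumption: a system fails if anything it depends on fails.
    """
    failed = {first_failure}
    queue = deque([first_failure])
    while queue:
        system = queue.popleft()
        for downstream in dependents.get(system, []):
            if downstream not in failed:
                failed.add(downstream)
                queue.append(downstream)
    return failed


# Hypothetical map of "X is relied on by [Y, Z]".
DEPENDENTS = {
    "endpoint-agent": ["booking-servers", "check-in-kiosks"],
    "booking-servers": ["airline-app", "gate-displays"],
    "payroll": ["payslips"],
}

print(sorted(cascade(DEPENDENTS, "endpoint-agent")))
# → ['airline-app', 'booking-servers', 'check-in-kiosks', 'endpoint-agent', 'gate-displays']
```

A component near the root of the graph, like a security agent installed on every machine, has the largest possible blast radius.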

Not only that, but in a world of increasing cyberattacks and more and more third party providers attempting to defeat them, speed is of the essence. In the ongoing game of cat and mouse between cybercrime and cybersecurity providers, those who snooze will inevitably lose. It's telling that, at the heart of the issue here, was a "Rapid Response" update.

As Muttukrishnan Rajarajan, Professor of Security Engineering and Director of the Institute for Cyber Security at City University London, puts it:

"As cyber threats are evolving at a rapid pace these companies are also under a lot of pressure to upgrade their systems. However, they have limited resources to scale at the level they need to manage such upgrades carefully as there are a lot of interdependencies in the supply chain.

"This is a classic example of the cascading impact a simple upgrade can cause to multiple business sectors and in this case some critical infrastructure providers."

While this issue was caused by CrowdStrike and affected Microsoft machines, there's nothing to say that this sort of systemic failure couldn't affect any other large cloud tech provider, especially as Microsoft is far from the only company relying on a small group of providers like CrowdStrike to supplement its cybersecurity needs.

In a digital monoculture, single vulnerabilities across an interconnected set of systems can create a butterfly effect that ripples through infrastructure worldwide. Currently, 15 companies worldwide are estimated to account for 62 percent of the market in cybersecurity services. That's a lot of eggs in relatively few baskets.

While the CrowdStrike debacle is now over, and lessons have been learned, the fundamental causes behind it are difficult to fix. The cyber world is vast, inherently interconnected, and moves at an ever-increasing pace. More rigorous testing, better procedures, and more robust release processes may help mitigate the problem, but the underlying model hinges on an interconnected system that will, by its very nature, require a combination of speed and deep system access across vast numbers of machines to function effectively as a whole. One weak link, one small update gone awry, and the results magnify at speed.

Here, that potent combination resulted in a broken update that spread too quickly, to too many systems, and chaos was the result. Move fast, break stuff, goes the adage. In this case, a whole lot got broken.