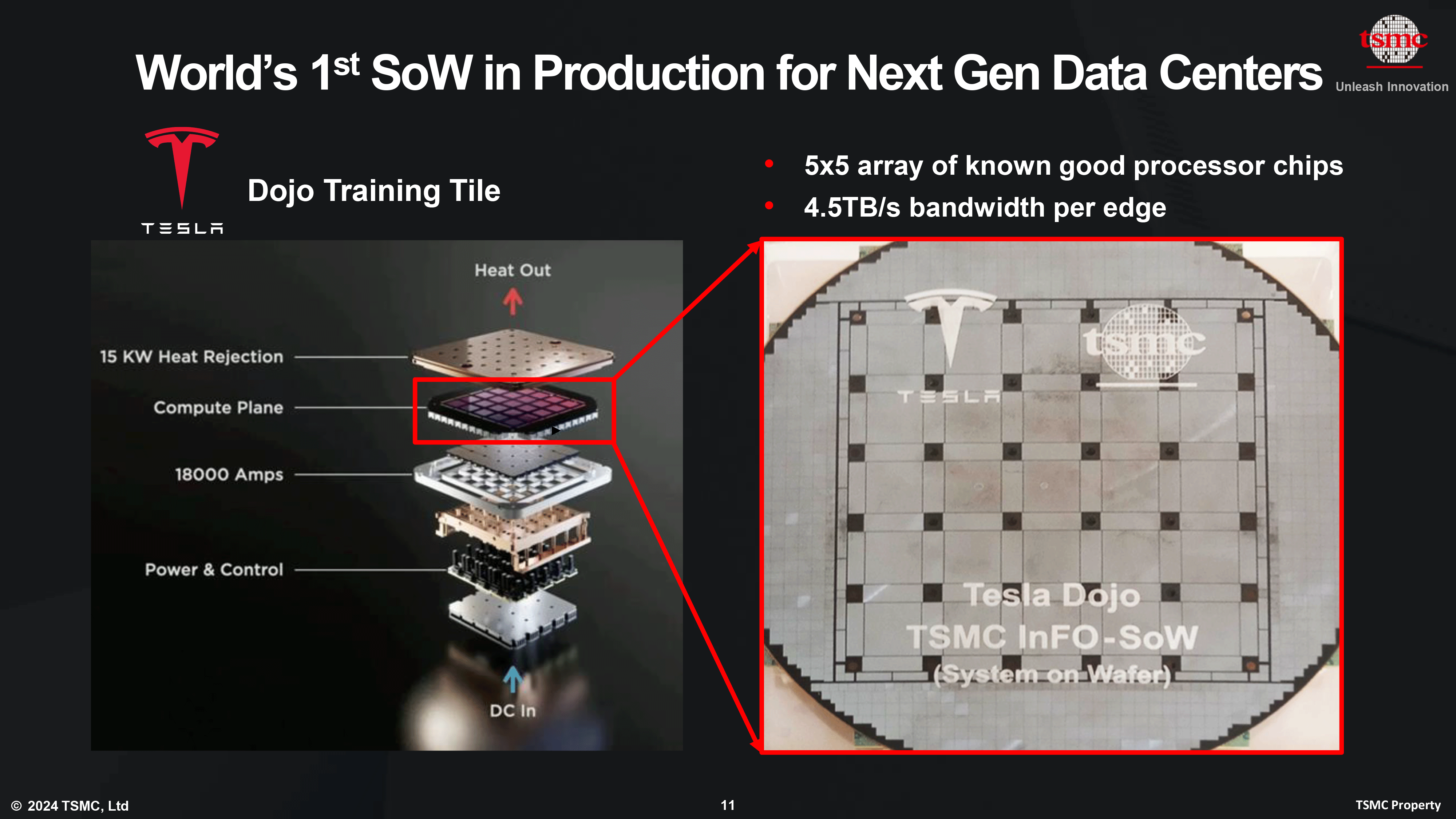

One of the less-noticed tidbits from last week's TSMC North American Technology Symposium was the announcement that Tesla's Dojo system-on-wafer processor for AI training is now in mass production and is on track to be deployed shortly. More details about the giant processor were revealed at the event.

Tesla's Dojo system-on-wafer processor (or, as Tesla calls it, the Dojo Training Tile) relies on a 5-by-5 array of known-good processor dies, each at or close to reticle size, that are placed on a carrier wafer and interconnected using InFO_SoW, the wafer-scale version of TSMC's integrated fan-out (InFO) technology. InFO_SoW is designed to enable such high-performance connectivity that the 25 dies of Tesla's Dojo act like a single processor, reports IEEE Spectrum. Meanwhile, to make the wafer-scale processor uniform, TSMC fills the blank spots between dies with dummy dies.

Since the Tesla Dojo Training Tile essentially packs 25 ultra-high-performance processors, it is exceptionally power hungry and requires a sophisticated cooling system. To feed the system-on-wafer, Tesla uses a highly complex voltage-regulating module that delivers 18,000 amps of current to the compute plane. The compute plane dissipates as much as 15,000W of heat and thus requires liquid cooling.
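To put those figures in perspective, here is a rough back-of-the-envelope sketch in Python. It rests on two assumptions that the article does not confirm: that the 18,000 A and 15,000W figures describe the same operating point, and that load is spread evenly across the 25 dies. The function and variable names are purely illustrative.

```python
# Figures quoted in the article.
CURRENT_A = 18_000  # current delivered to the compute plane, in amps
HEAT_W = 15_000     # peak heat dissipated by the compute plane, in watts
DIES = 25           # 5-by-5 array of processor dies


def implied_voltage(power_w: float, current_a: float) -> float:
    """Average supply voltage implied by P = V * I.

    Assumption: all dissipated heat comes from the delivered current
    and both figures refer to the same operating point.
    """
    return power_w / current_a


def per_die(total: float, dies: int = DIES) -> float:
    """Even split of a tile-level total across all dies (an assumption)."""
    return total / dies


voltage_v = implied_voltage(HEAT_W, CURRENT_A)
power_per_die_w = per_die(HEAT_W)
current_per_die_a = per_die(CURRENT_A)

print(f"Implied supply voltage: {voltage_v:.2f} V")
print(f"Power per die:          {power_per_die_w:.0f} W")
print(f"Current per die:        {current_per_die_a:.0f} A")
```

Under those assumptions, the numbers work out to a sub-1V supply, with each die drawing several hundred amps and dissipating several hundred watts, which helps explain why power delivery and liquid cooling dominate the design.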

Tesla has yet to disclose the performance of its Dojo system-on-wafer — though, considering all the challenges with its development, it seems poised to be a very powerful solution for AI training.

Wafer-scale processors, such as Tesla's Dojo and Cerebras' Wafer Scale Engine (WSE), are considerably more performance-efficient than multi-processor machines. Their main advantages include high-bandwidth, low-latency communication between cores, reduced power delivery network impedance, and superior energy efficiency. Additionally, these processors can benefit from having redundant 'extra' cores or, in the case of Tesla, known-good processor cores.

But there are inherent challenges with such processors for now. System-on-wafer designs currently have to rely exclusively on on-chip memory, which is not flexible and may not be enough for all types of applications. This is meant to be solved by the next-generation system-on-wafer platform called CoW_SoW, which will enable 3D stacking and the installation of HBM4 memory on processor tiles.

For now, only Cerebras and Tesla have system-on-wafer designs. But TSMC is certain that, over time, more developers of AI and HPC processors will build wafer-scale designs.