Imagine finding that someone has taken a picture of you from the internet and superimposed it on a sexually explicit image available online. Or that a video appears showing you having sex with someone you have never met.

Imagine worrying that your children, partner, parents or colleagues might see this and believe it is really you. And that your frantic attempts to take it off social media keep failing, and the fake “you” keeps reappearing and multiplying. Imagine realising that these images could remain online for ever and discovering that no laws exist to prosecute the people who created them.

For many people across the world, this nightmare has already become a reality. A few weeks ago, nonconsensual deepfake pornography claimed the world’s biggest pop star as one of its victims, with the social-media platform X blocking users from searching for the singer after a proliferation of explicit deepfake images.

Yet Taylor Swift is just one of countless women to suffer this humiliating, exploitative and degrading experience.

Last year’s State of Deepfakes report revealed a sixfold increase in deepfake pornography in the year to 2023. Unsurprisingly, women were the victims in 99% of recorded cases.

Technology now allows a 60-second deepfake video to be created from a single clear image in under 25 minutes – at no cost. Every day, more than 100,000 sexually explicit fabricated images and videos, often made from pictures lifted from private social-media accounts, are spread across the web. Referral links to the companies providing these images have increased by 2,408% year on year.

There is no doubt that nonconsensual deepfake pornography has become a growing human rights crisis. But what steps can be taken to stop this burgeoning industry from continuing to steal identities and destroy lives?

Britain is ahead of the US in having criminalised the sharing – but not the creation – of deepfakes, and it has some legislation designed to bring greater accountability to search engines and user-to-user platforms. But the legislation does not go far enough.

And no such protection yet exists in the US, although a bipartisan bill was introduced in the Senate last month that would allow victims to sue those involved in the creation and distribution of such images.

While regulation criminalising the production and distribution of nonconsensual sexual deepfakes is obviously crucial, it would not be enough on its own. The whole system enabling these businesses must be forced to take responsibility.

Experts on images created with artificial intelligence (AI) concur that for the proliferation of sexual deepfakes to be curtailed, social media companies, search engines and the payment companies processing transactions – as well as businesses providing domain names, security and cloud-computing services – must hit the companies making deepfake videos where it hurts: in their wallets.

Sophie Compton is a founder of the #MyImageMyChoice campaign against deepfake imagery and director of Another Body, a 2023 documentary following female students seeking justice after falling victim to nonconsensual deepfake pornography. For her, search engines have a key role in disabling this abuse.

However, according to Prof Hany Farid, a forensics specialist in digital images at the University of California, Berkeley, all of those parties indirectly making money from deepfake abuse of women are unlikely to act. Their “moral bankruptcy” will mean they continue to turn a blind eye to the practice in the name of profits unless forced to do otherwise, he says.

As a gender-equity expert, I can also see that there is something deeper and more systemic at play here.

My research has highlighted that male-dominated AI companies and engineering schools appear to incubate a culture that fosters a profound lack of empathy towards the plight of women online and the devastating impact that sexual deepfakes have on survivors. With this comes scant enthusiasm for fighting the growing nonconsensual sexual image industry.

A recent report revealed that gender discrimination is a growing problem across the hugely male-dominated tech industry, where women make up 28% of tech professionals in the US and hold a mere 15% of engineering jobs.

When I interviewed Compton about her work on the nonconsensual sexual image industry, she talked of witnessing the constant subjugation of women in online forums frequented by engineering students working on AI technology. The women she followed for her documentary spoke of “constant jokes about porn, people spending a lot of time online, on 4chan, and definitely a feeling of looking down on normality and women”.

All of this breeds a sense that because these images are not real, no harm has been done. Yet this could not be further from the truth. We urgently need support services for survivors and effective response systems to block and remove nonconsensual sexual deepfakes.

In the time it has taken you to read this article, hundreds of new nonconsensual images or videos of women will have been uploaded to the internet, potentially tearing lives apart, and nothing is being done to stop it.

Generative AI technologies have the potential to enable the abuse of women at an unprecedented scale and speed. Thousands of women need help now. If governments, regulators and businesses do not act, the scale of the harm inflicted on women across the world will be immense.

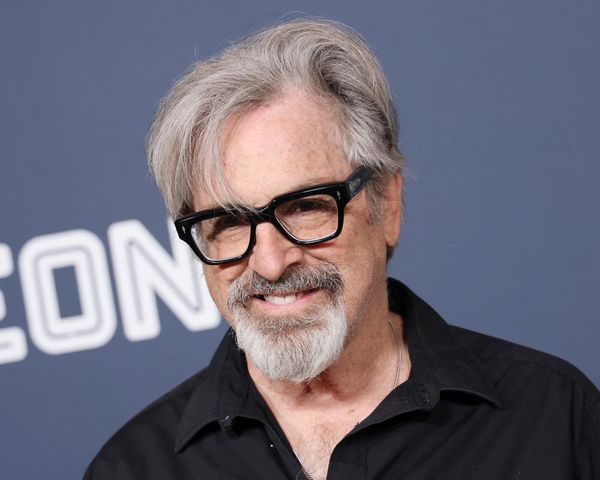

Luba Kassova is a speaker, journalist and consultant on gender equality