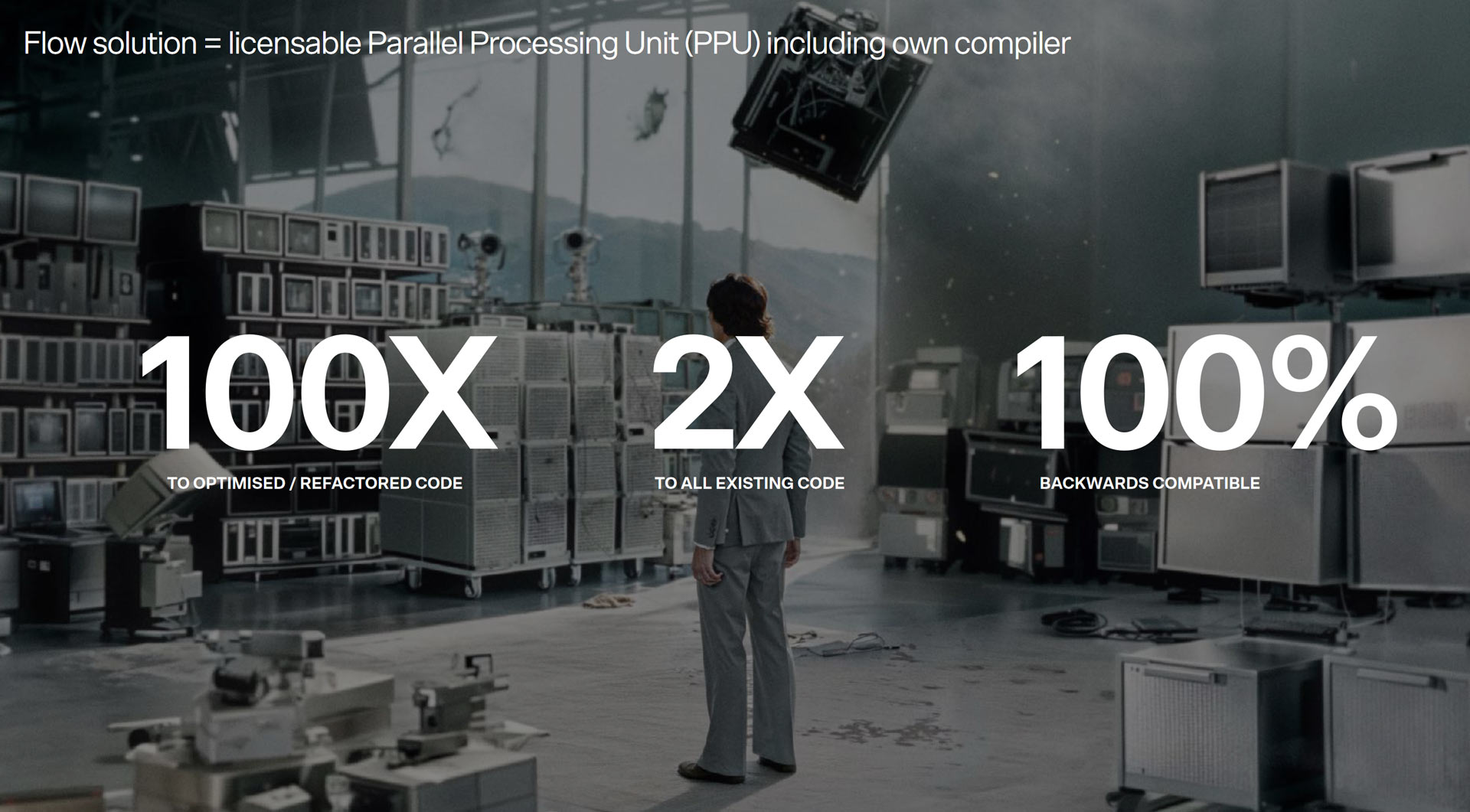

Flow Computing has exited stealth mode with some of the most explosive claims we have seen from an emerging technology company in a long time. A spinout from Finland’s acclaimed VTT Technical Research Center, Flow says its Parallel Processing Unit (PPU) can enable “100X Improved performance for any CPU architecture.” The startup has just secured €4M in pre-seed funding, and its founders and backers are asking the tech world to brace for what they call the era of “CPU 2.0.”

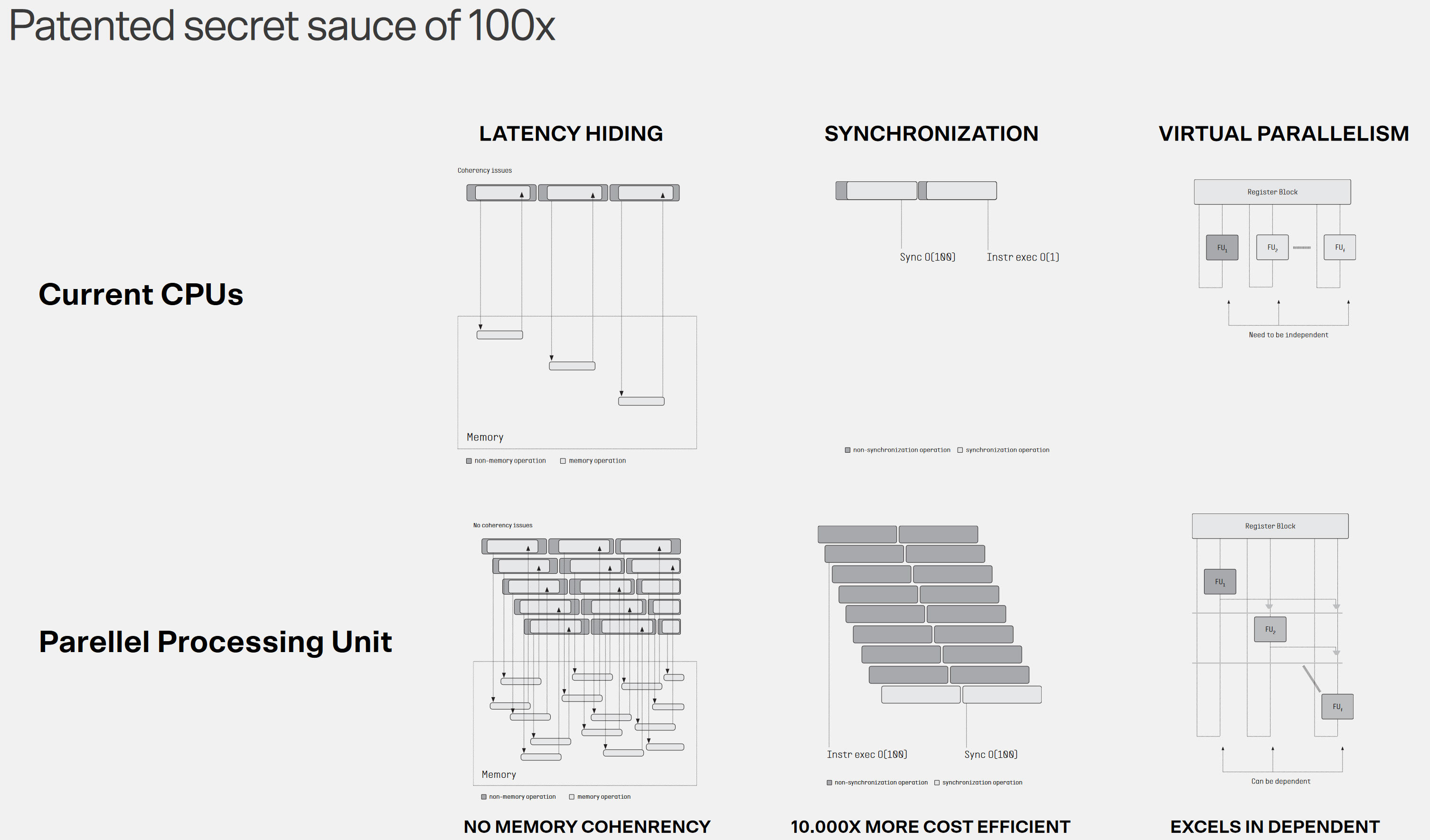

According to Timo Valtonen, co-founder and CEO of Flow Computing, the new PPU was designed to break a decades-long stagnation in CPU performance. Valtonen says this stagnation has made the CPU the weakest link in computing in recent years. “Flow intends to lead the SuperCPU revolution through its radical new Parallel Performance Unit (PPU) architecture, enabling up to 100X the performance of any CPU,” the Flow CEO boldly claimed.

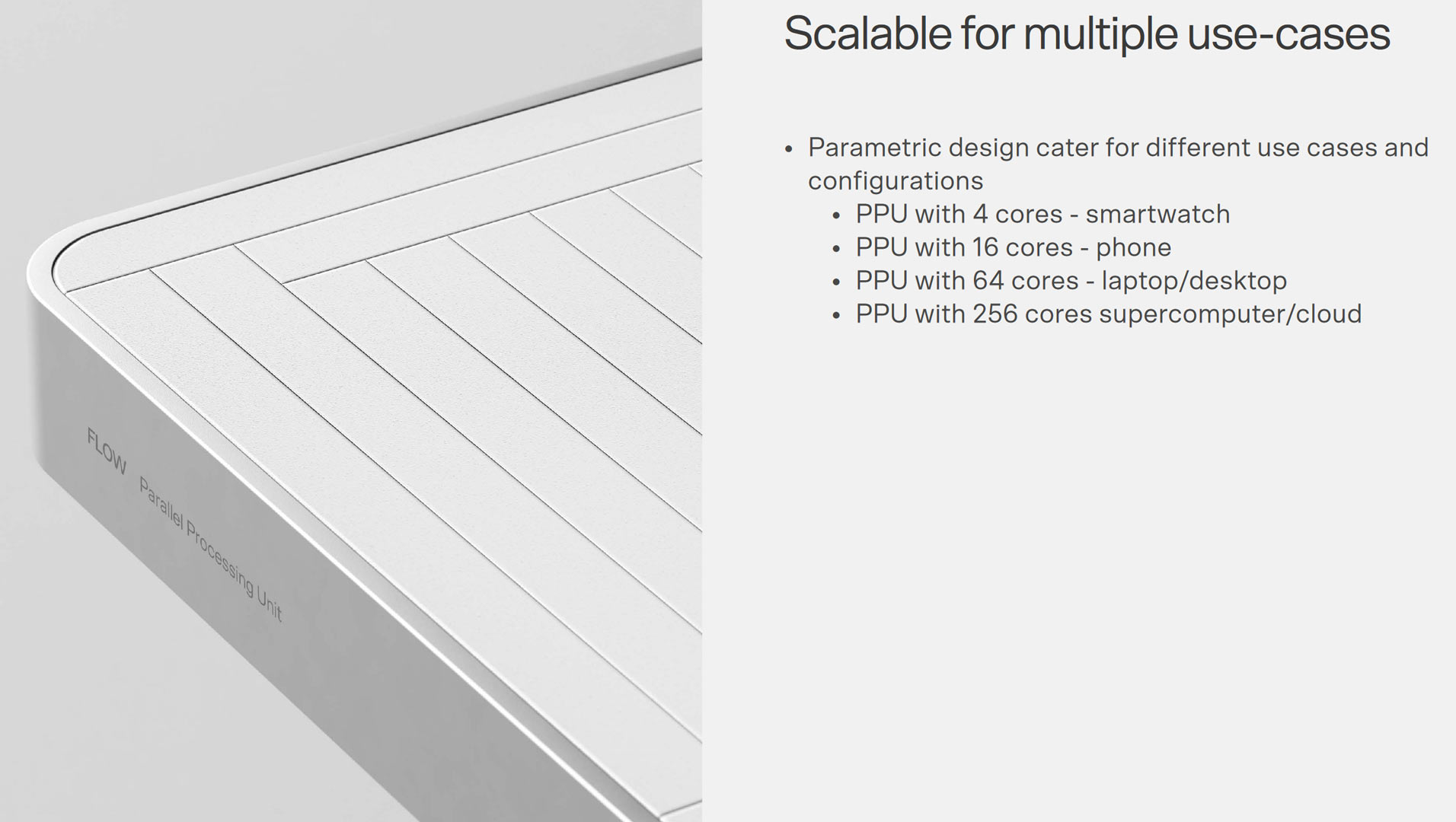

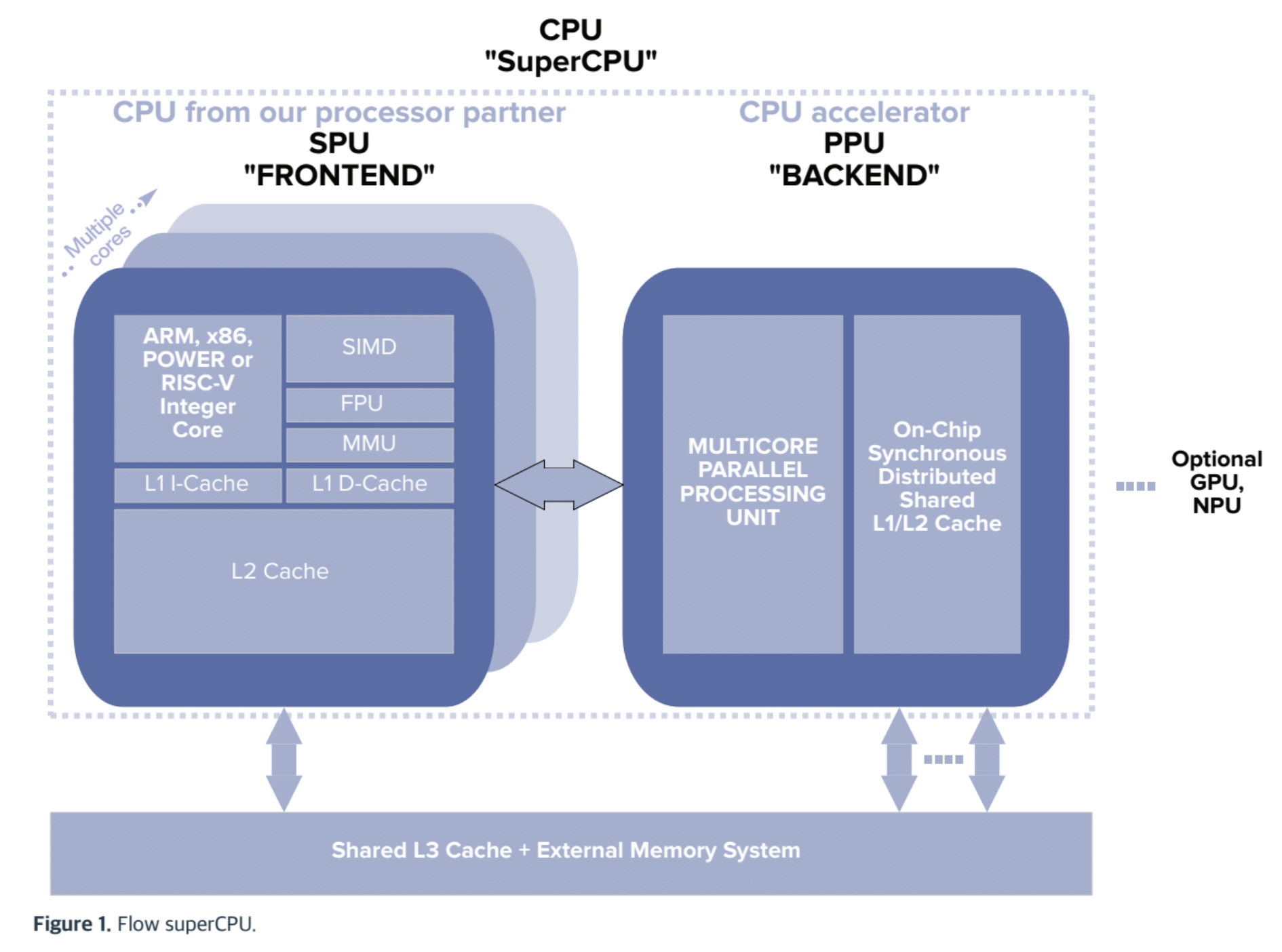

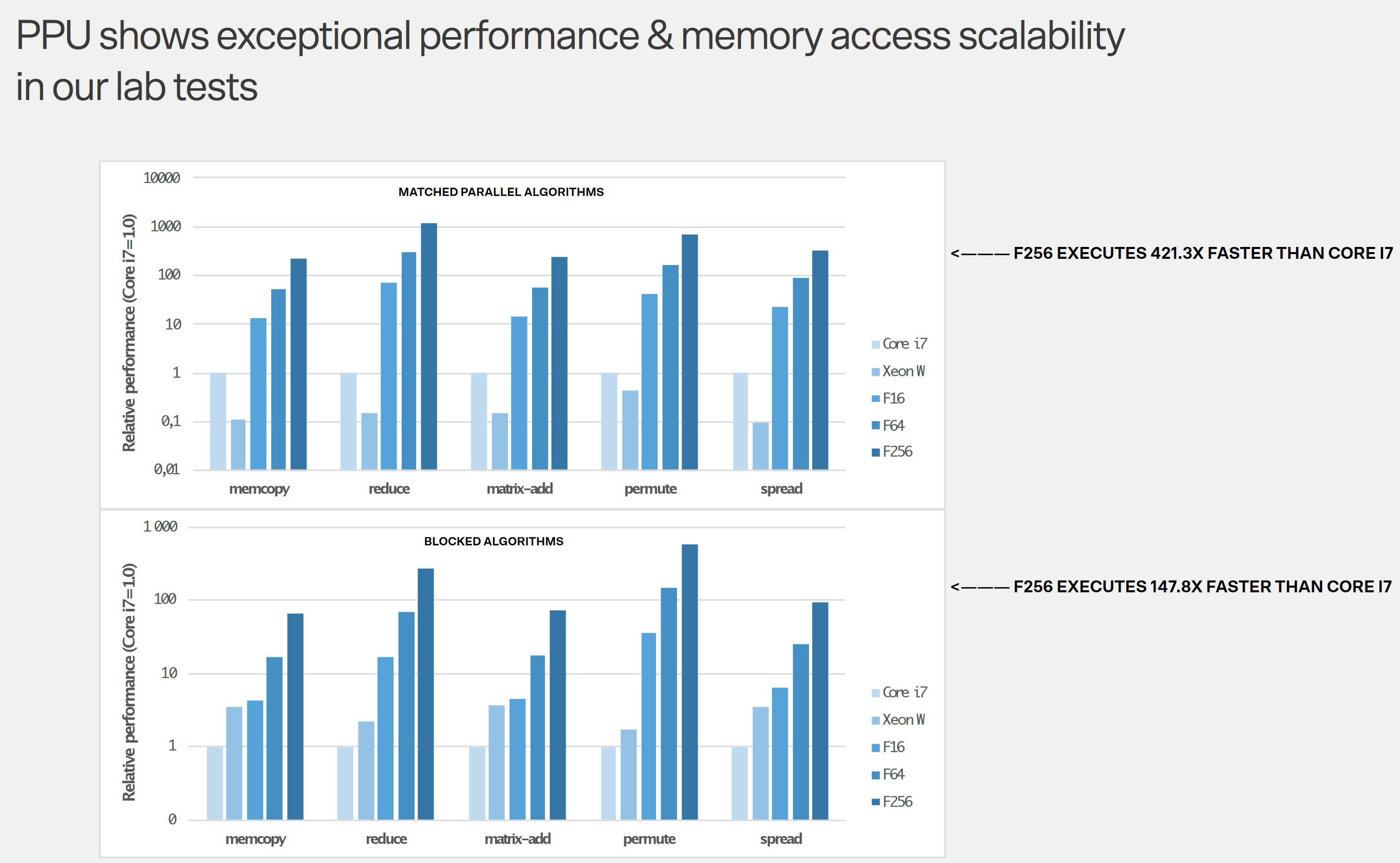

Flow claims its PPU is very broadly compatible with existing CPU architectures: any current von Neumann-architecture CPU can supposedly integrate one. According to a press release we received, Flow has already prepared optimized PPU licenses targeting mobile, PC, and data center processors. Above, you can see a slide showing Flow PPUs configured with between 4 and 256 cores.

Another eyebrow-raising claim is that an integrated PPU can deliver its astonishing 100X performance uplift “regardless of architecture and with full backward software compatibility.” Well-known CPU architectures and designs such as x86, Apple M-Series, Exynos, Arm, and RISC-V are name-checked. Despite the touted broad compatibility, and the claim that the PPU boosts existing parallel functionality in all existing software, the company says recompiling software for the PPU will still bring worthwhile benefits. In fact, recompilation will be necessary to reach the headline 100X performance improvement; existing, unmodified code will run only up to 2x faster.

PC DIYers have traditionally balanced their systems to taste by choosing, and budgeting between, a CPU and a GPU. However, the startup claims Flow “eliminates the need for expensive GPU acceleration of CPU instructions in performant applications.” Meanwhile, any existing co-processors, such as matrix units, vector units, NPUs, or indeed GPUs, will benefit from a “far-more-capable CPU,” asserts the startup.

Flow explains the key differences between its PPU and a modern GPU in a FAQ document. “PPU is optimized for parallel processing, while the GPU is optimized for graphics processing,” contrasts the startup. “PPU is more closely integrated with the CPU, and you could think of it as a kind of a co-processor, whereas the GPU is an independent unit that is much more loosely connected to the CPU.” The FAQ also highlights two further distinctions: the PPU does not require a separate kernel, and its parallelism width is variable.

For now, we are taking the above statements with bucketloads of salt. The claims about 100X performance and the ease and transparency of adding a PPU seem particularly bold. Flow says it will deliver more technical details about the PPU in H2 this year. Hopefully, that will be a deeper dive stuffed with benchmarks and relevant comparisons.

The Helsinki-based startup hasn’t shared a commercialization timetable as yet but seems open to partnerships. It mentions the possibility of working with companies like AMD, Apple, Arm, Intel, Nvidia, Qualcomm, and Tenstorrent. Flow’s PR highlights its preference for an IP licensing model, similar to Arm, where customers embed its PPU for a fee.