Fourteen years after it was originally announced, Stalker 2 is finally here. Having gone through such a protracted development, you can be easily forgiven if you thought that day would never come. I had doubts about its readiness for the November launch myself but to my pleasant surprise, Stalker 2: Heart of Chornobyl (I'm not writing all those periods GSC wants me to) is bang on schedule.

If one ignores the first, aborted attempt at making the game using an in-house engine, the first few years of Stalker 2's development were done using Unreal Engine 4. GSC Game World switched to UE5 a few years ago and fully uses the engine's major rendering features: Nanite and Lumen.

Concerning the latter, there are no fallbacks involving traditional rendering pipelines (aka 'rasterisation'), so all of the lighting, shadows, and reflections are done using ray tracing.

However, unlike most UE5 games that boast GPU-hogging rays, Stalker 2 uses the software mode for Lumen. Your graphics card will still be doing the bulk of the ray tracing but it's done through asynchronous compute shaders and not via any ray tracing hardware inside the GPU.

That's great for making ray tracing more inclusive but, as you'll soon see, it comes at a cost. At the moment, developer GSC Game World is just focusing on optimising the software Lumen pipeline as much as possible, but it may release an option to use hardware Lumen (for AMD Radeon RX 6000/7000-series, Intel Arc, and Nvidia GeForce RTX graphics cards only) at some point in the future.

The same is also true with getting Stalker 2 to work properly on handheld gaming PCs. While it does run on the likes of the Asus ROG Ally, the game doesn't render lights and shadows correctly (and in some areas, it just skips them altogether).

The review code I've tested for this performance analysis has gone through some significant updates since it was sent out but I think it's fair to say that the game needs more work to run on all PCs, even those that are theoretically capable of supporting it.

For example, in the results below, you won't see many figures for an Alchemist Arc graphics card—Stalker 2 would just crash at anything beyond 1440p low quality and I'm currently talking with Intel about this (it's possibly a fault with the card I have).

Since Stalker 2's graphics settings don't alter what the engine is fundamentally doing to render the game, just the level of quality of each aspect, I've split the performance analysis up on the basis of how the game looks and runs when using each of the four presets.

It's important to note that, by default, Stalker 2 uses upscaling for the low, medium, and high presets but I've set them all to just use TAA (temporal anti-aliasing) with a 100% render scale. Performance figures were taken around a tiny base that you come across early in the game, starting inside a building, before moving around outside with a variety of NPCs moving about.

I've covered the tweaks you can make outside of the presets in my Stalker 2 best settings guide, which highlights which features can make the most impact on performance with the least impact on graphical fidelity.

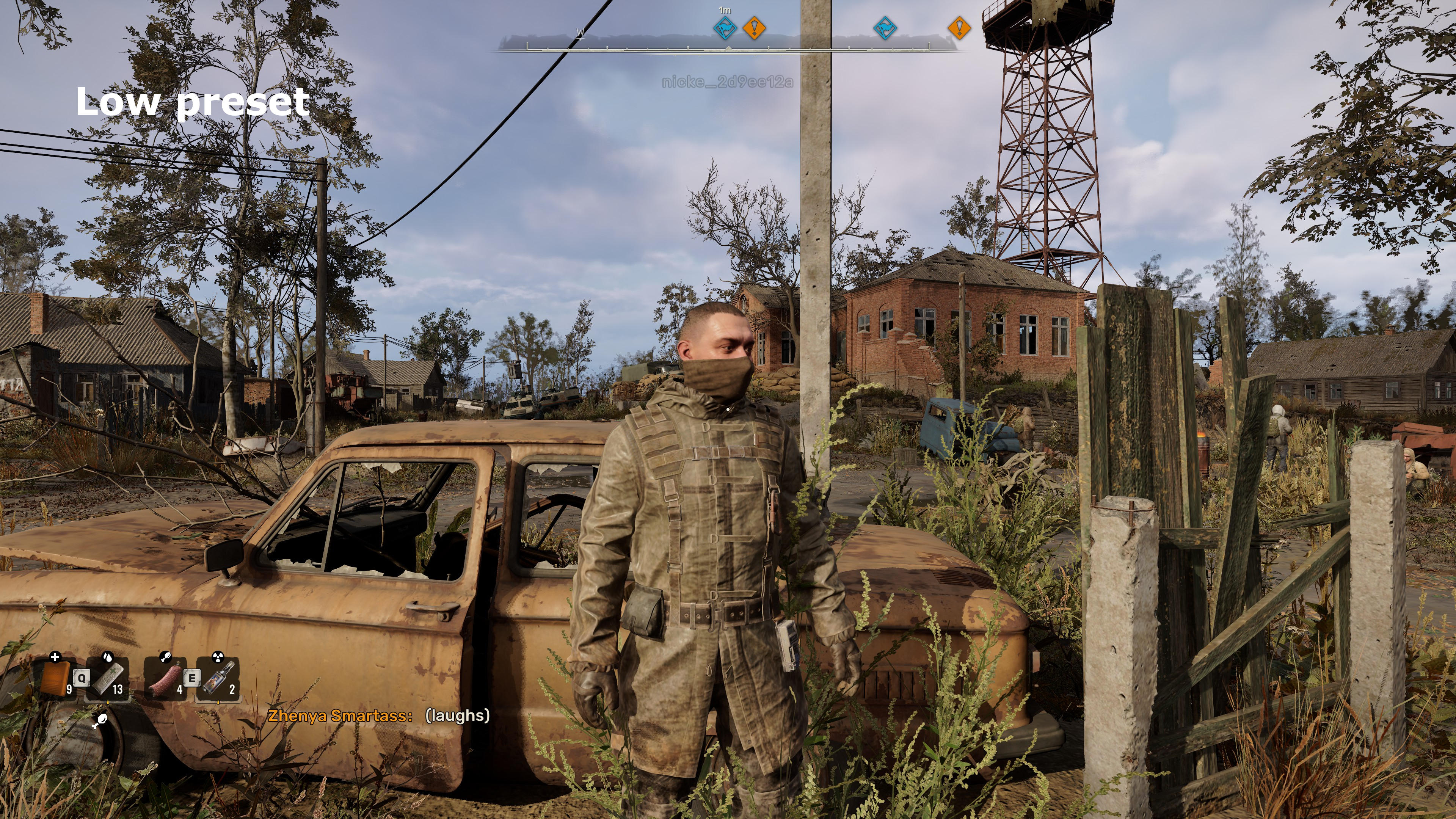

Low preset

The above results immediately highlight one problem with using ray tracing for everything. I'm not talking about the fact that none of the tested PCs run Stalker 2 at a very high frame rate. It's that ray tracing works best when paired with a high frame resolution, as more pixels involve more rays, which produces better quality lighting. However, as one can see by switching through the charts, increasing the resolution significantly affects the performance.

This means you're either going to use a resolution lower than you would do normally, to get the performance you want, or enable upscaling. How well Stalker 2 fares using that is covered later in this analysis.
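To put rough numbers on the resolution jump, here's a minimal sketch that just counts pixels at the three tested resolutions. It assumes the ray workload scales roughly in line with pixel count, which is a simplification on my part and not a description of how Lumen actually budgets its rays; the function names are purely illustrative.

```python
# Pixel counts for the three tested resolutions.
# Assumption: ray work scales roughly with pixel count (a simplification).
RESOLUTIONS = {
    "1080p": (1920, 1080),
    "1440p": (2560, 1440),
    "4K": (3840, 2160),
}


def pixel_count(name):
    """Total number of pixels in one frame at the named resolution."""
    width, height = RESOLUTIONS[name]
    return width * height


def relative_to_1080p(name):
    """How many times more pixels there are than at 1080p."""
    return pixel_count(name) / pixel_count("1080p")


if __name__ == "__main__":
    for name in RESOLUTIONS:
        print(f"{name}: {pixel_count(name):,} pixels, "
              f"{relative_to_1080p(name):.2f}x 1080p")
```

By this measure, 1440p has around 1.78 times the pixels of 1080p and 4K has exactly four times as many, which goes some way to explaining why the charts fall away so quickly.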

You'll also notice the RTX 3060 Ti appears twice in the charts. I've done this to highlight how CPU/system-heavy Stalker 2 is. At 1080p low, the game runs 24% faster on average with a Ryzen 9 9900X using DDR5-6000 CL28 than it does with a Ryzen 5 5600X with DDR4-3200 CL16. The 1% low figures are an astonishing 55% better with the Zen 5 setup.
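For anyone wanting to run the same kind of comparison on their own frame time logs, here's a rough sketch of how the average, the 1% low, and a "percent faster" figure can be worked out. Capture tools define 1% lows in different ways; this version assumes it means the average of the slowest 1% of frames, which isn't necessarily the method behind the charts here. The function names and the sample values are hypothetical.

```python
def fps_summary(frame_times_ms):
    """Return (average fps, 1% low fps) from frame times in milliseconds.

    Assumption: '1% low' is the average of the slowest 1% of frames,
    converted to fps. Other tools use a percentile instead.
    """
    if not frame_times_ms:
        raise ValueError("need at least one frame time")
    total_seconds = sum(frame_times_ms) / 1000.0
    average_fps = len(frame_times_ms) / total_seconds
    # Sort slowest first and keep the worst 1% (always at least one frame).
    slowest_first = sorted(frame_times_ms, reverse=True)
    count = max(1, len(slowest_first) // 100)
    worst_average_ms = sum(slowest_first[:count]) / count
    return average_fps, 1000.0 / worst_average_ms


def percent_faster(new_fps, old_fps):
    """Percentage by which new_fps exceeds old_fps."""
    return (new_fps / old_fps - 1.0) * 100.0


if __name__ == "__main__":
    # Hypothetical capture: 99 smooth frames and one long hitch.
    sample = [10.0] * 99 + [50.0]
    average, one_percent_low = fps_summary(sample)
    print(f"average: {average:.1f} fps, 1% low: {one_percent_low:.1f} fps")
```

A single long frame barely moves the average but drags the 1% low right down, which is why that figure is the better indicator of how stuttery a game feels.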

Note how poorly the Radeon RX 5700 XT copes at 4K. That performance was verified across several test PCs, but exactly why it's so poor has been difficult to pin down. It's not a VRAM issue, as the RTX 3060 Ti has the same 8 GB of video memory as the 5700 XT, so it must be something specific to that architecture.

It's not ideal to play Stalker 2 with the low preset. The review code's LOD (level of detail) system is overly aggressive, with some objects suddenly popping into view, and it's most noticeable with shadows—from a distance, they're quite glitchy and low resolution, but get to a certain position and they'll suddenly, jarringly snap into a more detailed version.

Medium preset

Switching to the medium preset immediately improves the overall look of Stalker 2. Not only is there a lot more vegetation and environmental detail, but shadows cast by objects are darker and of a higher resolution. The drawback is an immediate 10 fps drop for nearly all of the tested PCs, except for the 3060 Ti in the Ryzen 5 5600X system and the RTX 4050 laptop.

The latter is totally unplayable with this preset and regardless of what power profile I used, the laptop just couldn't run Stalker 2 without suffering from major stutters and long pauses. It's almost certainly a VRAM limitation as the RTX 4050 only has 6 GB available to it. At 1080p low, the game uses around 5.5 GB of memory in my benchmarking area but it increases to just under 6 GB with the medium preset (and reaches 7 GB at 4K).
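The headroom arithmetic can be sketched as below, using only the usage figures quoted above. The `reserved_gb` parameter is a hypothetical allowance for whatever the operating system and other applications are holding in VRAM; I haven't measured that, so it defaults to zero and is there purely for experimentation.

```python
def vram_headroom_gb(card_vram_gb, game_usage_gb, reserved_gb=0.0):
    """VRAM left over after the game's usage, in GB (negative means overspill).

    reserved_gb is a hypothetical allowance for the OS and other apps.
    """
    return card_vram_gb - reserved_gb - game_usage_gb


if __name__ == "__main__":
    # RTX 4050 laptop (6 GB) with the usage figures quoted in the text.
    print(vram_headroom_gb(6.0, 5.5))  # 1080p low
    print(vram_headroom_gb(6.0, 7.0))  # 4K with the medium preset
```

With only half a gigabyte spare at 1080p low, it doesn't take much of a rise in usage for a 6 GB card to start spilling over into much slower system memory.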

Traversal stutter also begins to appear with the medium preset. As Stalker 2: Heart of Chornobyl is a completely seamless open world, it needs to stream assets into VRAM as you move about. This happens when you approach a defined but invisible boundary, and the 3060 Ti and 5700 XT both display light stuttering.

At least there's no sign of shader compilation stutter (also known as PSO or pipeline state object compilation). That's because all of the shaders are compiled when the game first loads—depending on whether it's the first time the game is fired up and on what CPU/system one has, this stage can take a long time. On my Asus ROG Ally, the compilation process took as long as 23 minutes with the first review code, though with a later patch it dropped to a couple of minutes.

It's much faster on high-power, multicore CPUs but it's frustrating that it takes place every time you load the game. Unless one has changed drivers or hardware, there's no need to do it so I hope GSC Game World addresses this in a future patch.

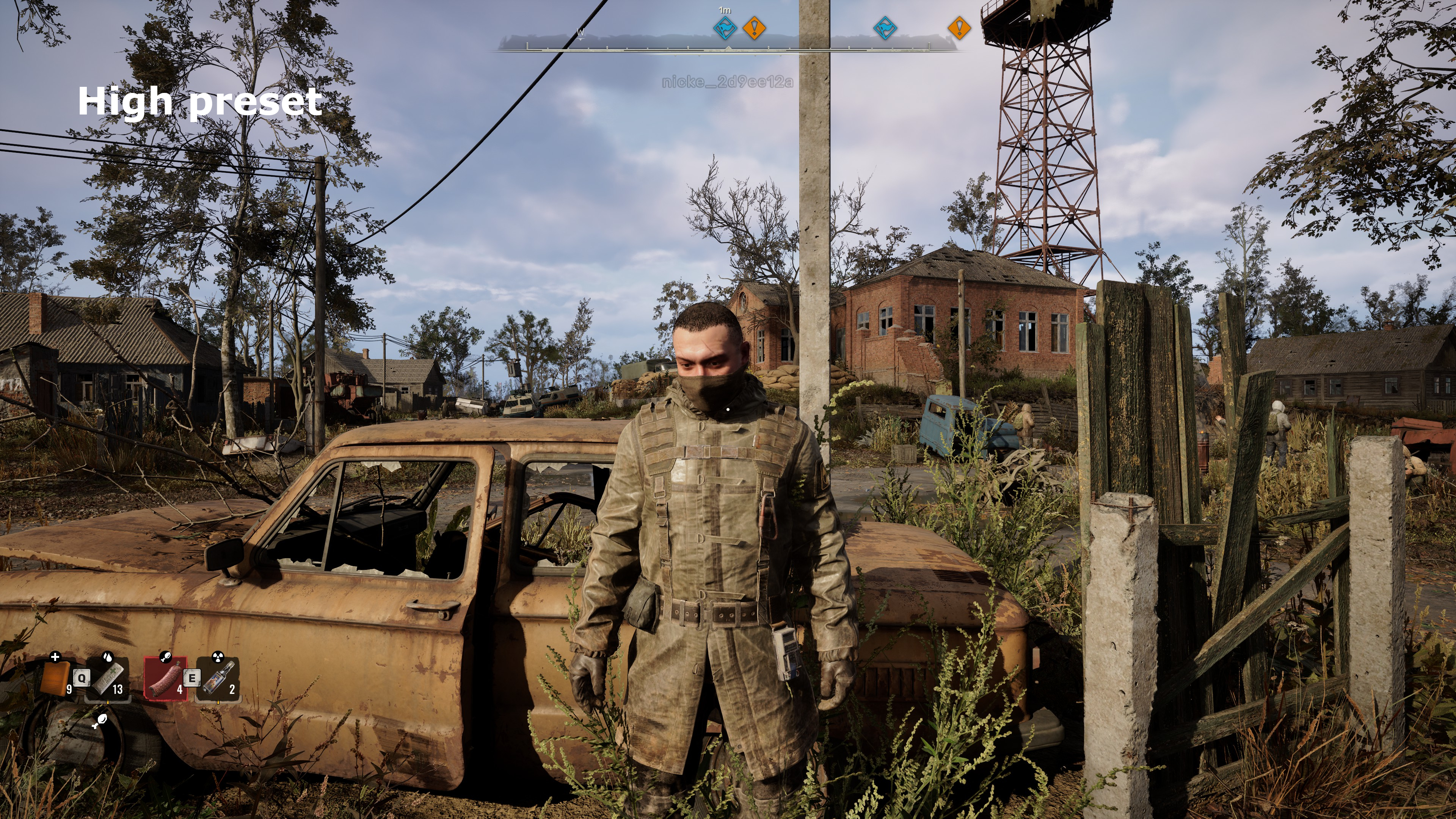

High preset

Unlike going from low to medium, there isn't an enormous boost in visual fidelity by using the high preset. The most noticeable gains are with shadows and reflections, where the overall resolution of them is a little better in both cases.

At 1080p, GPUs like the RTX 4080 Super will still be quite CPU-limited, hence the relative lack of difference between that graphics card and the RTX 4070, for example. However, at 1440p or 4K, it's clear what GPU you'll need in order to average 60 frames per second with the high preset.

Stalker 2 isn't unplayable with sub-60 fps performance, as it's really not a fast-paced, twitchy shooter, but those 1% low figures speak volumes. When everything is running smoothly, the game is absolutely fine but higher resolutions bump up the VRAM usage and at 4K, the frame rate effectively drops to zero on the 8 GB cards (not that they're 4K GPUs, anyway).

That's partly due to the sheer number of rays being processed but also because the game uses a little over 8 GB of VRAM, with peaks of 9 GB. GSC Game World might be able to optimise the asset loading to reduce the performance nose dive and since there's a day-0 patch with the game's release, you may already be getting better results than I did.

However, when an RTX 4080 Super begins to struggle at this resolution (it doesn't stutter as such, just runs slowly in certain outdoor areas), you just know that a 100% perfect memory management system can't offset the challenge of handling so many rays.

Epic preset

Stalker 2 undeniably looks best when using the epic (i.e. maximum quality) preset, as shadows and reflections are far more defined than with the high preset. The aggressiveness of the LOD system is less prevalent with this setting too, although some objects will still ping into view.

The best aspect of the epic preset is that the world environment is just a lot richer (I especially like all of the detritus tumbling through the air and skipping off the ground) and while there's an obvious cost to it all, it's only at 4K when things come crashing down. That said, the tested area of Stalker 2 isn't necessarily indicative of the whole game, and later sections demand even more of your gaming PC.

Unless you have a latest-generation high-end graphics card, I don't recommend using the epic preset without upscaling. It's not especially heavy on the VRAM (just under 8 GB at 1080p and around 10 GB or so at 4K) but the sheer amount of compute shading required to render everything will just grind down most GPUs.

It's especially noticeable in regions where there is a lot of geometry involved, such as factories and towns, and even the most powerful of gaming PCs will still hitch and stutter a little. It wouldn't be a Stalker game if it wasn't a little clunky, though, and after a while, I stopped paying attention to it.

Upscaling and frame gen performance

With everything being ray traced all the time, it's obvious that upscaling is a must for getting decent and consistent performance. Fortunately, GSC Game World has included all of the latest systems, including Epic's UE5 TSR, for improving performance. You also get AMD's FSR 3.1, Intel's XeSS, and Nvidia's DLSS 3.7 (but not Ray Reconstruction, as the ray tracing is software Lumen).

The inclusion of FSR 3.1 means anyone who can run the game can also enable AMD's frame generation system to boost frame rates, as it's decoupled from the upscaler. Rather than test every graphics card for its upscaling performance, I've focused on three cases: the RTX 4080 Super at 4K epic, the RX 6750 XT at 1440p medium, and the RTX 3060 Ti at 1080p high.

This is because all three were running at around the 45 to 47 fps mark natively with those settings, so I wanted to see how much of an improvement upscaling and frame generation could offer.
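As a rough guide to why upscaling helps so much, the sketch below works out the internal render resolution for a given output resolution and quality mode. The per-axis scale factors are the commonly documented defaults for DLSS and FSR quality modes (roughly 67%, 58%, and 50%); I'm assuming Stalker 2 sticks to them, which may not be the case, and the names in the code are illustrative only.

```python
# Per-axis render scale for each upscaler mode.
# Assumption: commonly documented DLSS/FSR defaults; the game may differ.
ASSUMED_SCALE = {
    "quality": 2 / 3,
    "balanced": 0.58,
    "performance": 0.5,
}


def internal_resolution(width, height, mode):
    """Resolution the game renders at before the upscaler reconstructs the output."""
    scale = ASSUMED_SCALE[mode]
    return round(width * scale), round(height * scale)


if __name__ == "__main__":
    for mode in ASSUMED_SCALE:
        w, h = internal_resolution(3840, 2160, mode)
        print(f"4K {mode}: renders at {w} x {h}")
```

Under those assumptions, 4K with the quality mode is rendered at 2560 x 1440, so the GPU is shading well under half the pixels it would at native 4K.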

DLSS 3.7 upscaling and frame generation

RTX 4080 Super, 4K with epic preset

As you can see above, just using DLSS Quality immediately brings the 4080 Super back into the realms of playability at 4K epic, though the 1% low figures are still somewhat on the low side. There's little to be gained from using a higher level of scaling than this, though, and you're better off enabling DLSS frame generation.

Combined with DLSS Quality upscaling, the use of frame gen does wonders for the performance and the implementation of the system is pretty solid, with few visual artefacts occurring. The input lag is higher, of course, but you're not going to be whizzing about the Zone like it's a CoD round. Well, if you are, then you're probably about to get toasted by a monster anyway.

FSR 3.1 upscaling and frame generation

RX 6750 XT, 1440p with medium preset

It's a similar situation for the RX 6750 XT when using FSR upscaling and frame generation. Ordinarily, I'd be reluctant to enable the latter at 1440p, as the lower the resolution, the less information the frame interpolation algorithm has to work with to create a good frame. As you can see, though, it's perfectly acceptable: certain elements, such as cables hanging between poles, are fuzzier than normal but foliage and smoke are rendered correctly.

The use of upscaling does impact the 1% low figures, unfortunately, and the only way to get around that problem is to use FSR frame generation. The increase in input lag is perhaps a little more noticeable than it is with DLSS frame gen, but I found it acceptable to play with. If you have a good CPU and a mid-range or lower-mid-range GPU, then the lag is a minor inconvenience.

DLSS 3.7 upscaling and FSR 3.1 frame generation

RTX 3060 Ti, 1080p with high preset

No, these results for the Ryzen 5 5600X and RTX 3060 Ti combination aren't a mistake. I spent over three hours repeating the test runs, including wiping and reinstalling drivers. What's happening here is that the game is very CPU/system-limited at 1080p High and if you click through to the second chart, showing the same GPU in a Ryzen 9 9900X gaming PC, you can see this clearly.

The heavy stutters you can see in the video are partly due to the system struggling to run Stalker 2 and record video at the same time, as they're not as pronounced while just playing normally—instead of the game momentarily freezing, you just get a brief stutter instead.

The obvious solution here is to use a lower quality preset or lower a couple of the graphics settings, rather than relying on upscaling to boost performance. Stalker 2 is one of the very few modern games that really exposes the difference between generations of CPUs—if you have a processor from the past couple of years or so (and one that uses high-speed DDR5), you should be fine. Anything older than that and the CPU will be a significant limiting factor.

Performance impressions

After benchmarking Stalker 2: Heart of Chornobyl for well over 20 hours in total, I have to say that I have rather mixed feelings about how well it runs across different gaming PCs. On a powerful high-end system, such as the Core i7 14700KF and RTX 4080 Super combination I used, it runs pretty smoothly at 4K with the epic preset, as long as you use upscaling and frame generation. Remove the latter and it's okay, but not consistently so.

Going down a tier or two with the GPU (e.g. RTX 4070, RX 7800 XT) and it's a similar story, though you're better off using the high preset with a 1440p resolution. You won't need to rely on frame gen but you should definitely enable upscaling, if only for the superior antialiasing it offers.

Where things aren't so great is down at the bottom end of the gaming PC scale. GSC Game World claims something like a Core i7 7700K with a GeForce GTX 1060 is good enough for 30 fps at 1080p low. The fact that an RTX 4050 gets almost double that figure, at the same resolution and quality settings, suggests that the developers are correct (as the 4050 is pretty much equivalent to two 1060s).

However, that CPU will not only take ages to grind through the shader compilation, it'll have a noticeable impact on the 1% low frame rates. In other words, it won't be a particularly enjoyable gaming experience and given that even the best gaming PCs experience stutters and hiccups in Stalker 2, an eight-year-old CPU+GPU combination is going to have a hard time of it.

During those numerous hours of testing, I experienced multiple crashes, freezes, and bugs. The developers have said that the day-0 patch, which comes with the game's release, should resolve many of these, but I think the fundamental problem with the performance and graphics won't be solved until GSC Game World implements a hardware Lumen rendering option.

I appreciate that focusing on just one option (i.e. software Lumen) reduces the amount of work and testing required and opens up ray tracing to more PC gamers, but it also leaves performance and visual quality on the table for those with the relevant hardware.

Stalker 2: Heart of Chornobyl doesn't have the best performance I've seen in the games I tested this year but there's plenty of room for it to be improved and it's certainly been one of the most enjoyable games to play. It's nice to have you back in the Zone, Stalker.