SoftBank Group plans to build the industry's first Nvidia Blackwell-based AI supercomputer, according to an announcement at Nvidia's AI Summit in Tokyo this week. The announcement confirms SoftBank's long-rumored AI ambitions. Interestingly, the supercomputer will use DGX B200 servers built around x86 CPUs rather than Nvidia's Arm-based Grace processors.

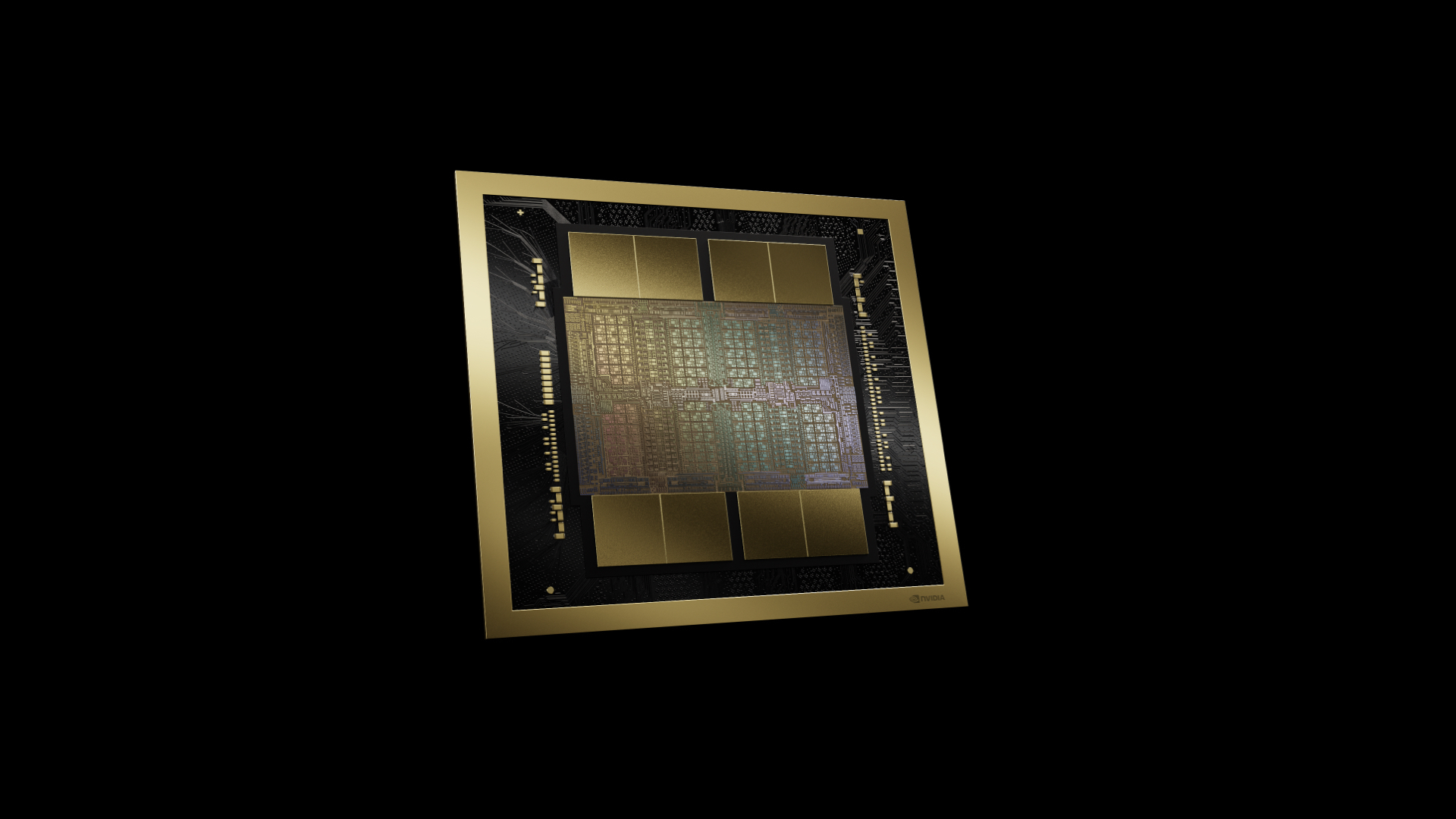

SoftBank's telecom arm will build the AI supercomputer from Nvidia's DGX B200 servers, each featuring x86 processors and eight B200 GPU modules. Eventually, SoftBank plans to adopt the Grace Blackwell platform, which pairs Arm-based Grace CPUs with Blackwell AI GPUs, though it is unclear when.

Neither Nvidia nor SoftBank disclosed how many B200 processors the Japanese company will purchase, saying merely that it will get "a lot of them." Elon Musk's companies Tesla and xAI are deploying tens of thousands of Nvidia processors to train their latest AI models, so SoftBank will need to be somewhere in that ballpark if it wants to compete. Getting enough Nvidia GPUs will be hard, however, as virtually every major tech company is competing for them.

Beyond supercomputers, SoftBank's telecom unit plans to integrate Nvidia's AI technology into Japan's mobile networks to create what Nvidia CEO Jensen Huang described as an "AI grid" across the nation. A key part of this rollout will be the deployment of AI radio access networks (AI-RANs), which the companies say will use less energy than traditional RAN hardware. Such RANs are said to be a better fit for emerging services such as autonomous vehicles and remote robotics, and will turn the communications network into a nationwide AI infrastructure.

SoftBank's telecom division will be trialing the AI-enhanced network in collaboration with Fujitsu and IBM subsidiary Red Hat.

Nvidia and SoftBank claim the upcoming supercomputer will be the first Blackwell-based AI supercomputer, but this may not be technically true. Microsoft is already testing Blackwell-based machines and is expected to be the first company to deploy them. SoftBank may, however, be the first to deploy DGX B200-based servers.

It will take some time before Microsoft optimizes its software for Nvidia Blackwell, so don't expect the company to roll out a wide range of services powered by Nvidia's latest GPUs overnight.

After a delay caused by a B100/B200 design flaw, which was discovered and promptly fixed earlier this year, the final revision of the B100/B200 entered mass production in late October. As a result, the first batch of these processors isn't expected to arrive until late January 2025. Nvidia revealed that, to meet high demand for Blackwell GPUs from leading cloud providers such as AWS, Google, and Microsoft, it will start shipping some early, low-yield Blackwell processors in 2024; it's not clear how many of these GPUs will reach datacenters before the end of the year.